In the last article we saw some testing of the simplest autoregressive model AR(1). I still have an outstanding issue raised by one commenter relating to the hypothesis testing that was introduced, and I hope to come back to it at a later stage.

Different Noise Types

Before we move on to more general AR models, I tested how well the hypothesis test for AR(1) models holds up with different noise types.

The testing shown in Part Four used Gaussian noise (a “normal distribution”), and the theory applied is apparently only valid for Gaussian noise, so I tried a uniform noise distribution and also a Gamma noise distribution:

Figure 1

The Gaussian and uniform distributions produce the same results. The Gamma noise result isn’t shown because it was also the same.

A Gamma distribution can be quite skewed, which was why I tried it – here is the Gamma distribution that was used (with the same variance as the Gaussian, and shifted to produce the same mean = 0):

Figure 2

So in essence I have found that the tests work just as well when the noise component is uniformly distributed or Gamma distributed as when it has a Gaussian distribution (normal distribution).
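As a rough illustration of how the three noise types can be put on an equal footing, here is a minimal Python sketch (standard library only). It builds Gaussian, uniform and shifted Gamma noise with zero mean and the same variance, then drives an AR(1) series with each. The function names, the Gamma shape of 2 and φ = 0.5 are my own assumptions for illustration; the shape actually used for Figure 2 is not stated.

```python
import math
import random

def make_noise(kind, rng, sigma=1.0, gamma_shape=2.0):
    """Return a function that draws zero-mean noise with standard deviation sigma."""
    if kind == "gaussian":
        return lambda: rng.gauss(0.0, sigma)
    if kind == "uniform":
        # Uniform on [-a, a] has variance a^2 / 3, so a = sigma * sqrt(3).
        a = sigma * math.sqrt(3.0)
        return lambda: rng.uniform(-a, a)
    if kind == "gamma":
        # Gamma(shape k, scale s) has mean k*s and variance k*s^2.
        scale = sigma / math.sqrt(gamma_shape)
        shift = gamma_shape * scale  # subtract the mean so the noise is centred on 0
        return lambda: rng.gammavariate(gamma_shape, scale) - shift
    raise ValueError(f"unknown noise kind: {kind}")

def simulate_ar1(phi, n, draw, mu=0.0, burn_in=500):
    """Generate n values of x_t - mu = phi * (x_{t-1} - mu) + eps_t."""
    x = mu
    out = []
    for t in range(n + burn_in):
        x = mu + phi * (x - mu) + draw()
        if t >= burn_in:  # discard the start-up transient
            out.append(x)
    return out

rng = random.Random(0)
summary = {}
for kind in ("gaussian", "uniform", "gamma"):
    draw = make_noise(kind, rng)
    eps = [draw() for _ in range(100_000)]
    mean = sum(eps) / len(eps)
    var = sum((e - mean) ** 2 for e in eps) / len(eps)
    series = simulate_ar1(0.5, 20_000, draw)
    summary[kind] = (mean, var, sum(series) / len(series))
    print(kind, summary[kind])
```

Each noise type should report a mean close to 0 and a variance close to 1, so any difference in test behaviour would come from the shape of the distribution rather than its scale.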

Hypothesis Testing of AR(1) Model When the Model is Actually AR(2)

The next idea I was interested to try was to apply the hypothesis testing from Part Three on an AR(2) model, when we assume incorrectly that it is an AR(1) model.

Remember that the hypothesis test is quite simple – we produce a series with a known mean, extract a sample, and then, using the sample, find out how many times the test rejects the hypothesis that the mean is equal to its actual value:

Figure 3

As we can see, the test, which should reject only 5% of the time, rejects a much higher proportion as φ2 increases. This simple test is just by way of introduction.
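The effect can be reproduced with a small simulation. The sketch below is not the code behind Figure 3. It assumes the AR(1) test takes the common form of a z-test on the sample mean, with the sample size replaced by an effective sample size n(1 − r1)/(1 + r1). That form, the 1.96 critical value, the parameter values and all function names are my assumptions.

```python
import math
import random

def simulate_ar(phis, n, rng, mu=0.0, burn_in=200):
    """Generate n values of an AR(p) series with unit-variance Gaussian noise."""
    hist = [0.0] * len(phis)  # deviations from the mean, most recent first
    out = []
    for t in range(n + burn_in):
        dev = sum(ph * h for ph, h in zip(phis, hist)) + rng.gauss(0.0, 1.0)
        hist = [dev] + hist[:-1]
        if t >= burn_in:
            out.append(mu + dev)
    return out

def rejects_true_mean(x, mu, z_crit=1.96):
    """Two-sided test of 'mean == mu', assuming the series is AR(1)."""
    n = len(x)
    m = sum(x) / n
    d = [v - m for v in x]
    c0 = sum(v * v for v in d) / n                      # sample variance
    r1 = sum(d[i] * d[i + 1] for i in range(n - 1)) / (n * c0)
    n_eff = n * (1.0 - r1) / (1.0 + r1)                 # AR(1) effective sample size
    z = (m - mu) / math.sqrt(c0 / n_eff)
    return abs(z) > z_crit

def rejection_rate(phis, trials=400, n=500, seed=1):
    """Fraction of simulated samples for which the true mean (0) is rejected."""
    rng = random.Random(seed)
    hits = sum(rejects_true_mean(simulate_ar(phis, n, rng), 0.0)
               for _ in range(trials))
    return hits / trials

rate_ar1 = rejection_rate([0.5])        # model matches the AR(1) assumption
rate_ar2 = rejection_rate([0.5, 0.3])   # actually AR(2), tested as if AR(1)
print("AR(1):", rate_ar1, " AR(2) treated as AR(1):", rate_ar2)
```

Under these assumptions the first rate should sit near the nominal 5%, while the second should be noticeably higher, because the AR(1) correction underestimates how strongly the AR(2) values are correlated.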

Higher Order AR Series

The AR(1) model is very simple. As we saw in Part Three, it can be written as:

$$x_t - \mu = \phi (x_{t-1} - \mu) + \varepsilon_t$$

where $x_t$ = the next value in the sequence, $x_{t-1}$ = the last value in the sequence, $\mu$ = the mean, $\varepsilon_t$ = random quantity and $\phi$ = auto-regression parameter

[Minor note, the notation is changed slightly from the earlier article]

In non-technical terms, the next value in the series is made up of a random element plus a dependence on the last value – with the strength of this dependence being the parameter φ.

The more general autoregressive model of order p, AR(p), can be written as:

$$x_t - \mu = \phi_1 (x_{t-1} - \mu) + \phi_2 (x_{t-2} - \mu) + \dots + \phi_p (x_{t-p} - \mu) + \varepsilon_t$$

where $\phi_1, \dots, \phi_p$ = the series of auto-regression parameters

In non-technical terms, the next value in the series is made up of a random element plus a dependence on the last few values. So of course, the challenge is to determine the order p, and then the parameters φ1..φp
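To make the AR(p) recursion concrete, here is a minimal Python sketch that generates a series directly from the equation above and checks its lag-1 correlation. The function names, the burn-in length and the parameter values are assumptions chosen for illustration.

```python
import random

def simulate_ar(phis, n, mu=0.0, sigma=1.0, burn_in=1000, seed=0):
    """Generate n values of x_t - mu = sum_i phi_i * (x_{t-i} - mu) + eps_t."""
    rng = random.Random(seed)
    dev = [0.0] * len(phis)  # past deviations from the mean, most recent first
    series = []
    for t in range(n + burn_in):
        new = sum(phi * d for phi, d in zip(phis, dev)) + rng.gauss(0.0, sigma)
        dev = [new] + dev[:-1]
        if t >= burn_in:  # drop the transient caused by starting at the mean
            series.append(mu + new)
    return series

def lag_correlation(x, k):
    """Sample lag-k autocorrelation r_k."""
    n = len(x)
    m = sum(x) / n
    c0 = sum((v - m) ** 2 for v in x)
    ck = sum((x[i] - m) * (x[i + k] - m) for i in range(n - k))
    return ck / c0

ar1 = simulate_ar([0.6], 50_000, mu=10.0)
ar2 = simulate_ar([0.5, 0.3], 50_000, mu=10.0)
print("AR(1): mean", sum(ar1) / len(ar1), "r1", lag_correlation(ar1, 1))
print("AR(2): mean", sum(ar2) / len(ar2), "r1", lag_correlation(ar2, 1))
```

For the AR(1) series r1 should come out close to φ. For the AR(2) series the theoretical lag-1 correlation is φ1/(1 − φ2), so r1 alone no longer identifies the parameters.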

There is a bewildering array of tests that can be applied, so I started simply. With some basic algebraic manipulation (not shown – but if anyone is interested I will provide more details in the comments), we can produce a series of linear equations known as the Yule-Walker equations, which allow us to calculate φ1..φp from the estimates of the autocorrelation.

If you look back to Figure 2 in Part Three you see that by regressing the time series with itself moved by k time steps we can calculate the lag-k correlation, rk, for k=1, 2, 3, etc. So we estimate r1, r2, r3, etc., from the sample of data that we have, and then solve the Yule-Walker equations to get φ1..φp
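For reference, the Yule-Walker equations in their standard textbook form (as in Box, Jenkins & Reinsel) relate the autocorrelations $\rho_k$ to the AR parameters. In practice the sample estimates $r_k$ are substituted for $\rho_k$:

$$\rho_k = \phi_1 \rho_{k-1} + \phi_2 \rho_{k-2} + \dots + \phi_p \rho_{k-p}, \qquad k = 1, \dots, p$$

with $\rho_0 = 1$ and $\rho_{-j} = \rho_j$. For the AR(2) case this is a pair of equations,

$$r_1 = \phi_1 + \phi_2 r_1, \qquad r_2 = \phi_1 r_1 + \phi_2$$

which can be solved directly:

$$\phi_1 = \frac{r_1 (1 - r_2)}{1 - r_1^2}, \qquad \phi_2 = \frac{r_2 - r_1^2}{1 - r_1^2}$$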

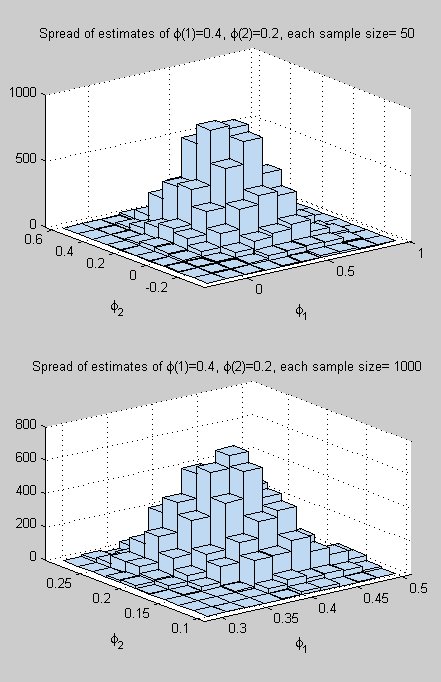

First of all I played around with simple AR(2) models. The results below are for two different sample sizes.

A population of 90,000 is created (actually 100,000, then the first 10% is deleted), and then a sample is randomly selected from this population 10,000 times. For each of the 10,000 samples the Yule-Walker equations are solved, and then the results are averaged.
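The procedure can be sketched in Python as follows. This is a scaled-down illustration rather than the original Matlab code. It assumes each "sample" is a contiguous block starting at a random position, uses the closed-form AR(2) solution of the Yule-Walker equations, and uses a smaller population and fewer draws so that it runs quickly. The values φ1 = 0.5 and φ2 = 0.3 and all function names are my own choices.

```python
import random

def simulate_ar2(phi1, phi2, n, rng):
    """Generate n values of a zero-mean AR(2) series with Gaussian noise."""
    x1 = x2 = 0.0
    out = []
    for _ in range(n):
        x = phi1 * x1 + phi2 * x2 + rng.gauss(0.0, 1.0)
        out.append(x)
        x2, x1 = x1, x
    return out

def yule_walker_ar2(x):
    """Estimate (phi1, phi2) from the sample lag-1 and lag-2 correlations."""
    n = len(x)
    m = sum(x) / n
    d = [v - m for v in x]
    c0 = sum(v * v for v in d)
    r1 = sum(d[i] * d[i + 1] for i in range(n - 1)) / c0
    r2 = sum(d[i] * d[i + 2] for i in range(n - 2)) / c0
    phi1 = r1 * (1.0 - r2) / (1.0 - r1 * r1)
    phi2 = (r2 - r1 * r1) / (1.0 - r1 * r1)
    return phi1, phi2

def monte_carlo(phi1, phi2, sample_size, draws=300, pop_size=50_000, seed=0):
    """Average the Yule-Walker estimates over many random contiguous samples."""
    rng = random.Random(seed)
    population = simulate_ar2(phi1, phi2, pop_size, rng)
    population = population[pop_size // 10:]  # delete the first 10%
    estimates = []
    for _ in range(draws):
        start = rng.randrange(len(population) - sample_size + 1)
        estimates.append(yule_walker_ar2(population[start:start + sample_size]))
    mean1 = sum(e[0] for e in estimates) / draws
    mean2 = sum(e[1] for e in estimates) / draws
    return mean1, mean2

big = monte_carlo(0.5, 0.3, 1000)
small = monte_carlo(0.5, 0.3, 50)
print("sample size 1000:", big)
print("sample size 50:  ", small)
```

Raising `draws` and `pop_size` towards the figures quoted above would tighten the averages at the cost of run time.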

In these results I normalized the mean and standard deviation of the parameters by the original values (later I decided that made it harder to see what was going on and reverted to just displaying the actual sample mean and sample standard deviation):

Figure 4

Notice that the sample size of 1,000 produces very accurate results in the estimation of φ1 & φ2, with a small spread. The sample size of 50 appears to produce estimates that are biased low, especially for φ2, which is no doubt due to not reading the small print somewhere.

Here is a histogram of the results, showing the spread across φ1 & φ2. Note the values on the axes: the sample size of 1,000 produces a much tighter set of results, while the sample size of 50 has a much wider spread:

Figure 5

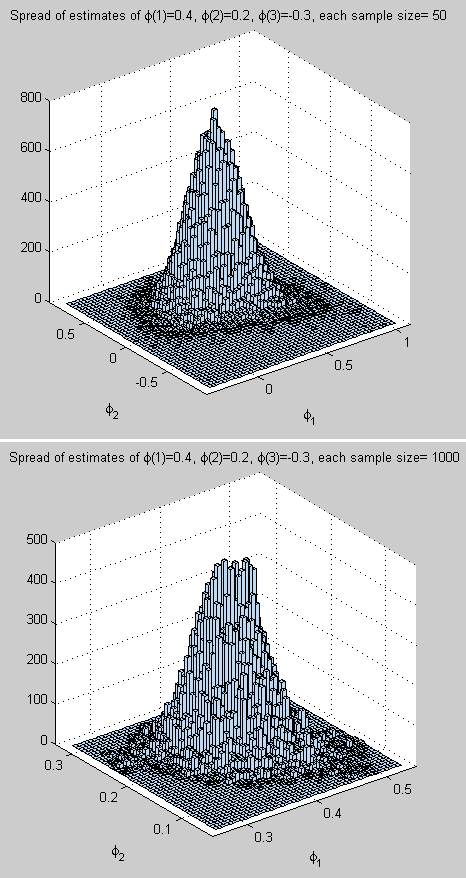

Then I played around with a more general model. With this model I send in AR parameters to create the population, but can define a higher order of AR to test against, to see how well the algorithm estimates the AR parameters from the samples.

In the example below the population is created as AR(3), but tested as if it might be an AR(4) model. The AR(3) parameters (shown on the histogram in the figure below) are φ1= 0.4, φ2= 0.2, φ3= -0.3.

The estimation seems to cope quite well as φ4 is estimated at about zero:

Figure 6
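The same experiment can be sketched for a general order p. The Python example below solves the Yule-Walker equations with the Levinson-Durbin recursion, which is one standard way of solving them. Whether the original Matlab code did it this way is not stated, and the function names and series length are assumptions. It generates an AR(3) series with the parameters above and fits it as AR(4).

```python
import random

def simulate_ar(phis, n, rng, burn_in=1000):
    """Generate n values of a zero-mean AR(p) series with Gaussian noise."""
    dev = [0.0] * len(phis)  # most recent value first
    out = []
    for t in range(n + burn_in):
        new = sum(ph * d for ph, d in zip(phis, dev)) + rng.gauss(0.0, 1.0)
        dev = [new] + dev[:-1]
        if t >= burn_in:
            out.append(new)
    return out

def autocorrelations(x, max_lag):
    """Return [r_0, r_1, ..., r_max_lag] with r_0 = 1."""
    n = len(x)
    m = sum(x) / n
    d = [v - m for v in x]
    c0 = sum(v * v for v in d)
    return [sum(d[i] * d[i + k] for i in range(n - k)) / c0
            for k in range(max_lag + 1)]

def yule_walker(r, p):
    """Solve the order-p Yule-Walker equations by Levinson-Durbin recursion."""
    phi = []
    err = r[0]
    for k in range(1, p + 1):
        acc = r[k] - sum(phi[j] * r[k - 1 - j] for j in range(k - 1))
        kappa = acc / err  # lag-k partial autocorrelation
        phi = [phi[j] - kappa * phi[k - 2 - j] for j in range(k - 1)] + [kappa]
        err *= 1.0 - kappa * kappa
    return phi

rng = random.Random(0)
x = simulate_ar([0.4, 0.2, -0.3], 20_000, rng)   # true process is AR(3)
est = yule_walker(autocorrelations(x, 4), 4)      # fitted as if AR(4)
print([round(v, 3) for v in est])
```

With a long series the first three estimates should land near 0.4, 0.2 and -0.3, and the surplus fourth parameter near zero.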

Here is the histogram of results for the first two parameters. Note again the difference in values on the axes for the different sample sizes:

Figure 7

[The finer detail on this histogram compared with Figure 5 is simply because I discovered the Matlab parameters for 3D histograms.]

Rotating the histograms around in 3d appears to confirm a bell-curve. Something to test formally at a later stage.

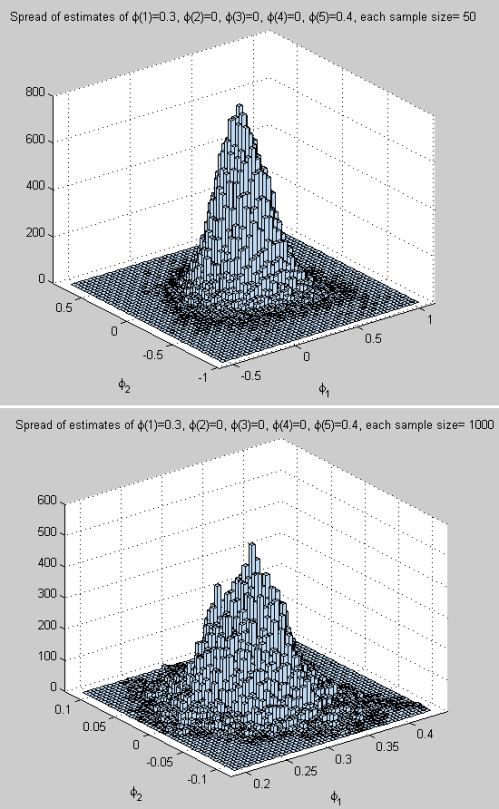

Here’s an example of a process which is AR(5) with φ1= 0.3, φ2= 0, φ3= 0, φ4= 0, φ5= 0.4; tested against AR(6):

Figure 8

And the histogram of estimates of φ1 & φ2:

Figure 9

ARMA

We haven’t yet seen ARMA models – auto-regressive moving average models. And we haven’t seen MA models – moving average models with no auto-regressive behavior.

What is an MA or “moving average” model?

The “moving average” term is a “linear filter” on the random elements of the process. So instead of εt alone as the “uncorrelated noise” in the AR model, we have εt plus a weighted sum of earlier random elements. The MA process, of order q, can be written as:

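$$x_t - \mu = \varepsilon_t + \theta_1 \varepsilon_{t-1} + \theta_2 \varepsilon_{t-2} + \dots + \theta_q \varepsilon_{t-q}$$

where $\theta_1, \dots, \theta_q$ = the series of moving average parameters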
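A minimal Python sketch of an MA process, following the equation above with the same sign convention for the θ terms. The function names and the MA(1) example with θ1 = 0.5 are illustrative assumptions.

```python
import random

def simulate_ma(thetas, n, mu=0.0, sigma=1.0, seed=0):
    """Generate n values of x_t - mu = eps_t + sum_j theta_j * eps_{t-j}."""
    rng = random.Random(seed)
    # Earlier random elements eps_{t-1}, ..., eps_{t-q}, most recent first.
    past = [rng.gauss(0.0, sigma) for _ in thetas]
    series = []
    for _ in range(n):
        eps = rng.gauss(0.0, sigma)
        series.append(mu + eps + sum(th * e for th, e in zip(thetas, past)))
        past = [eps] + past[:-1]
    return series

def lag_correlation(x, k):
    """Sample lag-k autocorrelation r_k."""
    n = len(x)
    m = sum(x) / n
    c0 = sum((v - m) ** 2 for v in x)
    ck = sum((x[i] - m) * (x[i + k] - m) for i in range(n - k))
    return ck / c0

ma1 = simulate_ma([0.5], 50_000, mu=5.0)
ma1_mean = sum(ma1) / len(ma1)
r1, r2 = lag_correlation(ma1, 1), lag_correlation(ma1, 2)
print("mean", ma1_mean, "r1", r1, "r2", r2)
```

For an MA(1) process the theoretical lag-1 correlation is θ1/(1 + θ1²), and correlations beyond lag q are zero. So r1 should be near 0.4 and r2 near 0 here, while the mean of the series stays at μ.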

The term “moving average” is a little misleading, as Box and Jenkins also comment.

Why is it misleading?

Because for AR (auto-regressive) and MA (moving average) and ARMA (auto-regressive moving average = combination of AR & MA) models the process is stationary.

This means, in non-technical terms, that the mean of the process is constant through time. That doesn’t sound like “moving average”.

So think of “moving average” as a moving average (filter) of the random elements, or noise, in the process. By their nature these will average out over time (because if the average of the random elements = 0, the average of the moving average of the random elements = 0).

An example of this in the real world might be a chemical introduced randomly into a physical process – this is the εt term – but because the chemical gets caught up in pipework and valves, the actual value of the chemical released into the process at time t is the sum of a proportion of the current value released plus a proportion of earlier values released.

Examples of the terminology used for the various processes:

- AR(3) is an autoregressive process of order 3

- MA(2) is a moving average process of order 2

- ARMA(1,1) is a combination of AR(1) and MA(1)
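Putting the AR and MA forms given earlier side by side, and keeping the plus signs used here for the moving average terms (Box and Jenkins write them with minus signs), a general ARMA(p,q) process would be:

$$x_t - \mu = \phi_1 (x_{t-1} - \mu) + \dots + \phi_p (x_{t-p} - \mu) + \varepsilon_t + \theta_1 \varepsilon_{t-1} + \dots + \theta_q \varepsilon_{t-q}$$

so that ARMA(1,1), for example, is:

$$x_t - \mu = \phi_1 (x_{t-1} - \mu) + \varepsilon_t + \theta_1 \varepsilon_{t-1}$$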

References

Time Series Analysis: Forecasting & Control, 3rd Edition, Box, Jenkins & Reinsel, Prentice Hall (1994)