In the first two parts we looked at some basic statistical concepts, especially the idea of sampling from a distribution, and investigated how this question is answered: Does this sample come from a population of mean = μ?

If we can answer this abstract-looking question then we can consider questions such as:

- “how likely is it that the average temperature has changed over the last 30 years?”

- “is the temperature in Boston different from the temperature in New York?”

It is important to understand the assumptions under which we are able to put percentage probabilities on the answers to these kinds of questions.

The statistical tests so far described rely upon each event being independent from every other event. Typical examples of independent events in statistics books are:

- the toss of a coin

- the throw of a die

- the measurement of the height of a resident of Burkina Faso

In each case, the result of one measurement does not affect any other measurement.

If we measure the max and min temperatures in Ithaca, NY today, and then measure them tomorrow, and then the day after, are these independent (unrelated) events?

No.

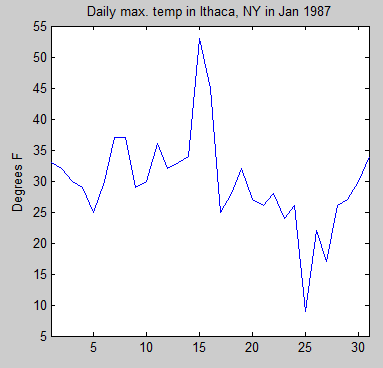

Here is the daily maximum temperature for January 1987 for Ithaca, NY:

Figure 1

Now we want to investigate how values on one day are correlated with values on another day. So we look at the correlation of the temperature on each day with progressively larger lags in days. The correlation goes by the inspiring and memorable name of the Pearson product-moment correlation coefficient.

This correlation is the value commonly known as “r”.

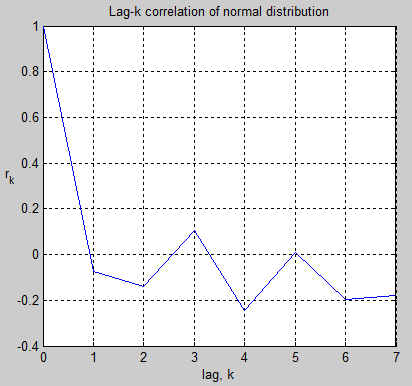

So for k=0 we are comparing each day with itself, which obviously has a perfect correlation. And for k=1 we are comparing each day with the one afterwards – and finding the (average) correlation. For k=2 we are comparing each day with the day 2 days afterwards. And so on. Here are the results:

Figure 2

As you can see, the autocorrelation decreases as the lag in days increases, which is intuitively obvious. And by the time we get to a lag of more than 5 days, the correlation has decreased to roughly zero.
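For anyone who wants to try the lag calculation themselves, here is a minimal sketch in Python. It assumes the lag-k value is simply the Pearson correlation between the series and a copy of itself shifted by k days, which may differ in small details from the exact formula in the figure above. The function name `lag_correlation` and the `temps` series are made up for illustration; `temps` is a synthetic stand-in and is not the Ithaca data.

```python
import numpy as np

def lag_correlation(x, k):
    """Pearson correlation between a series and itself shifted by k steps."""
    x = np.asarray(x, dtype=float)
    if k == 0:
        return 1.0  # each value compared with itself
    # Pair day i with day i + k, using the n - k pairs that exist.
    return float(np.corrcoef(x[:-k], x[k:])[0, 1])

# Synthetic stand-in for 31 daily maxima (an assumption, NOT real data):
# a slowly wandering series, so neighbouring days resemble each other.
rng = np.random.default_rng(0)
temps = 30 + 0.5 * np.cumsum(rng.normal(0, 3, size=31))

for k in range(6):
    print(f"lag {k}: r = {lag_correlation(temps, k):+.2f}")
```

With only 31 values the larger lags are computed from fewer and fewer pairs, so they become noisier.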

By way of comparison, here is one random (normal) distribution with the same mean and standard deviation as the Ithaca temperature values:

Figure 3

And the autocorrelation values:

Figure 4

As you would expect, the correlation of each value with the next value is around zero. The reason it is not exactly zero is just the randomness associated with only 31 values.
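To get a feel for how much scatter "only 31 values" produces, here is a rough sketch that repeats the experiment many times with independent normal values and looks at the spread of the lag-1 correlation. The number of trials and the seed are arbitrary choices. Because correlation does not depend on the mean or standard deviation, standard normal values are used rather than values scaled to the Ithaca data.

```python
import numpy as np

rng = np.random.default_rng(42)
n_values, n_trials = 31, 2000   # 31 "days"; trial count is an arbitrary choice

lag1 = np.empty(n_trials)
for i in range(n_trials):
    x = rng.normal(size=n_values)                # independent values
    lag1[i] = np.corrcoef(x[:-1], x[1:])[0, 1]   # lag-1 correlation

print(f"average lag-1 correlation: {lag1.mean():+.3f}")
print(f"spread (std) across trials: {lag1.std():.3f}")
```

The average sits close to zero, but any single 31-value sample can easily land some way either side of it.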

Digression: Time-Series and Frequency Transformations

Many people will be new to the concept of how time-series values convert into frequency plots – the Fourier transform. For those who do understand this subject, skip forward to the next sub-heading.

Suppose we have a 50Hz sine wave. If we plot amplitude against time we get the first graph below.

Figure 5

If we want to investigate the frequency components we do a Fourier transform and we get the 2nd graph below. That simply tells us the obvious fact that a 50Hz signal is a 50Hz signal. So what is the point of the exercise?

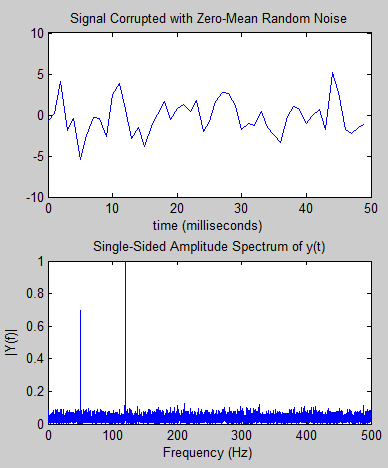

What about if we have the time-based signal shown in the next graph – what can we tell about its real source?

Figure 6

When we see the frequency transform in the 2nd graph we can immediately tell that the signal is made up of two sine waves – one at 50Hz and one at 120Hz – along with some noise. It’s not really possible to deduce that from looking at the time-domain signal (not for ordinary people anyway).

Frequency transforms give us valuable insights into data.
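As a sketch of how such a frequency plot can be produced, the Python below builds a signal from a 50Hz and a 120Hz sine wave plus noise and picks out the two strongest frequency components. The sampling rate, duration, amplitudes and noise level are all assumptions for illustration, not the values behind the figure.

```python
import numpy as np

fs = 1000                      # samples per second (assumed)
n = 10 * fs                    # 10 seconds of data (assumed)
t = np.arange(n) / fs

rng = np.random.default_rng(1)
signal = (np.sin(2 * np.pi * 50 * t)
          + np.sin(2 * np.pi * 120 * t)
          + rng.normal(0, 2, size=n))      # noise swamps the time-domain view

# Real-input FFT: amplitude at each frequency from 0 up to fs / 2.
spectrum = np.abs(np.fft.rfft(signal)) / n
freqs = np.fft.rfftfreq(n, d=1 / fs)

# The two largest peaks in the spectrum.
top_two = np.sort(freqs[np.argsort(spectrum)[-2:]])
print("strongest components (Hz):", top_two)
```

Plotting `spectrum` against `freqs` gives the kind of frequency-domain graph described above.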

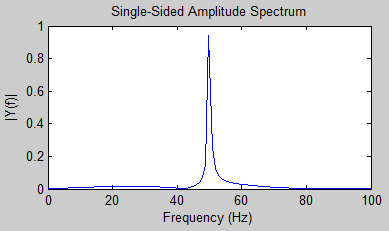

Just as a last point on this digression, in figure 5, why isn’t the frequency plot a perfect line at 50Hz? If the time-domain data went from zero to infinity, the frequency plot would be that perfect line. In figure 5, the time-domain data actually went from zero to 10 seconds (not all of which was plotted).

Here we see the frequency transform for a 50Hz sine wave over just 1 second:

Figure 7

For people new to frequency transforms it probably doesn’t seem clear why this happens but by having a truncated time series we have effectively added other frequency components – from the 1 second envelope surrounding the 50 Hz sine wave. If this last point isn’t clear, don’t worry about it.

Autocorrelation Equations and Frequency

The simplest autocorrelation model is the first-order autoregression, or AR(1) model.

The AR(1) model can be written as:

$$x_{t+1} - \mu = \phi\,(x_t - \mu) + \varepsilon_{t+1}$$

where $x_{t+1}$ = the next value in the sequence, $x_t$ = the last value in the sequence, $\mu$ = the mean, $\varepsilon_{t+1}$ = random quantity and $\phi$ = auto-regression parameter

In non-technical terms, the next value in the series is made up of a random element plus a dependence on the last value – with the strength of this dependence being the parameter φ.
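Here is a minimal sketch of generating such a series in Python, following the AR(1) equation term by term. The function name `simulate_ar1`, its defaults, and the choice to start the series at the mean plus one random draw are assumptions made for illustration.

```python
import numpy as np

def simulate_ar1(n, phi, mu=0.0, sigma=5.0, seed=0):
    """Generate n values of an AR(1) series with Gaussian noise."""
    rng = np.random.default_rng(seed)
    eps = rng.normal(0.0, sigma, size=n)   # the random element
    x = np.empty(n)
    x[0] = mu + eps[0]                     # arbitrary starting point (assumed)
    for t in range(n - 1):
        # next value = mean + phi * (last value's departure from mean) + noise
        x[t + 1] = mu + phi * (x[t] - mu) + eps[t + 1]
    return x

series = simulate_ar1(n=100, phi=0.7, mu=20.0)
print(series[:5])
```

Setting `phi=0` removes the dependence on the last value, leaving just the mean plus noise.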

It appears that there is some confusion about this simple model. Recently, referencing an article via Bishop Hill, Doug Keenan wrote:

To draw that conclusion, the IPCC had to make an assumption about the global temperature series. The assumption that it made is known as the “AR1” assumption (this is from the statistical concept of “first-order autoregression”). The assumption implies, among other things, that only the current value in a time series has a direct effect on the next value. For the global temperature series, it means that this year’s temperature affects next year’s, but temperatures in previous years do not. For example, if the last several years were extremely cold, that on its own would not affect the chance that next year will be colder than average. Hence, the assumption made by the IPCC seems intuitively implausible.

[Update – apologies to Doug Keenan for misunderstanding his point – see his comment below]

The confusion in the statement above is that mathematically the AR1 model does only rely on the last value to calculate the next value – you can see that in the formula above. But that doesn’t mean that there is no correlation between earlier values in the series. If day 2 has a relationship to day 1, and day 3 has a relationship to day 2, clearly there is a relationship between day 3 and day 1 – just not as strong as the relationship between day 3 and day 2 or between day 2 and day 1.

(And it is easy to demonstrate with a lag-2 correlation of a synthetic AR1 series – the 2-day correlation is not zero).
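A quick sketch of that demonstration, using illustrative values (φ = 0.7, 20,000 points, noise standard deviation of 5) that are assumptions rather than anything from the quoted article. For an AR(1) series the lag-k correlation is expected to be close to φ to the power k, so the lag-2 value should come out near 0.49 rather than zero.

```python
import numpy as np

phi, n = 0.7, 20_000            # illustrative values (assumed)
rng = np.random.default_rng(7)
eps = rng.normal(0.0, 5.0, size=n)

# Synthetic AR(1) series with mean 0: each value uses ONLY the previous one.
x = np.empty(n)
x[0] = eps[0]
for t in range(n - 1):
    x[t + 1] = phi * x[t] + eps[t + 1]

r1 = np.corrcoef(x[:-1], x[1:])[0, 1]   # lag-1 correlation
r2 = np.corrcoef(x[:-2], x[2:])[0, 1]   # lag-2 correlation

print(f"lag 1: {r1:.2f}   (phi   = {phi:.2f})")
print(f"lag 2: {r2:.2f}   (phi^2 = {phi**2:.2f})")
```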

Well, more for another article when we look at the various autoregression models.

For now we will consider the simplest model, AR1, to learn a few things about time-series data with serial correlation.

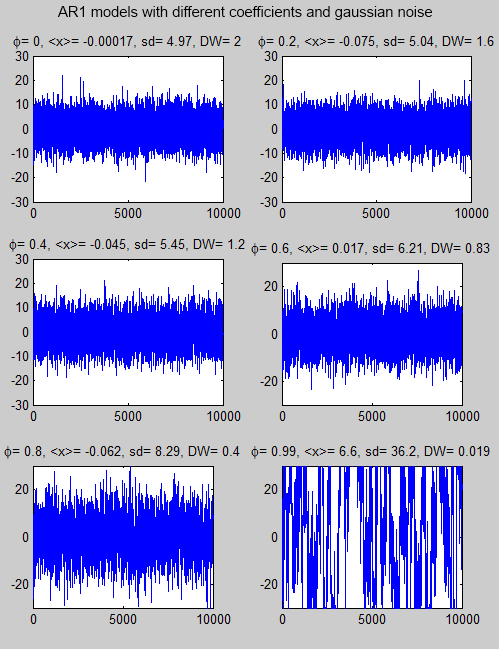

Here are some synthetic time-series with different autoregression parameters (the value φ in the equation) and Gaussian (=normal, or the “bell-shaped curve”) noise. The Gaussian noise is the same in each series – with a standard deviation of 5.

I’ve used long time-series to make the frequency characteristics clearer (later we will see the same models over a shorter time period):

Figure 8

The value <x> is the mean. Note that the standard deviation (sd) of the data gets larger as the autoregressive parameter increases. DW is the Durbin-Watson statistic which we will probably come back to at a later date.
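The growth of the standard deviation with φ follows directly from the AR(1) equation. As a sketch, assume the series is stationary (|φ| < 1) and write σ_x for the standard deviation of the data and σ_ε for the standard deviation of the noise (notation introduced here for convenience). Taking the variance of both sides of the equation, and using the fact that the new random element is independent of the last value:

$$
\sigma_x^2 = \phi^2 \sigma_x^2 + \sigma_\varepsilon^2
\quad\Longrightarrow\quad
\sigma_x = \frac{\sigma_\varepsilon}{\sqrt{1 - \phi^2}}
$$

With σ_ε = 5, a value of φ = 0 gives σ_x = 5, while (as an illustrative value) φ = 0.9 gives σ_x ≈ 11.5.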

When φ = 0, this is the same as each data value being completely independent of every other data value.

Now the frequency transformation (using a new dataset to save a little programming time on my part):

Figure 9

The first graph in the panel, with φ=0, is known as “white noise”. This means that the energy per unit frequency doesn’t change with frequency. As the autoregressive parameter increases you can see that the energy shifts to lower frequencies. This is known as “red noise”.
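One rough way to put a number on this shift is to ask what share of the total spectral energy sits in the lowest tenth of the frequency range. The sketch below does that for φ = 0 and φ = 0.9. The helper names `ar1` and `low_frequency_share`, the series length, the seed and the cutoff are all assumptions for illustration.

```python
import numpy as np

def ar1(n, phi, sigma=5.0, seed=0):
    """Synthetic AR(1) series with mean 0 and Gaussian noise."""
    rng = np.random.default_rng(seed)
    eps = rng.normal(0.0, sigma, size=n)
    x = np.empty(n)
    x[0] = eps[0]
    for t in range(n - 1):
        x[t + 1] = phi * x[t] + eps[t + 1]
    return x

def low_frequency_share(x, cutoff=0.05):
    """Share of spectral energy below `cutoff` cycles per sample."""
    power = np.abs(np.fft.rfft(x - x.mean())) ** 2
    freqs = np.fft.rfftfreq(len(x))          # 0 to 0.5 cycles per sample
    power, freqs = power[1:], freqs[1:]      # drop the zero-frequency term
    return power[freqs < cutoff].sum() / power.sum()

white = low_frequency_share(ar1(10_000, phi=0.0))
red = low_frequency_share(ar1(10_000, phi=0.9))
print(f"phi = 0.0: {white:.0%} of energy in the lowest 10% of frequencies")
print(f"phi = 0.9: {red:.0%} of energy in the lowest 10% of frequencies")
```

For white noise the share should be close to 10%, because the energy is spread evenly. For the red noise series most of the energy ends up in that low-frequency band.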

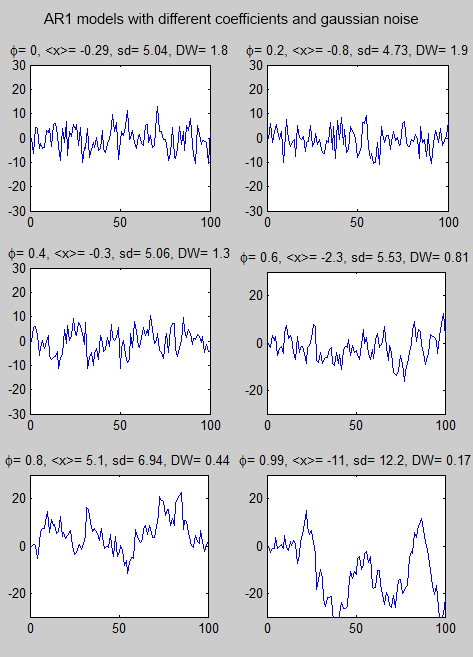

Here are the same models over 100 events (instead of 10,000) to make the time-based characteristics easier to see:

Figure 10

As the autoregression parameter increases you can see that each value is more strongly influenced by the previous value.

The equation is also sometimes known as a red noise process because a positive value of the parameter φ averages or smoothes out short-term fluctuations in the serially independent series of innovations, ε, while affecting the slower variations much less strongly. The resulting time series is called red noise by analogy to visible light depleted in the shorter wavelengths, which appears reddish.

It is evident that the most erratic point to point variations in the uncorrelated series have been smoothed out, but the slower random variations are essentially preserved. In the time domain this smoothing is expressed as positive serial correlation. From a frequency perspective, the resulting series is “reddened”.

From Wilks (2011).

There is more to cover on this simple model but the most important point to grasp is that data which is serially correlated has different statistical properties from data which is a series of independent events.

Luckily, we can still use many standard hypothesis tests but we need to make allowance for the increase in the standard deviation of serially correlated data over independent data.
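To see why some allowance is needed, here is a rough simulation sketch. It generates many short series, once with independent values (φ = 0) and once with serial correlation (φ = 0.6), and measures how much the sample mean varies from one series to the next. The function name `sample_mean_spread` and all the parameter values are assumptions chosen for illustration.

```python
import numpy as np

def sample_mean_spread(phi, n=100, trials=2000, sigma=5.0, seed=0):
    """Std of the sample mean across many simulated AR(1) series."""
    rng = np.random.default_rng(seed)
    eps = rng.normal(0.0, sigma, size=(trials, n))
    x = np.empty_like(eps)
    x[:, 0] = eps[:, 0]
    for t in range(n - 1):
        # Advance every simulated series by one step at once.
        x[:, t + 1] = phi * x[:, t] + eps[:, t + 1]
    return x.mean(axis=1).std()

independent = sample_mean_spread(phi=0.0)
correlated = sample_mean_spread(phi=0.6)
print(f"spread of the sample mean, phi = 0.0: {independent:.2f}")
print(f"spread of the sample mean, phi = 0.6: {correlated:.2f}")
```

For the independent case the spread should be close to the textbook value of 5/√100 = 0.5. The serially correlated case comes out noticeably larger, so a test that assumes independence would overstate how well the mean is known.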

References

Statistical Methods in the Atmospheric Sciences, 3rd edition, Daniel Wilks, Academic Press (2011)