During a discussion following one of the six articles on Ferenc Miskolczi someone pointed to an article in E&E (Energy & Environment). I took a look and had a few questions.

The article in question is The Thermodynamic Relationship Between Surface Temperature And Water Vapor Concentration In The Troposphere, by William C. Gilbert from 2010. I’ll call this WG2010. I encourage everyone to read the whole paper for themselves.

Actually this E&E edition is a potential collector’s item because they announce it as: Special Issue – Paradigms in Climate Research.

The author comments in the abstract:

The key to the physics discussed in this paper is the understanding of the relationship between water vapor condensation and the resulting PV work energy distribution under the influence of a gravitational field.

Which sort of implies that no one studying atmospheric physics has considered the influence of gravitational fields, or at least the author has something new to offer which hasn’t previously been understood.

Physics

Note that I have added a WG prefix to the equation numbers from the paper, for ease of referencing:

First let’s start with the basic process equation for the first law of thermodynamics

(Note that all units of measure for energy in this discussion assume intensive properties, i.e., per unit mass):

dU = dQ – PdV ….[WG1]

where dU is the change in total internal energy of the system, dQ is the change in thermal energy of the system and PdV is work done to or by the system on the surroundings.

This is (almost) fine. The author later mixes up Q and U. dQ is the heat added to the system; dU is the change in internal energy, which includes the thermal energy.

But equation (1) applies to a system that is not influenced by external fields. Since the atmosphere is under the influence of a gravitational field the first law equation must be modified to account for the potential energy portion of internal energy that is due to position:

dU = dQ + gdz – PdV ….[WG2]

where g is the acceleration of gravity (9.8 m/s²) and z is the mass particle vertical elevation relative to the earth’s surface.

[Emphasis added. Also I changed “h” into “z” in the quotes from the paper to make the equations easier to follow later].

This equation is incorrect, which will be demonstrated later.

The thermal energy component of the system (dQ) can be broken down into two distinct parts: 1) the molecular thermal energy due to its kinetic/rotational/ vibrational internal energies (CvdT) and 2) the intermolecular thermal energy resulting from the phase change (condensation/evaporation) of water vapor (Ldq). Thus the first law can be rewritten as:

dU = CvdT + Ldq + gdz – PdV ….[WG3]

where Cv is the specific heat capacity at constant volume, L is the latent heat of condensation/evaporation of water (2257 J/g) and q is the mass of water vapor available to undergo the phase change.

Ouch. dQ is the heat added to the system, and it is dU, the change in internal energy, which should be broken down into changes in thermal energy (temperature) and changes in latent heat. This is demonstrated later.

Later, the author states:

This ratio of thermal energy released versus PV work energy created is the crux of the physics behind the troposphere humidity trend profile versus surface temperature. But what is it that controls this energy ratio? It turns out that the same factor that controls the pressure profile in the troposphere also controls the tropospheric temperature profile and the PV/thermal energy ratio profile. That factor is gravity. If you take equation (3) and modify it to remove the latent heat term, and assume for an adiabatic, ideal gas system CpT = CvT + PV, you can easily derive what is known in the various meteorological texts as the “dry adiabatic lapse rate”:

dT/dz = –g/Cp = 9.8 K/km ….[WG5]

[Emphasis added]

Unfortunately, with his starting equations you can’t derive this result.

What am I talking about?

The Equations Required to Derive the Lapse Rate

Most textbooks on atmospheric physics include some derivation of the lapse rate. We consider a parcel of air of one mole. (Some terms are defined slightly differently to WG2010 – note 1).

There are 5 basic equations:

The hydrostatic equilibrium equation:

dp/dz = -ρg ….[1]

where p = pressure, z = height, ρ = density and g = acceleration due to gravity (=9.8 m/s²)

The ideal gas law:

pV = RT ….[2]

where V = volume, R = the gas constant, T = temperature in K, and this form of the equation is for 1 mole of gas

The equation for density:

ρ = M/V ….[3]

where M = mass of one mole

The First Law of Thermodynamics:

dU = dQ + dW ….[4]

where dU = change in internal energy, dQ = heat added to the system, dW = work added to the system

...rewritten for dry atmospheres (using dU = CvdT for an ideal gas and dW = -pdV) as:

dQ = CvdT + pdV ….[4a]

where Cv = heat capacity at constant volume (for one mole), dV = change in volume

And the (less well-known) equation which links heat capacity at constant volume with heat capacity at constant pressure (derived from statistical thermodynamics and experimentally verifiable):

Cp = Cv + R ….[5]

where Cp = heat capacity (for one mole) at constant pressure

With an adiabatic process no heat is transferred between the parcel and its surroundings. This is a reasonable assumption with typical atmospheric movements. As a result, we set dQ = 0 in equation 4 & 4a.

Using these 5 equations we can solve to find the dry adiabatic lapse rate (DALR):

dT/dz = -g/cp ….[6]

where dT/dz = the change in temperature with height (the lapse rate), g = acceleration due to gravity, and cp = specific heat capacity (per unit mass) at constant pressure

dT/dz ≈ -9.8 K/km
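To put numbers on [6], here is a short Python sketch. It assumes standard values for dry air (g = 9.81 m/s², M = 0.02897 kg/mol) and treats air as an ideal diatomic gas with Cv = 5/2 R, so that [5] gives Cp = 7/2 R. None of these constants come from WG2010; they are ordinary textbook values.

```python
# Sketch: evaluate the dry adiabatic lapse rate dT/dz = -g/cp, equation [6].
# Assumed constants (textbook values, not taken from WG2010):
G = 9.81                 # m/s^2, acceleration due to gravity
R = 8.314                # J/(mol K), molar gas constant
M_AIR = 0.02897          # kg/mol, molar mass of dry air
CV_MOLAR = 2.5 * R       # J/(mol K), Cv for an ideal diatomic gas (assumption)
CP_MOLAR = CV_MOLAR + R  # equation [5]: Cp = Cv + R


def dry_lapse_rate_k_per_km(g: float = G, cp_molar: float = CP_MOLAR,
                            molar_mass: float = M_AIR) -> float:
    """Return dT/dz in K/km from dT/dz = -g/cp, with cp = Cp/M per unit mass."""
    cp_specific = cp_molar / molar_mass  # J/(kg K)
    return -g / cp_specific * 1000.0     # K/m -> K/km


if __name__ == "__main__":
    print(f"cp    = {CP_MOLAR / M_AIR:.0f} J/(kg K)")
    print(f"dT/dz = {dry_lapse_rate_k_per_km():.2f} K/km")
```

The molar route (Cp = 7/2 R, then cp = Cp/M) lands close to the familiar cp of about 1004 J/(kg K), and the lapse rate comes out close to -9.8 K/km.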

Knowing that many readers are not comfortable with maths I show the derivation in The Maths Section at the end.

And also for those not so familiar with maths & calculus, the “d” in front of a term means “change in”. So, for example, “dT/dz” reads as: “the change in temperature as z changes”.

Fundamental “New Paradigm” Problems

There are two basic problems with his fundamental equations:

- he confuses internal energy with heat added, which produces a sign error

- he adds a term for gravitational potential energy when it is already implicitly included via the pressure change with height

A sign error might seem unimportant but given the claims later in the paper (with no explanation of how these claims were calculated) it is quite possible that the wrong equation was used to make these calculations.

These problems will now be explained.

Under the New Paradigm – Sign Error

Because William Gilbert mixes up internal energy and heat added, the result is a sign error. Consult a standard thermodynamics textbook and the first law of thermodynamics will be represented something like this:

dU = dQ + dW

Which in words means:

The change in internal energy equals the heat added plus the work done on the system.

And if we talk about dW as the work done by the system then the sign in front of dW will change. So, if we rewrite the above equation:

dU = dQ – pdV

By the time we get to [WG3] we have two problems.

Here is [WG3] for reference:

dU = CvdT + Ldq + gdz – PdV ….[WG3]

The first problem is that for an adiabatic process no heat is added to (or removed from) the system, so dQ = 0. The author instead sets dU = 0 and treats dQ as if it were the change in internal energy (= CvdT + Ldq).

Here is a demonstration of the problem using his equation:

If we have no phase change then Ldq = 0. The gdz term is a mistake – for later consideration – but if we consider an example with no change in height in the atmosphere, we would have (using his equation):

CvdT – PdV = 0 ….[WG3a]

So if the parcel of air expands, doing work on its environment, what happens to temperature?

dV is positive because the volume is increasing. So to keep the equation valid, dT must be positive, which means the temperature must increase.

This means that as the parcel of air does work on its environment, using up energy, its temperature increases – adding energy. A violation of the first law of thermodynamics.
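The same argument with numbers: a small Python sketch comparing the temperature change from the correct adiabatic first law, 0 = CvdT + pdV, with the one implied by [WG3a], 0 = CvdT – pdV, for a parcel that expands with no change in height. The parcel state (one mole at 280 K and 90,000 Pa, expanding by 1%) is purely hypothetical, and Cv = 5/2 R is assumed.

```python
# Sketch: adiabatic expansion at constant height (dz = 0), per mole.
#   correct first law:  0 = Cv dT + p dV  ->  dT = -p dV / Cv
#   WG3a as written:    0 = Cv dT - p dV  ->  dT = +p dV / Cv
R = 8.314      # J/(mol K)
CV = 2.5 * R   # J/(mol K), ideal diatomic gas (assumption)


def dT_correct(p: float, dV: float, cv: float = CV) -> float:
    """Temperature change when the parcel does work p dV on its surroundings."""
    return -p * dV / cv


def dT_wg3a(p: float, dV: float, cv: float = CV) -> float:
    """Temperature change implied by WG3a for the same expansion."""
    return p * dV / cv


if __name__ == "__main__":
    p = 90_000.0         # Pa, hypothetical pressure
    V = R * 280.0 / p    # m^3 for one mole at 280 K, ideal gas law [2]
    dV = 0.01 * V        # parcel expands by 1 %
    print(f"correct first law: dT = {dT_correct(p, dV):+.2f} K")
    print(f"WG3a as written:   dT = {dT_wg3a(p, dV):+.2f} K")
```

The two results are equal in size and opposite in sign: the correct equation cools the expanding parcel, while [WG3a] warms it even though it has done work on its surroundings.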

Hopefully, everyone can see that this is not correct. But it is the consequence of the incorrectly stated equation. In any case, I will use both the flawed and the fixed version to demonstrate the second problem.

Under the New Paradigm – Gravity x 2

This problem won’t appear so obvious, which is probably why William Gilbert makes the mistake himself.

In the list of 5 equations, I wrote:

dQ = CvdT + pdV ….[4a]

This is for dry atmospheres, to keep it simple (no Ldq term for water vapor condensing). If you check the Maths Section at the end, you can see that using [4a] we get the result that everyone agrees with for the lapse rate.

I didn’t write:

dQ = CvdT + Mgdz + pdV ….[should this instead be 4a?]

[Note that my equations consider 1 mole of the atmosphere rather than 1 kg which is why “M” appears in front of the gdz term].

So how come I ignored the effect of gravity in the atmosphere yet got the correct answer? Perhaps the derivation is wrong?

The effect of gravity already shows itself via the increase in pressure as we get closer to the surface of the earth.

Atmospheric physics has not been ignoring the effect of gravity and making elementary mistakes. Now for the proof.

If you consult the Maths Section, near the end we have reached the following equation and not yet inserted the equation for the first law of thermodynamics:

pdV – Mgdz = (Cp-Cv)dT ….[10]

Using [10] and “my version” of the first law I successfully derive dT/dz = -g/cp (the right result). Now we will try using William Gilbert’s equation [WG3], with Ldq = 0, to derive the dry adiabatic lapse rate.

0 = CvdT + gdz – PdV ….[WG3b]

and rewriting for one mole instead of 1 kg (and using my terms, see note 1):

pdV = CvdT + Mgdz ….[WG3c]

Inserting WG3c into [10]:

CvdT + Mgdz – Mgdz = (Cp-Cv)dT ….[11]

which, dividing through by dT, becomes:

Cv = (Cp-Cv) ↠ Cp = 2Cv ….[11a]

A New Paradigm indeed!

Now let’s fix up the sign error in WG3 and see what result we get:

0 = CvdT + gdz + PdV ….[WG3d]

and again rewriting for one mole instead of 1 kg (and again using my terms, see note 1):

pdV = -CvdT – Mgdz ….[WG3e]

Inserting WG3e into [10]:

-CvdT – Mgdz – Mgdz = (Cp-Cv)dT ….[12]

which becomes:

-CvdT – 2Mgdz = CpdT – CvdT ….[12a]

and canceling the -CvdT term from each side:

-2Mgdz = CpdT ….[12b]

So:

dT/dz = -2Mg/Cp, and because specific heat capacity, cp = Cp/M

dT/dz = -2g/cp ….[12c]

The result of “correctly including gravity” is that the dry adiabatic lapse rate ≈ -19.6 K/km.

Note the factor of 2. This is because we are now including gravity twice. The pressure in the atmosphere reduces as we go up – this is because of gravity. When a parcel of air expands due to its change in height, it does work on its surroundings and therefore reduces in temperature – adiabatic expansion. Gravity is already taken into account with the hydrostatic equation.
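The three substitutions into [10] can be checked mechanically. The Python sketch below writes each version of the first law (per mole, as used in the text) in the form pdV = a·CvdT + b·Mgdz and solves [10] for dT/dz, giving dT/dz = (1 – b)Mg / ((a + 1)Cv – Cp). The constants (ideal diatomic air, g = 9.81 m/s², M = 0.02897 kg/mol) are assumptions for illustration. For [WG3c] the numerator vanishes, and because Cp ≠ 2Cv for air, [11] can then only be satisfied with dT = 0.

```python
# Sketch: insert versions of the first law into equation [10],
#   p dV - M g dz = (Cp - Cv) dT,
# each written as p dV = a * Cv dT + b * M g dz, and solve for dT/dz.
# Assumed constants: ideal diatomic dry air, Cv = 5/2 R, Cp = Cv + R ([5]).
G = 9.81        # m/s^2
R = 8.314       # J/(mol K)
M = 0.02897     # kg/mol
CV = 2.5 * R
CP = CV + R

# (a, b) coefficients for p dV = a*Cv dT + b*M g dz
VARIANTS = {
    "standard [4a]": (-1.0, 0.0),       # p dV = -Cv dT
    "WG3c (as written)": (1.0, 1.0),    # p dV = Cv dT + M g dz
    "WG3e (sign fixed)": (-1.0, -1.0),  # p dV = -Cv dT - M g dz
}


def lapse_rate_k_per_km(a: float, b: float) -> float:
    """Solve ((a + 1) Cv - Cp) dT = (1 - b) M g dz for dT/dz, in K/km."""
    denominator = (a + 1.0) * CV - CP
    if denominator == 0.0:
        raise ValueError("dT/dz undetermined: requires Cp = (a + 1) Cv")
    return (1.0 - b) * M * G / denominator * 1000.0


if __name__ == "__main__":
    for name, (a, b) in VARIANTS.items():
        print(f"{name:18s} dT/dz = {lapse_rate_k_per_km(a, b):7.2f} K/km")
```

The standard form recovers roughly -9.8 K/km, the sign-corrected WG equation gives exactly twice that (gravity counted twice), and the equation as written gives no lapse rate at all.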

The Physics of Hand-Waving

The author says:

As we shall see, PV work energy is very important to the understanding of this thermodynamic behavior of the atmosphere, and the thermodynamic role of water vapor condensation plays an important part in this overall energy balance. But this is unfortunately often overlooked or ignored in the more recent climate science literature. The atmosphere is a very dynamic system and cannot be adequately analyzed using static, steady state mental models that primarily focus only on thermal energy.

Emphasis added. This is an unsupported assertion: no references are given.

In the next stage of the “physics” section, the author doesn’t bother with any equations, making it difficult to understand exactly what he is claiming.

Keeping this gravitational steady state equilibrium in mind, let’s look again at what happens when latent heat is released (condensation) during air parcel ascension.

Latent heat release immediately increases the parcel temperature. But that also results in rapid PV expansion which then results in a drop in parcel temperature. Buoyancy results and the parcel ascends and is driven by the descending pressure profile created by gravity.

The rate of ascension, and the parcel temperature, is a function of the quantity of latent heat released and the PV work needed to overcome the gravitational field to reach a dynamic equilibrium. The more latent heat that is released, the more rapid the expansion / ascension. And the more rapid the ascension, the more rapid is the adiabatic cooling of the parcel. Thus the PV/thermal energy ratio should be a function of the amount of latent heat available for phase conversion at any given altitude. The corresponding physics shows the system will try to force the convecting parcel to approach the dry adiabatic or “gravitational” lapse rate as internal latent heat is released.

For the water vapor remaining uncondensed in the parcel, saturation and subsequent condensation will occur at a more rapid rate if more latent heat is released. In fact if the cooling rate is sufficiently large, super saturation can occur, which can then cause very sudden condensation in greater quantity. Thus the thermal/PV energy ratio is critical in determining the rate of condensation occurring. The higher this ratio, the more complete is the condensation in the parcel, and the lower the specific humidity will be at higher elevations.

I tried (unsuccessfully) to write down some equations to reflect the above paragraphs. The correct approach for the author would be:

- A. Here is what atmospheric physics states now (with references)

- B. Here are the flaws/omissions due to theoretical consideration i), ii), etc

- C. Here is the new derivation (with clear statement of physics principles upon which the new equations are based)

One point I think the author is claiming is that the speed of ascent is a critical factor. Yet the equation for the moist adiabatic lapse rate contains no time dependence at all.

The (standard) equation has the form (note 2):

dT/dz = -(g/cp) {[1+Lq*/RT]/[1+βLq*/cp]} ….[13]

where q* is the saturation specific humidity and is a function of p & T (i.e. not a constant), R is the gas constant per unit mass of dry air, and β = 0.067/°C. (See, for example: Atmosphere, Ocean & Climate Dynamics by Marshall & Plumb, 2008)
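A minimal Python sketch of [13], with the same sign convention as [6] (dT/dz negative). The pieces not in the article are assumptions: the Bolton (1980) fit for saturation vapor pressure, the approximation q* ≈ 0.622 e_s/p, and the constants cp = 1004 J/(kg K), R = 287 J/(kg K), L = 2.5 × 10⁶ J/kg. The point it illustrates is that the only inputs are temperature and pressure; nothing in the formula refers to how fast the parcel rises.

```python
import math

# Sketch of equation [13]. Assumptions (not from the article): Bolton (1980)
# saturation vapour pressure fit, q* ~ 0.622 e_s / p, and the constants below.
G = 9.81        # m/s^2
CP = 1004.0     # J/(kg K), dry air
R_DRY = 287.0   # J/(kg K), gas constant per unit mass of dry air
L = 2.5e6       # J/kg, latent heat of vaporisation
BETA = 0.067    # 1/K, value quoted with [13]


def saturation_specific_humidity(t_kelvin: float, p_pa: float) -> float:
    """q* (kg/kg) from the Bolton (1980) e_s fit in hPa and q* ~ 0.622 e_s / p."""
    t_c = t_kelvin - 273.15
    e_s_hpa = 6.112 * math.exp(17.67 * t_c / (t_c + 243.5))
    return 0.622 * (e_s_hpa * 100.0) / p_pa


def moist_lapse_rate_k_per_km(t_kelvin: float, p_pa: float) -> float:
    """dT/dz from [13]; depends only on T and p (through q*), not on time."""
    q_star = saturation_specific_humidity(t_kelvin, p_pa)
    numerator = 1.0 + L * q_star / (R_DRY * t_kelvin)
    denominator = 1.0 + BETA * L * q_star / CP
    return -G / CP * numerator / denominator * 1000.0


if __name__ == "__main__":
    for t, p in [(298.15, 100_000.0), (288.15, 100_000.0), (250.0, 50_000.0)]:
        rate = moist_lapse_rate_k_per_km(t, p)
        print(f"T = {t:6.1f} K, p = {p / 100:5.0f} hPa: dT/dz = {rate:5.2f} K/km")
```

Warm, moist conditions give a much smaller magnitude than the dry value, and cold conditions approach it, consistent with the range of roughly 3 to 9.8 K/km usually quoted.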

And this means that if the ascent is – for example – twice as fast, the amount of water vapor condensed at any given height will still be the same. It will happen in half the time, but why would this change any of the thermodynamics of the process?

It might, but it’s not clearly stated, so who can determine the “new physics”?

I can see that something else is claimed about the ratio CvdT/pdV, but I don’t know what it is, or what is behind the claim.

Writing the equations down is important so that other people can evaluate the claim.

And the final “result” of the hand waving is what appears to be the crux of the paper – more humidity at the surface will cause so much “faster” condensation of the moisture that the parcel of air will be drier higher up in the atmosphere. (Where “faster” could mean dT/dt, or could mean dT/dz).

Assuming I understood the claim of the paper correctly it has not been proven from any theoretical considerations. (And I’m not sure I have understood the claim correctly).

Empirical Observations

The heading is actually “Empirical Observations to Verify the Physics”. A more accurate title is “Empirical Observations”.

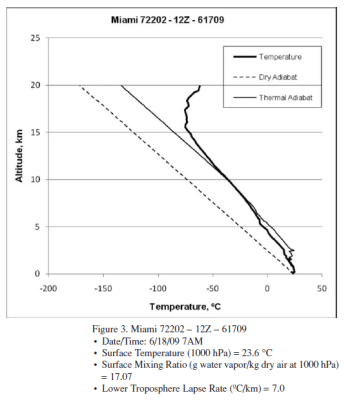

The author provides 3 radiosonde profiles from Miami. Here is one example:

Figure 1 – “Thermal adiabat” in the legend = “moist adiabat”

With reference to the 3 profiles, a higher surface humidity apparently leads to complete condensation at a lower altitude.

This is, of course, interesting. This would mean a higher humidity at the surface leads to a drier upper troposphere.

But it’s just 3 profiles. From one location on two different days. Does this prove something or should a few more profiles be used?

A few statements that need backing up:

The lower troposphere lapse rate decreases (slower rate of cooling) with increasing system surface humidity levels, as expected. But the differences in lapse rate are far less than expected based on the relative release of latent heat occurring in the three systems.

What equation determines “than expected”? What result was calculated vs measured? What implications result?

The amount of PV work that occurs during ascension increases markedly as the system surface humidity levels increase, especially at lower altitudes..

How was this calculated? What specifically is the claim? Equation 4a, under adiabatic conditions and with the addition of latent heat, reads like this:

CvdT + Ldq + pdV = 0 ….[4a]

Was this equation solved from measured variables of pressure, temperature & specific humidity?
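For reference, here is one way such a calculation could be done. This is a hypothetical Python helper, not the author's method: it switches to per-unit-mass quantities (cv dT + L dq + p dv = 0), estimates specific volume from v = R_d T/p for dry air (ignoring the effect of moisture on density), and takes a trapezoidal estimate of p dv between two sonde levels. The level values in the usage lines are made up. A large residual would indicate that the two levels are not connected by the adiabatic process the equation describes, which is expected when a sonde is not following one parcel.

```python
# Hypothetical helper: evaluate each term of cv dT + L dq + p dv = 0
# (per unit mass) between two radiosonde levels.
# Assumptions: dry-air specific volume v = R_d T / p and a trapezoidal
# estimate of p dv between the levels.
CV = 717.0      # J/(kg K), dry air at constant volume
R_DRY = 287.0   # J/(kg K), gas constant per unit mass of dry air
L = 2.5e6       # J/kg, latent heat of vaporisation


def first_law_terms(p1: float, t1: float, q1: float,
                    p2: float, t2: float, q2: float) -> tuple:
    """Return (cv dT, L dq, p dv, residual) in J/kg going from level 1 to 2.

    p in Pa, t in K, q (specific humidity) in kg/kg.
    """
    v1 = R_DRY * t1 / p1
    v2 = R_DRY * t2 / p2
    thermal = CV * (t2 - t1)
    latent = L * (q2 - q1)              # negative when vapour condenses
    work = 0.5 * (p1 + p2) * (v2 - v1)  # positive when the air expands
    return thermal, latent, work, thermal + latent + work


if __name__ == "__main__":
    # Made-up levels for illustration only, not data from WG2010
    terms = first_law_terms(100_000.0, 293.15, 0.0147,
                            90_000.0, 289.15, 0.0126)
    for name, value in zip(("cv dT", "L dq", "p dv", "residual"), terms):
        print(f"{name:8s} {value:9.1f} J/kg")
```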

Latent heat release is effectively complete at 7.5 km for the highest surface humidity system (20.06 g/kg) but continues up to 11 km for the lower surface humidity systems (18.17 and 17.07 g/kg). The higher humidity system has seen complete condensation at a lower altitude, and a significantly higher temperature (−17 ºC) than the lower humidity systems (∼ −40 ºC) despite the much greater quantity of latent heat released.

How was this determined?

If it’s true, perhaps the air from the highest-humidity surface condition ascended into a colder air front and therefore lost all its water vapor due to the lower temperature?

Why is this (obvious) possibility not commented on or examined??

Textbook Stuff and Why Relative Humidity doesn’t Increase with Height

The radiosonde profiles in the paper are not necessarily following one “parcel” of air.

Consider a parcel of air near saturation at the surface. It rises, cools and soon reaches saturation. So condensation takes place, the release of latent heat causes the air to be more buoyant and so it keeps rising. As it rises water vapor is continually condensing and the air (of this parcel) will be at 100% relative humidity.

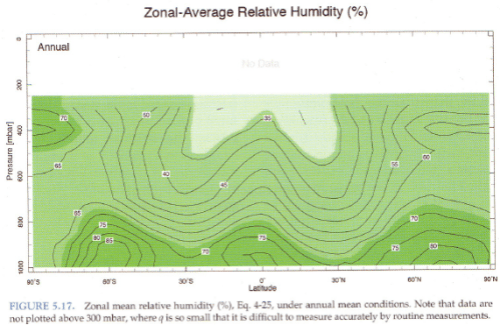

Yet relative humidity doesn’t increase with height, it reduces:

Figure 2

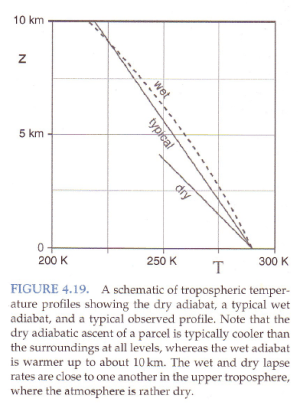

Standard textbook stuff on typical temperature profiles vs dry and moist adiabatic profiles:

Figure 3

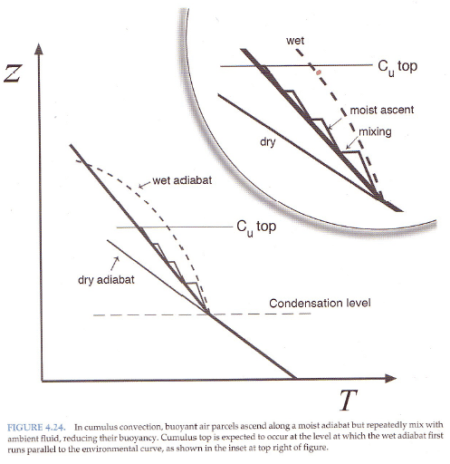

And explaining why the atmosphere under convection doesn’t always follow a moist adiabat:

Figure 4

The atmosphere has descending dry air as well as rising moist air. Mixing of air takes place, which is why relative humidity reduces with height.
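A toy Python illustration of why mixing lowers relative humidity. It assumes the saturated rising air and the drier descending air are mixed at the same temperature and pressure, so they share one saturation specific humidity q*, and specific humidity then mixes linearly by mass. Temperature changes from mixing and from evaporation are ignored, and all numbers are hypothetical.

```python
# Sketch: relative humidity after mass-weighted mixing of a saturated rising
# parcel with drier descending air. Assumes both samples share the same
# temperature and pressure, hence the same saturation specific humidity q*.
# All numbers in the usage lines are hypothetical.


def mixed_relative_humidity(q_moist: float, q_dry: float, q_sat: float,
                            moist_fraction: float) -> float:
    """Return RH (0 to 1) of the mixture; specific humidity mixes linearly by mass."""
    if not 0.0 <= moist_fraction <= 1.0:
        raise ValueError("moist_fraction must lie between 0 and 1")
    q_mix = moist_fraction * q_moist + (1.0 - moist_fraction) * q_dry
    return q_mix / q_sat


if __name__ == "__main__":
    q_sat = 0.005  # kg/kg, hypothetical saturation value at the mixing level
    for f in (1.0, 0.75, 0.5, 0.25):
        rh = mixed_relative_humidity(q_sat, 0.001, q_sat, f)
        print(f"moist fraction {f:.2f}: RH = {100 * rh:.0f} %")
```

Only the unmixed saturated parcel sits at 100%; any admixture of descending dry air pulls the average relative humidity below saturation.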

Conclusion

The “theory section” of the paper is not a theory section. It has a few equations which are incorrect, followed by some hand-waving arguments that might be interesting if they were turned into equations that could be examined.

It is elementary to prove the errors in the few equations stated in the paper. If we use the author’s equations we derive a final result which contradicts known fundamental thermodynamics.

The empirical results consist of 3 radiosonde profiles with many claims that can’t be tested because the method by which these claims were calculated is not explained.

If it turned out that – all other conditions remaining the same – higher specific humidity at the surface translated into a drier upper troposphere, this would be really interesting stuff.

But 3 radiosonde profiles in support of this claim is not sufficient evidence.

The Maths Section – Real Derivation of Dry Adiabatic Lapse Rate

There are a few ways to get to the final result – this is just one approach. Refer to the original 5 equations under the heading: The Equations Required to Derive the Lapse Rate.

From [2], pV = RT, differentiate both sides with respect to T:

↠ d(pV)/dT = d(RT)/dT

The left hand side can be expanded (product rule) as V.dp/dT + p.dV/dT, and the right hand side = R (as dT/dT = 1). Multiplying both sides by dT:

↠ Vdp + pdV = RdT ….[7]

Insert [5], Cp = Cv + R, into [7]:

Vdp + pdV = (Cp-Cv)dT ….[8]

From [1] & [3]:

Vdp = -Mgdz ….[9]

Insert [9] into [8]:

pdV – Mgdz = (Cp-Cv)dT ….[10]

From [4a], under adiabatic conditions dQ = 0, so CvdT + pdV = 0, i.e. pdV = -CvdT. Substituting into [10]:

-CvdT – Mgdz = CpdT – CvdT

and adding CvdT to both sides:

-Mgdz = CpdT, or dT/dz = -Mg/Cp ….[11]

and specific heat capacity, cp = Cp/M, so:

dT/dz = -g/cp ….[11a]

The correct result, stated as equation [6] earlier.
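An independent numerical check, sketched in Python under stated assumptions (ideal diatomic dry air with Cp = 7/2 R, surface at 288 K and 1000 hPa). Combining [2], [4a] with dQ = 0 and [5] gives Poisson's relation, T·(p0/p)^(R/Cp) = constant, for a parcel moved adiabatically. The sketch builds a column whose temperature falls at g/cp, integrates the hydrostatic equation [1] (with density from [2] and [3]) for pressure, and confirms that a parcel lifted adiabatically from the surface has the same temperature as the column at every height. Note that no separate gravitational energy term is needed anywhere.

```python
# Sketch: check that a column cooling at g/cp is exactly an adiabat.
# Assumed constants: ideal diatomic dry air (Cp = 7/2 R), surface 288 K, 1000 hPa.
G = 9.81              # m/s^2
R = 8.314             # J/(mol K)
M = 0.02897           # kg/mol
CP = 3.5 * R          # J/(mol K)
LAPSE = G * M / CP    # K/m, i.e. g/cp with cp = Cp/M


def column(z_top: float = 10_000.0, dz: float = 1.0,
           t0: float = 288.0, p0: float = 100_000.0):
    """Yield (z, T, p), integrating dp/dz = -M g p / (R T) with a midpoint step."""
    z, p = 0.0, p0
    while z < z_top:
        t = t0 - LAPSE * z
        yield z, t, p
        t_mid = t0 - LAPSE * (z + 0.5 * dz)
        # rho = M p / (R T) from [2] and [3]; estimate p at the midpoint first
        p_mid = p - 0.5 * dz * M * p * G / (R * t)
        p -= dz * M * p_mid * G / (R * t_mid)
        z += dz


def max_theta_drift(t0: float = 288.0, p0: float = 100_000.0) -> float:
    """Largest |T (p0/p)^(R/Cp) - t0| in K along the column (0 for an adiabat)."""
    return max(abs(t * (p0 / p) ** (R / CP) - t0)
               for _, t, p in column(t0=t0, p0=p0))


if __name__ == "__main__":
    print(f"g/cp = {LAPSE * 1000:.2f} K/km")
    print(f"max drift of adiabatic parcel from column: {max_theta_drift():.2e} K")
```

The drift is limited only by the step size of the integration, which is what we expect if [11a] is right.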

Notes

Note 1: Definitions in equations. WG2010 has:

- P = pressure, while this article has p = pressure (lower case instead of upper case)

- Cv = heat capacity for 1 kg, this article has Cv = heat capacity for one mole, and cv = heat capacity for 1 kg.

Note 2: The moist adiabatic lapse rate is calculated using the same approach but with an extra term, Ldq, in equation 4a, which accounts for the latent heat released as water vapor condenses.

SoD

The internal energy (U) is a state function and contains any relevant energy in the given situation.

Kinetic energy of molecules (but not motion of the centre of mass)

Potential energy (in this case gravitational)

Perhaps chemical and electrical energy (if appropriate); here, the mL factor (latent heat)

and so on.

Perhaps W C Gilbert thought that expanding the internal energy collection (by removing the brackets, so to speak) might illustrate the mechanics of the system.

I would agree with you that great care needs to be exercised here to avoid double counting.

However I thought that the main contribution that W C Gilbert and Hans Jelbring brought to the debate is that the greenhouse effect plays no part whatsoever in the dry adiabatic lapse rate.

That this is now accepted by all rational people with the exception of some unreconstructed greenhouse diehards is due to their popularising of the issue.

Bryan,

I’ve never seen anyone claim that the dry adiabatic lapse rate has anything to do with the greenhouse effect. I’ve seen several derivations; none of them even mention carbon dioxide.

So that is no contribution of Gilbert & Jelbring.

Neal J. King

When did you see your first derivation of the dry adiabatic lapse rate?

I can assure you that “out there”, several commentators who consider themselves quite expert are astonished to find that the “greenhouse effect” plays no part in the basic temperature profile of the troposphere.

I think that this is the first post in which SoD has headlined it.

W C Gilbert and Leonard Weinstein were major contributors to a posting on the atmosphere of Venus.

SoD did not raise any issues with W C Gilbert then, as far as my memory goes.

Perhaps SoD will give you a link to that posting; I can’t remember the exact title.

Bryan,

The first derivation I saw around 1977, from a book first published in 1936: Fermi’s book on thermodynamics. A simple two-page derivation, based only on the adiabatic gas law and hydrostatic equilibrium.

Neal J. King

I agree there was nothing new in the thermodynamics calculation of Gilbert & Jelbring.

Rather it has been “overlooked” by people following a basic climate science course.

Otherwise I cannot account for the astonishment I find when it is brought to the attention of greenhouse enthusiasts.

My clinching argument if the point comes up is to say that even SoD accepts this fact.

I was hoping you could have found it in an early climate science textbook.

The famous historical “greenhouse” experiment by R W Wood was similarly overlooked by some quite eminent authors of thermodynamics textbooks.

I have some textbook examples of an actual greenhouse being used to describe the greenhouse effect.

Even now some people (thankfully fewer and fewer) think that R W Wood got it wrong.

Bryan,

From what SoD documents, there is not much that is right about G&J’s calculation.

I have stumbled across other derivations of the adiabatic lapse rate, but I’ve never seen any suggestion that it depended in any way on the greenhouse effect.

It would be nice to get SoD to confirm (or deny) the absence of any role by the “greenhouse effect” in determining the temperature profile in the troposphere (apart, perhaps, from effects on the height of the troposphere itself?).

omnologos:

The subject gets confused by people wanting simple answers to non-precise questions.

Many times the question is asked by people who don’t grasp the difference between the more specific questions that can be posed. I am not making any comment about you by the way – just the reason for my lengthy answer.

1. If you asked the question: “Is the adiabatic lapse rate determined by any radiation considerations?” – the answer is No, radiation has absolutely nothing to do with the adiabatic lapse rate.

2. To the precise question you actually asked maybe without realizing it, the answer is – Deny (=Deny the absence of any role by the “greenhouse effect” in determining the temperature profile in the troposphere apart, perhaps, from effects on the height of the troposphere itself)

3. To the question you probably meant to ask – and let me rephrase it – “Is dT/dz of the atmosphere determined by the greenhouse effect?” – the answer is – Yes, but not in the way you might think. Without a greenhouse effect, dT/dz is on average 0; with a greenhouse effect, dT/dz = the adiabatic lapse rate.

4. To a more precise phrasing of your question, “Would the top of the troposphere be at the surface with no greenhouse effect?” – the answer is Yes, i.e., there would be no troposphere (although this is a more difficult subject), which is why I answered “Deny” to your actual question.

Note that points 3 & 4 depend on the definition of the troposphere, which is usually taken to be the region where convection operates.

To understand the answers, first a citation from an earlier article, followed by a more lengthy explanation.

From Things Climate Science has Totally Missed? – Convection

With no radiatively-active gases it is difficult to see how the lapse rate can be sustained. The lapse rate is not determined by any radiative physics. But if no process exists to initiate convection then you will not see the adiabatic lapse rate operating.

The stratosphere, for example, does not follow the adiabatic lapse rate. Why not? The same laws of physics operate in the stratosphere. The reason is because radiation is a more effective mover of energy than convection. So convection does not take place (see note 1)

So in an atmosphere where no gases absorb longwave radiation, the question becomes “what initiates convection?”

At the moment, in our atmosphere, convection is a net mover of energy from the surface into the troposphere. And radiation from the atmosphere to the surface returns this energy.

If the surface radiated all of the absorbed solar radiation back into space (as would eventually happen with no “greenhouse” gases), then if convection became a net mover of energy from the surface, what returns this energy to the surface?

There was an interesting discussion about this in Convection, Venus, Thought Experiments and Tall Rooms Full of Gas – A Discussion. You can see the view of Leonard Weinstein who (massive over-simplification coming, sorry Leonard, just trying to keep my explanation short), sees the mechanical energy of planetary rotation plus differential solar heating as providing energy to initiate & sustain convection (both up and down obviously).

Sorry for my long answer to your short question. Perhaps I should have written an article.

Note 1 – Some convection does take place in the stratosphere. But as a general rule the vertical convection that we see in the troposphere does not operate in the stratosphere.

Note 2 – Often I write “the inappropriately-named ‘greenhouse’ effect” and nearly always use quotes around “greenhouse” effect. Due to multiple quote marks I removed them for readability.

thank you SoD for your kind answer(s). Somehow I think if there were more of you and less of realclimaters or skepticalscientists there would be no controversy at all. 😎

I shall read the other link, most likely will be back for more q’s.

And the short answer:

The adiabatic lapse rate describes what happens to air that is convected.

The adiabatic lapse rate does not determine whether convection takes place.

If no convection takes place, dT/dz will therefore not be determined by the adiabatic lapse rate. If convection does take place, dT/dz = adiabatic lapse rate.

Adiabatic lapse rate is not determined by radiative processes.

Convection (whether or not it takes place) is affected by radiative processes.

Note: by common convention we write dT/dz = -Γ, where Γ is a positive number. Γ = 9.8 K/km for dry air, and a number between 3 K/km and 9.8 K/km for moist air.

Γ is the Greek capital letter Gamma.

SOD,

Thank you for spending the time to review my paper. Feedback is always welcome (with the exception of the water vapor kind). This has also been very helpful to me in better understanding the broken paradigm that so many of the radiation heat transfer aficionados seem to suffer from. It appears to be simply a general lack of understanding of basic thermodynamics outside of radiation heat transfer. So be it.

I began the section on “Physics” with people such as yourself in mind when I said:

There is nothing controversial in this entire section – it is just basic physics. It has been reviewed by several physicists both before and after publication and the only criticism I received was that it was too basic to be included in a scientific paper. But I felt it was necessary to keep it in since it would probably be read by some “Climate Scientists” and they would need all the help they could get. It seems that I was right. (Note: there is a misstatement on pg. 266 where I said for adiabatic processes dU = 0. I tried to correct it before publication but was too late. But it does not affect the validity of the rest of the discussion).

I will not try to address all your points – they are a jumbled mess – but I will address a few key items to help you get back on track.

First, you seem to tie yourself in knots over the sign of the work term in the first law. It is very simple and you explained it correctly in your discussion. The term is positive if work is being done on the system by the surroundings. The term is negative if the system is doing work on the surroundings. I show a negative sign since I am dealing with upward convection and the parcel is expanding and doing work on the surroundings. If I was dealing with downward convection (subsidence) I would show a positive sign for work energy since the parcel is being compressed by the surroundings. It’s all just common sense. Unfortunately the bulk of your discussion on this point is non-sense.

Did you actually read the paper? Just in case, here is a very brief synopsis. If the system (parcel) does work on the surroundings, the parcel expands (- PdV). This energy has to come from somewhere and since the process is assumed to be adiabatic, the energy will come from CvdT and the temperature will decrease. But the parcel is now more buoyant and it will rise; and gdz will increase, offsetting the loss in CvdT. Thus, according to the first law dU = 0. But you don’t “believe” in “gdz” so it is pointless to spend more time on this. Once you get things figured out, and know the difference between dQ and dU, I can spend some time going into “free energy” and maybe you will understand better.

Second, you seem to have had a problem with my statement:

You said:

Let me help you. Here is my equation (3):

dU = CvdT + Ldq + gdh – PdV (3)

Remove the latent heat term (the parcel is dry) and you get my equation (2):

dU = CvdT + gdh – PdV (2)

But

CvT + PdV = CpT

How? (I skipped that part in my paper because I thought everyone knew it already)

Cp – Cv = R and

PV = nRT

Substituting:

Cp – Cv = PV/T

CpT – CvT = PV

CpT = CvT + PV

You can ignore the (n) if you convert the terms to intensive units (J/kg). Also you need to use the correct sign for PV (work is being done on the parcel in this case). Now equation (2) becomes:

dU = CpdT + gdh

But since dH = 0 (read the paper);

dT/dh = – g/Cp

Voila!

I have no idea what you were doing when you came up with Cp = Cv/2. But it shows you need a refresher course in basic physics (why “gdh” is important for instance – that pesky gravity thing). Now, if you want to derive the same relationship using 5 equations instead of 1 (first law), go right ahead. But I would prefer going through the Panama Canal rather than round Cape Horn (I hear it is a very treacherous journey).

By the way, the first law is generally written in the form of the next-to-last equation above: dU = CpdT + gdh. I purposely used the Cv version to point out the role of PV work in atmospheric thermodynamics. The energy distribution in the atmosphere is constantly shifting between the four terms in equation (3): thermal energy (CvdT), potential thermal energy (Ldq and gdh) and work energy (PdV). The radiation heat transfer aficionados generally forget (or are unaware of) this basic fact. But it is the distribution of these forms of energy at any point in time that determines the radiative properties of the atmosphere. (Repeat that last sentence 10 times before you go to bed at night, and continue to repeat each night until it has sunk in.)

Just a few other comments concerning your article and I will return to my Sunday plans:

• I strongly advise you not to use molar quantities when dealing with thermodynamic equations. Molar quantities are useful in balancing chemical equations but are pretty worthless elsewhere (one of my degrees is in chemistry and I know from experience). Using molar quantities in thermodynamic equations can lead to awkward results – as you have aptly demonstrated.

• You make the following statement concerning one of my graphs:

No! The “thermal adiabat” is defined as I describe it in the paper. If you would read and understand that very salient point, you may understand the rest of it.

• As with the Miskolczi paper, you seem to struggle with the concept of “empirical”. As an example, you said this:

It was determined directly from the radiosonde data – it’s called “measurement”. That is also why this section was titled “Empirical Observations”. You do realize that a lot of the equations you worship in the textbooks were actually the result of “empirical observations” don’t you?

One last comment. You say:

Yes, that is the whole point! Reread the paper. Good luck!

Bill Gilbert

P.S. Contrary to your statement, the dry adiabatic lapse rate does not require convection to exist. It can exist in a static as well as a dynamic atmosphere. Convection is the means for maintaining the dry adiabatic lapse rate, not for creating it. It’s that gravity thing again. But I will save that discussion for another time. But if anyone wants to pursue the topic, it can be found in the SOD thread starting at:

For some reason SOD does not usually refer back to this thread.

SoD says

……”And the (less well-known) equation which links heat capacity at constant volume with heat capacity at constant pressure (derived from statistical thermodynamics and experimentally verifiable):

Cp = Cv + R ….[5]”…..

In fact this derivation is done in one page from the classical thermodynamic theory as taught to first-year physics students.

No need to complicate matters here.

See University Physics, Young and Freedman, page 547.

williamcg,

Thanks for replying. I will take it one point at a time.

This is my first point of difference.

The fact that you explain it differently in words doesn’t mean your sign error has gone away in the equation. We both agree with the physics explanation of adiabatic cooling. We have a different equation.

It is easy to prove which one is correct.

When the volume of the parcel increases (expansion, dV positive), what happens to the temperature? Well, the parcel cools, so dT is negative.

dV +ve, dT -ve.

An equation with CvdT + pdV = 0 fulfils this.

An equation with CvdT – pdV = 0 cannot fulfil this.

That’s a sign error in your equation.

I haven’t said you don’t understand adiabatic cooling, I have said your equation is incorrect.

Would you like to explain how CvdT – pdV = 0 can be valid with expansion and cooling?
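A minimal numeric sketch of the sign test above, assuming one kilogram of dry air with an illustrative Cv of about 718 J/(kg K), a pressure of 100 kPa and a small expansion dv (all values are assumptions chosen for illustration, not taken from either comment). It solves each candidate form of the adiabatic first law for dT, with P dV meaning work done by the parcel:

```python
# Illustrative per-unit-mass values for dry air (assumptions)
CV = 718.0      # J/(kg K), specific heat at constant volume
P = 100_000.0   # Pa
DV = 1e-3       # m^3/kg, a small expansion (dv > 0)


def dT_from_plus_form(p, dv, cv=CV):
    """Solve Cv*dT + P*dV = 0 for dT."""
    return -p * dv / cv


def dT_from_minus_form(p, dv, cv=CV):
    """Solve Cv*dT - P*dV = 0 for dT."""
    return p * dv / cv


print(f"Cv dT + P dV = 0 -> dT = {dT_from_plus_form(P, DV):+.4f} K")
print(f"Cv dT - P dV = 0 -> dT = {dT_from_minus_form(P, DV):+.4f} K")
```

For an expansion, the first form gives a negative dT (cooling) and the second a positive dT (warming), which is the whole of the sign argument.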

And for the second step..

I was substituting your equation 2 [ 0 = CvdT + gdh – PdV ] into the correct derivation.

Thank you for showing how you got your result and for your kind words. Both have made my day.

Just to get other readers thinking, I will wait to see who claims first prize for spotting your rather large error – in the section between “Here is my equation (3):” and “Voila!”

The Panama Canal method:

Recommended for all budding maths students who want to reduce the time to the result, perhaps compromising very slightly on mathematical integrity, but, heh, let’s not get hung up on details..

Your equation 2 for dry conditions: CvdT + gdh – PdV = 0 … [2]

You explain your derivation of the dry adiabatic lapse rate thus – and I’ve got to tell you, it is awesome:

[Note: I added “[4]” as a reference]

So you substitute PdV in [2] with the value for PV from [4]. Tidy!

First, the sign in [2] changes as I already said it should, so equation 2 is rewritten as:

CvdT + gdh + PdV = 0 [2a]

Substitute via Panama Canal method:

CvdT + gdh + CpT – CvT = 0 [2a]

And using said “Panama Canal” method:

Cv.dT – Cv.T = 0? (I’m guessing here)

⇒ gdh = – CpT, we’ll just call this one CpdT, via the “let’s be friends” argument and VOILA:

gdh = -CpdT and so we have proven dT/dh = -g/Cp !!!!!!!

I remember (a long time ago) a friend of mine telling me about one of his first-year maths classes on vectors, where at the end of the first-year course, with half an hour remaining in the lecture, the lecturer said “Ok, so we have a bit of time left, shall we start our end of term party, or are there any questions?”

A slight pause and one guy shouted out from the back – “Yes, I’ve got a question. All term I’ve noticed that you put a line under some of the letters, but not under others.. why is that?”

It’s a hilarious maths joke but not that funny for anyone who wasn’t there, or who doesn’t know how vectors are written in maths..

But I was reminded of it when I saw the Panama Canal method.

William, what do you think the “d’s” actually mean in all these equations?

I agree that it’s quicker to just drop them wherever convenient for expediency, but – and call me old-fashioned – I think “VOILA” is a little premature.

P x V does not equal P x dV.

Cv x T does not equal Cv x dT
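A rough illustration, using assumed per-unit-mass values for dry air (R about 287 J/(kg K), Cv about 718 J/(kg K), T = 288 K, p = 101,325 Pa), of why the state relation Cp·T = Cv·T + P·V cannot be dropped into the differential first law: P·V is a product of state values, while P·dV is the work for a small change and is far smaller.

```python
R_D = 287.0     # J/(kg K), specific gas constant for dry air (approx.)
CV = 718.0      # J/(kg K)
CP = CV + R_D   # Cp = Cv + R, per unit mass

T = 288.0        # K
P = 101_325.0    # Pa
V = R_D * T / P  # m^3/kg, specific volume from the ideal gas law

# The state relation holds for the values themselves...
assert abs(CP * T - (CV * T + P * V)) < 1e-6

# ...but the work for a small expansion (0.1% of v) is a different quantity.
dv = 1e-3 * V
print(f"p*v  = {P * V:10.1f} J/kg")
print(f"p*dv = {P * dv:10.3f} J/kg")
```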

If you check the derivation in the Maths Section of my article you will find that it is mathematically correct and follows sound thermodynamic principles.

And the result that we both agree on can only be derived with the first law of thermodynamics written as:

Cv.dT + P.dV = 0

– for reasons already explained.

Unless – and you have made my month with this – you use the Panama Canal method.

And on the third point of empirical observations.

First, thank you again for your kind words, it does you credit.

Let’s take the first two ridiculous questions I asked demonstrating my confusion over the concept of empirical measurements:

“Less than expected” is not a measurement term I have seen before. I always understood it to mean a comparison between an empirical result and a theory.

What value did you measure and what theoretical value did you compare it with? How did you calculate this theoretical value? What are the actual numbers – was it like 99% of the expected theoretical value or 50% of the value – for example?

Forgive my huge confusion over the meaning of empirical. Let’s review my 2nd question on this topic:

Obviously, from your response you measured PV work. What model radiosonde was it?

Forgive my confusion, I thought you had calculated this value from some other measured parameters and wondered how you did the calculation. Now that I know the radiosonde did the measurement, all is well.

My last question that you chose was less important. It was really about how you determined that “complete condensation” had been reached. I’m not sure what “complete condensation” means. Specific humidity = 0? Specific humidity = saturation humidity for that pressure and temperature? If so, how did you calculate it?

I’m just curious about these things and like to understand them.

SoD says

……”And the (less well-known) equation which links heat capacity at constant volume with heat capacity at constant pressure (derived from statistical thermodynamics and experimentally verifiable):

Cp = Cv + R ….[5]”…..

In fact this derivation is done in one page from the classical thermodynamic theory as taught to first-year physics students.

No need to complicate matters here.

In fact it is derived in 3 lines.

SoD, it is quite clear that you have not completed a first-year physics course, or you would have known that.

You are well outside your comfort zone.

Stop before you make more basic errors.

Bryan, are you quite sure that “less well-known” isn’t meant to be ironic?

Bryan,

Actually, if you’ve been following this thread, you would notice that SoD is more than holding his own with respect to the physics.

Yes, he should have remembered the

Cp = Cv + R

with less strain, but thermo is a somewhat unintuitive subject for most physicists, because it is purely mathematical.

I recall the remark made by one unquestionably great physicist as he was cleaning off some thermodynamic equations from the blackboard for a lecture: “That’s the subject where you can only remember the equations when you’re taking the class, or teaching the class.”

Actually I thought many of my readers wouldn’t know it.

Most articles which point out flaws in “popular” papers get all manner of criticism. People challenge anything and everything. Given that this E&E paper has made a dog’s breakfast of fundamental physics but reaches a popular conclusion, I expected people to challenge:

a) the ideal gas law – the atmosphere is not an ideal gas

b) hydrostatic equilibrium – the atmosphere is not static

c) where does that Cp = Cv + R equation come from? You made that up!

..

and so on (even though the author also needs to use them).

In fact I even started writing a bunch of stuff on why the hydrostatic equilibrium equation was valid, and why the adiabatic condition was valid (but deleted it due to length). Such is the tribal mentality.

I look forward to further ravaging attacks.

And Bryan is usually the one who challenges basic science. Kirchhoff’s law, Stefan-Boltzmann law, ability of a body to absorb radiation.. let’s not draw up a list and point to past sins. It’s a happy day when Bryan accepts stuff in textbooks. Let’s leave it there.

Neal J. King

You say

…”but thermo is a somewhat unintuitive subject for most physicists, because it is purely mathematical.”…..

I would disagree with that.

Most actual physicists will acquire a realistic feeling for the topic they are dealing with.

Thermodynamics is not remote from the real world.

Practitioners in the field will know well the formulas and also the background that gives rise to the formulas.

They will have a feel for when some formula should be accurate and when it is at best an approximation.

W C Gilbert spent a lifetime working with thermodynamics in the real world and has demonstrated exactly that here.

Contrast this with SoD

It appears that he has never taken a physics course at university even at a first year level.

He is like an American tourist who goes to France with no knowledge of the language but hopes to get by with a tourist phrase book.

Some of the time it works but if the transaction departs from the predictable he is lost.

For an in-depth examination of this topic, read

This set of exchanges showed the SoD site at its best.

The dialog on all sides of the discussion contributed in a non-polemical way, and there even appeared to be a consensus at the end.

Months later SoD throws all that constructive dialog aside and decides to launch a “hatchet” job on W C Gilbert.

This, in a way, was so predictable, and follows the same path as SoD putting “right” the “mistakes” of Gerlich & Tscheuschner, Nicol, Miskolczi and so on.

SoD drops some clangers along the way but he still stumbles on.

Ask him if it’s possible that heat can transfer spontaneously from a colder object to an object at a higher temperature.

Bryan:

wrt the unintuitive nature of thermo, you say: “I would disagree with that.”

You can disagree if you like. My quote was from Richard Feynman – and I heard it first-hand: “That’s the subject where you can only remember the equations when you’re taking the class, or teaching the class.”

Gilbert’s ability to manipulate basic equations seems to be highly questionable – as amply demonstrated by SoD’s deconstruction of it.

The paper by G&T is laughable; I’m still in discussion with Miskolczi, so I won’t talk about that now.

And, yes, it’s obviously possible to transfer heat spontaneously from a colder to a hotter body: So long as the NET transfer is from hotter to colder. Put a dim lightbulb next to a bright lightbulb: Both will transfer light & heat to the other; but the NET transfer will be from the hotter to the colder.
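A minimal sketch of the “net transfer” point using the Stefan-Boltzmann law, assuming two ideal black surfaces facing each other closely enough that each sees only the other; the temperatures are illustrative. Each surface radiates to the other according to its own temperature, and the net flow is the difference:

```python
SIGMA = 5.670374419e-8  # W/(m^2 K^4), Stefan-Boltzmann constant


def emitted_flux(t_kelvin):
    """Radiant flux emitted by an ideal blackbody surface, W/m^2."""
    return SIGMA * t_kelvin ** 4


def exchange(t_hot, t_cold):
    """Return (hot->cold, cold->hot, net) fluxes for two facing black plates."""
    hot_to_cold = emitted_flux(t_hot)
    cold_to_hot = emitted_flux(t_cold)
    return hot_to_cold, cold_to_hot, hot_to_cold - cold_to_hot


h2c, c2h, net = exchange(300.0, 250.0)
print(f"hot -> cold: {h2c:.1f} W/m^2")
print(f"cold -> hot: {c2h:.1f} W/m^2")
print(f"net (hot -> cold): {net:.1f} W/m^2")
```

The colder plate does send energy to the warmer one, but the net flux is always from hotter to colder.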

Neal J. King

So many mistakes, so little time!

“And, yes, it’s obviously possible to transfer heat spontaneously ”

What is your definition of heat?

Neal J. King

….”And, yes, it’s obviously possible to transfer heat spontaneously from a colder to a hotter body: “……

Not according to Clausius and his famous second law.

Perhaps you have a different definition of heat.

Heat as defined in the classic thermodynamics textbooks never moves spontaneously from a lower temperature object to a higher temperature object.

Bryan,

Your interpretation of Clausius is quite wrong.

Just to quote the wiki article on Clausius’ statement on thermodynamics:

“No process is possible whose sole result is the transfer of heat from a body of lower temperature to a body of higher temperature.”

Note the words “SOLE RESULT”: This fits in precisely with what I was saying before. There is no problem with cooler objects transmitting heat to warmer objects, provided the warmer object is transmitting MORE heat to the cooler. In fact, when this heat is transmitted by radiation, it’s practically unavoidable.

For people reading Bryan’s comment:

Amazing Things we Find in Textbooks – The Real Second Law of Thermodynamics. And see the comments in that article from Bryan..

Oh my, Bryan proves parallel universes really do exist…

Bryan,

I don’t have much interest in getting caught up in word games. But basically, heat is energy transferred by methods not reversible by adjustment of an external parameter. Mathematically:

dQ = dU + dW

where:

dW = pdV + (magnetic terms) + (stretching terms) + etc.; all these work terms can be reversed by changing the sign of the parameter. Systems that include magnetic terms incorporate magnetic moments, rubber bands include a stretching term, etc.

Traditionally, modes of heat transfer are listed as conduction, convection and radiation.

The concept of heat itself is a bit fuzzy, because there is no such thing as “the amount of heat” in a system, that can be meaningfully distinguished from the “amount of energy” of the system. The old idea of “caloric” which was based on that concept died in the early days of the development of thermodynamics.

Basically, the point is that the internal energy of a system U is a well-defined state function, and the amount of work done is quantifiable in terms of changes to a macroscopic parameter (volume, magnetic field, length, etc.); and heat is what is transferred by other methods, including conduction, convection and radiation.

The other useful point is that the entropy change is:

dS ≥ dQ/T
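A small sketch of the entropy bookkeeping behind the Clausius statement, assuming a net quantity of heat Q (a hypothetical 1000 J) leaves a reservoir at one temperature and enters a reservoir at another. The total entropy change Q/T_to - Q/T_from is positive only when the net flow is from hot to cold:

```python
def total_entropy_change(q_net, t_from, t_to):
    """Entropy change of two reservoirs when q_net joules flow from t_from to t_to (K)."""
    return -q_net / t_from + q_net / t_to


Q = 1000.0  # J, hypothetical net heat transferred
hot_to_cold = total_entropy_change(Q, 300.0, 250.0)
cold_to_hot = total_entropy_change(Q, 250.0, 300.0)
print(f"net flow hot -> cold: dS = {hot_to_cold:+.3f} J/K")
print(f"net flow cold -> hot: dS = {cold_to_hot:+.3f} J/K")  # ruled out as a sole result
```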

scienceofdoom,

That’s what I used to think too before the Venus threads. You would be correct if the problem were one-dimensional. But it’s not. There will be a meridional temperature gradient because high latitudes on a sphere receive less insolation. That meridional temperature gradient leads to a meridional pressure difference that grows with altitude, causing circulation. The end result will be a non-zero lapse rate, probably near adiabatic. The temperature difference between the poles and the equator provides the free energy for the work needed to establish and maintain a positive lapse rate (decrease in temperature with altitude).

That doesn’t explain why temperature increases with altitude in the stratosphere. The stratosphere wouldn’t exist in anything like its present form if free oxygen weren’t present. The reason the temperature inversion blocking convection exists at the tropopause is that oxygen absorbs incoming UV radiation which produces ozone which absorbs even more UV. Given the chemistry and kinetics of ozone formation leading to the ozone concentration profile in the stratosphere, the temperature in the stratosphere must increase with altitude.

Trying to keep it simple in this reply.

I don’t think it is simple and I lean towards lack of convection in this fictitious planet. It is simple and easily experimentally verifiable to show convection in an atmosphere heated from beneath. It is less easy to demonstrate convection in an atmosphere heated from above.

But as I said in Convection, Venus, Thought Experiments and Tall Rooms Full of Gas – A Discussion, perhaps Goody & Walker have made a valid point:

It seems to me that Bryan (June 14, 2011 at 10:55 am) is telling everyone that he doesn’t like my conclusion but can’t explain what’s wrong with it.

If any readers can come up with something of scientific substance from Bryan’s poetry, please let me know and we can assess it.

SoD says with apparent approval

….” If the circulation is sufficiently rapid, and if the air does not cool too fast by emission of radiation, the temperature will increase at the adiabatic rate. This is precisely what is observed on Venus.”…..

The derivations used by W C Gilbert used the hydrostatic condition to derive the adiabatic lapse rate.

Hydrostatic means stationary or moving at constant speed.

So the convection effect (though hard to isolate) is not part of the derivation.

The convection effect is in addition to the adiabatic lapse rate and will in fact make it depart from ALR.

The only significant part played by radiation seems to be at the TOA.

Without radiative loss there, the atmosphere would eventually be isothermal at the surface temperature.

scienceofdoom,

Yes we can. Venera 9 took pictures of the surface of Venus with available visible light. If I remember correctly, the average insolation at the surface is ~18 W/m², or about the level seen on Earth under heavy cloud cover.

That was the Goody & Walker quote from before this was known.

Bryan,

Wrong. The surface of a sphere with plane parallel illumination isn’t isothermal. If the surface isn’t isothermal, then the atmosphere won’t be either. The rate of pressure decrease with altitude depends on atmospheric density, which is a function of surface temperature. If you have a surface temperature that decreases with latitude, then the pressure at any given altitude will be higher at low latitudes than at high latitudes. A pressure difference will cause circulation. That circulation will not be restricted to high altitudes. Any vertical circulation will result in a near-adiabatic lapse rate.
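A rough sketch of the pressure argument, assuming two isothermal columns with the same surface pressure but different temperatures (a deliberate simplification; real columns are not isothermal, and the temperatures are illustrative). Combining hydrostatic balance with the ideal gas law gives p(z) = p0·exp(-g·z/(R·T)), so the warmer column has the larger scale height and the higher pressure aloft:

```python
import math

G = 9.81        # m/s^2
R_D = 287.0     # J/(kg K), dry air (approx.)
P0 = 101_325.0  # Pa, same surface pressure for both columns (assumption)


def pressure_isothermal(z, t_column, p0=P0):
    """Pressure at height z (m) in an isothermal column at t_column (K)."""
    scale_height = R_D * t_column / G
    return p0 * math.exp(-z / scale_height)


z = 5000.0
p_warm = pressure_isothermal(z, 300.0)  # low-latitude column (illustrative)
p_cold = pressure_isothermal(z, 250.0)  # high-latitude column (illustrative)
print(f"p at {z:.0f} m: warm {p_warm / 100:.1f} hPa, cold {p_cold / 100:.1f} hPa")
```

The pressure difference at altitude is what drives the circulation described above.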

DeWitt Payne

We are exploring the hypothetical.

If we have NO RADIATION leaving the Earth the atmosphere will tend to become increasingly isothermal.

With each new daily supply of solar energy causing a new Sun-facing Earth surface temperature ever higher than the night surface temperature.

However I agree it will never become completely isothermal.

However, we are exploring the hypothetical, since radiation to the universe from TOA day and night keeps the planet cool.

SOD said: “a) the ideal gas law – the atmosphere is not an ideal gas”

I was not clear if you agree with this or disagree with this.

The atmosphere can be considered an ideal gas as the major constituents are far above their critical temperatures, and PV=nRT for the atmosphere will yield a less than 1% error. That is a rough quote from my thermo book. Maybe I could scan it and send it.

I pointed this out to you almost two years ago and you said you had not considered it.

Using the ideal gas law, i.e. STP, the temperature is 0 C. That alone accounts for 18 degrees of the 33 degrees of the poorly named GHE.

Bryan,

Could have fooled me. In fact, please cite a direct quote that at least implies that hypothetical rather than the hypothetical of an optically transparent atmosphere where all radiation is absorbed and emitted by the surface. The trivial case of a planet with no solar illumination at 2.7 K is not very interesting or controversial.

DeWitt Payne

You cannot put restrictions on hypothetical situations.

The planet that does not radiate does not exist, except perhaps in a black hole.

To make a hypothetical non radiating planet as you say opens a can of worms.

I said.

……”The only significant part played by radiation seems to be at the TOA.”….

I should have stopped at that, since the next part does not happen;

……”Without radiative loss there, the atmosphere would eventually be isothermal at the surface temperature.”………………..

mkelly,

You forgot the smiley face sarcasm tag. You can’t possibly mean that seriously.

mkelly:

I agree that the atmosphere is very close to an ideal gas – that’s why I use the equation. I was anticipating comments from people who don’t know that the atmosphere can be closely approximated to an ideal gas. I see these comments regularly on popular blogs.

The way you write your comment makes it sound like you explained to me the atmosphere was an ideal gas and I had never considered it..

You claimed some strange point about PV=nRT being responsible for the “greenhouse” effect and I correctly said I had not considered it up to that point. That’s true because it was such a strange idea. I have a vague memory of later explaining what was wrong with the idea that you proposed. But I can’t now remember what your strange idea was.

mkelly:

I tracked down some old comments, under username “Mike Kelly”. Among lots of other statements, you made a claim about the effect of the ideal gas law.

This quote:

And this quote:

And this explanation:

I have now seen many other people with similar mistaken claims.

You are claiming that pressure accounts for the earth’s surface temperature?

You can see an explanation of why that is wrong in Convection, Venus, Thought Experiments and Tall Rooms Full of Gas – A Discussion – under the heading “Introductory Ideas – Pumping up a Tyre“.

Increased pressure can cause a smaller volume or a higher temperature. Increasing pressure quickly does work on a system and can increase temperature in the short term.

The surface temperature of the earth with no sun and the exact same pressure would be close to absolute zero. Pressure does not cause temperature.
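A minimal sketch of the “pumping up a tyre” point, assuming a reversible adiabatic compression of dry air treated as an ideal diatomic gas (gamma about 1.4); the pressures and starting temperature are illustrative. The temperature rises because work is done during the compression, not because the final pressure is high:

```python
GAMMA = 1.4  # ratio of specific heats for dry air (approx.)


def adiabatic_temperature(t1, p1, p2, gamma=GAMMA):
    """Temperature after reversible adiabatic compression from p1 to p2."""
    return t1 * (p2 / p1) ** ((gamma - 1.0) / gamma)


t_ambient = 288.0    # K
p_start = 100_000.0  # Pa
p_end = 300_000.0    # Pa, roughly a car tyre's absolute pressure (illustrative)
t_after = adiabatic_temperature(t_ambient, p_start, p_end)
print(f"just after compression: {t_after:.0f} K")
# Left to equilibrate with its surroundings, no further work is done and the air
# cools back toward t_ambient, even though its pressure stays well above p_start.
```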

SOD says: “Pressure does not cause temperature.”

So how are stars made? Gravity.

Gravity causes pressure. I agree if the sun went out all things go to “absolute” zero.

No I am not claiming and have never claimed that pressure accounts for the surface temperature of the earth. Air cannot heat ground. At least it is very difficult.

I was not explaining to you. I have far more regard for you than you appear to have for a fan of this blog as I have stated several times.

I have said before and say again now that some portion of the poorly named GHE can be explained via the ideal gas law for near surface temperature.

DeWitt if you disagree then please state why some of the supposed 33 degrees cannot be accounted for by pressure.

I’m just trying to understand your claim. Sorry if I misunderstood it.

I have seen elsewhere this very strange idea that “high temperature can be attributed to high pressure”. This is a complete misunderstanding.

There are three intensive variables (aside from composition) for a gas: pressure (p), density (n) and temperature (T). Because the equation of state relates them, there are two degrees of freedom.

Therefore, one cannot say that “p determines T”, because there is the variable n. If p is large, but n is also large, T can be quite small:

T = (1/k)*(p/n)
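A quick numeric check of T = (1/k)*(p/n), using Boltzmann's constant and two illustrative number densities: the same pressure is consistent with very different temperatures, depending on n.

```python
K_B = 1.380649e-23  # J/K, Boltzmann constant


def temperature(p, n):
    """Ideal-gas temperature from pressure p (Pa) and number density n (1/m^3)."""
    return p / (n * K_B)


p = 101_325.0
for n in (2.5e25, 5.0e25):  # illustrative number densities
    print(f"n = {n:.1e} m^-3 -> T = {temperature(p, n):.0f} K")
```

Doubling the number density at fixed pressure halves the temperature.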

With respect to stars: Gravity does not cause the heating of stars:

– Gravity forces nuclei together

– Nuclear fusion releases kinetic and radiant energy

– This power release slows down the collapse of the star, producing a near-steady-state; and

– This power release also produces the high temperatures

Mr. King, if you know all the variables in an equation but one, you can find the last one. P is 101.3 kPa or 14.7 psi. n is the number of moles in a volume and R is a constant; please solve for T. As far as I know the volume of the atmosphere has not changed in any significant way in a very long time.

As for the star explanation you seem to contradict DeWitt as to the reason stars ignite. Pressure caused by gravity. Without the pressure there is no reason for ignition.

mkelly:

– “if you know all the variables in an equation but one, you can find the last one. P is 101.3 kPa or 14.7 psi. n is the number of moles in a volume and R is a constant; please solve for T. As far as I know the volume of the atmosphere has not changed in any significant way in a very long time.” First: it doesn’t make a whole lot of sense to talk about the “volume” of a gas for which the temperature and pressure change from point to point: That’s why I talk about density, which is a locally defined characteristic. Secondly, your assumption that pressure is the “cause” of temperature fails to take into account, among other things, the fact that temperature changes on a minute-by-minute basis: Are you assuming that the weight of the atmosphere becomes lighter at night?

The basic problem is that you’ve gotten cause & effect mixed into a situation in which that doesn’t apply, and you’re neglecting dynamical considerations, like power input/output.

– “As for the star explanation you seem to contradict DeWitt as to the reason stars ignite. Pressure caused by gravity. Without the pressure there is no reason for ignition.” Not really: What happens is that as the compressed plasma descends, it loses gravitational potential energy, and this loss is converted into kinetic energy (=> increase in temperature). It is this increase in temperature that allows the electrical repulsion between protons to be overcome. In other words: If you could remove a cup-full of plasma at that same temperature/pressure/composition/radiative environment and maintain it at those conditions but at a very different gravitational potential, it would behave the same way. Gravitational potential energy is important insofar as it keeps the situation together and as motion induces changes that ARE reflected in thermodynamic characteristics. Specifically, when a packet of gas ascends or descends in a g-field, its pressure and volume are forced to change: this changes the thermodynamic situation. But it’s not the change in g-potential directly that does this. That’s why we refer to the “internal” energy of the gas: it’s not due to external forces like gravity.

The situation would be different if the most important interaction between the constituent particles were gravitational. But that’s very far from being the case, by a factor of roughly 10^40.
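A back-of-the-envelope check of how weak gravity is between particles, using rounded CODATA values for the constants. Both forces fall off as 1/r², so the ratio of electrostatic to gravitational force between two identical particles does not depend on their separation. For two protons it comes out around 10^36 and for two electrons around 10^42, which brackets the “roughly 10^40” above; either way the gravitational interaction is utterly negligible.

```python
K_E = 8.9875517923e9  # N m^2/C^2, Coulomb constant
G_N = 6.67430e-11     # N m^2/kg^2, gravitational constant
E = 1.602176634e-19   # C, elementary charge
M_P = 1.67262192e-27  # kg, proton mass
M_E = 9.1093837e-31   # kg, electron mass


def coulomb_to_gravity(charge, mass):
    """Ratio of electrostatic to gravitational force between two identical particles.

    Both forces scale as 1/r^2, so the separation cancels out.
    """
    return (K_E * charge ** 2) / (G_N * mass ** 2)


print(f"protons:   {coulomb_to_gravity(E, M_P):.2e}")
print(f"electrons: {coulomb_to_gravity(E, M_E):.2e}")
```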

Misunderstanding or not, were there a kilometer-deep depression with a sea-level rim, the bottom temperature would be around 9.8 K warmer than the rim’s.
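A quick arithmetic check of the 9.8 K figure, assuming the air in the depression is well mixed so that it follows the dry adiabatic lapse rate g/Cp, with g about 9.81 m/s² and Cp about 1004 J/(kg K) for dry air (both rounded assumptions):

```python
G = 9.81     # m/s^2
CP = 1004.0  # J/(kg K), dry air (approx.)


def dry_adiabatic_warming(depth_m, g=G, cp=CP):
    """Temperature increase (K) over a descent of depth_m at the dry adiabatic lapse rate."""
    return g / cp * depth_m


print(f"{dry_adiabatic_warming(1000.0):.2f} K per km")
```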

Perhaps one should say that it’s the decrease in pressure that decreases temperature, rather than the increase in pressure increasing temperature. On a practical level though, the two descriptions are one and the same.

Perhaps a helpful way to see the role of pressure in temperature is like this:

Something sets the baseline – the surface temperature.

This is the reference point.

Because rising air expands and sinking air compresses, and because these motions take place relatively quickly, the process of rising or sinking is “adiabatic”: no net transfer of heat into, or out of, the parcel of air.

So that’s why the temperature difference between the surface and any given height above or below is set by the adiabatic lapse rate.

But what sets the reference temperature is something else.

William Gilbert writes the following expression (equation 3 of his paper) for the internal energy of a gas in a gravitational field:

dU = CvdT + Ldq + gdh – PdV

For the case of dry air, we can drop the latent heat term, getting:

dU = CvdT + gdh – PdV

I am convinced that these equations are incorrect, but they are incorrect in a very interesting and instructive way. I agree with SOD that Mr. Gilbert has, in effect, double-counted the gravitational work, but I think I can simplify his argument at the cost of a little bit more mathematics. Let me focus on the second (dry-air) equation.

The internal energy of a gas – ideal or not – in a gravitational field is some function of the number of molecules, temperature, volume, and altitude, U(N,V,T,h). If there are no chemical reactions taking place, N is a constant. We may then write, as a mathematical identity:

dU = (dU/dT) dT + (dU/dV) dV + (dU/dh) dh

where the derivatives should be interpreted as partial derivatives in which all of the other variables are held constant, i.e. (dU/dT) = (partial U/ partial t) at constant V and h.

Now, (dU/dT) at constant V and h is, by definition, equal to Cv. The potential energy of a molecule of mass m in a gravitational field is mgh, so the third term is NMg dh (which for a unit mass is equivalent to Gilbert’s term.)

But what about the second term? For an ideal gas, it vanishes:

(partial U/partial V) at constant T and h = 0 (ideal gas.) In other words, the internal energy of a given mass of an ideal gas is determined solely by its temperature and by its position in the external field.

So: the correct equation is: dU = CvdT + Nmg dh. The -PdV term is wrong.

How can we reconcile this with the first law of thermodynamics, dU = dq + dw ?

The work dw consists of two parts, PV work and work against gravity:

dw = -P dV + Nmg dh

So, dU = dq – PdV + Nmg dh, Equating this with our expression above, we get:

dq – P dV + Nmg dh = Cv dT + Nmg dh

And we see that the gravitational term cancels out of the expression for dq in terms of volume and temperature!

dq = P dV + Cv dT

If you now set dq = 0 (for an adiabatic process), and insert the ideal gas law and the hydrostatic equilibrium condition, you get the standard expression for the adiabatic lapse rate.
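For readers who want the intermediate steps, here is one way to fill them in per unit mass, writing v = 1/ρ for the specific volume and using the ideal gas law Pv = RT, hydrostatic balance dP = -ρg dh and Cp = Cv + R. This is a sketch of the standard textbook route, not a quotation from any of the commenters:

```latex
\begin{aligned}
0 &= C_v\,dT + P\,dv && \text{(adiabatic: } dq = 0\text{)}\\
P\,dv &= d(Pv) - v\,dP = R\,dT - v\,dP && \text{(ideal gas: } Pv = RT\text{)}\\
0 &= (C_v + R)\,dT - v\,dP = C_p\,dT - v\,dP\\
v\,dP &= \frac{1}{\rho}\,(-\rho g\,dh) = -g\,dh && \text{(hydrostatic balance)}\\
\frac{dT}{dh} &= -\frac{g}{C_p}
\end{aligned}
```

Note that the gravitational term enters only once, through hydrostatic balance.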

I don’t see why anyone is trying to include gravitational potential energy as part of the INTERNAL energy of the gas. It’s not.

For example, the gravitational potential energy of the gas in a small region is not going to affect the chemical interactions going on there, which will be affected by the pressure, the temperature, the density, the chemical potential, the composition, the phases, and other thermodynamic characteristics.

If we consider an extended region, in which there is substantial variation of the gravitational potential energy, this variation will affect chemical interactions ONLY insofar that it changes the directly thermodynamical variables I mentioned above. For example, in the immediate neighborhood of a strong gravitational field, the temperature will vary from place to place (aka Pound-Rebka effect). That means that the chemical interactions will be going on at a higher temperature at lower potential energy than at higher: But this is taken into account by looking at the local temperature, not by incorporating the gravitational potential energy within the internal energy function.

(Hmm, I replied earlier but it doesn’t seem to have shown up, which is just as well since it was partly incorrect.)

In my experience, exactly what counts as a part of the “internal energy” is inconsistent from one discipline to another, and even within disciplines. As a physical chemist I am accustomed to defining the internal energy as the ensemble average of the Hamiltonian, excluding the kinetic energy of the center of mass but including potential energy due to external fields, if those fields couple to anything of interest.

But it hardly matters what you choose to call it; my basic point is that if you write the first law of thermodynamics as dU = dq + dw, then if you are going to explicitly account for the work done when an air parcel moves from one altitude to another on the right hand side, it should also be included as a part of the state function “U”, whatever you wish to call it, on the left hand side. The usual procedure in atmospheric physics appears to be to account for that work implicitly through the hydrostatic equilibrium, but you could also do it explicitly as I have outlined in my post above. The results should be the same, and they appear to be if I have done my algebra correctly. Mr. Gilbert’s error amounts to adding the gravitational work explicitly to an expression that already included it implicitly.

Internal energy is a state function, and treating it as such is an advantage when working out problems on a PV diagram that involve a particularly difficult thermodynamic path.

To solve the problem for a state function you can instead pick an easy path, since the change in a state function is the same whichever path is actually taken.

Therefore it is generally wise to include gravitational potential energy in the internal energy.
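The path-independence point can be checked numerically. The sketch below assumes a reversible ideal monatomic gas (1 mol, Cv,m = 3/2 R) taken between the same two states along two different paths; the states and the helper name `two_paths` are illustrative choices. The change in U agrees along both paths, while the work and heat, which are not state functions, do not.

```python
import math

R = 8.314462618  # J/(mol K), molar gas constant

def two_paths(n, cv_m, t1, v1, t2, v2):
    """Compare two reversible ideal-gas paths from (t1, v1) to (t2, v2).

    Path A: heat at constant volume v1 up to t2, then expand isothermally at t2.
    Path B: expand isothermally at t1, then heat at constant volume v2 up to t2.
    Returns {path: (dU, W_by_gas, Q)} with Q = dU + W.
    """
    du = n * cv_m * (t2 - t1)              # ideal gas: U depends only on T
    w_a = n * R * t2 * math.log(v2 / v1)   # only the isothermal leg does work
    w_b = n * R * t1 * math.log(v2 / v1)
    return {"A": (du, w_a, du + w_a), "B": (du, w_b, du + w_b)}

if __name__ == "__main__":
    for path, (du, w, q) in two_paths(1.0, 1.5 * R, 300.0, 0.010, 400.0, 0.020).items():
        print(f"path {path}: dU = {du:7.1f} J, W = {w:7.1f} J, Q = {q:7.1f} J")
```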

However if you are not using the properties of the state function you can set out the energy quantities as you like.

Indeed from an educational point of view this approach is justified as you can detect the interchange between the energy types.

Likewise with the direction of the PV work: different textbooks set it out as sometimes + and sometimes –, depending on their initial assumptions.

A bit like convectional current and electron flow current in an electrical circuit.

If some confused person comes along, they may think that a grave mistake has been made and that twice the current, or no current at all, is flowing.

As long as the definitions are clearly set out there should be no problem.

Bryan,

a) “A bit like convectional current and electron flow current in an electrical circuit.”

What does convectional current have to do with electrical circuits?

b) Nothing you have said suggests to me that there is any value to incorporating altitude as a thermodynamical variable. What makes sense is to understand the thermodynamical variables as functions (fields, really) of the altitude: T, p, n are functions of altitude; and therefore internal-energy density, work density, etc. are also functions of altitude, or of the three spatial coordinates generally.
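To make the “fields of altitude” picture concrete, here is a minimal sketch of one such profile. It assumes, unrealistically but for simplicity, an isothermal column of ideal dry air in hydrostatic balance, giving p(z) = p0 exp(−z/H) with scale height H = RT/(Mg). In the real atmosphere T also varies with z; the point is only that p is treated as a function of position, not that altitude is added as a separate thermodynamic variable.

```python
import math

R = 8.314462618   # J/(mol K), molar gas constant
M_AIR = 0.02897   # kg/mol, molar mass of dry air (assumed)
G = 9.80665       # m/s^2, standard gravity

def isothermal_pressure(z_m, p0_hpa=1013.25, temp_k=288.0):
    """Pressure p(z) in hPa for an isothermal ideal-gas column in hydrostatic balance."""
    scale_height = R * temp_k / (M_AIR * G)   # H = RT/(Mg), about 8.4 km at 288 K
    return p0_hpa * math.exp(-z_m / scale_height)

if __name__ == "__main__":
    for z in (0, 2_000, 5_000, 10_000):
        print(f"z = {z:6d} m   p = {isothermal_pressure(z):7.1f} hPa")
```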

(Note that there are a few typos in my last post, M for m and t for T; I hope they are not confusing.)

Here is a more intuitive approach to my preceding argument. Let’s think about this on the molecular level. Each molecule in the gas has the following forms of energy:

Kinetic energy of translational motion: 3/2 kT per molecule.

Kinetic energy of rotational motion: 0 for atoms, kT per molecule for diatomic (and other linear) molecules, 3/2 kT per molecule for nonlinear polyatomic molecules.

Intramolecular potential energy – depends on the molecule, but like the kinetic energy depends only on temperature.

Potential energy in the gravitational field: mgh per molecule of mass m.

Potential energy due to interactions of the molecules with each other. This depends on the gas, but is generally small as long as we don’t need to consider condensation, and is zero by definition for an ideal gas.

That is all. The “internal energy” U of thermodynamics is the ensemble average over the distribution of these microscopic, molecular energies. The first three terms are all incorporated into the heat capacity Cv (leading to a heat capacity Cv = 3/2 R per mole for monatomic gases, 5/2 R per mole for diatomic gases, and a more complicated expression for polyatomic gases.) If we are considering an air parcel that is small enough so that we can regard all the molecules in it as being at the same altitude, the fourth term just averages to Nmgh for N molecules of mass m, or Mgh where M is the mass of the parcel. The fifth term is the only one that depends explicitly on the volume of the sample, but it is generally small (for noncondensing gases) and zero by definition for an ideal gas.

So for an ideal gas, dU = Cv dT – Mg dh. The first term is the intramolecular energy (kinetic plus potential) and depends only on temperature. The second term is the gravitational potential energy.
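The bookkeeping in this comment can be sketched numerically. The snippet below assumes classical equipartition with vibrations frozen out ((1/2)R per mole per quadratic degree of freedom), takes h as altitude measured upward so that the gravitational part of U is +Mgh as in the fourth term listed above, and uses a hypothetical 1 kg parcel of air treated as diatomic with molar mass 0.029 kg/mol. The helper names are illustrative, not from the thread.

```python
R = 8.314462618  # J/(mol K), molar gas constant
G = 9.80665      # m/s^2, standard gravity

# Rotational quadratic degrees of freedom per molecule (3 translational are added below).
ROTATIONAL_DOF = {"monatomic": 0, "diatomic": 2, "nonlinear polyatomic": 3}

def molar_cv(kind):
    """Classical equipartition Cv per mole, vibrations assumed frozen out."""
    return 0.5 * (3 + ROTATIONAL_DOF[kind]) * R

def parcel_energy_change(mass_kg, molar_mass, kind, d_temp, d_height):
    """Return (thermal part, gravitational part) of dU in joules.

    Thermal part: n * Cv,m * dT. Gravitational part: M * g * dh,
    with h measured upward so the potential energy M*g*h grows with height.
    """
    n = mass_kg / molar_mass
    return n * molar_cv(kind) * d_temp, mass_kg * G * d_height

if __name__ == "__main__":
    for kind in ROTATIONAL_DOF:
        print(f"{kind:22s} Cv = {molar_cv(kind) / R:.1f} R")
    # Hypothetical 1 kg air parcel warming by 1 K and rising by 100 m.
    thermal, grav = parcel_energy_change(1.0, 0.029, "diatomic", 1.0, 100.0)
    print(f"thermal {thermal:.0f} J, gravitational {grav:.0f} J")
```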

Robert P. says

So for an ideal gas, dU = Cv dT – Mg dh.

Should this not be dU = MCv dT – Mg dh?

You can work with a unit mass of 1 kilogram or with one mole, but unless you are very careful, mixing up units on the same line could lead to errors.

In my equation, Cv is the heat capacity (extensive), not the specific heat (intensive). This is the standard usage in my field: an unadorned Cv denotes the extensive quantity, and when you want the intensive quantity you decorate it with an over-bar or a subscript m. I suppose I could have been clearer above, by writing out Cv = (3/2) nR for a monatomic ideal gas instead of saying “Cv = 3/2 R per mole”. It’s very easy to make unit mistakes when typing equations into a comment box.

Robert P

By writing out your line in this way, the mass (M) must be put down as 0.029 kg rather than as a variable M.

In physics the more usual quantity is the specific heat capacity, which has units of J kg⁻¹ K⁻¹.
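The two conventions in this exchange describe the same physics. A minimal sketch, assuming dry air treated as an ideal diatomic gas with the molar mass of 0.029 kg/mol used in the comment above: the extensive heat capacity C_v (J/K), the molar value C_v,m (J mol⁻¹ K⁻¹) and the specific value c_v (J kg⁻¹ K⁻¹) are related by C_v = n C_v,m = M c_v, so either route gives the same dU for a given parcel.

```python
R = 8.314462618     # J/(mol K), molar gas constant
MOLAR_MASS = 0.029  # kg/mol for dry air, as in the comment above

cv_molar = 2.5 * R                   # J/(mol K): Cv,m for an ideal diatomic gas
cv_specific = cv_molar / MOLAR_MASS  # J/(kg K): specific heat capacity c_v

def extensive_cv_from_moles(n_mol):
    """Extensive heat capacity C_v = n * Cv,m, in J/K."""
    return n_mol * cv_molar

def extensive_cv_from_mass(mass_kg):
    """Extensive heat capacity C_v = M * c_v, in J/K."""
    return mass_kg * cv_specific

if __name__ == "__main__":
    mass = 1.0               # kg, hypothetical parcel
    n = mass / MOLAR_MASS    # moles in that parcel
    print(f"c_v = {cv_specific:.0f} J/(kg K)")
    print(f"C_v = {extensive_cv_from_moles(n):.1f} J/K = {extensive_cv_from_mass(mass):.1f} J/K")
```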

mkelly,

What causes the proto-star to heat up to ignition level is gravitational potential energy. Take a volume of gas equivalent to the volume of the solar system, at very low pressure and a temperature of 2.7 K, and allow it to collapse. The gravitational potential energy will be converted to kinetic energy, resulting in a core temperature sufficiently high, assuming sufficient initial mass, to ignite fusion.
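As an order-of-magnitude illustration of this point, the sketch below assumes a uniform-density sphere of one solar mass collapsing to the present solar radius from a much larger initial size, so the initial gravitational energy is negligible. The energy released is then roughly 3GM²/(5R), and the virial theorem (2K + U = 0) puts about half of it into thermal kinetic energy. The mean molecular weight μ = 0.6 (ionized solar gas) is an assumption, and the result is a mean temperature, well below the actual core value.

```python
G = 6.674e-11        # m^3 kg^-1 s^-2, gravitational constant
K_B = 1.380649e-23   # J/K, Boltzmann constant
M_H = 1.6726e-27     # kg, proton mass
M_SUN = 1.989e30     # kg
R_SUN = 6.957e8      # m

def uniform_sphere_binding_energy(mass, radius):
    """Magnitude of the gravitational energy of a uniform sphere, 3GM^2/(5R)."""
    return 3 * G * mass**2 / (5 * radius)

def virial_mean_temperature(mass, radius, mu=0.6):
    """Mean temperature from 2K + U = 0 with K = (3/2) N k T and N = M/(mu m_H)."""
    kinetic = uniform_sphere_binding_energy(mass, radius) / 2
    n_particles = mass / (mu * M_H)
    return kinetic / (1.5 * n_particles * K_B)

if __name__ == "__main__":
    print(f"energy released ~ {uniform_sphere_binding_energy(M_SUN, R_SUN):.1e} J")
    print(f"mean temperature ~ {virial_mean_temperature(M_SUN, R_SUN):.1e} K")
```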

mkelly,

Assuming the same input of energy to the surface as the Earth gets now, 240 W/m², and a perfectly transparent atmosphere, the average surface temperature would be slightly less than 254 K, depending on the exact assumptions of heat capacity and thermal conductivity. So precisely none of the ~33 degrees is due to pressure. It’s all energy in and out. If you increase the surface pressure over the whole planet by 10%, the surface temperature will not change (it might become a little more uniform). Only when the atmosphere is not transparent does its thickness make the surface hotter; likewise, the bottom of the hole would be hotter only if the hole’s area is small compared to the total surface area.

Let me be more precise. If you suddenly increased the mass of the atmosphere by 10%, the surface temperature would go up temporarily, but it would cool back to the steady state value over time because radiation out would be higher than radiation in.
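The temperature figure quoted above comes from balancing absorbed sunlight against blackbody emission. A minimal sketch, assuming a blackbody surface in local radiative equilibrium with no heat storage or lateral transport: a uniform surface absorbing 240 W/m² sits near 255 K. Because emission scales as T⁴, an uneven distribution with the same mean absorbed flux has a lower average temperature, which is why a realistic average comes out somewhat below the uniform value. The 360/120 W/m² split is purely illustrative.

```python
SIGMA = 5.670374419e-8  # W m^-2 K^-4, Stefan-Boltzmann constant

def blackbody_temperature(flux_w_m2):
    """Temperature at which a blackbody emits flux_w_m2."""
    return (flux_w_m2 / SIGMA) ** 0.25

if __name__ == "__main__":
    uniform = blackbody_temperature(240.0)
    # Hypothetical uneven heating with the same 240 W/m^2 average:
    # half the surface absorbs 360 W/m^2, the other half 120 W/m^2.
    uneven_mean = (blackbody_temperature(360.0) + blackbody_temperature(120.0)) / 2
    print(f"uniform: {uniform:.1f} K, uneven average: {uneven_mean:.1f} K")
```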

If you increased the mass, the pressure would increase vs. altitude, raising the tropopause, surely? Effectively permanently raising the temperature... due to its effect on optical depth vs. altitude, surely?

So it would permanently increase temperatures, but through its effect on opacity (assuming a uniform mass increase of all the gases that make up the atmosphere).

KingOchaos previously posted under Mike Ewing; I just haven’t been commenting in a long time, only reading the blog of late.

This is a scenario I would like to see modeled. We have a planet the size of Venus with a CO2-rich atmosphere of similar mass to Earth’s. Due to a colossal catastrophe, the entire planet is “instantly” resurfaced (in other words, the whole surface melts down). As a consequence the day becomes of similar length to the year, and the atmospheric mass is increased 90 times due to outgassing.

Given the fact that the atmosphere is thick to IR and visible light, how is the surface going to cool itself down and on what timescales? What will be the final steady-state temperature (or tropopause height, it’s the same thing) if any steady-state is achieved?

KingOchaos:

DeWitt Payne is commenting on a transparent atmosphere.

My bad.

DeWitt says: “Assuming the same input of energy to the surface as the Earth get’s now, 240 W/m², and a perfectly transparent atmosphere, The average surface temperature would be slightly less than 254 K, depending on the exact assumptions of heat capacity and thermal conductivity. ”

Again, please: I never said “surface of the Earth.” In fact I said near surface. The air will not heat soil, or at least it would be very difficult. We measure temperature in the air, not at the surface of the earth. Soil etc. is heated by the sun. The air is heated several ways, but I never claimed the air heats the soil.

SOD said “pressure does not cause temperature”. Then why is there pressure welding? Why are there heat bursts?

– If the temperature of the air exceeds the temperature of the soil, the air will heat the soil. That’s kind of what temperature is about.

– There are two things I find looking up pressure or cold welding:

1) Welding without energy input and without temperature increase: If no heating is needed, why are you assuming that the pressure causes heating? It seems to apply to cases where there is no barrier to joining together (e.g., when two plates of identical metals are placed together).

2) Welding by application of ultrasound: In this case, the heating comes from local conversion of the vibrational energy of the ultrasound into heat, because having two separate pieces allows relative motion that steals the vibrational power and diverts it into heating. No flames needed, but energy is being provided.

– Heat bursts: These seem to occur when air descends from an altitude. As it loses altitude, the pressure applied to it increases (pressure always increases downward) and it undergoes adiabatic compression. It is the adiabatic compression that results in increased temperature: work is being done on the air packet.
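How much warming adiabatic compression alone can produce is easy to estimate with Poisson's equation for dry air, T2 = T1 (p2/p1)^(R/cp). A minimal sketch, assuming dry air with standard gas-constant and cp values and a hypothetical parcel starting at 0 °C at 700 hPa; no heat is added anywhere, so the warming is entirely the work done on the parcel as it is compressed. Descending to 1000 hPa brings it to roughly 302 K, about 29 °C.

```python
R_DRY = 287.05          # J/(kg K), specific gas constant for dry air
CP_DRY = 1004.0         # J/(kg K), specific heat of dry air at constant pressure
KAPPA = R_DRY / CP_DRY  # Poisson exponent, about 0.286

def adiabatic_temperature(t_start_k, p_start_hpa, p_end_hpa):
    """Temperature after dry adiabatic compression or expansion: T2 = T1 (p2/p1)^(R/cp)."""
    return t_start_k * (p_end_hpa / p_start_hpa) ** KAPPA

if __name__ == "__main__":
    # Hypothetical parcel: 0 °C at 700 hPa, descending dry-adiabatically to 1000 hPa.
    t_surface = adiabatic_temperature(273.15, 700.0, 1000.0)
    print(f"{t_surface:.1f} K ({t_surface - 273.15:.1f} °C)")
```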

Cold welding ( http://en.wikipedia.org/wiki/Cold_welding ) is an interesting phenomenon. The principle is that if you take two very clean metal surfaces, no oxides or other contaminants, and place them together under high vacuum (no adsorbed gas layer), the atoms at each surface can no longer tell that they are at a surface and the two pieces become one.

mkelly,

STP is an arbitrary reference point picked for convenience. The different international standards organizations even have slightly different definitions of STP (http://en.wikipedia.org/wiki/Standard_conditions_for_temperature_and_pressure). There is nothing fundamental about a pressure of 1013 mbar and a temperature of 0 °C. It in no way explains any part of the increase in the surface temperature of the planet caused by an atmosphere that absorbs and emits in the thermal IR.

Neal J. King

I have asked you twice what your definition of heat is!

But no answer!

How can you say something is transferred when you cannot even define what is transferred?

I strongly suspect that you are using the colloquial meaning of heat rather than the thermodynamic meaning of heat.

This is a very common mistake made by proponents of the IPCC position.

So for the third time what is your definition of heat!

cynicus says

“Oh my, Bryan proves parallel universes really do exist…”

I have never commented on parallel universes; however, most theoretical physicists on the planet believe they exist!

Bryan,

You have been reading a bit too much science fiction.

A vocal minority of physicists think the many-worlds interpretation of quantum mechanics has some value. Most think it is unnecessary to speculate that far into QM.

Neal J. King

So for the fourth time what is your definition of heat!

Bryan,

I don’t have much interest in getting caught up in word games. But basically, heat is energy transferred by methods not reversible by adjustment of an external parameter. Mathematically:

dQ = dU + dW

where:

dW = pdV + (magnetic terms) + (stretching terms) + etc.; all these work terms can be reversed by reversing the change in the corresponding parameter. Systems with magnetic moments include magnetic terms, rubber bands include a stretching term, etc.
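As a concrete instance of dQ = dU + dW with only the p dV term, consider a reversible isothermal expansion of an ideal gas, assumed here purely for illustration. U depends only on T, so dU = 0 and all the heat absorbed leaves as work, Q = W = nRT ln(V2/V1). Reversing the change in volume reverses the sign of both, which is the reversibility property described above.

```python
import math

R = 8.314462618  # J/(mol K), molar gas constant

def isothermal_heat_and_work(n_mol, temp_k, v1, v2):
    """Return (dU, W, Q) for a reversible isothermal ideal-gas process.

    Sign convention as in dQ = dU + dW: W is work done BY the gas, Q is heat INTO it.
    """
    du = 0.0                                    # ideal gas: U = U(T) only
    w = n_mol * R * temp_k * math.log(v2 / v1)  # integral of p dV with p = nRT/V
    return du, w, du + w

if __name__ == "__main__":
    print(isothermal_heat_and_work(1.0, 300.0, 0.010, 0.020))  # expansion
    print(isothermal_heat_and_work(1.0, 300.0, 0.020, 0.010))  # compression: signs flip
```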

Traditionally, modes of heat transfer are listed as conduction, convection and radiation.

The concept of heat itself is a bit fuzzy, because there is no such thing as “the amount of heat” in a system, that can be meaningfully distinguished from the “amount of energy” of the system. The old idea of “caloric” which was based on that concept died in the early days of the development of thermodynamics.

Basically, the point is that the internal energy of a system U is a well-defined state function, and the amount of work done is quantifiable in terms of changes to a macroscopic parameter (volume, magnetic field, length, etc.); and heat is what is transferred by other methods, including conduction, convection and radiation.

The other useful point is that the entropy change is:

dS ≥ dQ/T
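A minimal numerical illustration of the inequality, assuming two ideal heat reservoirs at fixed temperatures that together form an isolated system: when heat Q leaves a hot reservoir at T_hot and enters a cold one at T_cold, no heat enters the pair from outside (dQ = 0 for the whole), so the inequality requires the total entropy change Q/T_cold − Q/T_hot to be non-negative. It is positive for any real temperature difference and zero only in the reversible limit T_hot = T_cold.

```python
def entropy_generated(q_joules, t_hot_k, t_cold_k):
    """Total entropy change (J/K) when q_joules flows from a hot to a cold reservoir."""
    ds_hot = -q_joules / t_hot_k    # hot reservoir gives up heat at T_hot
    ds_cold = q_joules / t_cold_k   # cold reservoir receives it at T_cold
    return ds_hot + ds_cold

if __name__ == "__main__":
    print(f"{entropy_generated(1000.0, 300.0, 250.0):.3f} J/K")  # irreversible: > 0
    print(f"{entropy_generated(1000.0, 300.0, 300.0):.3f} J/K")  # reversible limit: 0
```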

Neal J. King