The IPCC 5th Assessment Report (AR5) from 2013 shows the range of results that climate models produce for global warming. These results are for a standard condition, which for simplicity is a doubling of CO2 in the atmosphere from pre-industrial levels: the 2xCO2 result, also known as ECS or equilibrium climate sensitivity.

The range is about 2-4ºC. That is, different models produce different results.

Other lines of research have tried to assess the past from observations. Over the last 200 years we have some knowledge of changes in CO2 and other “greenhouse” gases, along with changes in aerosols (these usually cool the climate). We also have some knowledge of how the surface temperature has changed and how the oceans have warmed. From this data we can calculate ECS.

This comes out at around 1.5-2ºC.

Some people think there is a conflict, others think that it’s just the low end of the model results. But either way, the result of observations sounds much better than the result of models.

The reason for preferring observations over models seems obvious – even though there is some uncertainty, the results are based on what actually happened rather than models with real physics but also fudge factors.

The reason for preferring models over observations is less obvious but no less convincing – the climate is non-linear and the current state of the climate affects future warming. The climate in 1800 and 1900 was different from today.

“Pattern effects”, as they have come to be known, probably matter a lot.

And that leads me to a question or point or idea that has bothered me ever since I first started studying climate.

Surely the patterns of warming and cooling, the patterns of rainfall, of storms matter hugely for calculating the future climate with more CO2. Yet climate models vary greatly from each other even on large regional scales.

Articles in this Series

Opinions and Perspectives – 1 – The Consensus

Opinions and Perspectives – 2 – There is More than One Proposition in Climate Science

Opinions and Perspectives – 3 – How much CO2 will there be? And Activists in Disguise

Opinions and Perspectives – 3.5 – Follow up to “How much CO2 will there be?”

Opinions and Perspectives – 4 – Climate Models and Contrarian Myths

Opinions and Perspectives – 5 – Climate Models and Consensus Myths

Opinions and Perspectives – 6 – Climate Models, Consensus Myths and Fudge Factors

Opinions and Perspectives – 7 – Global Temperature Change from Doubling CO2

And, of course, this is a major problem with models. It’s well known that they have no skill at the regional scale. This is why sub-scale modeling, where the resolution over a region is increased and a coarser resolution model is used for the rest of the planet, doesn’t lead to improved regional results.

Failure to get the pattern right may also explain the large spread in the absolute global average temperature between models.

It is actually much worse than that. The first assumption always made in model “plugs” is that the temperature rise over the last 150 years is mostly due to human activity, despite the clear evidence that such large variations occurred in the more distant past (over the Holocene), and human activity was not responsible for those.

Leonard notes: “the first assumption always made in model “plugs” is that the temperature rise over the last 150 years is mostly due to human activity, despite the clear evidence that such large variations occurred in the more distant past”.

In contrast to AOGCMs, energy balance models assess climate sensitivity using estimates of forcing and the resulting transient change in temperature. However, there are three kinds of climate variability: naturally-forced (traditionally solar and volcanic), anthropogenically-forced, and “unforced” or internal variability. Climate scientists have accurate information from satellites that allows them to correct for the small amount of natural forcing during the last half-century, leaving only anthropogenically-forced warming contaminated with unforced variability. Going back to 1900, they infer from attribution studies (the “fingerprint” of warming observed in climate models with various forcing) and from other evidence that the 1920-1945 warming was mostly unforced, perhaps 0.3 K. If one accepts AR5’s best estimate for aerosol forcing (-0.9 W/m2?) rather than the more-negative values produced in AOGCMs during older attribution studies, I suspect that there was some unforced cooling in the 1950-1970 pause. And there probably was unforced variability in the 2000s “hiatus”. But all of these examples of unforced variability are a small fraction of the 0.9 K of nearly global warming (outside the Antarctic Plateau) in the last half-century.

When I look to earlier periods for Leonard’s “clear evidence of large variations in the past” (comparable to the last half-century), I run into serious problems. Past climate fluctuations can be due to a combination of naturally-forced and unforced variability. We don’t appear to have the tools to separate one from the other (unless you want to accept modelers’ attempts to explain the whole LIA in terms of solar and volcanic forcing). Unless we can extract the unforced component, it doesn’t serve as a precedent for how much unforced variability might have contributed to the 0.9 K of warming in the last half-century. Nor do we have the proxy data to determine that past variations have been “global” rather than merely regional. There are clear signs of large temperature variations in Greenland ice cores (LIA, MWP, RWP, Minoan WP), but no corresponding changes in Antarctic ice cores. Greenland is subject to Arctic amplification and its temperature was notoriously unstable (warming 10? degC for brief periods) during the LGM.

If large global temperature variation existed, the best place to look would be in Marcott’s global composite reconstruction of ocean sediment (80%) and ice cores from the Holocene. If one ignores the dubious 20th-century hockey stick blade debunked by McIntyre, there is no significant variability except a slow decline in temperature from the Holocene Climate Optimum mostly centered in the northern extra-tropics and presumably driven by orbital mechanics. Ocean sediment records (marine isotope stages) convinced geologists that a series of glacials and interglacials had occurred long before ice cores. I’m not sure how clearly warming of the last half-century would appear in the middle of such a record given the century time resolution typical of ocean sediment cores and the potential for noise to suppress variability in temperature reconstructions.

The best I can conclude from our inadequate proxy record is that no unambiguous evidence exists for near-GLOBAL unforced variability comparable to warming in the last half-century – but the absence of evidence shouldn’t be construed as proof of absence. Perhaps I’ve gotten this wrong – all skeptics seem to agree with Leonard.

Ironically, a climate that is relatively stable to forced change has a larger climate feedback parameter (say -2 W/m2/K) that will suppress unforced variations and return to a steady state. On the other hand, high climate sensitivity (-1 W/m2/K) implies more susceptibility to unforced variation.
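As a rough illustration of the point above, here is a minimal one-box sketch relating the climate feedback parameter to equilibrium sensitivity and to how quickly a temperature anomaly decays. The forcing for doubled CO2 (3.7 W/m2) and the mixed-layer heat capacity are assumed values chosen for illustration; they do not come from the comment, and the function names are hypothetical.

```python
# Illustrative one-box sketch. F2X and HEAT_CAPACITY are assumptions,
# not values taken from the discussion above.
F2X = 3.7            # W/m2, commonly quoted forcing for doubled CO2 (assumed)
HEAT_CAPACITY = 8.0  # W yr m-2 K-1, hypothetical ocean mixed-layer heat capacity


def ecs_from_feedback(feedback_parameter):
    """Equilibrium warming (K) per CO2 doubling.

    feedback_parameter is in W/m2/K; negative values are stabilizing.
    """
    return F2X / abs(feedback_parameter)


def relaxation_time_years(feedback_parameter):
    """E-folding time (years) for a surface anomaly to decay in a one-box model."""
    return HEAT_CAPACITY / abs(feedback_parameter)


for lam in (-2.0, -1.0):
    print(
        f"feedback {lam} W/m2/K: "
        f"ECS ~ {ecs_from_feedback(lam):.2f} K, "
        f"anomaly e-folding time ~ {relaxation_time_years(lam):.1f} yr"
    )
```

Under these assumptions the weaker feedback (-1 W/m2/K) gives both the higher sensitivity and the slower decay of an unforced anomaly, which is the trade-off described in the comment.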

That the GCMs fail to match observed patterns is but one of three reasons their projections should be considered with much skepticism. The other two reasons are 1) that they fail to agree with each other, and 2) almost uniformly estimate sensitivities which are inconsistent with (and greater than!) empirically based estimates.

These all point to the same more fundamental issue: the GCMs obviously do not accurately capture important underlying physical processes. The great George Box noted that even a wrong model can be useful. GCMs are most certainly not useful in guiding sensible public policies, whether regional or global.

For the surface to have warmed less than models because of a pattern effect, there has to be a place where the pattern is less warming. An area that keeps getting discussed is the eastern Pacific. If the eastern Pacific is cooling, estimates of climate sensitivity based upon observations are going to be low. When the eastern Pacific flips to accelerated warming, so does the globe. Since 1900, that seems to be the drill. It looks a lot like the PDO. The negative phase that started around 1943 caused some cooling of the earth’s surface; the negative phase that started around 2000 caused a slowdown in the rate of warming. I don’t see the problem with this as its potential consequence is pretty obvious.

And the wild discrepancies between models?

The inconsistent patterns between models? (rainfall, etc)

You can whistle as you walk past the graveyard, but the ghosts are unlikely to listen.

Per the papers below, there is a big uncertainty in aerosol forcing due to pattern effects and cloud interactions so having observed temperatures doesn’t help much. Climate models have a known forcing but the temperatures are uncertain, so pick your poison.

Since 1970, GHG have swamped aerosols, so there is much less forcing uncertainty. Recent 30 to 40-year temperature trends imply TCR is around 1.8C. Add in large OHC increases and low sensitivity becomes very unlikely.

https://www.nature.com/articles/s41467-018-05838-6

https://www.researchgate.net/publication/330478810_Aerosol-driven_droplet_concentrations_dominate_coverage_and_water_of_oceanic_low_level_clouds

Chubbs,

Since 1970, aerosol emissions in Europe and North America have fallen rather dramatically. Not sure if China has completely compensated for that drop or not, but in any case, it is far from clear what historical aerosol effects have been. Hell, it is far from clear what aerosol effects are right now.

Using CMIP5 forcing data, the ratio of aerosol to GHG forcing was 0.48 for the period 1870–>1970, dropping to 0.10 for the period 1970–>2015. So forcing uncertainty is much lower after 1970. That’s when you want to get Delta T/Delta F correct in an empirical method.

Per the article I linked above, aerosols have a different effect when emitted in Europe vs China vs India. So moving aerosols from Europe to Asia has a pattern effect that isn’t reflected in the single global average forcing # used in EBM.

And which of the models match the pattern effect of moving aerosol emissions around? Should we not then declare the models that don’t match that pattern are wrong? The models broadly disagree with each other on climate sensitivity, rainfall patterns, and warming patterns… and lots of other things. If any actually capture reality in a useful way (that is, able to make useful predictions), then there ought to be rational ways to discount the rest and see how the selected few do predicting patterns and the rate of warming in the coming decades. Won’t happen, of course, since the models are as much creatures of political influence as anything else. When I see modeling groups being disbanded because people conclude their models are rubbish, that is when I’ll know technical progress on models is being made.

stevefitzpatrick,

There is a large volume of literature on how to assess models. They (the models) are all “wrong”, but some might be useful. It’s not clear how to assess them.

Vs current climatology?

Vs recent history on temperature or rainfall or cloud cover or … (other parameter)

Vs reproducing important physical processes

It’s not political. It’s just a very hard problem.

There isn’t a B Team sitting there ready to go, but held back by some vested interests. There is no B Team.

SoD,

I agree it is a hard problem, and the discrepancies between the various models say the same thing. I also see that progress is minimal, while expense, and political hysteria based on model projections, grow ever larger. If there is no broadly recognized way to evaluate the models (which is to say, evaluate which are more accurate and so useful in making accurate projections and which should be discounted), then it is very difficult to see how progress will ever be made. In the current rather crazy situation there are endless excuses on offer for why models disagree with reality (and with each other!), and even more reasons on offer for why all the reasonably consistent EBM estimates ‘must be wrong’. The arm waving is so furious that some people seem likely to take to the air at any moment.

It seems to me the focus should be on narrowing the largest uncertainties: deep ocean heat uptake, influence of aerosols (both direct and on clouds), and understanding all the fudged processes (convection, clouds, rainfall) taking place below the grid scale of models. Taking model projections seriously at this point strikes me as an embrace of irrationality.

SOD writes: “It’s not clear how to assess them.”

The critical question is how the planet responds to warming: How much do OLR and reflected SWR increase per degK of surface warming? These are the key factors that control ECS. The biggest changes in OLR and OSR that we can observe are associated with the 3.5 K of seasonal warming that occurs every year. Models do a poor and mutually inconsistent job of reproducing those seasonal changes (except WV+LR feedback through clear skies). So there is no reason to believe that AOGCMs can predict the feedbacks that will occur in response to global warming. Tsushima and Manabe (2013) put it more diplomatically:

“Here, we show that the gain factors obtained from satellite observations of cloud radiative forcing are effective for identifying systematic biases of the feedback processes that control the sensitivity of simulated climate, providing useful information for validating and improving a climate model.”

“One can argue whether the strength of the feedback inferred from the annual variation is relevant to global warming. Nevertheless, it can provide a powerful constraint against which every climate model should be validated.”

Chubbs writes in various comments: “Recent 30 to 40-year temperature trends imply TCR is around 1.8C.” “Per the article I linked above, aerosols have a different effect when emitted in Europe vs China vs India.”

Aerosol forcing is a confusing subject, and I believe some of your information is incorrect. In the case of GHGs, we have laboratory measurements that allow us to calculate their radiative forcing through today’s atmosphere, or in an AOGCM, through a hypothetical future atmosphere. Aerosol forcing is more complicated. The reflection of sunlight is a fairly straightforward problem, and probably varies only slightly with geography. The attenuation of SWR by stratospheric aerosols is directly measured at Mauna Loa, and I suspect we have similar measurements where tropospheric aerosols are common. These forcings are anchored in measurements.

However, to my knowledge, the aerosol indirect effect on clouds cannot be measured. This is a quantity that only comes from AOGCMs. Tunable parameters in AOGCMs control the magnitude of the Twomey effect, the decrease in droplet size caused by increasing numbers of cloud condensation nuclei. Theory and laboratory experiments show that smaller droplets reflect more SWR, making aerosol forcing more negative. The number of natural cloud condensation nuclei varies dramatically geographically, so the magnitude of the Twomey effect depends on where aerosols are present. The Twomey effect is transient: smaller water droplets evaporate and make thermodynamically more stable larger droplets. The chapter in AR5 on aerosols concluded that – with some minor exceptions – there is little evidence that a significant Twomey effect is operating in our climate system. Since our only quantitative data about aerosol forcing came from AOGCMs, many of the forcings we encounter are now obsolete. The idea that aerosol forcing varies dramatically with geography may also be obsolete, since this presumably arises mostly from the aerosol indirect effect. IIRC, the direct aerosol effect is -0.5 W/m2, the best estimate for the aerosol indirect effect is -0.4 W/m2 (with some studies reporting zero). AR4 asserted aerosol forcing could be as negative as -1.9 W/m2 and aerosol forcing from the average AOGCM was significantly more negative than the experts’ revised best estimate.

The historic hindcasts from CMIP models and their multi-model mean presumably are biased by obsolete aerosol forcing, including TCR and ECS from historic runs. When one uses obsolete values for aerosol forcing in an EBM, the total forcing is too low and the climate sensitivity is too high. Otto (2013), written by many of the same IPCC authors responsible for the new consensus on aerosol forcing, revised published estimates of aerosol forcing (from AOGCM’s) downward, which is why they reported much lower climate sensitivity than earlier workers. Their best estimate is 1.3 K/doubling (0.9-2.0, 95% ci). Their TCR for 1970-2009 is 1.4 K/doubling (0.7-2.5) and judged less reliable due to Pinatubo. Lewis and Curry (2018) reported a TCR of 1.3 K using C&W’s adjustments to HadCruT (1.2 K without). If you have conflicting TCRs from elsewhere, please cite the source – but first ask yourself if your source is obsolete.

Since about 2000, aerosols have been falling. Soon, it may be possible to analyze data over a period where the total amount of aerosol hasn’t changed. (Those still using models with a large aerosol indirect effect may claim a forcing change from geographic changes in emissions.)

Frank, a recent measurement study found a large indirect aerosol effect, larger than that used in climate models (linked for second time below). Several other recent studies have also found large aerosol effects, direct and indirect, so I don’t agree with your text above regarding aerosols. Aerosol effects are still uncertain and limit the usefulness of observations for estimating TCR or ECS, particularly pre-1970 observations.

Otto uses a 19th-century baseline, similar to L&C, so it is not surprising that the results are similar to L&C. I do agree that aerosols are becoming less important. Per my comment above, since 1970, GHG forcing has predominated over aerosol forcing by roughly 10 to 1. My TCR estimates are up-to-date and are made quite simply by dividing the average rise in temperature by the average rise in man-made forcing using post-1970 data only. Over recent decades, temperatures are rising faster than predicted by EBM and more in line with climate models.

https://www.researchgate.net/publication/330478810_Aerosol-driven_droplet_concentrations_dominate_coverage_and_water_of_oceanic_low_level_clouds

Chubbs: Thanks for the link to Rosenfeld (2019). The last paragraph of the paper concludes:

“If the reported observed large sensitivity of shallow marine clouds to aerosols were incorporated in GCMs, they likely would simulate global cooling, whereas the world is actually warming. This argument has been used to dismiss such large sensitivities.”

So this paper isn’t debating whether the AR4 or the modestly less-negative AR5 best estimate for the aerosol indirect effect (-0.1 to -0.9 W/m2) is correct. This paper is saying that the aerosol indirect effect from marine stratocumulus clouds alone more than negates all of the forcing from rising GHGs and everything about clouds in AOGCMs needs gross revision.

Does anyone have access to the perspective that Science published along with this article?

How do aerosols affect cloudiness?

Science 08 Feb 2019:

Vol. 363, Issue 6427, pp. 580-581

DOI: 10.1126/science.aaw3720

FWIW, you can find AR5’s data on forcing vs time used to make Figure 8.18 in Annex II here:

https://www.ipcc-data.org/sim/index.html

Yes, this paper isn’t the final answer on aerosols. If it is correct though we need to be cautious about the aerosol forcing estimates used in EBM and climate models. However climate models can get the right answer by fitting recent observations with compensating errors. Fortunately aerosols have become less important recently, so we can project forward using the recent rise in temperature. Unlikely that the recent 0.18C per decade rate of rise is going to decrease.

There are great exaggerations and great oversimplifications in the aerosol effects. Human emissions are only a part of the total emissions. Nature contributes a large component. So the offset of warming is much smaller than assumed.

“Without a measure of the amount of natural aerosols that were present in the atmosphere a few hundred years ago, it’s hard to know how much things have changed since humans started adding manmade aerosols into the mix. That makes it difficult to calculate the exact size of the cloud-aerosol cooling effect. Professor Ken Carslaw says these natural aerosol uncertainties have “essentially been neglected in previous studies”.” https://www.carbonbrief.org/natural-aerosols-complicate-climate-understanding

I don’t know how well models get the aerosol pattern effect correct; however, I am sure that they do a better job than using a single global # for aerosol forcing. Aerosols are probably the most important reason for low bias in EBM. Per the paper below, using an ensemble of climate model runs simulating the 20th century and replicating EBM returns a TCR of 1.44 vs the true climate model TCR of 1.8.

https://eartharxiv.org/mn68e

While climate models can’t match the patterns in our single climate realization, they do provide an estimate of how much variability we might expect from one realization to another: fairly substantial, it turns out, mainly due to variation in ocean circulation interacting with sea ice and clouds.

Exactly why are you sure a single global value for aerosol effect is less accurate? It sounds more like a statement of faith than reason.

You assume (of course) that EBM estimates of sensitivity are biased low. I similarly assume model estimates of sensitivity are biased high on average, and some biased insanely high. Of course, you don’t address why different models project substantially different sensitivities…. some have to be very wrong.

I don’t assume anything. My statement on a single global value for aerosol forcing is taken from the paper I linked. The other paper I linked indicated that EBM were biased low.

However my main problem with EBM is they don’t match recent observations.

We have 40 years of global temperatures increasing by 0.18C per decade, roughly 1.8 TCR. Our current heat imbalance is 0.8 W/m2. No climate model is needed to see where we are headed. Just project out recent trends. Are you expecting something different?
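For readers who want to see the arithmetic behind a trend-based TCR, here is a minimal sketch of the "temperature rise divided by forcing rise" method. The 0.18 K per decade trend is from the comment; the forcing trend (0.37 W/m2 per decade) and the forcing for doubled CO2 (3.7 W/m2) are assumptions chosen so that the example lands near 1.8, and they are not values stated anywhere in this thread.

```python
# Sketch of a trend-based TCR estimate. Only the temperature trend comes from
# the comment above; the other two numbers are illustrative assumptions.
F2X = 3.7  # W/m2, assumed forcing for doubled CO2


def tcr_from_trends(temp_trend, forcing_trend, f2x=F2X):
    """TCR (K per doubling) from a temperature trend and a forcing trend.

    Both trends must cover the same period and use the same time unit
    (for example, per decade).
    """
    return f2x * temp_trend / forcing_trend


temp_trend = 0.18     # K per decade, as quoted in the comment
forcing_trend = 0.37  # W/m2 per decade, hypothetical value for illustration

tcr = tcr_from_trends(temp_trend, forcing_trend)
print(f"TCR estimate: {tcr:.2f} K per doubling")
```

The result is only as good as the forcing trend that goes into the denominator, which is where the aerosol uncertainty discussed in this thread enters.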

You say you don’t assume anything. I note again:

“Of course, you don’t address why different models project substantially different sensitivities…. some have to be very wrong.”

It appears to me you do assume broad disagreement between GCMs doesn’t mean much of anything important about the models. But disagreement between the average of GCMs (which disagree with each other!) with convergent EBMs means the EBMs are wrong. I find that most odd.

Climate models are uncertain yes, but I don’t see much bias vs recent observations. We usually focus on the mean, but EBM studies have fairly broad uncertainty ranges and they aren’t including uncertainty due to one realization or aerosol spatial patterns. Switch Best for Hadcrut and EBM estimates increase by 20%. Run EBM using a post-1970 baseline and numbers also increase. Below is a non-linear EBM, which fits obs well, and with ECS similar to climate models.

https://agupubs.onlinelibrary.wiley.com/doi/full/10.1029/2018EF000889

So there is no lack of EBM spread. In fact a consistent uncertainty analysis of both approaches would probably show broad overlap in ECS range between climate models and EBM.

There are many other ways of estimating ECS besides EBM and climate models, generally in agreement with climate models, but also with a wide spread. That seems to be the nature of the beast.

Chubbs writes: “Below is a non-linear EBM, which fits obs well, and with ECS similar to climate models.”

This isn’t an EBM. This is a model built from an ensemble of possible feedbacks with various strengths and time dependences. A set of feedbacks producing output allegedly compatible with both EBMs and AOGCMs has been selected. It is true that feedbacks operate on different time scales: Planck feedback is instantaneous, water vapor, lapse rate and LWR cloud feedback develop in days to weeks. Changes in surface albedo require months for seasonal snow cover and sea ice and years to millennia for other parts of the cryosphere. Fortunately, the total ice-albedo feedback is small (+0.3 W/m2/K) in most AOGCM’s, so its time dependence is a minor issue. Several groups have results suggesting that reflection of SWR by clouds takes several months, but not longer, to fully respond to changes in surface temperature. The term effective climate sensitivity is used when assessing climate sensitivity over periods too short to represent an equilibrium change.

However, these time-dependent feedbacks are clearly not responsible for the discrepancy between EBMs and AOGCMs. AOGCMs incorporate feedbacks over about 150 years (or 70 years in older 1% pa runs). Otto (2013)’s EBM covered 40 years, Lewis and Curry covered 130 years (though most of the change was in the last 40). The time-dependence of the above feedbacks doesn’t explain the inconsistency between EBMs and AOGCMs.

The authors have added a new time-dependent “feedback”, the “cloud response to sea surface temperature adjustment feedback”, to describe “the slow cloud feedback occurring as the spatial pattern of sea surface temperatures (SSTs) change in response to warming over many decades”. The paper references many other papers discussing time-dependent changes in feedback. The amount of this feedback varies from model to model, and a few show almost none of this phenomenon.

We already knew that the spatial pattern of sea surface warming is responsible for the discrepancy between AMIP experiments (those forced with historic SSTs) and historic runs (forced by historic GHGs and aerosols). All AOGCMs exhibit a climate feedback parameter consistent with a low ECS (similar to EBMs) when forced with historic SSTs, and a much higher ECS when forced with rising GHGs. In the latter case, the model regionally accumulates heat in a pattern we don’t observe. EITHER MODELS ARE WRONG or chaotic fluctuations in ocean phenomena like the AMO, PDO and ENSO have directed our climate into a pattern that a hundred historic AOGCM runs didn’t hindcast. (The former possibility is heresy).

The post linked below from Isaac Held shows an AOGCM doing a spectacular job of reproducing seasonal changes in the large scale flow of the atmosphere when forced with observed SSTs, but a poorer job when ocean temperature evolves without direction.

https://www.gfdl.noaa.gov/blog_held/60-the-quality-of-the-large-scale-flow-simulated-in-gcms/

So, this “non-linear EBM” doesn’t “fit observations well”; it simply reproduces a time-dependent change in feedback that has only been observed in AOGCMs.

Goodwin did use an energy-balance model, but it was extended with more fitting parameters and using more data. A linear model only estimates one parameter, so although the fitting period extends over 100+ years, EBM can’t resolve the recent more-rapid rise in temperatures.

I am not convinced that feedback timing is the sole explanation for the non-linear response; aerosols could also cause non-linearity, since they do not ramp uniformly in time but are front-end loaded instead. Bottom line: there are many ways to fit simple models to the available observations. Non-linear fits, which can resolve the recent observations, give higher TCR and ECS than EBM.

I see two components to the “pattern effect”.

1) the east Pacific and the ocean around Antarctica on average first warm more slowly than other places, then they warm faster (e.g. https://doi.org/10.1073/pnas.1714308114). The models generally agree on this.

2) is whether there’s been a big natural (or maybe aerosol driven) change in the Pacific temperature pattern in obs that is outside what most of the models get (doi: https://doi.org/10.1029/2018GL078887).

I think 1) is pretty easy to understand and seems rock solid. Areas of the ocean where old, colder water comes to the surface take longer to heat up. Eventually the upwelling water comes from the start of the global warming period and temperatures rise faster.

Upwelling regions have colder SSTs and strong inversions with lots of reflective low clouds. The later warming weakens the inversion, reducing the cloud amount and changing global feedback. Antarctica is trickier but it looks like the overall effect is to boost warming.

So long as you agree that upwelling regions warm more slowly and that they tend to have stronger feedbacks than the global average, you get a long term “pattern effect”, I don’t think that’s a great mystery any more and the models largely agree on this one. Pattern effect 2) needs a different discussion.

MarkR: Thanks for the links to articles. However, if you are going to discuss ocean upwelling, you probably don’t want to refer to results from abrupt 4X CO2 experiments. Those experiments result in a large amount of warming (IIRC 1.5 K the first year and 4 K the first decade). There has been little time for heat to be transported below the mixed layer, so the ocean’s stability towards overturning has been artificially increased in a manner that our planet will not experience. Gregory plots (TOA imbalance vs Ts) from 4XCO2 experiments provide a simple way to extrapolate an ECS and instantaneous forcing for 4XCO2, but otherwise I’d suggest output from RCP or 1% pa experiments.
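For anyone unfamiliar with Gregory plots, here is a minimal sketch of the extrapolation: regress TOA imbalance against surface warming, read the forcing off the y-intercept and the equilibrium warming off the x-intercept. The data are synthetic, generated from an assumed straight line (7.4 W/m2 forcing, 1.0 W/m2/K feedback) with a small deterministic wiggle; none of these numbers come from an actual model run, and halving the 4XCO2 warming to get ECS assumes forcing is logarithmic in CO2.

```python
# Gregory-plot sketch on synthetic data. F4X_TRUE and LAMBDA_TRUE are
# hypothetical values used only to generate the example points.
F4X_TRUE = 7.4     # W/m2, assumed forcing for quadrupled CO2
LAMBDA_TRUE = 1.0  # W/m2/K, assumed feedback strength


def linear_fit(xs, ys):
    """Ordinary least-squares fit; returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Synthetic annual means: surface warming (K) and TOA imbalance (W/m2).
temps = [1.0 + 0.5 * i for i in range(10)]
imbalances = [
    F4X_TRUE - LAMBDA_TRUE * t + 0.05 * (-1) ** i  # small alternating wiggle
    for i, t in enumerate(temps)
]

slope, intercept = linear_fit(temps, imbalances)
forcing_4x = intercept                       # imbalance at zero warming
equilibrium_warming_4x = -intercept / slope  # warming at zero imbalance
ecs = equilibrium_warming_4x / 2.0           # assumes 4x forcing = 2 * 2x forcing

print(f"forcing ~ {forcing_4x:.2f} W/m2, ECS ~ {ecs:.2f} K")
```

In real model output the points curve rather than falling on a straight line, which is one way the time-dependent feedback discussed in this thread shows up.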

I’m confused about when the water upwelling under marine boundary layer clouds is expected to warm. If this involves the deep ocean, the time scale of warming is roughly a millennium. By then, atmospheric CO2 will have begun to equilibrate with the deep ocean sink and dramatically reduced atmospheric levels of CO2. IIRC, the airborne fraction at equilibrium will be about 20%. Shallower overturning

MarkR wrote: “As long as you agree that upwelling regions warm more slowly and that they tend to have stronger feedbacks than the global average, you get a long term “pattern effect”, I don’t think that’s a great mystery any more and the models largely agree on this one.”

Frank suggests: “the models – that can’t replicate the feedbacks observed during seasonal warming nor warming over the past 40 years – largely agree on this one.” The increase in climate sensitivity with time (or is it with warming) in models has been a popular subject ever since Otto (2013) demonstrated that historic effective climate sensitivity was low. The question is whether models demonstrate any real skill in predicting changes in boundary layer clouds or the pattern of SST warming. Hopefully someone will address these issues.

Both papers fall in the general category of ‘It’s Models, all the way down.’ At least Andrews et al come right out and say:

“Assuming the patterns of long‐term temperature change simulated by models, and the radiative response to them, are credible; this implies that existing constraints on EffCS from historical energy budget variations give values that are too low and overly constrained, particularly at the upper end.”

Seems to me a pretty big assumption.

You can’t validate a model by comparing the model output with itself. Make predictions (about the future, not post-dictions about the past), then compare to measured future reality.

I’ve never seen any validation of EBM predictions or even a chart showing temperature obs and the EBM fit.

Roy Spencer had a blog post where he compared temperatures from his EBM with the data. The agreement was good.

I guess the question is why anyone would think otherwise.

Chubbs wrote: “I’ve never seen any validation of EBM predictions or even a chart showing temperature obs and the EBM fit.”

An energy balance model in general is simply an application of the law of conservation of energy to a particular situation. In his first few blog posts, Isaac Held describes various energy balance models that have been applied to climate and the output of climate models. What you are referring to as an EBM is a TWO-COMPARTMENT MODEL consisting of the surface (atmosphere+mixed layer of the ocean) and the deep ocean. Held explains why this EBM predicts a linear relationship between forcing change dF and warming dT on decadal time scale: dT = TCR*dF (with TCR expressed per unit forcing, i.e. divided by the forcing for doubled CO2).

https://www.gfdl.noaa.gov/blog_held/3-transient-vs-equilibrium-climate-responses/
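For readers who want to see the two-compartment idea in action, here is a minimal sketch, not Held's own code. All parameter values (`lam`, `gamma`, `c_surf`, `c_deep`) are hypothetical round numbers chosen for illustration, and the forcing is an idealized 70-year linear ramp to a doubling of CO2. It shows the ratio dT/dF staying roughly constant during the ramp, close to 1/(lam+gamma) rather than the equilibrium value 1/lam.

```python
def two_box_response(forcing, lam=1.3, gamma=0.7, c_surf=8.0, c_deep=100.0, dt=1.0):
    """Forward-Euler integration of a two-box energy balance model.

    forcing        : radiative forcing per time step, W/m^2
    lam            : climate feedback parameter, W/m^2/K (hypothetical)
    gamma          : surface-to-deep heat exchange coefficient, W/m^2/K (hypothetical)
    c_surf, c_deep : heat capacities, W yr/m^2/K (hypothetical)
    Returns the surface temperature anomaly (K) after each step.
    """
    t_surf, t_deep = 0.0, 0.0
    history = []
    for f in forcing:
        exchange = gamma * (t_surf - t_deep)  # heat flux into the deep ocean
        t_surf += dt * (f - lam * t_surf - exchange) / c_surf
        t_deep += dt * exchange / c_deep
        history.append(t_surf)
    return history


F_2X = 3.7  # W/m^2 for doubled CO2 (commonly quoted value)
ramp = [F_2X * (year + 1) / 70 for year in range(70)]  # idealized linear ramp
temps = two_box_response(ramp)

ratio_mid = temps[34] / ramp[34]
ratio_end = temps[-1] / ramp[-1]
print(f"dT/dF at year 35:    {ratio_mid:.2f} K per W/m^2")
print(f"dT/dF at year 70:    {ratio_end:.2f} K per W/m^2")
print(f"1/(lam+gamma):       {1 / (1.3 + 0.7):.2f} K per W/m^2")
print(f"1/lam (equilibrium): {1 / 1.3:.2f} K per W/m^2")
```

In this toy setup the warming at year 70 plays the role of TCR, so the linear relation above is really dT = (TCR/F_2x)*dF when TCR is quoted in kelvin.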

EBM’s are “validated” in the sense that they fit the output of climate models reasonably well. If AOGCMs are valid models of our planet, EBMs can also be applied to observed forced changes in the temperature of our planet.

Does anyone know of a recent textbook or review that deals with the application of various energy balance models (multi-compartment models) to climate? Multi-compartment models for analyzing the output of climate models appear to have been developed rather recently. TCR was first defined in 2001. Most papers seem to rely on an ad hoc collection of equations without addressing the important question Chubbs asked above: How do we know when an EBM is valid? All EBMs are based on conservation of energy, but we often make assumptions when we apply COE to a particular situation.

http://www.drroyspencer.com/2018/02/a-1d-model-of-global-temperature-changes-1880-2017-low-climate-sensitivity-and-more/

I thought this blog post was pretty good and showed that EBM’s can indeed do a pretty good job of matching average temperatures. Of course that should be easy if you believe the IPCC doctrine that average temperatures are a strong function of forcing.

Point 2) is more complex, but 1) uses model output too. The argument being that physics is our best guide to the future.

Do you have an issue with any of the points? i) there are areas where cold water upwells in the ocean, ii) a constant supply of colder water slows down local warming until the upwelling water is from the warming period, iii) a cooler surface increases inversion strength, iv) low clouds respond to inversion strength?

Do you think any of those are wrong? Why?

“The reason for preferring observations over models seems obvious – even though there is some uncertainty, the results are based on what actually happened rather than models with real physics but also fudge factors.” – scienceofdoom

Quite true. There are robust sources going way back in some relevant disciplines bearing upon ocean heat uptake, ocean heat content, sea level impacts (Beyond Fingerprints: Sea Level DNA – 2).

I don’t think that’s right. There are NO observations of ECS. The so-called “observations” are actually estimates using a model (the linearized energy balance model that sets the response equal to surface temperature). If you apply that methodology to a climate model with known ECS, it does not always yield the right answer. So there are valid reasons for looking critically at the “observations of ECS.”

Here’s a paper that shows this: https://www.atmos-chem-phys.net/18/5147/2018/

My comment got mis-formatted. The top three paragraphs (“This comes out … fudge factors.”) should be a quote from SoD’s original post. Sorry!

No problem, I fixed it up.

Yes Andrew, We have been over this before. Nic Lewis wrote a very convincing response to your paper.

On another thread you showed some examples of how large and long surface temperature departures from an ensemble average can be. Your paper I think just highlights that large variability in one GCM. If indeed the real system has that much variability it would in my mind cast doubt on attribution studies too. It’s just another example of the large uncertainties in climate science, making it subject to bias caused by “selecting” a particular model or set of data.

I also suspect that mid tropospheric temperatures have higher uncertainty than surface measurements. The record is much shorter and the coverage spotty with radiosondes.

I am endlessly amused how climate science is totally uncertain — unless it’s a result I like. So there’s too much variability for attribution studies, but no uncertainty at all about low ECS.

Andy wrote: “I am endlessly amused how climate science is totally uncertain — unless it’s a result I like. So there’s too much variability for attribution studies, but no uncertainty at all about low ECS.”

Andy: I agree with you that scientists should adopt a consistent view of all of the evidence regardless of their personal biases. However, no matter how one looks at it, models are “likely to be wrong”*. Either:

A) models produce too low an ECS to agree with EBMs OR

B) models produce too little unforced/internal variability to explain their inconsistency with EBMs.

Can you resolve this dilemma for me?

By “likely to be wrong”, I mean that if we compare the pdf for ECS for the 100-member historic ensemble in your paper – output which includes unforced/internal variability – and the pdf for an EBM, and calculate the pdf for their difference, the 70% confidence interval will not include zero. This is far below the normal standard for scientifically demonstrating that EBMs are inconsistent with AOGCMs and invalidate AOGCMs as viable theories, but consistent with IPCC’s policy on how to describe such findings to policymakers and the public.
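The comparison described above can be sketched with a small Monte Carlo. The two distributions below are hypothetical Gaussian stand-ins, not values taken from any paper, and `central_interval` is a helper invented for this illustration. The point is only to show how a difference can exclude zero at the 70% level while still including it at the 95% level.

```python
import random


def central_interval(samples, coverage):
    """Return the (lower, upper) bounds of the central `coverage` interval."""
    ordered = sorted(samples)
    tail = (1.0 - coverage) / 2.0
    lo_idx = int(tail * (len(ordered) - 1))
    hi_idx = int((1.0 - tail) * (len(ordered) - 1))
    return ordered[lo_idx], ordered[hi_idx]


rng = random.Random(0)  # fixed seed so the sketch is reproducible
N = 20000

# Hypothetical stand-ins for the two ECS pdfs (K); NOT values from any paper
model_ecs = [rng.gauss(3.0, 0.5) for _ in range(N)]
ebm_ecs = [rng.gauss(1.8, 0.5) for _ in range(N)]

# pdf of the difference, built sample by sample
diff = [m - e for m, e in zip(model_ecs, ebm_ecs)]

lo70, hi70 = central_interval(diff, 0.70)
lo95, hi95 = central_interval(diff, 0.95)
print(f"70% interval of the difference: {lo70:.2f} to {hi70:.2f} K")
print(f"95% interval of the difference: {lo95:.2f} to {hi95:.2f} K")
```

Treating the two pdfs as independent is itself an assumption; any shared inputs (for example a common forcing estimate) would change the width of the difference.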

I don’t have a strongly held view on the value of ECS. It looks to me that estimates are all over the map. I will just note that Nic Lewis has a lot of credibility for me since he has no public political or policy opinions (except that he has expressed mild approval for a carbon tax). He seems to stick to the science.

Nic is obviously very skilled in statistics. It does appear to me that this is a defect of climate science and many other fields too. Most papers (even those that rely heavily on complex statistical analysis) don’t seem to have professional statistician involvement. In medicine it is common practice to hire a professional to develop study statistical methods and apply them. And of course preregistration of trials is becoming more common and has in one case resulted in a sharp decline in reported positive results.

I also believe that SOD’s critique of climate models is absolutely supported by first principles numerical analysis. Since the truncation discretization errors are quite large (we know this from 60 years of work on aeronautical turbulent simulation practice and theory) any skill must be due to tuning to produce cancellation of errors. Quantities not related to those used in tuning will be skillful only by chance. Aeronautical simulations usually are tremendously simplified of course compared to the real atmosphere.

In addition, it would be a miracle if a strongly turbulent process like convection could be modeled well by a relatively simple sub grid model. We can’t do that for simple large turbulent boundary layer separation or for simple turbulent shear layers despite 60 years of intense research. These phenomena seem to require eddy resolving simulations which of course have their own severe challenges. Among these is the impossibility of using classical methods of numerical error control. Without numerical error control or estimation, it’s really hard to avoid the selection bias problem. Particularly in the new soft money research paradigm, it’s very tempting to simply run the simulation varying parameters and grid until you find one that looks credible and then you publish that one. It’s a constant danger that I’ve seen play out over and over again in the literature. Those who develop the models know these problems very well. Often those who run the models develop an unjustified faith in their skill at getting “good” answers. At least there seems to be a consensus among climate model developers that these practices need to be ended.

Frank: I think it’s important to realize that the PDFs from the EBM papers (e.g., Lewis and Curry, Otto et al.) is different from the uncertainty in the Dessler et al. PDF. In my paper, the uncertainty is ONLY due to internal variability — because it’s a perfect model study, there is no uncertainty in things like radiative forcing. In the EBM studies, the PDF is due to uncertainty principally in radiative forcing. If I add some fake radiative forcing uncertainty to my PDF, it would expand. I did a quick calculation adding 1 W/m2 of uncertainty to RF (5-95%) and the width of my PDF increased by about 50%. And the EBM papers don’t include internal variability uncertainty in their PDFs, so including that would increase their width. Overall, I’m confident that an apples-to-apples comparison would show considerable overlap between the PDFs.
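To make the point about forcing uncertainty concrete, here is a rough sketch using the standard energy-budget estimate ECS = F_2x * dT / (dF - dN). It is not the calculation from the paper. The values of `DELTA_T`, `DELTA_N` and the temperature noise are hypothetical, `DELTA_F` is the 2.3 W/m2 figure quoted elsewhere in this thread, and the "1 W/m2 (5-95%)" is read here as the full width of the range, which is one possible interpretation.

```python
import random

F_2X = 3.7     # W/m^2 per CO2 doubling (commonly quoted value)
DELTA_F = 2.3  # W/m^2, forcing change quoted elsewhere in this thread
DELTA_T = 0.8  # K, hypothetical warming
DELTA_N = 0.5  # W/m^2, hypothetical change in top-of-atmosphere imbalance


def ecs_width(sd_temp, sd_forcing, n=20000, seed=1):
    """Width of the 5-95% range of energy-budget ECS estimates.

    sd_temp    : spread in dT standing in for internal variability (hypothetical)
    sd_forcing : one-sigma uncertainty added to dF
    """
    rng = random.Random(seed)
    samples = []
    for _ in range(n):
        d_t = rng.gauss(DELTA_T, sd_temp)
        d_f = rng.gauss(DELTA_F, sd_forcing)
        samples.append(F_2X * d_t / (d_f - DELTA_N))
    samples.sort()
    return samples[int(0.95 * (n - 1))] - samples[int(0.05 * (n - 1))]


# A 5-95% range that is 1 W/m^2 wide corresponds to sigma = 1 / (2 * 1.645)
sd_rf = 1.0 / (2 * 1.645)

narrow = ecs_width(sd_temp=0.1, sd_forcing=0.0)
wide = ecs_width(sd_temp=0.1, sd_forcing=sd_rf)
print(f"5-95% width, internal variability only: {narrow:.2f} K")
print(f"5-95% width, with forcing uncertainty:  {wide:.2f} K")
```

How much the pdf widens depends entirely on the assumed numbers, so the ratio printed here should not be compared with the 50% figure mentioned above.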

Andy: Thank you for taking the time to reply to my question about comparing the pdfs of AOGCMs and EBMs.

Andy wrote: “I think it’s important to realize that the PDFs from the EBM papers (e.g., Lewis and Curry, Otto et al.) is different from the uncertainty in the Dessler et al. PDF. In my paper, the uncertainty is ONLY due to internal variability — because it’s a perfect model study, there is no uncertainty in things like radiative forcing. In the EBM studies, the PDF is due to uncertainty principally in radiative forcing. If I add some fake radiative forcing uncertainty to my PDF, it would expand. I did a quick calculation adding 1 W/m2 of uncertainty to RF (5-95%) and the width of my PDF increased by about 50%. ”

Why would you add radiative forcing uncertainty to your pdf? You are inputting historic data on the AMOUNT of various forcing AGENTS and relying on your model to correctly calculate the AMOUNT of RADIATIVE FORCING these agents produce. If your model does that wrong, the model is wrong. Radiative transfer calculations are very accurate – provided that the correct temperature, pressure and composition (especially humidity and clouds) data for all grid cells is used by the radiative transfer module. We know that weather prediction programs fail to be skillful after about a week due to the effect of chaos on this input data. The FUNDAMENTAL ASSUMPTION behind AOGCMs is that this chaos will average out over any particular period in the future, say 2090-2110. In other words, AOGCMs can predict climate change without getting the future weather on any day correct or predicting the Super El Nino that will occur in 2102 or the relative paucity of strong El Ninos in the 2090s. With 100 runs, you have excellent information about the combined effects of initialization uncertainty and unforced/internal variability.

Your paper analyzed the period 1850–2005, for which AR5 believes the forcing change is 2.3 W/m2. Now you are telling readers that the forcing your AOGCM generates could be off by +/-1 W/m2 or +/-50%. That seems crazy. The output from any single climate model doesn’t have an additional +/-50% fudge factor added to it before being presented to policymakers! So why should this fudge factor be added when validating your model against an EBM?

Andrew, I think Lewis and Curry did take account of internal variability. Nic’s blog post (linked in an earlier comment) on your paper says in the summary:

I would say however that the fact that the IPCC range of ECS hasn’t narrowed since the Charney report shows that climate science is not making much progress on the primary quantification issue in its remit. And now we are hearing that CMIP6 models have significantly higher ECS than CMIP5 models. That I think is Frank’s point.

I do wish that climate science could wean itself off of the reliance on climate models and return to theory and fundamentals. Despite initial overestimation of the effect size, the iris effect seems to me to be one of the few new insights of the last 30 years of research. Lindzen is a prime example of a contrarian who can think outside the box and take chances with his research. One of his students finally demonstrated the cause of the ice age cycles convincingly.

The average quality of what passes for science in computational fluid dynamics generally (which includes weather and climate models) has materially declined over time. That’s partially due to the dramatic increase in the supply of scientists who need to make a living, which these days requires a massive resume of publications, and partly due to the dominance of simulation studies. Most of these are actually flawed by selection and positive results bias, but they seem to be demanded by a flawed system. The big lie here that the scientific soft money culture has generated is that CFD is a solved problem. Thus funding for fundamental work has dried up leaving scientists to just run the codes on ever more complex problems and compete with each other to generate the best “selected” and close to “perfect” results from fundamentally uncertain simulations.

dpy6629 wrote: “In addition, it would be a miracle if a strongly turbulent process like convection could be modeled well by a relatively simple sub grid model. We can’t do that for simple large turbulent boundary layer separation or for simple turbulent shear layers despite 60 years of intense research.”

In the linked blog post, Isaac Held claims that the large scale flow in the atmosphere is primarily 2-dimensional and therefore vastly simpler to compute. He also shows that the large scale flows his models do produce agree extraordinarily well with observations (reanalysis). The fact that other models don’t agree as well suggests to me that bias from the climate model used to re-analyze observations is not the reason for this agreement. Any comments?

https://www.gfdl.noaa.gov/blog_held/60-the-quality-of-the-large-scale-flow-simulated-in-gcms/

Am I correct in understanding that this large scale flow is primarily the result of the Coriolis effect operating on the Hadley, Ferrel and polar cells?

The key fact I picked up from this post is that the agreement depends on running the model in AMIP mode – i.e., providing the model with observed SSTs. Furthermore, CMIP5 models provide climate feedback parameters consistent with EBMs when forced with historic SSTs.

Yes Frank, Held and I had a brief email exchange about this.

1. What he is talking about is Rossby waves. These have mild pressure gradients and are close to 2D, well at least one can make the case.

2. It is incorrect however to say that 2D flows don’t have issues with turbulence modeling. Whenever there is flow separation the errors can become large. You can get fully developed vortex streets in 2D too. The atmosphere doesn’t have much separation though and this is Held’s argument.

3. The problem here is that in the real atmosphere turbulence is highly variable and can be very large as anyone who has flown in an aircraft can attest. This is true even outside of convective cells. Wyoming is notorious for severe clear air turbulence which I experienced once. It was quite scary.

4. Turbulent fluid has effectively an augmented viscosity compared to laminar fluid. The higher the level, the higher the viscosity. Turbulence models do just that, they add a variable viscosity that is convected by nonlinear PDE’s. Weather and climate models (and indeed all CFD methods) have numerical viscosity in addition in order to be stable. This viscosity can be very large if the grid is not really fine. Since turbulence is ignored except near the surface, the effect of turbulence is ignored. Now of course years of tuning may have yielded a numerical viscosity that in the average is roughly right, but the dynamics will still be wrong.

5. I have noticed in long term weather forecasts what looks to me like pressure gradients tending to relax over time, perhaps due to this numerical viscosity.

6. Convection is different. It’s fully developed 3D turbulence. So Held’s claim is inapplicable. Convection in the atmosphere is vastly more complex than a simple separated boundary over a backward facing step. Turbulence models fail for this latter problem despite 60 years of intense research. Eddy resolving simulations seem to be required to get in the ball park of experiment. I don’t find it credible that simple sub grid models can possibly give skillful predictions of tropical convection, where vertical velocities can exceed a hundred miles per hour. If they had something it would be being used in other branches of CFD.

7. The modeling community I’m sure knows this and has been working on things like aggregation of convective cells. I fear the road ahead is very long and lined with ravenous wolves.

8. As always in CFD, the users of the codes (and the hordes of climate scientists mostly just run the codes) don’t understand these issues unless they have more rigorous mathematical training. They often just run the code until they get a defensible result and then publish.
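The point in the list above about numerical viscosity can be illustrated with a toy problem. This is a minimal sketch using the textbook first-order upwind scheme for 1D advection on a periodic grid. It is an assumption chosen for simplicity, not the scheme used in any weather or climate model. The pulse travels the same distance in both runs, but the run at Courant number 0.5 smears it out even though the equation being solved has no physical diffusion at all.

```python
def upwind_advect(values, courant, steps):
    """Advect `values` on a periodic grid with the first-order upwind scheme.

    courant = speed * dt / dx, and must satisfy 0 < courant <= 1 for stability.
    """
    u = list(values)
    for _ in range(steps):
        # u[i - 1] wraps around at i = 0, which gives periodic boundaries
        u = [u[i] - courant * (u[i] - u[i - 1]) for i in range(len(u))]
    return u


n_cells = 100
pulse = [1.0 if 40 <= i < 60 else 0.0 for i in range(n_cells)]

exact = upwind_advect(pulse, courant=1.0, steps=100)    # one full circuit
smeared = upwind_advect(pulse, courant=0.5, steps=200)  # same distance travelled

print(f"peak at Courant 1.0: {max(exact):.2f}")
print(f"peak at Courant 0.5: {max(smeared):.2f}")
print(f"total conserved: {abs(sum(smeared) - sum(pulse)) < 1e-9}")
```

For this scheme the implied diffusivity is speed * dx * (1 - courant) / 2, so it grows with the grid spacing, which is the sense in which a coarse grid carries a large numerical viscosity.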

That’s why I lament about the state of fluid dynamics research. The incentives don’t favor making fundamental progress and encourage (as I said above) causing a brownout so as to power ever more massive computers to churn out more and more results that provide little insight and have essentially no error quantification.

I personally believe the uncertainty in climate models is much higher than the IPCC’s analyses, which use an invalid statistical approach. To find a lower bound on uncertainty you need to systematically vary all parameters of the simulation. And of course, uncertainty is highly dependent on the type of problem specified. I have personally generated high quality data proving that aeronautical simulations have much more uncertainty than most in the community believe. Regulatory agencies still require very extensive and very costly campaigns of flight testing for this reason.

Frank, I revisited Held’s post that you linked. I don’t disagree all that much. However, he is showing QUALITATIVE agreement of Rossby waves which is the part models are pretty good at. It’s only a small part of the climate system which includes the oceans and the tropics too.

“That’s why I lament about the state of fluid dynamics research.”

dpy, Why don’t you just solve Laplace’s tidal equations like I did and simply add in the tidal forcings? Then you can model fluid climate behaviors such as ENSO and QBO — follow the recipe in the monograph, or keep track of the closely related research of Delplace and Marston.

I looked at a science paper by your authors and it looks qualitative to me. Can you point me to some analysis of overall statistics for a real world situation?

The assumption you are making is that these behaviors are statistical. They are not. They are single instances of standing waves.

You need to define a standing wave. Chaotic flows generally have patterns of vorticity that are statistically distributed and only the statistics have any chance of being diagnostic of skill of any model of them. We know that ocean dynamics near the equator have chaotic characteristics (at least I gather that from ENSO predictions for example).

The absolutely fixed standing wave in space and the absolutely fixed synching to an annual impulse in time preclude a chaotic origin to ENSO. It’s as if some random guy off the street glanced at a tidal pattern and called that chaotic. In fact, every variation in the earth’s length-of-day has a tidal forcing and this is propagated to the inertial sloshing of the equatorial thermocline, thus leading to ENSO dynamics. And it doesn’t hurt that I know how to solve Navier-Stokes given topological constraints (see Delplace and Marston) while you don’t.

Well, the errors here are manifold, Paul. A standing wave usually refers to a resonance, for example in electromagnetics. Once the periodic phase is factored out, the wave is absolutely constant. Of course Maxwell’s equations are linear and regularity theorems are available showing that the problem is well posed.

That’s not the case at all with the ocean or even with the shallow water equations. Tidal records also are not “standing waves.” They reflect all kinds of weather effects especially wind direction or storm surges. These are chaotic of course. The “forcing” at least is chaotic so ENSO has all the statistical properties of regular chaos.

It’s a little bit like saying that a turbulent boundary layer is not chaotic because we have very good turbulence models that can make it a steady state problem at least for very mild pressure gradients. Of course it’s chaotic. A given model can’t show whether it is or isn’t.

Quibbling about where that chaos comes from is irrelevant. I’m not going to waste any more time on an argument you have been making on the internet for at least a decade that is just childish and really rather meaningless. ENSO is chaotic, period.

The state of the art in fluid dynamics research is not for the faint of heart.

Tauber, C, P Delplace, and A Venaille. “A Bulk-Interface Correspondence for Equatorial Waves.” Journal of Fluid Mechanics 868 (2019).

Souslov, Anton, Kinjal Dasbiswas, Michel Fruchart, Suriyanarayanan Vaikuntanathan, and Vincenzo Vitelli. “Topological Waves in Fluids with Odd Viscosity.” Physical Review Letters 122, no. 12 (2019): 128001.

The above two cites are indicative of the recent spate of research linking topological constraints to fluid behavior.

So I recommend that you can either catch up, or continue to “lament about the state of fluid dynamics research”

Paul Pukite, You are trotting this idea out as if it’s something new and exciting. It’s not. Many chaotic flows have a “standing wave” steady state base flow. That’s even true for flow over a backward facing step. After 100 years of research on turbulence models, we are not much closer to being able to use this decomposition. It appears that resolving the turbulence is needed to get improved answers.

ENSO is chaotic and your repeated assertions that it is not are wrong. Tidal records are chaotic and your reference to them is simply wrong.

And please stop your snide suggestions on twitter that are little more than personal smears.

SOD, what we have here is classical passive aggressive behavior. On your blog, Paul Pukite sticks to the technical while on twitter quoting my comments and attacking.

I have presentations at the last 3 AGU meetings and an AGU monograph chapter published by Wiley on the topic. Have received lots of positive feedback, excepting for AGW skeptics who invariably seem threatened by the findings.

Paul, It has nothing to do with “feeling threatened.” Perhaps you are projecting your own emotional needs for approval onto other people.

Technically this idea of “standing waves” is nothing new and doesn’t really help in actually predicting anything. It really is about the chaos and predicting that component.

ENSO is not chaotic. The Lyapunov exponent for any reasonable ENSO model turns out non-chaotic : any selection of initial conditions generates a solution that synchronizes with an annual cycle.

Well then why can’t anyone predict ENSO a year in advance? Your Lyapunov exponent must be for a simplified model as it’s impossible to calculate something like that for a full model of the ocean/atmosphere. You just keep repeating these snippets with no context and they can’t be right.
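For readers unfamiliar with the term, the Lyapunov exponent measures how fast nearby trajectories diverge: positive means chaotic, negative means trajectories converge. The sketch below estimates it for the logistic map, a standard textbook toy system. It is not an ENSO model and says nothing about which side of this argument is right. It only shows that the answer depends on the model and parameter chosen, which is the point being disputed.

```python
import math


def logistic_lyapunov(r, x0=0.3, n_transient=1000, n_steps=5000):
    """Estimate the Lyapunov exponent of the logistic map x -> r*x*(1-x)."""
    x = x0
    for _ in range(n_transient):  # discard the initial transient
        x = r * x * (1.0 - x)
    total = 0.0
    for _ in range(n_steps):
        # |f'(x)| = |r*(1 - 2x)|; the floor avoids log(0) if x lands exactly on 0.5
        total += math.log(max(abs(r * (1.0 - 2.0 * x)), 1e-300))
        x = r * x * (1.0 - x)
    return total / n_steps


chaotic = logistic_lyapunov(4.0)   # analytic value for r = 4 is ln 2
periodic = logistic_lyapunov(3.2)  # settles onto a stable period-2 cycle
print(f"r = 4.0: exponent {chaotic:+.2f} (positive, chaotic)")
print(f"r = 3.2: exponent {periodic:+.2f} (negative, not chaotic)")
```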

Simplified models can be very useful and this work you cite is useful. It’s just that as a predictive tool it’s not there yet and may never get there.

A climate science skeptic requesting predictions is simply imposing a delaying tactic. Predictions are a moot point when there is enough historical data for many conceivable cross-validation measures to be yet performed.

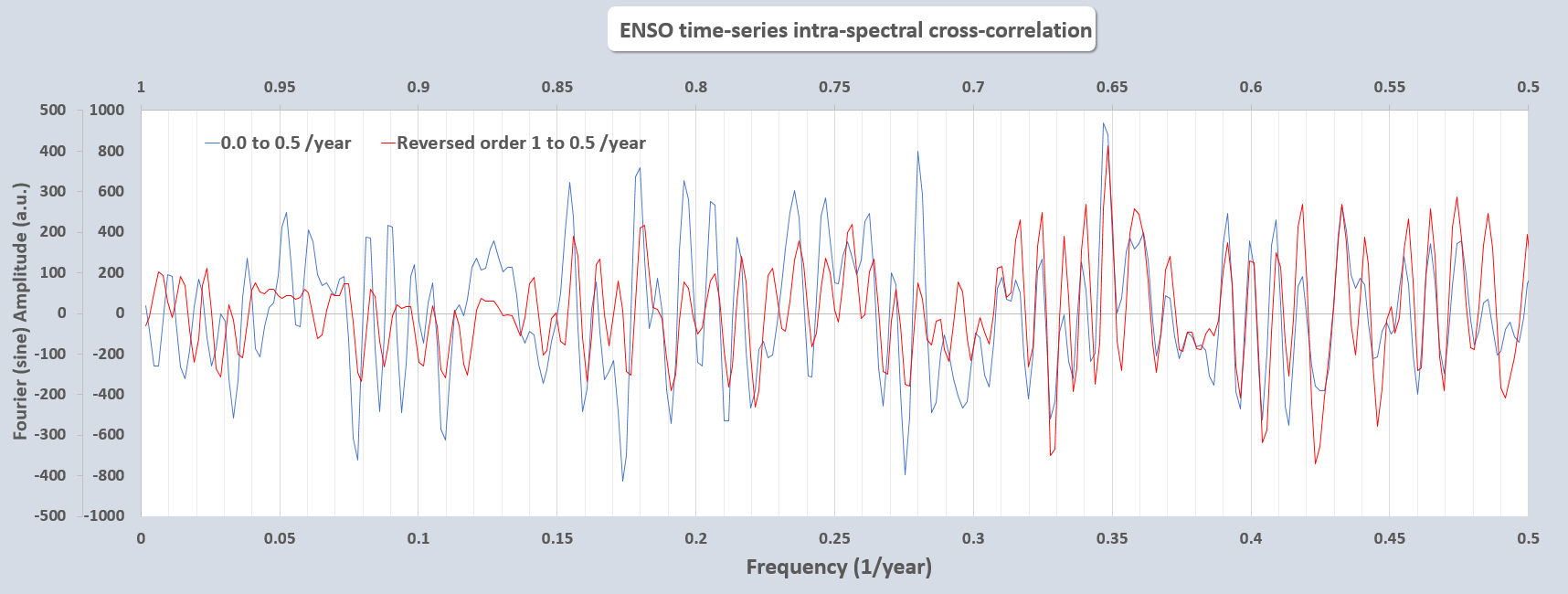

As an example, autocorrelation of the ENSO power spectrum shows a distinct 1-year correlated shift in all frequency components:

This is a consequence of Floquet (math) or Bloch theory (condensed matter physics), concisely expressed as F(t) = exp(-iωt)P(t), whereby a clear periodic function can be extracted from a signal.

All my work and the topological analysis for systems showing chirality is being done by scientists with a strong background in condensed matter physics theory.

Dasbiswas, Kinjal, Kranthi K. Mandadapu, and Suriyanarayanan Vaikuntanathan. “Topological Localization in Out-of-Equilibrium Dissipative Systems.” Proceedings of the National Academy of Sciences 115, no. 39 (September 25, 2018): E9031–40. https://doi.org/10.1073/pnas.1721096115.

This is just the tip of the iceberg as many more research papers and presentations will be coming down the pike.

Well Paul, You say:

“A climate science skeptic requesting predictions is simply imposing a delaying tactic.”

You should stop ascribing motives to people whose motives you can’t possibly know. It’s called reading minds and is an obvious and dishonest rhetorical tactic.

Asking for predictions is what I always ask of people selling a particular modeling method. There are thousands of CFD salesmen out there. Most are more honest and direct than you in that they usually respond to direct questions with a real answer if they want me to buy their research.

You have been asked this same thing scores of times over the last 10 years on various climate blogs and have always done exactly what you are doing here.

In CFD skepticism is justified and I have strong evidence that CFD is in fact much worse than salesmen like you make out. If you won’t answer a direct question, I won’t believe in what you are saying. You won’t help your case with bullying tactics and deflections. The fact that you don’t seem to understand this further harms your case with those reading this thread.

There is some new understanding in this line of work you are advocating. But it’s so far not relevant to predicting anything which is the gold standard of all CFD.

Well dpy6629 if you really want a prediction all you have to do is run the model. So knock yourself out.

And if you want to debunk the solution to Laplace’s Tidal Equations along the equator, feel free to do that also. I have it all in the can and unless you want to argue particulars, hand-wavy rhetoric asserting “because chaos” is pointless.

Paul, There are literally tens of thousands of CFD codes and methods out there, many freely available from Universities or NASA. It is not my job to evaluate the skill of any of them. The burden of proof is on those who are trying to sell or promote their favorite method or code. It’s your job and your collaborators’ to show that this method is valuable and skillful.

It is not in my view very interesting or a new fundamental insight. Kind of like a special case of Reynolds averaging which converts a chaotic turbulent flow into a steady state problem for which conventional numerical methods have a better chance of working.

The really important problems in CFD are turbulence modeling and finding some way to evaluate uncertainty in time accurate eddy resolving simulations.

“The burden of proof is on those who are trying to sell or promote their favorite method or code. It’s your job and your collaborators to show that this method is valuable and skillful.”

OK, I did. It was peer-reviewed and published. It’s up to others to either (1) invalidate the model or (2) devise a better model. Option (3) of me coming up with a strategy to invalidate my own model or devise a better model is you once again baiting me into a delaying tactic.

So, it looks like you are the one that is engaging in a discussion and lacking any ammo to back up what you are claiming.

Paul, This conversation has been no more productive than at least 5 others over the last decade.

You have been asked over and over again for an ENSO prediction. You always deflect, dodge, repeat marketing claims, and squirm but never deliver. Virtually any casual observers will conclude that your ideas are incapable of doing so because ENSO is chaotic.

“You have been asked over and over again for an ENSO prediction. You always deflect, dodge, repeat marketing claims, and squirm but never deliver.”

You must not have been one of my peer-reviewers then?

From Dan Hughes, via email. Dan attempted to comment via the website but didn’t succeed.

—-

It is a very hard problem. But it is not an impossible problem.

All aspects that are important relative to applications require “assessment”. The nomenclature in modern scientific and engineering computation is Verification and Validation.

Related:

Richard B. Rood, Validation of Climate Models: An Essential Practice, Post-review Draft – Accepted for Publication, July 26, 2018.

To appear in: “Computer Simulation Validation – Fundamental Concepts, Methodological Frameworks, and Philosophical Perspectives”.

“Abstract

This chapter describes a structure for climate model verification and validation. The construction of models from components and sub-components is discussed, and the construction is related to verification and validation. In addition to quantitative measures of mean, bias, and variability, it is argued that physical consistency must be informed by correlative behavior that is related to underlying physical theory. The more qualitative attributes of validation are discussed. The consideration of these issues leads to the need for deliberative, expert evaluation as a part of the validation process. The narrative maintains a need for a written validation plan that describes the validation criteria and metrics and establishes the protocols for the essential deliberations. The validation plan, also, sets the foundations for independence, transparency, and objectivity. These values support both scientific methodology and integrity in the public forum.”

The book is on the Springer site: https://www.springer.com/us/book/9783319707655. And at Amazon.

I have not yet found an online ToC.

Also somewhat related:

C. Essex, A.A. Tsonis, Model falsifiability and climate slow modes,

Physica A (2018), https://doi.org/10.1016/j.physa.2018.02.090

“Abstract

The most advanced climate models are actually modified meteorological models attempting to capture climate in meteorological terms. This seems a straightforward matter of raw computing power applied to large enough sources of current data. Some believe that models have succeeded in capturing climate in this manner. But have they? This paper outlines difficulties with this picture that derive from the finite representation of our computers, and the fundamental unavailability of future data instead. It suggests that alternative windows onto the multi-decadal timescales are necessary in order to overcome the issues raised for practical problems of prediction.”

“Highlights

1. Climate models do not and cannot employ known physics fully. Thus, they are falsified, a priori.

2. Incomplete physics and the finite representation of computers can induce false instabilities.

3. Eliminating instability can lead to computational overstabilization or false stability.

4. Models on ultra-long timescales are dubiously stable. This is referred to as the “climate state.” Is it real?

5. Decadal variability is understandable in terms of a specific class of nonlinear dynamical systems.”

Unfortunately, the word “falsifiability” appears in the title and the Highlights, but that criterion is not at all necessary; we need Verification and Validation, not falsifiability. Interestingly, the authors note that the existence of slow modes in the physical domain is the only reason that the problem might be called a Boundary Value Problem. Frequently, when only the atmosphere is the focus, the GCMs/ESMs are run with the sea surface temperature specified, which amounts to applying a quasi-static assumption relative to ocean response and feedback. Comparisons of applications with and without that assumption generally show different responses.

Well yes Dan there are various flavors of validation methodologies. That’s all well and good and as I understand it, these methodologies have some theoretical basis for steady state modeling with traditional turbulence models or structural analysis. Even in this case, I worry about underestimation of uncertainty caused by things like inadequate exploration of parameter space. For eddy resolving time accurate simulations, the theoretical foundation is much weaker at least in so far as I understand it. But I’m not an expert on these methodologies.

Above I was asking dpy6629 about the meaning of Isaac Held’s post showing that an AOGCM (run with observed SSTs) can do an extraordinarily good job of reproducing the large scale flow in the atmosphere (winds, jet streams) that develops from vertical transfer of heat by convection. Upward convection produces clouds and precipitation and must be accompanied by clear regions of subsidence. On the average, these areas likely have a moist adiabatic lapse rate (constant potential temperature). So AOGCMs might get many important features of our climate system right. Systematic errors not involving the climate feedback parameter (the change in radiative imbalance at the TOA with changing Ts) won’t prevent AOGCMs from producing the right climate sensitivity. Where are the weak points in this analysis?

Marine boundary layer (or stratocumulus) clouds are one phenomenon that isn’t associated with large scale flow or strong vertical mixing that produces an average constant potential temperature with altitude. These clouds form where relatively warm dry air descends over oceans which are cooled by upwelling or cold currents. So I thought I’d ask how well weather forecast models deal with stratocumulus clouds. I came across this somewhat dated study showing the forecast skill of the ECMWF model over Europe in 2004 at 12 UTC. Even one day in the future, forecast skill, 1-(RMSE_forecast/RMSE_persistence), was only 15%. So even when you know a lot about an air mass, predicting whether marine boundary layer clouds will be present (all day or “burn-off”) is challenging.

Click to access 18106-improved-prediction-boundary-layer-clouds.pdf

The ECMWF has made significant progress, but still has some issues.

http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.422.3973&rep=rep1&type=pdf

Since climate models aren’t initialized and don’t do any forecasting, their goal is merely to reproduce current climatology. Since marine boundary layer clouds are highly persistent in many locations at certain times of the year, AOGCMs can appear very accurate without being skillful by the measure used by forecasters: 1-(RMSE_forecast/RMSE_persistence). If we want to know how marine boundary layer clouds will be different on a planet that is 1 K or more warmer than today, merely reproducing current climatology probably isn’t enough. For forecasters, a change in parameterization can always address one narrow problem area. One challenging problem appears to be addressing the change from nearly solid stratus to disruption caused by convection breaking through the inversion layer.
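The skill measure quoted above, 1-(RMSE_forecast/RMSE_persistence), can be sketched in a few lines of Python. This is only an illustration: the function names and the cloud-fraction numbers are made up for the example and do not come from the ECMWF study. It shows why a highly persistent field is hard to beat: when persistence already has a small error, a forecast with a similar error scores close to zero skill.

```python
import math


def rmse(predicted, observed):
    """Root-mean-square error between two equal-length sequences."""
    if len(predicted) != len(observed) or not observed:
        raise ValueError("sequences must be non-empty and of equal length")
    squared = [(p - o) ** 2 for p, o in zip(predicted, observed)]
    return math.sqrt(sum(squared) / len(squared))


def skill_vs_persistence(forecast, persistence, observed):
    """Skill = 1 - RMSE_forecast / RMSE_persistence.

    1 is a perfect forecast, 0 is no better than persistence, and
    negative values are worse than persistence. The score is undefined
    (division by zero) if persistence happens to be perfect.
    """
    return 1.0 - rmse(forecast, observed) / rmse(persistence, observed)


# Hypothetical daily cloud fractions (0 = clear, 1 = overcast).
observed = [0.9, 0.8, 0.9, 0.3, 0.9]
persistence = [0.9, 0.9, 0.8, 0.9, 0.3]  # assumed: previous day's observation
forecast = [0.8, 0.9, 0.8, 0.5, 0.7]     # made-up model output

skill = skill_vs_persistence(forecast, persistence, observed)
print(f"skill vs persistence: {skill:.2f}")
```

A climatology-only comparison would use only something like `rmse(forecast, observed)`, which can look good for a persistent cloud deck even when the skill score is low.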

Indeed Frank, low marine clouds are a challenge. I think you may be confusing Rossby waves and vertical convection. Held’s plots are for wind patterns at large scale. The problem here is that typically in CFD small scales do affect the larger scales. The average effects can be tuned using data but that tuning must be redone for any changes in energy fluxes. Given the terrible skill of models at predicting cloud fraction, they can’t be doing a good job with the small changes in energy flows.

The real problem here is that the energy flux changes are 2 orders of magnitude less than total fluxes and less than truncation errors or sub grid model errors. There is a math theory for modeling subgrid scales developed by Tom Hughes at Texas and it is hard enough for simple

Arguments that “a miraculous cancellation of errors” takes place are very naive.

dpy6629: I think I understand atmospheric Rossby waves: Hopefully they are the north-south undulations in the jet stream. When I look at Held’s Figure 1, I don’t immediately see Rossby waves, but the seasonal average velocity will depend on where the Rossby waves have caused the jet stream to linger longest.

What I don’t understand and was hoping to learn is what I might conclude from the agreement in Figure 1. What processes can we deduce the model is “getting right” because it is getting the wind, and its seasonal changes, right? What makes the jet stream stronger in the winter and causes it to shift latitudinally toward the summer pole? Why at 200 mb? (The tropopause?) In the re-analysis section of the paper, Held says: “The multivariate nature of the interpolation is critical. As an important example, horizontal gradients in temperature are very closely tied to vertical gradients in the horizontal wind field (for large-scale flow outside of the deep tropics). It makes little sense to look for an optimal estimate of the wind field at some time and place without taking advantage of temperature data.”

Although I am uncertain what aspects of heat flow through the atmosphere are controlled by the large-scale flow, I’m fairly sure the marine boundary layer is not one of them.

Frank

I don’t agree with dpy’s points above. On average, the model is getting a wide range of atmospheric motions right. The jet stream is located over regions where temperature gradients in the lower atmosphere are largest. The model is responding to forcing and rotation to keep the thermal gradients and the jet stream located properly. Note that large-scale atmospheric motion is not turbulence. In turbulent motion, energy cascades to smaller and smaller scales. In large-scale atmospheric motion energy is exchanged over a range of scales with disturbances/waves growing in scale and then decaying/breaking.

This phrase is the key Frank: “(for large-scale flow outside of the deep tropics).” I think the equator to pole temperature gradient is strongly dependent on Rossby waves for example. The problem here is that vertical energy flows in the tropics have little to do with Rossby waves.

Chubbs: You will note that later in the post Frank is referencing, Held makes an admission: “But you can also err on the side of uncritical acceptance of model results; this can result from being seduced by the beauty of the simulations and possibly by a prior research path that was built on utilizing model strengths and avoiding their weaknesses (speaking of myself here).”

Also: “Note added June 10 in response to some e-mails. For those who have looked at the CMIP archives and seen bigger biases than described here, keep in mind that I am describing an AMIP simulation — with prescribed SSTs. The extratropical circulation will deteriorate depending on the pattern and amplitude of the SST biases that develop in a coupled model. Also this model has roughly 50km horizontal resolution, substantially finer than most of the atmospheric models in the CMIP archives. These biases often improve gradually with increasing resolution. And there are other fields that are more sensitive to the sub-grid scale closures for moist convection, especially in the tropics. I’ll try to discuss some of these eventually.”

Held is well aware that the wind field is a strength of models. Tropical convection is a weakness and a pretty serious one for modeling small changes in energy flows which is what we care about in climate.

We know from 60 years of research on simpler CFD problems that there is little expectation that climate models will be skillful much less “accurate.” The more honest climate scientists know this.

dpy

Models do a reasonable job with OHC, so can’t agree with your energy concerns. You are misinterpreting Held. His blog is full of information gleaned from climate models. How does he do that? – by using simple and complex models and observations; and understanding the strengths and weaknesses of each tool.

Chubbs, I am quoting Held. You did not do that yourself. I’ve been in the field for 40 years and everyone in the field knows what I’ve been saying here. It’s really well established. If you are interested, you could start with an introductory book on numerical solution of PDEs.

And just because models are tuned (perhaps unconsciously) to replicate with reasonable skill the historical temperature time series doesn’t mean much. I believe they are also tuned explicitly for TOA flux balance and probably for ocean heat uptake too.

I explained this above. Basically when the truncation and sub grid model errors are larger than the quantities you are interested in, skill is due to tuning and cancellation of errors. That’s OK, but in a complex system like the climate system, that means many many other important measures are not very good. Regional climate, cloud fraction as a function of altitude, SST patterns, etc. etc.

The CMIP5 models were run 10+ years ago and predicted OHC is still tracking the obs quite well. A simple back-of-the-envelope calculation shows that the energy accumulating in the climate system is massive; hard to see how random error could possibly have an impact. So without any supporting evidence your assertions re: energy aren’t very persuasive.

Chubbs, You are picking one integrated quantity and claiming it’s well modeled without evidence and without quantification of uncertainty. What’s the spread of the models? There must be short term errors because of poor cloud simulation. Are the models tuned for OHC? They are for TOA fluxes.

The problem here is that the distribution of the fluxes is also very important not just their average. Recall the “pattern of SST changes” argument over the last decade or so. Models fail to get that pattern right.

The main point is a mathematical one. The changes in the fluxes are 100 times smaller than the total fluxes. That’s smaller than the truncation errors in such models.

dpy6629: IIRC, models show a 2-fold range for “diffusion” of heat into the deep ocean. High ocean heat uptake could negate some of the excess warming that models with high climate sensitivity would be expected to produce in historical experiments – but Nic Lewis assures me that some models with high ECS do not compensate with high ocean heat uptake. Some models show TCR/ECS ratios that are too small to be consistent with ARGO:

TCR/ECS = 1 – dW/dF

where dW is the current rate of ocean heat uptake and dF is the current forcing.
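As a rough worked example of the relation above, here is a small Python sketch. The function name and the input numbers are illustrative assumptions only, not values taken from ARGO, from any model, or from Nic Lewis; the point is just to show how the ratio responds to the assumed ocean heat uptake.

```python
def tcr_to_ecs_ratio(ocean_heat_uptake, forcing):
    """Return TCR/ECS = 1 - dW/dF.

    ocean_heat_uptake: dW, current rate of ocean heat uptake (W/m^2)
    forcing:           dF, current forcing (W/m^2)
    """
    if forcing <= 0:
        raise ValueError("forcing must be positive")
    return 1.0 - ocean_heat_uptake / forcing


# Illustrative inputs only (assumed, not measured values).
assumed_forcing = 2.5  # W/m^2
for assumed_uptake in (0.5, 0.7, 1.0):  # W/m^2
    ratio = tcr_to_ecs_ratio(assumed_uptake, assumed_forcing)
    print(f"dW={assumed_uptake} W/m^2, dF={assumed_forcing} W/m^2 -> TCR/ECS={ratio:.2f}")
```

For a fixed forcing, a larger assumed heat uptake gives a smaller TCR/ECS ratio, which is why the ratio a model produces can be checked against observed ocean heat uptake.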

Sure there is scatter in OHC, just like there is scatter in another integrated property, ECS. Building on Frank’s comment, the OHC/ECS scatter can be related directly to scatter in clouds and ocean mixing. So I don’t see any evidence to support your “flux/energy” concerns and you can’t supply any.

Chubbs, You should address my actual argument which is first principles numerical analysis fundamentals that everyone who’s actually done this type of modeling knows about. Meaningful skill can only be achieved as truncation errors get smaller so that numerical errors are less than the quantities you want to simulate skillfully. Richtmyer and Morton’s old but good book is a good place for you to start. But it’s a complex subject.
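For readers unfamiliar with the term, truncation error can be illustrated with a toy problem. The sketch below is not a climate model and is not taken from Richtmyer and Morton; it just differentiates sin(x) with a second-order central difference and compares the error with an assumed "signal" equal to 1% of the true value, mirroring the 100-to-1 ratio mentioned in the thread. All names and the 1% threshold are assumptions for the illustration.

```python
import math


def central_difference(f, x, h):
    """Second-order central-difference estimate of f'(x) with spacing h."""
    return (f(x + h) - f(x - h)) / (2.0 * h)


def truncation_error(h, x=1.0):
    """Absolute error of the central difference of sin at x (exact: cos x)."""
    return abs(central_difference(math.sin, x, h) - math.cos(x))


# Assumed for illustration: the quantity of interest is 1% of the total.
signal = 0.01 * abs(math.cos(1.0))

for h in (0.4, 0.2, 0.1, 0.05):
    err = truncation_error(h)
    verdict = "below" if err < signal else "above"
    print(f"h={h:<5} truncation error={err:.2e} ({verdict} the 1% signal)")
```

Halving the spacing cuts the error of this scheme by roughly a factor of four. Whether the same comparison holds for tuned sub-grid closures in a real model is exactly what is being disputed in this thread, and the toy example does not settle it.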

A lot of arguments over whether models are right or wrong are IMHO misplaced. If you think of climate science as an iceberg, the 10% above water, which everyone sees, are climate models. But there’s 90% below water that is made up of simple physical arguments and data.

The reason climate scientists are so confident in the main conclusions is not because of models, but because of simple physical arguments. Not to say models are not useful — they are, but mainly to validate our understanding.

E.g., we are confident in our understanding of the water vapor feedback not because models tell us it’s there, but because we have simple physical arguments and data. Models confirm our theories, giving us great confidence in our understanding.

People not familiar with climate science don’t realize that 90% is there, so are overly dismissive of climate science. That is a mistake.

There is some truth in what you say here Andrew. But I think it gives a somewhat unbalanced picture and could lead to overconfidence.

What you call “simple physical arguments” are often vague verbal formulations without quantification. These give a false sense of understanding in CFD too. For example, we have “understood” aeronautical flutter for 50 years but this has close to zero practical value. Quantification is of course what is really needed.

Medicine is plagued by the same issue. It’s a complex system and individuals are quite variable. Medicine also has huge problems with selection bias and publication bias.

It seems to me that the fundamental problem in climate science is estimating sensitivity. On that issue little progress has been made. Not a good track record considering the billions invested. I don’t think climate models have really added much in terms of new understanding or quantification. Weather models by contrast have been very successful and there is a very good understanding of the uncertainties involved. But we are eventually going to hit the wall of chaos and better theory will be the only way to improve.

The problem in science generally is that the current soft money culture gives rise to a focus on computational studies which don’t really advance understanding. Climate science, just like other fields of science, has been complicit in generating a false sense of confidence in CFD generally that makes funding for fundamental work quite hard to find. This is causing a stagnation of these fields that is tragic. CFD simulation is a big business these days with hundreds of companies with products. They of course also benefit from echoing the academic, selection-bias-driven dogma that CFD is a solved problem. So far, government regulatory agencies have not been fooled, which is very fortunate for the general public.

The recent recognition of the replication crisis does somewhat undermine the narrative scientists have an interest in propagating that science can be the basis for all human actions and decisions. There are fundamental theoretical limits to what science can achieve just as there are limits to axiomatic deductive systems. Denial of these problems and refusal to address them within your own field is not going to help anyone in the long term. Science needs to address the inherent biases and in some cases outright deception if it is going to continue to be looked to by the public as having high value. We make progress by being self-critical and admitting that real issues need to be addressed.