A couple of recent articles covered ground related to clouds, but under the Models series: Models, On – and Off – the Catwalk – Part Seven – Resolution & Convection and Part Five – More on Tuning & the Magic Behind the Scenes. In the first article Andrew Dessler, a climate scientist by day job, made a few comments and in one of them provided some great recent references. One of these is by Paulo Ceppi and colleagues, published this year and freely accessible. Another paper with some complementary explanations is from Mark Zelinka and colleagues, also published this year (but behind a paywall).

In this article we will take a look at the breakdown these papers provide. There is a lot in the Ceppi paper, so we’re not going to review it all here; hopefully a follow-up article will cover the rest.

Globally and annually averaged, clouds cool the planet by around 18W/m² – that’s large compared with the radiative effect of doubling CO2, a value of 3.7W/m². The net effect is made up of two larger opposite effects:

- cooling from reflecting sunlight (albedo effect) of about 46W/m²

- warming from the longwave radiative effect of about 28W/m² – clouds absorb terrestrial radiation and re-emit it from near the top of the cloud, where it is colder; this is like the “greenhouse” effect
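The arithmetic behind the net figure can be checked in a few lines. This is only a sketch using the rounded values quoted above; the variable names are illustrative and the sign convention (negative = cooling) is an assumption made for the example.

```python
# Rounded global, annual-mean values quoted in the text (W/m^2).
# Sign convention assumed here: negative = cooling, positive = warming.
sw_cooling = -46.0   # reflected sunlight (albedo effect)
lw_warming = 28.0    # reduced outgoing terrestrial radiation
co2_doubling = 3.7   # radiative effect of doubling CO2

net_cre = sw_cooling + lw_warming
ratio = abs(net_cre) / co2_doubling

print(f"Net cloud radiative effect: {net_cre:.0f} W/m^2")
print(f"Magnitude relative to doubling CO2: {ratio:.1f}x")
```

The point of the comparison is that the net effect is a small residual of two much larger terms, so a modest percentage change in either term matters.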

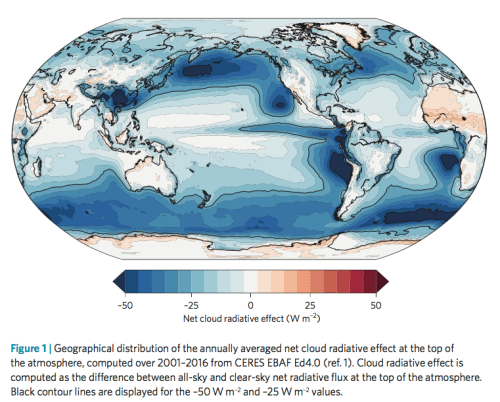

In this graphic, Zelinka and colleagues show the geographical breakdown of cloud radiative effect averaged over 15 years from CERES measurements:

Figure 1

Note that the cloud radiative effect shown above is not a feedback from warming; it is simply the current effect of clouds. The big question is how this will change with warming.

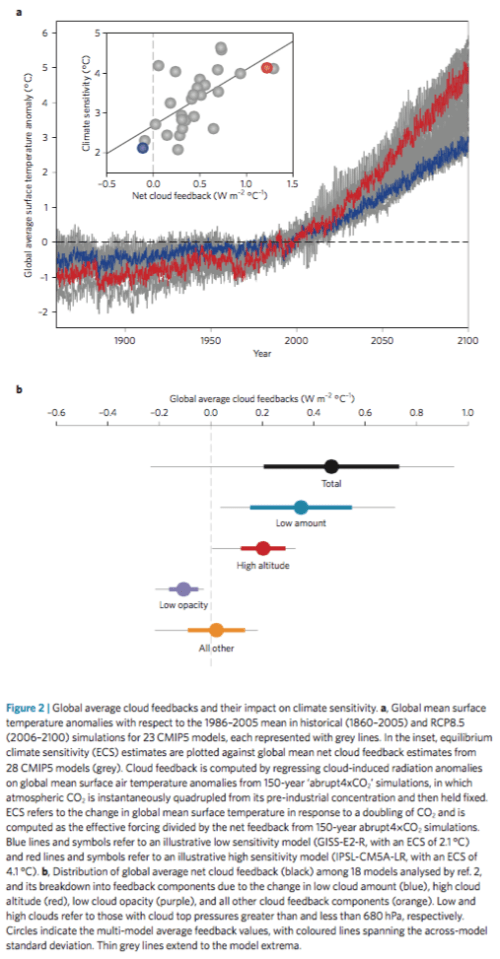

In the next graphic, the inset at the top shows cloud feedback (note 1) vs ECS from 28 GCMs. ECS is the steady state temperature change resulting from doubling CO2. Two models are picked out – red and blue – and in the main graph we see simulated warming under RCP8.5 (an unlikely future world confusingly described by many as the “business as usual” scenario).

In the bottom graphic, cloud feedbacks from models are decomposed into the effect from low cloud amount, from changing high cloud altitude and from low cloud opacity. We see that the amount of low cloud is the biggest feedback with the widest spread, followed by the changing altitude of high clouds. Both are positive feedbacks. The gray lines extending out cover the range of model responses.

Figure 2

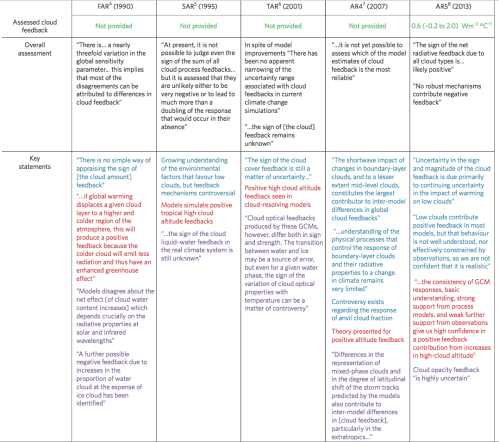

In the next figure they show how the assessment progressed through each IPCC report, helpfully color coded to match the breakdown above:

Figure 3

On AR5:

Notably, the high cloud altitude feedback was deemed positive with high confidence due to supporting evidence from theory, observations, and high-resolution models. On the other hand, continuing low confidence was expressed in the sign of low cloud feedback because of a lack of strong observational constraints. However, the AR5 authors noted that high-resolution process models also tended to produce positive low cloud cover feedbacks. The cloud opacity feedback was deemed highly uncertain due to the poor representation of cloud phase and microphysics in models, limited observations with which to evaluate models, and lack of physical understanding. The authors noted that no robust mechanisms contribute a negative cloud feedback.

And on work since:

In the four years since AR5, evidence has increased that the overall cloud feedback is positive. This includes a number of high-resolution modelling studies of low cloud cover that have illuminated the competing processes that govern changes in low cloud coverage and thickness, and studies that constrain long-term cloud responses using observed short-term sensitivities of clouds to changes in their local environment. Both types of analyses point toward positive low cloud feedbacks. There is currently no evidence for strong negative cloud feedbacks.

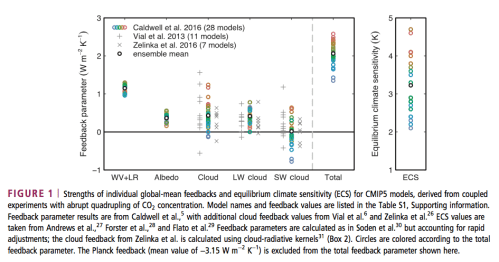

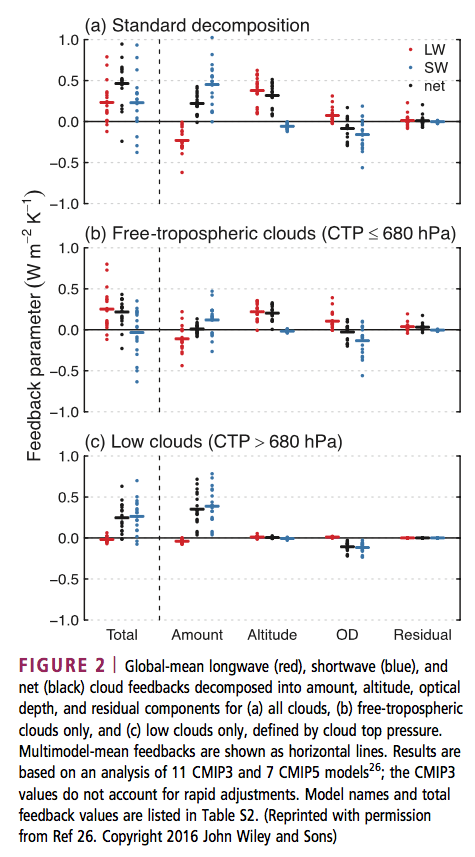

On to Ceppi et al 2017. In the graph below we see climate feedback from models broken out into a few components:

- WV+LR – the combination of water vapor and lapse rate changes (lapse rate is the temperature profile with altitude)

- Albedo – e.g. melting sea ice

- Cloud total

- LW cloud – this is longwave effects, i.e., how clouds change terrestrial radiation emitted to space

- SW cloud – this is shortwave effects, i.e., how clouds reflect solar radiation back to space

Figure 4

Then they break down the cloud feedback further. This graph is well worth understanding. For example, in the second graph (b) we are looking at higher altitude clouds. We see that the increasing altitude of high clouds causes a positive feedback. The red dots are LW (longwave = terrestrial radiation). If high clouds increase in altitude the radiation from these clouds to space is lower because the cloud tops are colder. This is a positive feedback (more heat retained in the climate system). The blue dots are SW (shortwave = solar radiation). If high clouds increase in altitude it has no effect on the reflection of solar radiation – and so the blue dots are on zero.

Looking at the low clouds – bottom graph (c) – we see that the feedback is almost all shortwave, i.e., from changes in the reflection of solar radiation as the amount of low cloud changes. The positive sign corresponds to less low cloud and therefore less reflected sunlight.

Figure 5

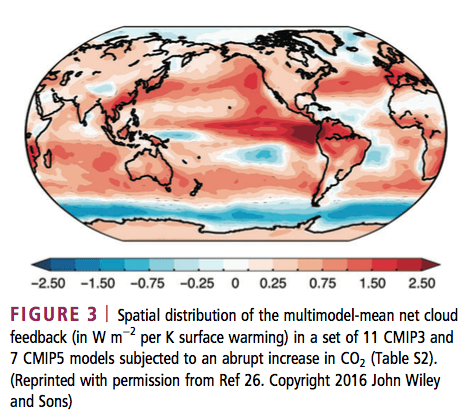

Now a couple more graphs from Ceppi et al – the spatial distribution of cloud feedback from models (note this is different from our figure 1 which showed current cloud radiative effect):

Figure 6

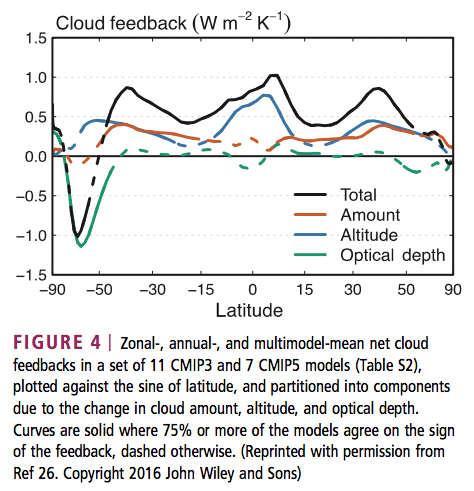

And the cloud feedback by latitude broken down into: altitude effects; amount of cloud; and optical depth (higher optical depth primarily increases the reflection to space of solar radiation but also has an effect on terrestrial radiation).

Figure 7

They state:

The patterns of cloud amount and optical depth changes suggest the existence of distinct physical processes in different latitude ranges and climate regimes, as discussed in the next section. The results in Figure 4 allow us to further refine the conclusions drawn from Figure 2. In the multi-model mean, the cloud feedback in current GCMs mainly results from:

- globally rising free-tropospheric clouds

- decreasing low cloud amount at low to middle latitudes, and

- increasing low cloud optical depth at middle to high latitudes

Cloud feedback is the main contributor to intermodel spread in climate sensitivity, ranging from near zero to strongly positive (−0.13 to 1.24 W/m²K) in current climate models.

It is a combination of three effects present in nearly all GCMs: rising free-tropospheric clouds (a LW heating effect); decreasing low cloud amount in tropics to midlatitudes (a SW heating effect); and increasing low cloud optical depth at high latitudes (a SW cooling effect). Low cloud amount in tropical subsidence regions dominates the intermodel spread in cloud feedback.

Happy Christmas to all Science of Doom readers.

Note – if anyone wants to debate the existence of the “greenhouse” effect, please add your comments to Two Basic Foundations or The “Greenhouse” Effect Explained in Simple Terms or any of the other tens of articles on that subject. Comments here on the existence of the “greenhouse” effect will be deleted.

References

Cloud feedback mechanisms and their representation in global climate models, Paulo Ceppi, Florent Brient, Mark D Zelinka & Dennis Hartmann, WIREs Clim Change 2017 – free paper

Clearing clouds of uncertainty, Mark D Zelinka, David A Randall, Mark J Webb & Stephen A Klein, Nature 2017 – paywall paper

Notes

Note 1: From Ceppi et al 2017: CLOUD-RADIATIVE EFFECT AND CLOUD FEEDBACK:

The radiative impact of clouds is measured as the cloud-radiative effect (CRE), the difference between clear-sky and all-sky radiative flux at the top of atmosphere. Clouds reflect solar radiation (negative SW CRE, global-mean effect of −45W/m²) and reduce outgoing terrestrial radiation (positive LW CRE, 27W/m²), with an overall cooling effect estimated at −18W/m² (numbers from Henderson et al.).

CRE is proportional to cloud amount, but is also determined by cloud altitude and optical depth.

The magnitude of SW CRE increases with cloud optical depth, and to a much lesser extent with cloud altitude.

By contrast, the LW CRE depends primarily on cloud altitude, which determines the difference in emission temperature between clear and cloudy skies, but also increases with optical depth. As the cloud properties change with warming, so does their radiative effect. The resulting radiative flux response at the top of atmosphere, normalized by the global-mean surface temperature increase, is known as cloud feedback.

This is not strictly equal to the change in CRE with warming, because the CRE also responds to changes in clear-sky radiation—for example, due to changes in surface albedo or water vapor. The CRE response thus underestimates cloud feedback by about 0.3W/m² on average. Cloud feedback is therefore the component of CRE change that is due to changing cloud properties only. Various methods exist to diagnose cloud feedback from standard GCM output. The values presented in this paper are either based on CRE changes corrected for noncloud effects, or estimated directly from changes in cloud properties, for those GCMs providing appropriate cloud output. The most accurate procedure involves running the GCM radiation code offline—replacing instantaneous cloud fields from a control climatology with those from a perturbed climatology, while keeping other fields unchanged—to obtain the radiative perturbation due to changes in clouds. This method is computationally expensive and technically challenging, however.
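The definitions in Note 1 can be written compactly. This is a sketch only: the symbols ($R$ for net downward flux at the top of atmosphere, $\lambda_{\text{cloud}}$ for cloud feedback, $T_s$ for global-mean surface temperature) are notation assumed for illustration, not taken from the paper, and the 0.3 figure is the average offset quoted above.

$$
\mathrm{CRE} = R_{\text{all-sky}} - R_{\text{clear-sky}}
$$

$$
\lambda_{\text{cloud}} = \frac{\Delta R_{\text{cloud}}}{\Delta T_s},
\qquad
\frac{\Delta \mathrm{CRE}}{\Delta T_s} \approx \lambda_{\text{cloud}} - 0.3\ \mathrm{W\,m^{-2}\,K^{-1}}
$$

Here $\Delta R_{\text{cloud}}$ is the part of the flux change due to changing cloud properties only, which is why it differs from the raw change in CRE.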

The cloud feedback science of 2000 to 2007.

Joel Norris, Scripps Institution of Oceanography:

“cloud changes since 1952 have had a net cooling effect on the Earth”

“The decrease in reconstructed OLR since 1952 indicates that changes in upper-level cloud cover have acted to reduce the rate of tropospheric warming relative to the rate of surface warming. The increase in reconstructed net upward radiation since 1952, at least at middle latitudes, indicates that changes in cloud cover have acted to reduce the rate of tropospheric and surface warming.”

“The surface-observed low-level cloud cover time series averaged over the global ocean appears suspicious because it reports a very large 5%-sky-cover increase between 1952 and 1997. Unless low-level cloud albedo substantially decreased during this time period, the reduced solar absorption caused by the reported enhancement of cloud cover would have resulted in cooling of the climate system that is inconsistent with the observed temperature record.”

It shows the climate science slowly retreating from former positions. So it is better to cling to models than to take observation seriously. It is possible that cloud cover was increasing for 45 years and has been decreasing a little the last 20 years. The whole 65 years span is most interesting, and maybe shows changes that are zero in sum.

My proposal for cloud feedback science, for global surface temperatures, base it on observation and statistics: Zero feedback is the best null hypothesis. Use climate models to test how different components can work together. Stop using models as speculative devices.

I think that I will correct myself here (after 4 years). There is now plenty of evidence of a global brightening, and that there was a “regime shift” about 1982. Shortwave radiation to the earth’s surface increased, together with ocean heat content. It looks like changing clouds were responsible for much of the global warming from 1983 to 2021. The discussion of the part played by GHGs in this global brightening is not over.

I have some comments on clouds, longwave and shortwave feedback at the end of this comment series.

It seems that when observations (IPCC) disagree with models, then the observations are assumed to be wrong, not the models.

I find it very odd that the pattern of positive cloud feedbacks from models clearly correlates with the measured net negative cloud effect from CERES. Seems to me we need a lot more measurement and a lot less modeling.

Steve,

Did you read the whole paper by Ceppi et al and follow up all the references?

Lots more measurement would be wonderful but in the meantime:

1. We only have 15 years of CERES measurements and we will only have 30 years of measurements when we reach 2032. Perhaps we need 60 years of measurements, which we will reach in 2062? On the other hand, if there is a way to get a better understanding before 2032 or 2062, wouldn’t you want that?

2a. There are lots of measurements apparently backing up the breakdown of cloud feedbacks, along with support from high resolution models. This is what Ceppi et al propose. So please address their points.

2b. GCMs compared with measurement compared with high resolution LES provide the opportunity to test hypotheses in more detail. You don’t want this? What would you propose as the alternative?

Yes, I read the article. It is a review article, with 181 references, most pay-walled. Are you suggesting that someone needs to read 181 references before commenting?

My take is the authors draw two apparently incongruent conclusions: 1) our understanding of cloud feedbacks in models has grown dramatically over the last 20 years, and 2) the models are no closer to agreeing with each other about cloud feedbacks than they were a decade ago. And what do they propose? More research on this really hard problem. And better parameterizations of many processes below the model scale. And more ‘experiments’ with models using unrealistic conditions like instantaneous quadrupling of CO2. In other words, more of the same thing that has already been so effective over three decades at moving the models toward ever better agreement with each other and ever better consistency with measured reality. The contrast with Bjorn Stevens’ response to criticism of his paper on self-organization of convection could not be more clear: to paraphrase, “what we have been doing isn’t working, so we need a different approach”.

I am reminded by this review article of the presence of one recommendation in most all reports written by hired consultants: “you need more consulting”. My experience is that recommendation is often very wrong, and I don’t think that should come as a surprise to anyone. (I worked as a technical consultant for more than 15 years.)

If CERES measurements are useful, then we should be able to see clear trends over 15 years, and certainly over 30 years. If the data are too noisy/uncertain to provide meaningful confirmation of model projections (e.g., the pattern of global radiative balance has seen a clear and significant trend, just as predicted by models), and to tell us which models get clouds “right” and which get them “wrong”, then yes, we need more and/or better data… as much as it takes. Progress is never made doing what you already know doesn’t work.

Yes, the models have gotten quite a bit better at matching reality and in agreeing with each other over the last three decades. They have objectively improved on many metrics, and do a much better job of representing smaller-scale phenomena than they used to. So, it seems wise to continue this.

Even if there was still the same amount of disagreement within models, though, that doesn’t mean you abandon them. Models, as the representation of our knowledge of how a system works, are the ultimate goal of any science. It doesn’t always have to be computer models, but the goal is still to understand reality through simplified conceptual models. This is the same guiding principle across every field.

So we keep improving our conceptual understanding of how the Earth’s climate system works. That goes hand-in-hand with improving our models; the conceptual understanding is the same whether or not you’ve programmed it into a computer.

I ask myself this question:

– we can always point to the deficiencies of a model (or a group of models)

– this is because all models are wrong – although some are useful

So at what point do we find model results useful? Never?

Models are generally useful when they make accurate predictions over a significant period of time. (That is, more accurate than a simple extrapolation of recent history.) Model predictions used to justify huge public and private costs, everywhere on Earth, have to 1) agree closely with each other, and 2) make remarkably accurate predictions, not hind-casts, over multi-decadal periods. Neither of these are close to the state of GCMs.

SOD asked: “So at what point do we find model results useful? Never?”

For me, when the models reproduce the large changes in OLR and OSR from clear and cloudy skies that accompany the seasonal cycle of warming – without having been tuned to do so. Hopefully, the agreement will be regional as well as global.

I ask myself in what way climate models are useful. This is a perturbed physics ensemble from Rowlands et al 2012. It is conveniently compared to the CMIP opportunistic ensemble. The claim is an even broader range of outcomes.

The 1000’s of solutions in the perturbed physics ensemble diverge exponentially as a result of sensitive dependence on initial conditions – and the spread is ‘irreducible imprecision’. Sensitive dependence and structural instability are intrinsic properties of climate models resulting from the nonlinear nature of the core equations of fluid transport.

“Lorenz was able to show that even for a simple set of nonlinear equations (1.1), the evolution of the solution could be changed by minute perturbations to the initial conditions, in other words, beyond a certain forecast lead time, there is no longer a single, deterministic solution and hence all forecasts must be treated as probabilistic. The fractionally dimensioned space occupied by the trajectories of the solutions of these nonlinear equations became known as the Lorenz attractor (figure 1), which suggests that nonlinear systems, such as the atmosphere, may exhibit regime-like structures that are, although fully deterministic, subject to abrupt and seemingly random change.” Slingo and Palmer 2011
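As an illustration of the sensitive dependence described in the quotation, here is a minimal sketch that integrates the classic Lorenz (1963) system twice, from initial conditions differing by one part in 10⁸, and tracks how far apart the two trajectories drift. The parameter values (σ = 10, ρ = 28, β = 8/3), the fourth-order Runge-Kutta step, the time step and the starting point are conventional choices assumed for this example; none of them comes from the papers discussed, and a three-variable toy system says nothing directly about a GCM.

```python
def lorenz(state, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Right-hand side of the Lorenz (1963) equations."""
    x, y, z = state
    return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)


def rk4_step(state, dt):
    """Advance the state by one classical Runge-Kutta (RK4) step."""
    def shifted(base, slope, scale):
        return tuple(b + scale * s for b, s in zip(base, slope))

    k1 = lorenz(state)
    k2 = lorenz(shifted(state, k1, dt / 2))
    k3 = lorenz(shifted(state, k2, dt / 2))
    k4 = lorenz(shifted(state, k3, dt))
    return tuple(
        s + dt / 6 * (a + 2 * b + 2 * c + d)
        for s, a, b, c, d in zip(state, k1, k2, k3, k4)
    )


def separation_history(perturbation=1e-8, dt=0.01, steps=4000):
    """Distance between two runs that start `perturbation` apart in x."""
    a = (1.0, 1.0, 1.0)
    b = (1.0 + perturbation, 1.0, 1.0)
    history = []
    for _ in range(steps):
        a, b = rk4_step(a, dt), rk4_step(b, dt)
        history.append(sum((p - q) ** 2 for p, q in zip(a, b)) ** 0.5)
    return history


history = separation_history()
print(f"separation after the first step: {history[0]:.2e}")
print(f"largest separation over the run: {max(history):.2f}")
```

The separation starts at the size of the perturbation and ends up comparable to the size of the attractor itself, which is the sense in which a single deterministic forecast loses meaning beyond some lead time.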

The implications for opportunistic ensembles are profound. Non-unique solutions from different models are compared without questioning the theoretical basis for individual solution choice. It is done on the basis of a posteriori solution behavior – i.e. the solution looks good without having any rigorous basis for the choices made.

We do of course have regime like shifts in oceans and atmosphere. One of these is of course the ENSO quasi standing wave in the globally coupled, spatio-temporal chaos of Earth’s flow field. ENSO origins are in upwelling in the eastern and central Pacific. This is influenced by blocking patterns spinning up ocean gyres in the north and south in response to polar surface pressure changes. Models are linking polar surface pressure changes to solar UV/ozone chemistry – intrinsically a better role for models in process formulation. e.g. https://www.nature.com/articles/ncomms8535

The significance for cloud is in the response to changing sea surface temperature – for which there is theoretical – e.g. http://aip.scitation.org/doi/abs/10.1063/1.4973593 – and both surface and satellite observation – e.g. – http://science.sciencemag.org/content/325/5939/460 – http://journals.ametsoc.org/doi/pdf/10.1175/JCLI3838.1 – but the future of sea surface temperature is unknowable. It seems a relatively large effect and ENSO shows extreme variability over many millennia.

“Figure 12 shows 2000 years of El Nino behaviour simulated by a state-of-the-art climate model forced with present day solar irradiance and greenhouse gas concentrations. The richness of the El Nino behaviour, decade by decade and century by century, testifies to the fundamentally chaotic nature of the system that we are attempting to predict. It challenges the way in which we evaluate models and emphasizes the importance of continuing to focus on observing and understanding processes and phenomena in the climate system. It is also a classic demonstration of the need for ensemble prediction systems on all time scales in order to sample the range of possible outcomes that even the real world could produce. Nothing is certain.” op. cit.

A news release about the Ceppi paper.

STUDY DISCOVERS WHY GLOBAL WARMING WILL ACCELERATE AS CO2 LEVELS RISE

SOD and Steve, Let me make a few points:

1. A recent paper Zhao et al on a new GFDL GCM makes the following point — “The authors demonstrate that model estimates of climate sensitivity can be strongly affected by the manner through which cumulus cloud condensate is converted into precipitation in a model’s convection parameterization, processes that are only crudely accounted for in GCMs. In particular, two commonly used methods for converting cumulus condensate into precipitation can lead to drastically different climate sensitivity, as estimated here with an atmosphere–land model by increasing sea surface temperatures uniformly and examining the response in the top-of-atmosphere energy balance. The effect can be quantified through a bulk convective detrainment efficiency, which measures the ability of cumulus convection to generate condensate per unit precipitation. The model differences, dominated by shortwave feedbacks, come from broad regimes ranging from large-scale ascent to subsidence regions. Given current uncertainties in representing convective precipitation microphysics and the current inability to find a clear observational constraint that favors one version of the authors’ model over the others, the implications of this ability to engineer climate sensitivity need to be considered when estimating the uncertainty in climate projections.” This is I think alarming in terms of relying on GCM’s for future projections.

2. Nic Lewis makes the following point regarding GCM predicted cloud fraction by latitude: “Even if the CMIP5 average water vapour + lapse rate feedback of ~1.0 Wm−2 °C−1 were correct, combining it with the Planck and albedo feedbacks would only generate an ECS of ~2°C, much lower than the diagnosed ECS values of the CMIP5 models used to generate projections of warming over this century, which average 3.4°C. The difference relates primarily to positive cloud feedbacks in AOGCMs. Clouds are unresolved sub-grid scale phenomena in AOGCMs and are represented by parameterized approximations. Clouds at different levels have very different effects. Low clouds generally cool the Earth by reflecting incoming short-wave solar radiation, whilst having little effect on outgoing long-wave radiation (although they are opaque to long-wave radiation, most of it that leaves Earth is emitted higher in the atmosphere). High level, thinner, clouds generally warm the Earth by transmitting most short-wave radiation, but blocking outgoing long-wave radiation. Current models do not even succeed in representing basic features such as total cloud extent at all accurately, as this graph comparing percentage total cloud fraction in CMIP5 AOGCMs with that per satellite observations shows:” [unfortunately pasting the plot is beyond my meager computer skills, but you can find it at Nic’s web site.]

3. Convection, the main cloud generating phenomenon in the tropics, is an ill-posed problem. It is unlikely in my view that current low resolution GCMs will get much right here beyond a small number of outputs which can be used to tune the parameters. As shown in 1, however, these processes can have very large effects on the ECS of a GCM. At the least, this points to a huge gap in our understanding of what to tune and how one can meaningfully constrain GCM outputs of interest to policy makers.
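The arithmetic in point 2 can be sketched with the simple linear feedback relation ECS ≈ F₂ₓ / (−λ), where λ is the sum of the individual feedbacks. The forcing of 3.7 W/m² and the water vapour + lapse rate feedback of 1.0 W/m²/K appear in the text above; the Planck (−3.2 W/m²/K) and albedo (0.3 W/m²/K) values are typical round numbers assumed here for illustration and are not taken from the quoted note. The cloud feedback range is the one quoted from Ceppi et al in the article. Treat the output as a rough illustration, not as any model's diagnosed ECS.

```python
F_2XCO2 = 3.7   # W/m^2, forcing from doubling CO2 (from the article)
WV_LR = 1.0     # W/m^2/K, water vapour + lapse rate (from the quotation)
PLANCK = -3.2   # W/m^2/K, assumed typical value (not from the source)
ALBEDO = 0.3    # W/m^2/K, assumed typical value (not from the source)


def ecs(cloud_feedback: float) -> float:
    """Equilibrium warming (K) for doubled CO2 under a linear feedback sum."""
    net_feedback = PLANCK + WV_LR + ALBEDO + cloud_feedback
    if net_feedback >= 0.0:
        raise ValueError("net feedback must be negative for a stable climate")
    return -F_2XCO2 / net_feedback


# Zero cloud feedback, then the two ends of the model range quoted
# from Ceppi et al (-0.13 to 1.24 W/m^2/K).
for label, cloud in (("none", 0.0), ("low end", -0.13), ("high end", 1.24)):
    print(f"cloud feedback {label:>8} ({cloud:+.2f}): ECS ~ {ecs(cloud):.1f} K")
```

Because the net feedback sits in the denominator, the same increment of cloud feedback moves ECS much more when the total is already close to zero, which is one way to see why cloud feedback dominates the spread.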

dpy6629,

For interested readers, we had a look at a similar paper – Cloud tuning in a coupled climate model: Impact on 20th century warming, Jean-Christophe Golaz, Larry W. Horowitz, and Hiram Levy II, GRL (2013) – in Models, On – and Off – the Catwalk – Part Five – More on Tuning & the Magic Behind the Scenes and then in the comments also discussed Zhao et al.

I’m looking forward to digging through the papers referenced by Ceppi et al to see how well LES models match up with observations and how well both match up on the FAT (fixed anvil temperature) hypothesis, the low cloud amount and other cloud feedbacks.

One of the papers I was just reading (probably a Ceppi reference) made this same point and we can also see it in figure 4 in the article.

Do you have a link?

The Nic Lewis quotation comes from: Equilibrium Climate Sensitivity and Transient Climate Response – the determinants of how much the Earth’s surface will warm as atmospheric CO2 increases, point 21.

The best I have, SOD, is this from Nic Lewis. In my experience he is very reliable in reporting others’ findings.

briefing-note-on-climate-sensitivity-etc_nic-lewis_mar2016.pdf

See particularly the later points.

Well, good luck with the LES modeling. Here’s a rare paper on grid sensitivity of DES, which uses LES in the separated flow regions away from the wall.

https://arc.aiaa.org/doi/full/10.2514/1.J055685

I still wonder what rigorous methods one can use to assess the accuracy of these methods. You are aware that LES results will be dependent on grid density so grid convergence is very challenging.

In any time accurate turbulent simulation, the adjoint diverges and this fact makes classical numerical error control methods impossible. In the absence of numerical error control, it seems to me problematic to separate the numerical noise from the signal.

I would be quite interested in any alternatives you uncover in your reading.

The graph referenced in point 21 was taken from Propagation of Error and The Reliability of Global Air Temperature Projections – Patrick Frank

JCH,

In that case the graphic is this one:

SOD, I value highly your posts on recent papers like this one. It’s always informative and interesting and I thank you for your work. It’s a great forum for learning about climate science.

However, perhaps what SteveF is frustrated by is how uncertain a lot of the results are given the poor quality of the data and the models. I recently was investigating something I saw elsewhere on the web and it led me to a RealClimate post about a 2005 Nature paper stating that “high aerosol forcing will mean much more warming in the future.” This paper was raising the possibility that ECS could be as high as 10C if aerosol forcing was say -2W/m2. Schmidt’s post was basically debunking that paper.

http://www.realclimate.org/index.php/archives/2005/07/climate-sensitivity-and-aerosol-forcings/

The reason it was interesting to me was the graphics of ECS vs. total aerosol forcing based on a simple energy balance model that foreshadowed some of Nic Lewis’ work. Unfortunately, the original RealClimate post does not have the graphs probably due to data storage limitations, but I found it elsewhere and indeed according to Schmidt’s graphic if total aerosol forcing is around -1.0 W/m2, ECS is about 1.5C.

In any case, there are many more examples of “alarming” papers that say it’s going to be much worse than we thought that turn out to be of low quality.

The other point I would make, SOD, is that it is wise to exercise skepticism with regard to the literature in a politically charged field like climate science. I know in CFD that the literature is untrustworthy because most of it suffers from selection bias. People want to keep their funding stream alive so they tend to “select” their “best” results and file in the desk drawer the less convincing results. The bad results can always be explained as due to bad gridding, numerical instabilities, unconverged iterations, and finally if you are brazen you can claim the data must be wrong. This is equivalent to rounding up and burning the usual witches when things don’t work as you would want.

I believe that in climate science there is a group of activist scientists who are reluctant to publish results that are not alarming. The best example of this is the IPCC redoing an energy balance paper’s calculations using a uniform prior in its chapter on ECS. That of course led to a much higher estimate than the original paper. That’s why Lewis is so valuable in this field.

dpy6629,

In this blog – check the Etiquette – we ignore presumed motivations.

The ideas in the Etiquette and in About this Blog are the principles behind the blog. There are much better blogs to debate the motivations, nefarious activities and subconscious plotting of climate scientists.

But not here.

Instead, commenters should limit themselves to presenting evidence against (or for) a point of view.

OK, sorry. I’ve seen this myself in CFD where the bias is not usually intentional. There are always rationalizations and the culture tends to provide its own survival imperative. As in any field, some people are vastly worse than others. This can be a function of personality. There is plenty of this bias in all fields of science.

SOD: I haven’t studied the material above carefully enough, but everything appears to be comparing models to models. Let’s compare models to observations: the change in OLR and reflected SWR (OSR) from both clear and cloudy skies associated with the 3.5 K of seasonal warming the planet undergoes every year. This arises from an average of about 10 K of warming in the NH (with its lower heat capacity responding to seasonal increased irradiation) and 3 K of cooling in the SH. Unfortunately, the data is reported in terms of gain factors, not W/m2/K. A gain factor of f will increase the no-feedbacks climate sensitivity by a factor of (1/(1-f)).
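To make the gain-factor relation concrete, here is a small sketch that evaluates 1/(1−f) for a few values of f. The function name and the sample values are chosen for illustration only; they are not the gain factors reported in the figures described below.

```python
def amplification(f: float) -> float:
    """Factor by which a feedback gain f scales the no-feedback response."""
    if f >= 1.0:
        raise ValueError("gain factor must be below 1 for a stable response")
    return 1.0 / (1.0 - f)


# Negative gains damp the response, positive gains amplify it.
for f in (-0.5, 0.0, 0.25, 0.5):
    print(f"f = {f:+.2f} -> amplification {amplification(f):.2f}")
```

The relation is strongly nonlinear as f approaches 1, so the same uncertainty in f translates into a much larger uncertainty in sensitivity when the gain is already large and positive.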

The gain factors of longwave feedback that operates on the annual variation of the global mean surface temperature. (A) All sky. (B) Clear sky. (C) Cloud radiative forcing. Blank bars on the left indicate the gain factors obtained from satellite observation (ERBE, CERES SRBAVG, and CERES EBAF) with the SE bar. Black bars indicate the gain factors obtained from the models identified by acronyms at the bottom of the figure (Methods, Data from Models). The vertical line near the middle of each frame separates the CMIP3 models on the left from the CMIP5 models on the right.

The gain factors of solar [reflected SWR] feedback that operate on the annual variation of the global mean surface temperature. (A) All sky. (B) Clear sky. (C) Cloud radiative forcing. See Fig. 3 legend for further explanation.

The evidence is undeniable that AOGCMs have serious problems reproducing the large changes that are seen annually in response to the seasonal 3.5 K increase in GMST – except for an LWR feedback of about -2.1 W/m2/K through clear skies (Planck+WV+LR). Most models show LWR feedback from all skies that is more positive than observed.

Until AOGCMs are capable of better reproducing these seasonal feedbacks, why should we pay any attention to model vs. model comparisons? Why does anyone believe we can learn anything from comparing one flawed model to another? (Are climate models tuned to produce LWR feedback in response to seasonal warming of about -2.1 W/m2/K? If not, then this is something they get right.)

———————————

The strongly positive SWR gain factors observed with seasonal warming may not be relevant to global warming. The error bars are much larger because reflection of OSR (unlike LWR) doesn’t change linearly with monthly Ts. Some changes are lagged, particularly sea ice, which has a minimum in September and a maximum in March in the NH and the opposite in the SH. The composite has two maxima during summer in the NH: the larger in September and the second several months earlier. The SH has very little land covered by seasonal snowfall. So feedbacks in OSR observed through clear skies during seasonal warming may have nothing to do with ice-albedo feedback in global warming.

The monthly change in OSR from cloudy skies is also not very linear with temperature and appears to have lagged relationships. So observations tell us that climate models represent important seasonal feedbacks very poorly, but they don’t tell us what feedbacks will accompany global warming (except perhaps clear-sky LWR feedback: Planck+WV+LR).

In my own field (materials science), there’s quite a bit you can learn from comparing one flawed model to another. It helps you understand how changing the underlying parameters, or gridding, or numerical scheme, etc., can change the results.

Basically, it’s an exploration of parameter space. It adds to our understanding of how things work; how varying this fundamental parameter changes that derived one.

It looks like there’s a fair amount of disagreement even among the observations. CERES EBAF differs from the other two by a not-insubstantial amount.

If cloud feedback is net strongly negative now, but increases in cloud cover will cause positive feedback, then one would assume that increasing cloud cover from zero to x%, where x is less than 30, must have strong initial negative feedback change per percent cover which declines as cloud cover increases and becomes positive at some point less than 30% cover. It’s not at all clear to me how this would work. It’s also not clear to me that 100% cloud cover would result in a warmer planet. The nuclear winter folks didn’t think so, not that they were particularly believable.

So let’s say that the cloud cover fraction doesn’t change, but the location and cloud top altitude changes as a result of warming result in less negative feedback. The problem here is that cloud cover data in models is wrong now. Why should we believe that the model predictions of trends when starting from the wrong initial conditions will somehow be correct? As I’ve said before, just because researchers haven’t found a strongly negative cloud feedback model doesn’t mean there isn’t one.

Some systematic biases in models:

“Abstract: The Cloud-Aerosol Lidar and Infrared Pathfinder Satellite Observation (CALIPSO) satellite provides robust and global direct measurements of the cloud vertical structure. The GCM-Oriented CALIPSO Cloud Product is used to evaluate the simulated clouds in five climate models using a lidar simulator. The total cloud cover is underestimated in all models (51% to 62% vs. 64% in observations) except in the Arctic. Continental cloud covers (at low, mid, high altitudes) are highly variable depending on the model. In the tropics, the top of deep convective clouds varies between 14 and 18 km in the models versus 16 km in the observations, and all models underestimate the low cloud amount (16% to 25%) compared to observations (29%). In the Arctic, the modeled low cloud amounts (37% to 57%) are slightly biased compared to observations (44%), and the models do not reproduce the observed seasonal variation.”

From: About the observation of cloud changes due to greenhouse warming

Hélène Chepfer 2014

Source: CERES-ScaraB-GERB-Toulouse-Oct2014.pdf

Let us sum up models vs observation:

The total cloud cover is underestimated except in the Arctic.

The low cloud amount is underestimated compared to observations.

Models do not reproduce the observed seasonal variation.

I would not think that these five climate models have less systematic bias than other models. But I find it difficult to dig out such data.

Other papers find that there hasn’t been any decrease in cloud cover over the last 25 years (perhaps with the exception of some change in distribution), or even over 60 years (Eastman et al. 2011).

JCH,

Or it could be related to a multi-decadal cycle. We won’t know for another few decades.

Observed cloud cover: “Overall, most of the studies reviewed suggest an increase in the TCC since the late 19th century, and in particular from the early until the mid/late-20th century, both over land and ocean. Although possible artifacts in these trends cannot be ruled out, it seems difficult to argue that these possible biases in the observations could explain the significant increases observed worldwide,” TCC is total cloud cover.

For Spain, which is the object of the study: “The linear trend for the annual mean series, estimated over the 1866–2010 period, is a highly remarkable (and statistically significant) increase of +0.44 % per decade, which implies an overall increase of more than +6 % during the analyzed period. These results are in line with the majority of the trends observed in many areas of the world in previous studies, especially for the records before the 1950s when a widespread increase of TCC can been considered as a common feature.”

From: Increasing cloud cover in the 20th century: review and new findings in Spain. A. Sanchez-Lorenzo, J. Calbó and M. Wild, 2012
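The "more than +6 %" figure in the quoted passage follows directly from the trend and the record length. A quick check, using only the numbers in the quote (the variable names are just for illustration):

```python
# Numbers taken from the quoted passage on Spanish total cloud cover.
trend_per_decade = 0.44          # percentage points of TCC per decade
start_year, end_year = 1866, 2010

decades = (end_year - start_year) / 10     # 14.4 decades
total_change = trend_per_decade * decades  # cumulative change implied by a linear trend

print(f"{decades:.1f} decades -> {total_change:.2f} % total increase")
```

This assumes the trend is applied linearly over the whole period, which is what a single fitted slope implies.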

Yes, and that is why I noted the clear correlation between the pattern of current net cooling from clouds and the model projected net warming effect from changes in cloud cover. We are supposed to believe clouds cause strong net cooling at present, but less strong net cooling in the future, with the pattern in the projected drop in net cooling closely following the pattern of current net cooling due to clouds. Which indicates there is a “Goldilocks” fraction of cloud cover which maximizes net cooling… any more or any less reduces net cloud cooling. Seems to me quite a stretch. Some might say “implausible”.

Sorry, the above comment was a reply to DeWitt’s comment.

I don’t think so. If you’re just increasing the cloud cover (keeping the same ratios of types of clouds), then I’d expect that the net cooling would continue to increase.

It’s more that the type / altitude / location of clouds changes. You aren’t just keeping the cloud types the same.

So I’m not really following you. I’m not sure why there couldn’t be local maxima in the cooling effects of clouds; why the cloud feedbacks can’t switch sign. Do you have more reasoning behind this?

Windchaser,

“Do you have reasoning behind this?”

Of course.

As DeWitt pointed out above: “The problem here is that cloud cover data in models is wrong now.” It is not like the models are so damned accurate about cloud cover that it beggars belief, it is quite the opposite: they are so damned inaccurate that it beggars belief. (see the Nic Lewis graph of cloud cover posted by SoD above on December 27)

You have a bunch of models which broadly disagree with each other about cloud feedbacks, are “no closer to agreement than a decade ago” about cloud feedbacks, and which generate, both individually and on average, a clearly inaccurate pattern of cloud cover when compared to measured cover. Considering that cloud feedbacks in GCMs represent a big fraction (most?) of the difference between empirical and GCM estimates of sensitivity (~1.9C vs ~3.4 C per doubling), there seems to me plenty of reason to doubt model projections of future cloud changes. If the models are correct about how warming changes cloud cover, that should already be clear in CERES data; it’s not.

Not saying the graph is wrong, but it came from what I believe is called a poster at an AGU convention. Are they peer reviewed?

JCH,

Not as far as I know. I think, however, you may have entirely too much faith in the screening capability of the peer review system. I wouldn’t take a peer reviewed paper as gospel. I think the credibility level of a poster at a major conference would be about the same as for a peer reviewed paper in an average journal. The level of skepticism should be similar.

But even the major journals can publish questionable science. See, for example, the Steig et al., 2009 cover article in Nature about the pattern of warming in Antarctica since 1957:

https://www.nature.com/articles/nature07669

That was found to have significant errors by O’Donnell et al., 2010:

http://journals.ametsoc.org/doi/abs/10.1175/2010JCLI3656.1

The authors had a difficult time getting their paper published because, apparently, Steig was one of the reviewers.

DeWitt,

“The authors had a difficult time getting their paper published because, apparently, Steig was one of the reviewers.”

There is no ‘apparently’ involved; Eric Steig was the reviewer who demanded the authors not claim Steig et al was wrong.

I think, however, you may have entirely too much faith in the screening capability of the peer review system.

Dewitt – I view peer review as a ticket to a more comprehensive review and nothing more than that. It obviously does not mean the paper is error free or advances the science in any way, which has to be a major goal of the exercise: advancement.

In the case of Steig09 and the subsequent O’Donnell et al paper, perhaps I am wrong, but I suspect there is an almost zero likelihood that any of the authors of the O’Donnell paper would ever in their entire lives have written a paper about temperature trends in Antarctica if not for Steig09. Sounds like science was advanced.

JCH,

The history of O’Donnell et al is actually interesting. After the Steig Nature paper (with the image of a uniformly warming Antarctica on the cover), O’Donnell, Jeff Condon, McIntyre, and others commented on the Realclimate web site, where Steig had posted a writeup, and said that the reconstruction didn’t look right (for a number of reasons, including obvious discrepancies with ground station data). Despite offering clear explanations of what was wrong, Ryan O’Donnell, Jeff Condon and others were dissed by the Realclimate crew, who said they didn’t know what they were talking about. What those folks at Realclimate did not know was that O’Donnell was very familiar with the type of ‘inverse’ problem in Steig et al, and could see that they were mathematically smearing warmth from the peninsula region over the whole continent. In the end Steig pretty much told them to go write their own paper and stop wasting his time. That was a mistake. Yes, science ultimately advanced (people finally understood that most of Antarctica was not actually warming rapidly) but it could have been a lot quicker, easier, and less contentious had Steig et al been willing to listen.

JCH quoted: “Here we show that several independent, empirically corrected satellite records exhibit large-scale patterns of cloud change between the 1980s and the 2000s that are similar to those produced by model simulations of climate with recent historical external radiative forcing.”

What does the phrase “independent, empirically-corrected satellite records” actually mean? It means that the roughly 3% reduction in ISCCP cloud cover observed since 1985 is removed by empirical correction – i.e. corrections without any physical rationale. It means elimination of most of the variability in PATMOS cloud cover (which is as big as ISCCP variability, but not a gradual reduction). Any variability that correlates with satellite zenith angle or sun angle (equatorial crossing time) is eliminated using a different fit for each grid cell. And any variability that might be attributed to artifacts from a single geostationary satellite is removed. All of these corrections are hypotheses that have not been tested. The authors say:

“Note that any real variability in cloud fraction that happens to be correlated with variability in artifact factors will be removed by our correction procedure, but we consider a corrected dataset with some real variability removed preferable to a dataset with no real variability removed but dominated by artifacts.”

http://journals.ametsoc.org/doi/10.1175/JTECH-D-14-00058.1
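To make the quoted caveat concrete, here is a minimal sketch of what "removing variability correlated with an artifact factor" does to a single grid cell. It is not the procedure from the linked paper; it is a plain least-squares regression on synthetic data, and the artifact factor, the size of the real decline, and the noise level are all assumptions chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 300                                   # months in one hypothetical grid cell
t = np.linspace(-1.0, 1.0, n)

artifact_factor = t                       # assumed: a steadily drifting viewing angle
real_change = -1.0 * t                    # assumed: a genuine decline in cloud cover (%)
spurious_change = -2.0 * artifact_factor  # assumed: instrument-induced decline (%)
cloud = 60.0 + real_change + spurious_change + rng.normal(0.0, 0.2, n)

# Fit cloud cover on the artifact factor (with an intercept) and subtract the fitted part.
X = np.column_stack([np.ones(n), artifact_factor])
coef, *_ = np.linalg.lstsq(X, cloud, rcond=None)
corrected = cloud - coef[1] * artifact_factor

# Trend remaining after correction: the real decline is removed along with the
# spurious one, because the two are collinear in this example.
trend_before = np.polyfit(t, cloud, 1)[0]
trend_after = np.polyfit(t, corrected, 1)[0]
print(f"trend before: {trend_before:.2f}, after: {trend_after:.2f}")
```

In this toy case the regression cannot tell the real decline from the artifact, so both disappear. Whether that happens in the actual dataset depends on how strongly real changes correlate with the artifact factors, which is the untested part.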

According to the multi-model CMIP5 mean, anthropogenic factors should have caused changes of up to +/-0.5 to 1.0% in cloud cover over the last 25 years in some grid cells. The observed change in cloud cover in some grid cells is greater than 3%. If the corrected observations and models agree in trend direction, that is called agreement.

The data suggesting that cloud top heights in the tropics have risen may be somewhat more robust, but we are still talking about changes of about 0.5% over 25 years.

Then one still faces the issue of converting changes in cloud amount and cloud altitude with warming into changes in OLR and OSR.

Above, I asked why anyone would pay attention to this noisy, inconclusive data when we have very clear evidence from the seasonal cycle that LWR cloud feedback is slightly negative, not positive as most models predict? And there is no consensus among models about seasonal SWR cloud feedback.

The paper has been cited 58 times. I went through all of the ones cited that have complete copies available. The scientists who have cited it appear to be in the forefront of research to do with clouds. One was cited in opposition above. He used a result of this study in recent work. Stevens has a goal.

Frank asked: “Why anyone would pay attention to this noisy, inconclusive data (Norris 2016) when we have very clear evidence from the seasonal cycle that LWR cloud feedback is slightly negative, not positive as most models predict (Tsushima and Manabe 2013)? And there is no consensus among models about seasonal SWR cloud feedback.”

JCH replied: “The paper has been cited 58 times”, and reviewed the background of those citing the work.

Tsushima and Manabe 2013 has been cited only 4 times, despite the fact that Syukuro Manabe is one of the most respected figures in climate science. None of these citations endorsed the conclusion of this paper: Three appear to be large review articles and the fourth was an E&E paper that claims ECS is about 0.15K (that has only been cited once by its own author). Could this be because Norris 2016 says observations of clouds agree with models while Tsushima and Manabe 2013 say models are biased (and their data shows they are mutually inconsistent)?

Immediately below is the data that defines LWR feedback during seasonal warming. I challenge anyone to show me another climate science paper with tighter observational data. This is because you are looking at the biggest temperature change and seasonal warming has been observed once a year for the last thirty years. I posted the results for models above.

The globally averaged, monthly mean TOA flux of outgoing longwave radiation (Wm−2) over all sky (A) and clear sky (B) and the difference between them (i.e., longwave CRF) (C) are plotted against the global mean surface temperature (K) on the abscissa. The vertical and horizontal error bar on the plots indicates SD. The solid line through scatter plots is the regression line. The slope of dashed line indicates the strength of the feedback of the first kind [Planck feedback alone]. [The values on the y-axis represent heat lost and technically should have a negative sign.]
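The regression described in that caption can be sketched as follows. This uses synthetic monthly values rather than the ERBE or CERES data: the 2.1 W/m2/K slope and the roughly 3.5 K seasonal range come from the discussion above, while the baseline temperature, baseline flux, and noise level are assumptions made only so the example runs.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic 12-month climatology (assumed values, not observations).
months = np.arange(12)
ts_mean, olr_mean = 287.0, 240.0      # K and W/m^2, hypothetical baselines
ts = ts_mean + 1.75 * np.sin(2 * np.pi * (months - 3) / 12)  # ~3.5 K peak to peak
true_slope = 2.1                      # W/m^2/K, the figure quoted in the thread
olr = olr_mean + true_slope * (ts - ts_mean) + rng.normal(0.0, 0.1, size=12)

# Ordinary least-squares fit of OLR against Ts. The slope is the change in
# outgoing longwave flux per kelvin of seasonal warming.
slope, intercept = np.polyfit(ts, olr, 1)
print(f"fitted slope: {slope:.2f} W/m^2/K")
```

The same fit applied separately to all-sky and clear-sky fluxes gives the two slopes whose difference is the longwave cloud contribution.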

I don’t have the ability to paste figures from Norris (2016), but the observed change in cloud coverage shows changes 3-5X those predicted by climate models (See vertical scale.) Some other caveats:

“This is because observational systems originally designed for monitoring weather have lacked sufficient stability to reliably detect cloud changes over decades unless they have been corrected to remove spurious artifacts.”

“Note that any real variability in cloud fraction that happens to be correlated with variability in artifact factors will be removed by our correction procedure, but we consider a corrected dataset with some real variability removed preferable to a dataset with no real variability removed but dominated by artifacts.”

Worst of all, Norris (2016) tells us that models qualitatively agree with highly processed observations, but it doesn’t tell us anything quantitative about cloud feedback.

So why does a study that definitively shows AOGCMs are biased (and mutually inconsistent) get no significant citations in more than 4 years while Norris has 58 citations in 1.5 years?

Our host dislikes speculation about motivations. So I’ll simply ask: “Without AOGCMs, what does climate science have to offer policymakers?”

Frank,

“Without AOGCMs, what does climate science have to offer policymakers?”

Well, there is broad consensus that equilibrium climate sensitivity is unlikely to be less than 1.5C per doubling, and transient sensitivity unlikely to be less than 1C. It is therefore possible to give policymakers (in democracies, that would ultimately be us!) lower bound estimates for future warming for any projected rise in CO2, and (at least in theory) make lower bound estimates of future costs and benefits. Unfortunately “future costs and benefits” are as often as not subject to wildly differing estimates, because of both the complexity of making projections, and because people assign very different ‘values’ to the same actual outcome… eg. What is the “cost” of having a 33% lower population of polar bears? (assuming that was a quantifiable outcome)

Frank,

So why does a study that definitively shows AOGCMs are biased (and mutually inconsistent) get no significant citations in more than 4 years while Norris has 58 citations in 1.5 years.

The reason the Norris paper has a high number of citations is that it is introducing a useful dataset. They get a citation whenever anybody uses that dataset.

Why doesn’t Tsushima and Manabe 2013 have more citations? We’d really need to question why it would be cited to understand that.

You seem to be impressed that the paper demonstrates biases in GCMs but actually that’s been done in hundreds, even thousands, of other papers. Just type “cloud biases GCMs” into google scholar for a subset.

In terms of the technique they propose for quantifying cloud and radiation biases based on seasonal cycle variations, this 2013 paper is really just rehashing one from the same authors from 2005, which has been cited 22 times according to the journal (38 times according to google). However, this seems to have been fairly quickly outdone in terms of model error quantification methods in the climate science world by Gleckler et al. 2008, which has 360 (674) citations and provided the methodological basis for much of the demonstration of model biases in IPCC AR5 Chapter 9.

In terms of a seasonal cycle cloud bias assessment being applied to the latest generation of CMIP models, this paper was already outdone by one published a few months earlier by the same lead author. It has been cited 20 (30) times, including numerously by AR5 Chapter 9. Tsushima and Manabe 2013 seems to have been a bit of a highlights reel spin off from that more substantial assessment.

Ultimately there doesn’t seem to be a great reason for citing that paper because it doesn’t bring anything new to the table.

Thank you for the presentation of Tsushima and Manabe, Frank.

I find it very convincing. It doesn’t only show model bias and spread, but it also shows that models get it systematically wrong. And it will be hard for many scientists to admit, when they are invested in their beliefs about clouds’ positive feedback, and when they keep repeating that models get reality reasonably right.

Paulski0: Thank you so much for the extremely responsive and useful answer. I just started trying to digest the Tsushima 2012 Climate Dynamics paper (T12). Some initial comments. TM13 is about feedback (W/m2/K), and therefore climate sensitivity. It uses our most reliable observational data (TOA OLR and OSR) and all CMIP3 and 5 models. T12 is about a variety of cloud observations (W/m2, % coverage, etc.) and a handful of CMIP5 models. It doesn’t bear directly on climate sensitivity.

Those using and citing the empirically corrected cloud data set should be citing Norris (2015) and have done so 34 times in 2.5 years. Those citing the conclusion that the corrected data set agrees with model predictions should be citing Norris (2016) and have done so 58 times in 1.5 years.

For me, TM13 clearly illuminates some important facts about seasonal feedbacks, which useful models should reproduce. Models are clearly mutually inconsistent in their predictions about OLR and OSR from clear and cloudy skies. Overall climate feedback parameters may agree, but only because of compensating errors. On the monthly time scale, OLR is a tight function of Ts (2.1 W/m2/K), with a slightly negative CRE. Most AOGCMs have a positive CRE and some are badly wrong. Models don’t properly reproduce seasonal changes in OSR, but these are not a tight function of Ts. Some components probably lag; others may be caused by hemispheric differences (in seasonal snow cover, sea ice and geography). Applying the gain factors TM13 calculate to global warming is problematic.

I need to stop talking and see what the other references you provide say about these important (and possibly ignored) conclusions.

I find it very convincing. It doesn’t only show model bias and spread, but it also shows that models get it systematically wrong. And it will be hard for many scientists to admit, when they are invested in their beliefs about clouds’ positive feedback, and when they keep repeating that models get reality reasonably right.

I guess Manabe is one who you think finds something too difficult to admit. Weird interpretation. He cowrote the paper. Frank wants to use the paper to imply that models are wrong, and Manabe’s early model cannot fairly be described by the word “wrong”:

JCH wrote: “I guess Manabe is one who you think finds something too difficult to admit. Weird interpretation. He cowrote the paper. Frank wants to use the paper to imply that models are wrong, and Manabe’s early model cannot fairly be described by the word “wrong”:

FWIW, the ECS of M&W 1967 model was 2.0 K. That may explain why you say his early projection was about right, why the central estimates for EBMs are 1.5-2.0 K, and why the OLR gain factor observed in T&M13 is consistent with an ECS of 1.8 K.
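The link between a 2.1 W/m2/K longwave response and an ECS of about 1.8 K is a one-line calculation. The sketch below uses the 3.7 W/m² forcing for doubled CO2 quoted in the article and assumes, purely for this arithmetic, that the longwave response is the only feedback (no net change in reflected sunlight); the function name is hypothetical.

```python
F_2X = 3.7           # W/m^2, forcing for doubled CO2 (value used in the article)
LWR_RESPONSE = 2.1   # W/m^2/K, extra longwave emission per kelvin of warming

def ecs_from_response(forcing, response_per_kelvin):
    """Warming at which the extra outgoing flux balances the forcing."""
    return forcing / response_per_kelvin

ecs = ecs_from_response(F_2X, LWR_RESPONSE)
print(f"ECS ~ {ecs:.2f} K")
```

Any positive shortwave feedback would reduce the net response per kelvin and raise the result, which is why the shortwave terms matter so much to the argument.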

Manabe doesn’t inform us (in the limited space provided by PNAS) that OSR has some components that lag monthly Ts (see scatter plot) and some components that reflect hemispheric differences. (For example, there is negligible seasonal snow cover in the SH to reflect SWR through clear skies). Therefore, the OSR gain factor for the seasonal cycle is not directly relevant to global warming. However, it is a reasonable criterion for identifying biases in models. Spencer and Lindzen&Choi have both found that the best correlation between OSR and Ts involves a three-month lag and shifts from positive feedback with zero lag to negative feedback. L&C found this in the tropics, so it is not simply a problem involving surface (ice) albedo feedback. I personally disagree with using either zero or three month lag; I think the data is saying that OSR is not a simple function of Ts at any particular time. (Temperature controls emission of OLR, but reflection of SWR is obviously far more complicated.)
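For readers unfamiliar with lag analysis, here is a minimal sketch of how a "best lag" between two monthly series is found. It is not the method of any of the papers mentioned; the series are synthetic, with a three-month lag built in by construction, and the noise level and the helper `lagged_corr` are assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(2)
n = 360                                  # 30 years of monthly values
t = np.arange(n)
true_lag = 3                             # months, built into the synthetic data

ts = np.sin(2 * np.pi * t / 12) + rng.normal(0.0, 0.1, n)
osr = np.sin(2 * np.pi * (t - true_lag) / 12) + rng.normal(0.0, 0.1, n)

def lagged_corr(x, y, lag):
    """Correlation between x at month i and y at month i + lag."""
    if lag == 0:
        return np.corrcoef(x, y)[0, 1]
    return np.corrcoef(x[:-lag], y[lag:])[0, 1]

corrs = {lag: lagged_corr(ts, osr, lag) for lag in range(7)}
best_lag = max(corrs, key=corrs.get)
print(best_lag, round(corrs[best_lag], 2))
```

With a purely seasonal signal a lag is indistinguishable from a phase shift, so a high lagged correlation by itself does not show that temperature is driving the flux. That is consistent with the caution expressed above about reading OSR as a simple function of Ts.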

Manabe’s abstract diplomatically concludes: “we show that the gain factors obtained from satellite observations of cloud radiative forcing are effective for identifying systematic biases of the feedback processes that control the sensitivity of simulated climate, providing useful information for validating and improving a climate model.”

Is Manabe implying that AOGCMs need validation? That they failed validation?

I would go a little further: The data clearly shows that models are seriously mutually inconsistent. I don’t know what Manabe believes about the utility of a multi-model mean of mutually-inconsistent models. If he had shown the data, the LWR gain factor for the multi-model mean would be too high, inflating ECS and projected warming.

As the saying goes: “All models are wrong; some models are useful.” The question is whether current models are useful for informing policymakers about the likelihood that ECS is greater than 2 K. The IPCC and the climate science community have totally ducked their responsibility for presenting this issue in their Summaries for Policymakers and to the public.

Frank,

If he had shown the data, the LWR gain factor for the multi-model mean would be too high, inflating ECS and projected warming.

Except the LWR gain factors shown do not correlate at all with the models’ climate sensitivities. The highest gain is from one of the lower sensitivity models and the lowest gain is from one of the higher sensitivity models. In fact the alternative version of that latter model has a markedly reduced sensitivity but shows higher gain. So, while the test may provide a useful pointer to model biases it doesn’t carry any clear implication for climate sensitivity.

For similar tests which do appear to correlate with climate sensitivity, generally it has been found that higher sensitivity models show smaller biases. That’s the basis of the recent Brown and Caldeira paper and also a conclusion from the 2012 Tsushima paper: ‘The models in this study that best capture the seasonal variation of the cloud regimes tend to have higher climate sensitivities.’

DeWitt,

Your argument I think is only valid if feedback is quoted as vs cloud cover fraction.

It isn’t; it’s quoted vs temperature.

I don’t think the absolute value of cloud forcing in principle tells you anything at all about how it will change with respect to temperature.

vtg,

Yes, probably.

However, I think there may be confusion in cause and effect on location of cloud cover and surface temperature. I’m thinking now that a possible mechanism for the apparent multi-decadal oscillation in temperature in the surface record that is highly correlated with the AMO Index data might be changes in the pattern of cloud cover possibly driven by shifts in ocean currents that are chaotic in nature rather than driven solely by increases in anthropogenic ghg’s. Inherently chaotic processes can produce oscillatory behavior, at least for a while. We also know there was a major shift in the North Atlantic around 1995. That’s when Arctic Sea ice loss accelerated. I can also see what looks like a break point around that date in the UAH NoPol TLT temperature data.

Two of the model trends in response to warming seem reasonable: convective cloud tops in tropics increase in height, non-convective cloud belts shift northwards into areas with lower sun angles.

Reply to Paulski0. Thanks for the Gleckler reference. A very interesting paper that. And happy New Year to all!

What you’ve highlighted here is really a very interesting part of climate science. It is important to realize that most scientists I know put most of the weight on simple physical arguments and observations rather than climate models (although GCMs play a key role in synthesizing our understanding). So “I don’t believe the models” is not a very good argument, IMHO.

The key is to break the cloud feedback into various components, as was described in the post above. Then you can examine each one and decide if it’s reasonable. There are convincing simple arguments why, for example, cloud height is expected to increase in a warmer world — see, e.g., http://www-k12.atmos.washington.edu/~dennis/Hartmann_Larson_2002GRL.pdf

This factor leads to a robust positive feedback.

Most of the uncertainty in ECS can be tied to the response of low clouds. While not as simple, one can also make a pretty convincing argument synthesizing obs. and simple theory that low clouds will not be a negative feedback. https://link.springer.com/article/10.1007/s10712-017-9433-3

I really recommend people read that paper to see an example of how climate scientists think about the problem. It’s different from the cartoon version that we just run climate models and blindly accept the results.

Happy new year to all.

So Andrew, you drop by for another drive-by comment. Do you have any thoughts on the Zhao et al paper on parameters controlling convection and precipitation and their strong influence on ECS? And on their statement that there are no convincing observational constraints to set those parameters?

I don’t think there’s any question that climate models can get a range of climate sensitivities by adjusting parameters. I’ve been told that, in the MPI model, they adjusted the cumulus entrainment parameter and sensitivity went from 3 to 7°C.

That said, there are a few things I like to emphasize. It seems easy to get a climate model to have a high sensitivity, but perturbed physics ensemble experiments (where they’ve systematically explored the universe of parameters) seem to show that it’s very very hard to get a realistic low sensitivity model.

I’d think it would be interesting for someone to show that you can get a climate sensitivity of, say, 1°C in a realistic model by adjusting parameters. No one’s demonstrated that.

Second, there seems to be this myth that climate models are the fundamental basis of climate science. Most scientists I know take them seriously, but the bedrock of climate science is simple physical models + observations.

So I think the result you describe is interesting (I haven’t read the paper), but I don’t think it causes me to question the mainstream view of climate science.

Thanks for responding, Andrew. I’m not sure why a model with an ECS of 1.0 is a cutoff for your challenge. Surely, an ECS of 2.0 would lead to different conclusions than the current CMIP5 mean of about 3.4.

I would note that Nic Lewis has some data on this from the literature and Zhao also came up with a much lower ECS model varying the convection and precipitation model. A naive calculation comes in at 1.8 vs. 3.0 ECS for the two sets of parameters. Here’s the link to Nic’s writeup. In some of the later points, he discusses other examples in the literature of such modifications yielding much lower ECS. Start at point 19.

https://niclewis.files.wordpress.com/2016/03/briefing-note-on-climate-sensitivity-etc_nic-lewis_mar2016.pdf

I did look at the abstracts for your session at the recent AGU conference. I couldn’t find the presentations themselves however. Are they available?

I hope this threads correctly … it’s a response to https://scienceofdoom.com/2017/12/24/clouds-and-water-vapor-part-eleven-ceppi-et-al-zelinka-et-al/#comment-123474 by dpy6629

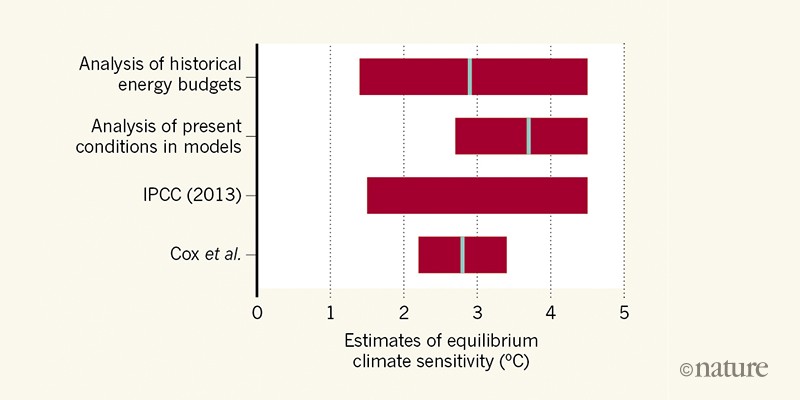

I think ECS could end up anywhere between 2 and 4.5 K — I have a paper about to be submitted that yields a likely range of 2.4-4.4 K. Whether our policy should be different for an ECS of 2 vs. 3.5 is not a scientific question, so I don’t have any professional opinion about that.

I agree with a lot of what Mr. Lewis says. If you add up the feedbacks we are very confident about (Planck, water vapor, lapse rate, ice albedo), you get an ECS of about 2 K. If the cloud feedback is positive, which most of the evidence suggests is true, then you end up with an ECS > 2 K. That’s basically the argument that I find most compelling for why the low values (< 2 K) of ECS are unlikely.
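The "add up the feedbacks" argument can be written out as a short calculation. The feedback values below are round illustrative numbers assumed for this sketch, not figures given in the thread, and the +0.5 W/m2/K cloud feedback is likewise hypothetical; only the 3.7 W/m² forcing comes from the article.

```python
F_2X = 3.7  # W/m^2 per CO2 doubling (value used in the article)

# Illustrative feedbacks in W/m^2/K (assumed round numbers; negative = stabilizing).
feedbacks = {
    "planck": -3.2,
    "water_vapor_plus_lapse_rate": 1.1,
    "surface_albedo": 0.3,
}

def ecs(forcing, feedback_sum):
    """Equilibrium warming for a given forcing and net feedback."""
    return -forcing / feedback_sum

base_sum = sum(feedbacks.values())
ecs_no_cloud = ecs(F_2X, base_sum)
ecs_with_cloud = ecs(F_2X, base_sum + 0.5)   # hypothetical positive cloud feedback

print(f"without cloud feedback: {ecs_no_cloud:.2f} K")
print(f"with +0.5 cloud feedback: {ecs_with_cloud:.2f} K")
```

Because the net feedback sits in the denominator, a modest positive cloud term moves the result well above 2 K, and a negative one would be needed to bring it below.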

Mr. Lewis suggests that one way around this is if the water vapor + lapse rate feedback are overstated b/c the atmosphere is not warming up as fast as expected ("no hot spot"). The evidence on that is mixed, with some data sets showing expected warming and others not. Obviously, some of these observational data are wrong — and my guess is that the data sets that don't show a hot spot are wrong.

The reason I have that view is that the atmosphere and surface are tied together by pretty simple physics (see moist adiabatic lapse rate) and if the atmosphere is not warming as fast as expected, then something really weird is going on. The more parsimonious explanation is that data sets that don't show warming are wrong.

Andrew, The hot spot and the lapse rate theory in the tropics are indeed “fundamentals” of climate science. We have been assured for decades this is settled science. The hot spot or lack of one has generated in my view a huge literature much of which tries to “find” the signal by various intricate methods of massaging the data. I would note that at Real Climate on their model comparison page, there is a graphic for TLT in the tropics that shows that indeed models are warming much faster than the data. They baseline it differently than Christy, but both show pretty much the same thing. It’s easy to get confused on this issue and I’ve seen this many times in my field where inconvenient data is tortured until it confesses to its errors.

In my view, it is quite likely that these lapses in “settled climate science” are related to convection and precipitation in the tropics, an ill-posed problem. The spate of recent papers on this is simply confirmation of what any graduate student in CFD will already know and has been settled science for at least 70 years. What these recent papers show is in my view a very good reason to not view current AOGCM’s as scientifically valid evidence for ECS, as Nic Lewis says.

So then we are left with your line of argument about feedbacks and the observational evidence. You will also recall that in AR4, observational studies were massaged and yielded ECS not much different than GCM’s. One study was even recalculated using a uniform prior so it would give a higher ECS more consistent with other studies. Generally, Lewis’ work has shown that these early estimates were grossly too high. Why did it take an outsider to show this?

Seems to me that better data is really needed. Surely, we spend vastly too much on generating and “running” AOGCM’s whose scientific value seems to me to be low. That money could be better spent on a new lapse rate theory that agrees with observations and improved data.

And with respect, it seems to me that climate science needs to do a better job of eliminating biases that it is becoming increasingly clear are a threat to the scientific enterprise itself.

http://www.thelancet.com/journals/lancet/article/PIIS0140-6736(15)60696-1/fulltext

Sorry, it’s the TMT graphic I was referring to. You will need to cut through reams of criticisms of Christy and his graph (including criticism of the “message it conveys”) before finding that in fact Christy was on to something, even though perhaps he overstated the finding a bit. It’s a sad statement about bias in climate science and politicization of the field.

Andrew,

“Obviously, some of these observational data are wrong — and my guess is that the data sets that don’t show a hot spot are wrong.”

The likelihood that the data are always wrong in the same direction (always to suggest higher climate sensitivity, always to suggest models are correct) seems to me very low. Measured average cloud top heights do not show a robust increase over time… once again, contrary to modeled (and canonical) expectations. In any case, we need a lot more ‘ground reality’ data like balloon temperature profiles, not more discussions of why measurements are always wrong when they conflict with GCMs.

You seem surprised by people focusing on climate model projections, and surprised that they doubt those projections. My observation is that much (most?) of the most ‘alarming’ projections come from GCMs. I find it not at all surprising that people focus on the most alarming GCM projections.

dpy6629,

On this blog you must not state the obvious if that obvious includes any suggestion about motivations. I find it a preposterous rule, because when you exhaust other explanations for very dubious conclusions, motivations seem the obvious alternative. But it is what it is.

Yes Steve, but I think thoughtful readers will see the obvious implications. What the Lancet says about science is in my view at least as applicable to climate science where the data are noisy, the models not very skillful, and the activism pretty poorly disguised.

So let’s dig into the question of why I don’t think there is a “missing hotspot”.

1. There are a lot of atmospheric temperature data sets and some show the hotspot (e.g., RSS, Sherwood et al. homogenized radiosondes) and some don’t (e.g., UAH).

2. Simple theory gives us a prediction of how much the atmosphere should warm. The observations agree very well with this theory for short-term climate fluctuations (e.g., El Ninos).

3a. If you want to believe the “no hot spot” obs., then you are dismissing the data that show the hot spot. You are also implicitly positing some physical process that occurs on long time scales that is not occurring for short-term variability.

3b. The alternative, which I consider to be more parsimonious, is that the theory is right and the hot spot exists, and it’s the “no hot spot” observations that are wrong.

Overall, this is actually quite a hard problem and I acknowledge that I could be wrong — but I don’t think it’s likely. I recommend people interested in the topic read this paper to get some sense of the difficulties in determining what the atmospheric trend should be: http://onlinelibrary.wiley.com/doi/10.1002/2014JD022365/full

I would also commend people for being skeptical of models, but you should also be skeptical of observations. Some observations are right, some aren’t. Don’t assume obs. that confirm your views are always correct.

Andrew, I found the Sherwood paper and it’s the one I remember that uses wind data to calculate temperature, finding that the wind data is relatively free of “artifacts.” This is in my view very questionable. For one thing, wind speed is very noisy compared to temperature as is obvious from any cursory knowledge of meteorological data. For another thing, balloons drift with the wind. They measure relative wind speed, not absolute wind speed.

I would suggest taking a look at the Real Climate page. Given their history, one would have to say they would be unlikely to show such a discrepancy if there were any alternative explanation.

Sherwood has done several analyses of temperature. One was based on thermal wind (using winds to calculate temperatures), which I found to be quite good. But if you’re not convinced by that, they’ve also done plain analyses of temperatures … e.g., http://iopscience.iop.org/article/10.1088/1748-9326/10/5/054007

Zhou16 is one of my favorite papers. I found this comment in your 2nd link to be useful as I suspect it has to do with Matt England’s intensified trade winds:

As interesting as SST pattern effects are, they are unlikely to have a first-order impact on the century time-scale tropical low-cloud feedback. With typical values of the cloud sensitivities, a negative tropical local low-cloud feedback would not occur unless the ratio of EIS to SST change is ~ 1, several times larger than the typical ratio of 0.2 exhibited by climate models. Such a large value might happen for decadal variability (Zhou et al. 2016), but is extremely unlikely to happen for century time-scale warming. …

Professor Dessler: The abstract of Hartmann & Larson (2002) is copied below. It appears to say nothing about increasing cloud top height in a warmer world, but it is consistent with mysterious aspects of AOGCM output that result in high climate sensitivity. I’d sure like to understand them better.

Abstract: “Tropical convective anvil clouds detrain preferentially near 200 hPa. It is argued here that this occurs because clear-sky radiative cooling decreases rapidly near 200 hPa. This rapid decline of clear-sky longwave cooling occurs because radiative emission from water vapor becomes inefficient above 200 hPa. The emission from water vapor becomes less important than the emission from CO2 because the saturation vapor pressure is so very low at the temperatures above 200 hPa. This suggests that the temperature at the detrainment level, and consequently the emission temperature of tropical anvil clouds, will remain constant during climate change. This constraint has very important implications for the potential role of tropical convective clouds in climate feedback, since it means that the emission temperatures of tropical anvil clouds and upper tropospheric water vapor are essentially independent of the surface temperature, so long as the tropopause is colder than the temperature where emission from water vapor becomes relatively small.”

I don’t understand the physics behind the assertion that radiative cooling becomes “inefficient” around 200 hPa. As the water vapor mixing ratio decreases with altitude, water vapor is both absorbing less and emitting less. In other words, both radiative cooling and radiative heating decrease, so drying doesn’t change the temperature at which these processes are in equilibrium. (Changing the radiation entering 200 hPa from below or above would change the temperature of radiative equilibrium.) The calculated radiative cooling rate certainly drops around 200 hPa (Figure 1), but I’ve always assumed that this happens because convection has stopped bringing up heat from below at this altitude. Lower in the troposphere, convected heat raises the local temperature above pure radiative equilibrium and results in net radiative cooling. AOGCMs presumably calculate radiative transfer correctly, even if I misunderstand Hartmann’s explanation.

Hartmann says that surface warming in the tropics won’t increase the temperature at 200 hPa (which I might describe as positive lapse rate feedback), making dOLR/dTs = 0. Even if Hartmann’s explanation were dubious, something like this is having a major impact on the output from AOGCM’s in the tropical Pacific – perhaps rising cloud tops. For example, in Andrews (2015), dOLR/dTs is 0 in the early years of an abrupt 4XCO2 experiment and becomes strongly positive in later years. (Positive meaning less heat escapes to space as Ts rises.) This happens through both clear and cloudy skies.

http://journals.ametsoc.org/doi/10.1175/JCLI-D-14-00545.1

Try reading this Zelinka and Hartmann paper, which might explain the FAT hypothesis better: http://onlinelibrary.wiley.com/doi/10.1029/2010JD013817/full

Andy wrote: “I agree with a lot of what Mr. Lewis says. If you add up the feedbacks we are very confident about (Planck, water vapor, lapse rate, ice albedo), you get an ECS of about 2 K. If the cloud feedback is positive, which most of the evidence suggests is true, then you end up with an ECS > 2 K. That’s basically the argument that I find compelling for why the low values (< 2 K) of ECS are unlikely."

I think that many of the commenters here agree with this statement, except they want to dig more deeply in why cloud feedback must be positive and whether it is significantly positive. The fact that professional climate scientists are willing to engage with Mr. Lewis is encouraging.

Tsushima and Manabe (2013) show that LWR cloud feedback during the seasonal cycle is slightly negative, but positive in most models. The multi-model mean is biased. Since the amplitude of the seasonal cycle is huge (3.5 K in GMST) and the OLR response is highly linear, this discrepancy appears very robust. So I personally start with an ECS of 1.8 K/doubling and add only SWR cloud feedback. (Unlike OLR, there is no fundamental physics I know of linking changes in reflection of SWR to changes in Ts.) There is observational evidence and some physical rationale for expecting changes in reflection of SWR to lag changes in Ts (when Spencer and Lindzen claim SWR feedback is negative), but I'm skeptical that positive unlagged SWR feedback during the seasonal cycle or negative lagged SWR feedback at other times tell us anything useful, especially when the relationship isn't clearly linear.

Since saturation water vapor increases at a rate of 7%/K and 5.6 W/m²/K in terms of latent heat (!), the rate of overturning of the atmosphere must slow. That will raise relative humidity over the oceans slightly (and slow the rate of evaporation). Since the interface between the boundary layer and the free atmosphere can't be properly modeled with large grid cells, I'll stick with my intuition that rising relative humidity will produce more boundary layer clouds.
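The two numbers in that comment can be checked with a few lines. This is a minimal sketch under stated assumptions: the Clausius-Clapeyron rate is evaluated at an assumed 288 K, the 80 W/m² global mean latent heat flux is an assumed round value (it is what makes 7%/K correspond to 5.6 W/m²/K), and the 2%/K evaporation increase used for the circulation estimate is a hypothetical input, not a figure from the comment.

```python
LV = 2.5e6   # latent heat of vaporization, J/kg
RV = 461.5   # gas constant for water vapor, J/kg/K


def cc_fractional_rate(temp_k):
    """Clausius-Clapeyron: fractional change in saturation vapor pressure per K."""
    return LV / (RV * temp_k**2)


def extra_latent_heat_flux(latent_flux_wm2, fractional_rate_per_k):
    """Extra latent heat flux (W/m^2/K) if evaporation scaled at the given rate."""
    return latent_flux_wm2 * fractional_rate_per_k


def circulation_change(evap_rate_per_k, moisture_rate_per_k):
    """Fractional change in overturning per K, treating moisture flux as
    (mass flux) x (humidity), so the two fractional rates subtract."""
    return evap_rate_per_k - moisture_rate_per_k


if __name__ == "__main__":
    rate = cc_fractional_rate(288.0)                 # ASSUMED temperature
    flux = extra_latent_heat_flux(80.0, 0.07)        # ASSUMED 80 W/m^2 latent heat flux
    slowdown = circulation_change(0.02, 0.07)        # HYPOTHETICAL 2%/K evaporation increase
    print(f"Clausius-Clapeyron rate at 288 K: {100 * rate:.1f} %/K")
    print(f"Latent heat flux increase at 7%/K: {flux:.1f} W/m^2/K")
    print(f"Implied overturning change: {100 * slowdown:.0f} %/K")
```

If evaporation cannot grow as fast as the moisture content of the air, the difference has to be made up by a slower overturning, which is the step the comment takes.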

Andrew Dessler wrote: “I agree with a lot of what Mr. Lewis says. If you add up the feedbacks we are very confident about (Planck, water vapor, lapse rate, ice albedo), you get an ECS of about 2 K. If the cloud feedback is positive, which most of the evidence suggests is true, then you end up with an ECS > 2 K. That’s basically the argument that I find most compelling for why the low values (< 2 K) of ECS are unlikely."

I agree with most of what Frank wrote in response to this statement. But I would add that the evidence is weak and not at all on one side. The evidence for positive cloud feedback consists of qualitative arguments and models. There is no solid observational evidence, at least according to AR5. But there is also Lindzen's iris effect, which is a plausible qualitative argument for a negative cloud feedback. And there is some modelling support for that. From the abstract to Mauritsen and Stevens (2015) "Missing iris effect as a possible cause of muted hydrological change and high climate sensitivity in models", Nature Geoscience, DOI: 10.1038/NGEO2414:

"A controversial hypothesis suggests that the dry and clear regions of the tropical atmosphere expand in a warming climate and thereby allow more infrared radiation to escape to space. This so-called iris effect could constitute a negative feedback that is not included in climate models. We find that inclusion of such an effect in a climate model moves the simulated responses of both temperature and the hydrological cycle to rising atmospheric greenhouse gas concentrations closer to observations. … We propose that, if precipitating convective clouds are more likely to cluster into larger clouds as temperatures rise, this process could constitute a plausible physical mechanism for an iris effect."

So qualitative arguments are inconclusive as to the sign of the cloud feedback. Models are also inconclusive since the various positive feedbacks are highly inconsistent between models and the main proposed negative feedback depends on a sub-grid-scale process. There is no clear observational evidence one way or the other, except for the evidence on overall sensitivity. That implies a cloud feedback near zero.
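The last step, going from an overall sensitivity to an implied cloud feedback, can be made explicit. This is a minimal sketch, assuming the same linear feedback framework, the 3.7 W/m² forcing quoted in the article, and a combined non-cloud feedback of -1.8 W/m²/K. That last value is an assumption picked so that zero cloud feedback gives an ECS of about 2 K; the ECS inputs are hypothetical.

```python
F_2XCO2 = 3.7              # W/m^2, forcing from doubling CO2 as quoted in the article
NON_CLOUD_FEEDBACK = -1.8  # W/m^2/K, ASSUMED sum of Planck, water vapor, lapse rate, albedo


def implied_cloud_feedback(ecs_k, non_cloud=NON_CLOUD_FEEDBACK, forcing=F_2XCO2):
    """Cloud feedback (W/m^2/K) needed to produce a given ECS in a linear model."""
    if ecs_k <= 0:
        raise ValueError("ECS must be positive")
    total_feedback = -forcing / ecs_k
    return total_feedback - non_cloud


if __name__ == "__main__":
    # Hypothetical ECS values, chosen only to show how the inference runs.
    for ecs_k in (1.5, 2.0, 3.0):
        print(f"ECS {ecs_k:.1f} K -> implied cloud feedback "
              f"{implied_cloud_feedback(ecs_k):+.2f} W/m^2/K")
```

The inference is only as good as the ECS estimate and the assumed non-cloud feedbacks that go into it, so the result shifts noticeably with either input.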

I looked a little further into Professor Hartmann’s work and found him linked to the “fixed anvil temperature” hypothesis for rising cloud tops in the tropics. So perhaps Professor Dessler didn’t provide the optimum reference to Hartmann’s work. The reference below is to a 2007 Hartmann study with cloud resolving models.

http://journals.ametsoc.org/doi/full/10.1175/JCLI4124.1