In Opinions and Perspectives – 5 – Climate Models and Consensus Myths we looked at (and I critiqued) a typical example of the storytime edition – why we can trust climate models. On that same web page they outlined a “Climate Myth”:

Models are unreliable

“[Models] are full of fudge factors that are fitted to the existing climate, so the models more or less agree with the observed data. But there is no reason to believe that the same fudge factors would give the right behaviour in a world with different chemistry, for example in a world with increased CO2 in the atmosphere.” (Freeman Dyson)

Fudge factors does kind of imply a lot of unreliability. But I was recently giving some thought to how I would explain some of the issues in climate models to a non-technical group of people. My conclusion:

Models are built on real physics (momentum, conservation of heat, conservation of mass) and giant fudge factors.

Depending on the group I might hasten to add that “giant fudge factors” isn’t a nasty term, aimed at some nefarious activity of climate scientists. It’s just to paint a conceptual picture to people. If I said “sub-grid parameterization” I’m not sure the right mental picture would pop into peoples’ heads. I’m not sure any mental picture would pop into peoples’ heads. Instead, how do we change the subject and stop this nutter talking?

Fudge Factors

In the least technical terms I can think of.. imagine that we divided the world up into a 3d grid. Our climate model has one (and only one) value in each cell for east-west winds, north-south winds, up-down winds, high cloud fraction, low cloud fraction, water vapor concentration.. basically everything you might think is important for determining climate.

The grid dimensions, even with the latest and best supercomputers, is something like 100km x 100km x 1km (for height).

So something like N-S winds, and E-W winds, might work quite well with large scale processes operating.

But now think about clouds and rain. Especially in the tropics, it’s common to have strong upwards vertical winds (strong convection) over something like a few km x a few km, and to have slower downward winds over larger areas. So within one cell we have both up and down winds. How does the climate model deal with this?

We also have water vapor condensing out in small areas, creating clouds, later turning into rain.. yet other places within our cell this is not happening.

How does the model, which only allows one value for each parameter in each cell, deal with this?

It uses “sub-grid parameterizations”. Sorry, it uses giant fudge factors.

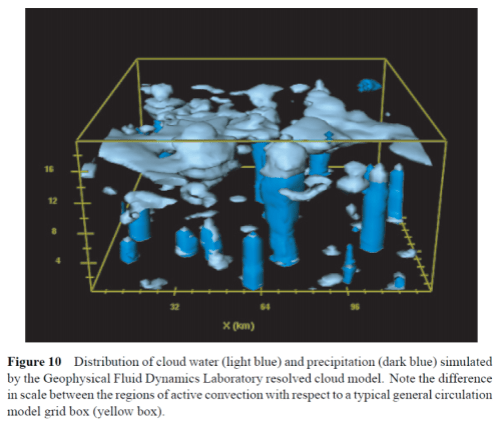

Here is an example from a review paper in 2000 on water vapor (the concepts haven’t changed in the intervening years) – the box is one cell in the grid, with clouds and rainfall as light blue and dark blue:

Figure 1

The problem is, when you create a fudge factor you are attempting to combine multiple processes operating over different ranges and different times and get some kind of average. In the world of non-linear physics (the real world), these can change radically with very slight changes to conditions. Your model isn’t picking any of this up.

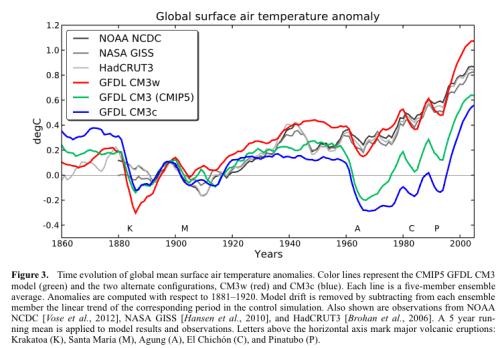

Here’s just one example from a climate science paper. It picks out one parameter in cloud microphysics, and uses three different values to run the climate model from 1860 to today and looks at the resulting temperature anomalies (see the last article 5 – Climate Models and Consensus Myths).

Figure 2

The problem is that the (apparently) most accurate value of this parameter (blue) produces the “worst” result (the least accurate) for temperature anomalies. As the authors of the papers say:

Conversely, CM3c uses a more desirable value of 10.6μm but produces a very unrealistic 20th century temperature evolution. This might indicate the presence of compensating model errors. Recent advances in the use of satellite observations to evaluate warm rain processes [Suzuki et al., 2011; Wang et al., 2012] might help understand the nature of these compensating errors. (More about this paper in Models, On – and Off – the Catwalk – Part Five – More on Tuning & the Magic Behind the Scenes).

Conclusion

This is the reality in climate models. They do include giant fudge factors (sub-grid parameterizations). The “right value” is not clear. Often when some clarity appears and a better “right value” does appear, it throws off some other aspects of the climate model. And anyway, there may not be a “right value” at all.

This is well-known and well-understood in climate science, at least among those who work closely with models.

What is not well-known or understood is what to do about it, or what this means for the results produced by climate models. At least, there isn’t any kind of consensus.

Articles in this Series

Opinions and Perspectives – 1 – The Consensus

Opinions and Perspectives – 2 – There is More than One Proposition in Climate Science

Opinions and Perspectives – 3 – How much CO2 will there be? And Activists in Disguise

Opinions and Perspectives – 3.5 – Follow up to “How much CO2 will there be?”

Opinions and Perspectives – 4 – Climate Models and Contrarian Myths

Opinions and Perspectives – 5 – Climate Models and Consensus Myths

References

Cloud tuning in a coupled climate model: Impact on 20th century warming, Jean-Christophe Golaz, Larry W. Horowitz, and Hiram Levy II, GRL (2013) – free paper

Is ocean heat content a fudge factor?

https://tambonthongchai.com/2018/10/06/ohc/

No.

An example for the ocean is the vertical eddy diffusivity.

In doing a three-day weather forecast, are they using the same fudge?

JCH,

There are lots of differences between weather forecasting models and climate models. Also lots of similarities.

The underlying equation base is of course the same. Conservation of momentum, mass, heat and so on.

Three big differences that I see:

1. Much finer grid – I believe something like 1km x 1km for the best national weather forecasts vs 100km x 100km for climate models

2. Much shorter timescale to run over – a week or two vs a century

3. Opportunities for refinement based on results

The effect of 1 & 2 is the closure schemes – to solve “sub-grid parameterization” – are completely different due to the scales.

And I expect (but I don’t know the minute details of weather models) you don’t have to worry about drift in weather models. If the energy balance is incorrect then, for example, the temperature might drift by 0.002’C over 1 week. Unnoticeable. If the energy balance is incorrect by the same amount in a climate model the temperature will drift by 10’C over a century. So climate modelers and weather modelers are focused on very different outcomes.

The effect of 3 allows over-confidence, under-confidence to be reviewed and worked on.

So in weather forecasting you might run 50 models, with slightly different initial conditions and/or slightly different parameters (why?). Maybe 5 of the models say there is the chance of a severe storm. So you conclude that there is a 10% chance of a severe storm (5/50). The storm occurs. Were you correct in your 10% chance? You have no idea.

But over a year you look at all the 10%ers and see – did they occur roughly 10% of the time in reality? Or was it 30%? Or 2%?

So you can find out what you are over-confident of, or under-confident of, and attempt to find model changes which will make the model’s estimate of likelihood match up to reality.

I did write an article a while back which explains more on point 3 – Ensemble Forecasting.

SoD – thanks.

Niño 3.4 is below .5 ℃, so 18-19 El Niño is in a bit of trouble. For the moment anyway.

Another way to look at this is that pressure gradients in the atmosphere are usually low thus making Rossby waves relatively easy to model. However even in weather models there are issues such as the assumption that there is no turbulence outside the boundary layer. Thus the rate of decay of vortices and Rossby waves is determined by numerical viscosity. Much of the success of weather modeling is really doing a good job on Rossby waves over short time frames.

However even this success must be tempered strongly. If you ever read the forecast discussions the NWS does, you will see often something like “the models diverge at day 3” and we go with a broad brush forecast.

As SOD points out in the longer term, many other things become more important such as any lack of conservation of energy or tropical convection. Even weather models I suspect can’t predict tropical thunderstorms in detail very well. You might get a reasonable probability based on pressure gradients and past experience, but you simply cannot predict exactly where a thunderstorm will develop on any given day.

In the case of weather forecast models, we have the output from 700 model runs per year (with up to 50 different initialization) making predictions for every hour for two (?) weeks. With all of this data, we have a fairly good idea of how reliable a forecast is.

Climate modelers do not make “forecasts” intended to be validated by subsequent events. Look at the debates over Hansen’s early projections. Ever-evolving climate models are only checked by hindcasting, leaving open the possibility that models are tuned (intentionally or unintentionally) to agree with the past.

Thus we have the present stupid situation where negative aerosol forcing predicted by models is substantially stronger than now believed to be appropriate based on observations, and those wrong forcing estimates cause confusion in energy balance models. Two decades from now, we won’t be able to compare the projections of CMIP5 models with observed warming, because the realized forcings will not agree with forecast forcings included in various scenarios. CMIP output does not include the forcing produced by every driver. The other important potential “fudge factor” is ocean heat uptake, which allows any heat not escaping to space through an appropriately high climate feedback parameter to “disappear” into the deep ocean, and thereby not cause “too much warming” at the surface.

“All models are wrong. Some models are useful.” Today’s climate models are useful for encouraging policymakers to limit CO2 emissions. Candid discussion of validation might make them less useful for this purpose.

A few comments:

1) Arguments by arm-chair experts on blogs are not definitive. Bias/error analysis/predictability needs to be addressed quantitatively.

2) Errors go both ways. There is strong selection bias in making qualitative arguments. We all do it. However model bias can only be addressed by evaluating all the factors simultaneously, not by cherry-picking one or two to make a point. This takes well-designed studies. Emerging constraint studies are looking for bias and so far results haven’t indicated any high-side bias.

3) IMO the paleo record is the best way to estimate ECS. Our recent observational record certainly supports a sensitive climate.

4) Tuning is a feature not a bug. Based on the track-record established since the 1980s, reasonable forward projections can be made by tuning climate models. Tuning is not trivial. Think of it this way, tuning a climate model involves many orders of magnitude more information than a tuning a simple EBM.

5) The big picture needs to be considered. Models handle water vapor feedback well. If there was any way to avoid strong H2O feedback, models would be the way to demonstrate it. 2) There are certain cloud effects in a warming climate that are independent of sub-grid-scale phenomena, likely to cause warming and where models have skill. The list includes: convective clouds in the tropics extend to greater height; Hadley cells expand to the north, storm tracks shift to the north, low level cloudiness expands in the polar night as sea ice shrinks.

Well Chubbs, Sod is more than an “arm chair expert.” In any case, what he says here is in my view correct. Tuning is not a “feature”. It’s a messy way to try to overcome the fact that many scales of the flow are not resolved by the grid.

What you say about clouds is quantitative and as such does not help much in terms of determining cloud feedback or how to model clouds.

I’d like to see evidence that models handle H2O feedback “well.” There is some evidence that in the tropics they do OK on total column water vapor. But the distribution of that water vapor is important too. Stronger convection will affect that distribution.

Paleo estimates are all over the place. They are a lot less reliable than modern observational evidence.

Sorry, I meant to say that what you say about clouds is QUALITATIVE and NOT quantitative.

Chubbs,

Thanks for your kind words, but I think you are over-stating my knowledge. I am no expert.

It’s usually an office type chair as well, but that’s probably not important.

In this blog – at least up until this series – we try and see what scientific evidence exists for various claims. There are no authorities. Of course, I expect that people who write textbooks know what they are doing and that’s who I’ve learnt from. Likewise, climate scientists who have worked in a sub-field for decades and published 100 papers know 10,000x more than me on that sub-field so I read their papers and assume they understand the subject better than anyone else.

But in the end, all claims need to be evaluated – what evidence is there for a set of claims?

And in this article, you might have misunderstood. I’m not doing a treatise on climate models. I’m reviewing a website with what seems to be an overly positive message on climate models, aimed at the general public.

SOD

Sorry I certainly didn’t mean any offense. Also thanks for the clarification on the purpose of the blog article. I had completely missed it to be honest. Your article reads much more like a criticism of climate models than of another blog article.

Regarding evidence, I didn’t see any. Climate model output is understood to reflect to average conditions over a very large number of realizations. So yes, models will be wrong in every single grid square at every single time step. But you haven’t provided any information on model performance and the relative importance of sub-grid vs. other model physics. It may be that thermodynamics drive the key results. So don’t think this article says anything about the value or use of climate models or whether “fudge factor” is a good term to convey information about climate models to the public.

I close by linking a journal article from Manabe and Weatherald (1975) perhaps the first GCM, I am not sure. Many caveats from the authors, very primitive, no ocean, yet the findings in the abstract aren’t bad, old hat now. You have to hunt for the ECS which is buried, not far from CMIP5, I’m sure there were compensating errors.

https://journals.ametsoc.org/doi/10.1175/1520-0469%281975%29032%3C0003%3ATEODTC%3E2.0.CO%3B2

Chubbs, I really think you don’t understand the issue. If in fact hind casting of GMST is a result of tuning and other things like clouds or effective aerosol forcing or ocean heat uptake are wrong then overall energy flows are wrong and future projections will be wrong too.

So far you have dealt with a single integral output function and that means little in terms of skill.

You seem to be unaware also of the importance of the distributions of forcings and energy flows to ECS and TCR and ultimately temperatures. The ice ages were caused by changes in the distribution of forcing. The total forcing remained constant. That’s as close to a proof as you are going to get in this field.

With respect, SOD showed an example of the very large impact of cloud microphysics sub grid parameters on the global mean temperature in the GFL model. This proves that “thermodynamics” does not drive the result. Just as in all CFD, the dynamics are critical for overall forces and temperature.

Sorry I should have said that thermodynamics does not determine the outcome by itself. Dynamics are also critically important.

Sorry dpy I don’t think you know the issue. Model performance hasn’t been discussed here. You are arguing from incredularity. What seems incredible to you is SOP to me.

How do you know dynamics are important? Lets take ENSO for example; very important for this year, somewhat important for this decade, unimportant for this century.

Dynamics are critical in all CFD simulations including of the atmosphere. Dynamics for example determine the equator to pole temperature gradient, cloud feedbacks, and indeed the lapse rate. It’s an obvious point to people in the field.

Regarding dynamics – climate models do a good job of simulating average global circulation.

Here is a reference for my statement on models getting some of the large-scale cloud feedbacks right:

https://www.nature.com/articles/nature18273

Another opinion on water vapor feedback from Nic Lewis:

“There is more doubt about whether the combined water vapour + lapse rate feedback in AOGCMs is realistic, despite model estimates being fairly consistent at around +1.0 Wm−2 °C−1. This feedback is concentrated in the tropics, where AOGCMs simulate strong warming in the upper troposphere with specific humidity (the amount of water vapour), which has a particularly powerful effect there, increasing and absorbing more radiation. Saturation water vapour pressure increases rapidly with temperature, so this would happen if relative humidity were constant. Observational evidence does not appear to support the existence of such a strong tropical “hot spot”. This suggests that water vapour feedback (and the weaker, opposing, lapse rate feedback) may be overestimated in AOGCMs. The below chart27 compares tropical warming trends in CMIP5 models and various observationally-based datasets over recent decades. The 100000 Pa (1000 mb) pressure level is near surface level, 30000 Pa (300 mb) is in the mid-upper troposphere.

Related to this, although some evidence suggests specific humidity has gone up (with relative humidity changing little)28, as in most AOGCMs, other evidence suggests it has been steady or fallen,29 30. NOAA data31 shows a marked decline in tropical upper tropospheric water vapour over recent decades, although this represents a model-based reanalysis and may not be accurate.”

28 Chung E-S. et al, 2014. Upper-tropospheric moistening in response to anthropogenic warming. PNAS doi/10.1073/pnas.1409659111

29 Vonder Haar, T.H.,J.L. Bytheway, and J. M. Forsythe, 2012.Weather and climate analyses using improved global water vapor observations, Geophys. Res. Lett.,39: L15802, doi:10.1029/2012GL052094.

30 http://www.drroyspencer.com/2015/08/new-evidence-regarding-tropical-water-vapor-feedback-lindzens-iris- effect-and-the-missing-hotspot/ Also see graphs in http://www.friendsofscience.org/assets/documents/NVAP_March2013.pdf

31 NOAA ERSL water vapour data. Specific humidity, 300 mb, 30N-30S, 0-360E, monthly values, area weighted grid. 300 mb, the highest level available, is in the upper troposphere.

It is very difficult to evaluate water vapor feedback with observations. I have seen several flawed attempts in the past. The best analyses have come to the opposite conclusion. Below for instance:

https://www.pnas.org/content/111/32/11636.short

While difficult in observations, it is very easy to evaluate water vapor feedback in a model and model results are tightly clustered. Strong water vapor feedback has been a consistent result for decades. If there was a way to generate a lower water feedback by adjusting model physics, someone would have reported it by now.

To me Nick’s document sounds like advocacy, not science.

dpy,

The “friends of science” are an… interesting choice of citation.

From their website

“Our Opinion:

It is our opinion that the Sun is the main direct and indirect driver of climate change. ”

Unlikely to be a reliable source.

https://friendsofscience.org

Nit picking is not interesting VTG. The quoted document has many references. Can you respond to the substance of the point about effective feedbacks in models varying over a large range.

dpy,

the use of reputable sources is not “not-picking”, it’s central to good faith technical discourse. Please use reputable sources, not political websites with a self-avowed prejudged opinion.

I’m not sure what precisely you refer to in ” the point about effective feedbacks in models varying over a large range.”, as your comment doesn’t refer to this as far as i can see. Is this some new point? There is indeed a wide range in sensitivity due to uncertainty in feedbacks.

Well “reputable” sources are often wrong too. Nic has perhaps 40 references. Your selection of one you don’t like is not a valid point. Can you respond to the technical substance for once?

dpy,

I have no idea what you want a response on. I have already asked…

Chubbs, Nic’s point here is technical and it seems well sourced to me. The whole issue of water vapor is tied up in convection, precipitation, clouds, and the lapse rate. Models don’t do a good job at any of these except perhaps the last one. An obvious conclusion once again is that if indeed they agree with what meager and uncertain data there is out there, it is not due to skill but to fortuitous cancellation of errors in the sub grid models.

I checked one reference, 29. It presents a “new” globally merged TPW data set for the period 1989 to 2009. Much of the paper covers work to remove biases in the data sets that are merged. Note that the data period ends in a La Nina, that depresses TPW values. No trend analysis or even trend discussion is included. Here is a quote: “Therefore, at this time, we can neither prove nor disprove a robust trend in the global water vapor data.”

#30 comes from a court case so is an advocacy document, covers 1990 to 2001 and doesn’t show any impact of the 1998 el nino.

There are many other observational references that could have been cited here.

Nothing in Nicks document is quantitative regarding lapse rate and H2O feedback – purely qualitative.

On the flip side we have very little spread in model lapse rate/H20 feedback estimates and there are published studies which show observations are consistent with the models.

So unconvincing and frankly not worth the time to analyze.

Chubbs: Manabe and Weatherald (1975) contains grossly wrong prediction about many things. Compare Figure 10 to today’s KT energy balance diagram. This mistakes occurred because M&W couldn’t properly model the flux of sensible heat and latent heat from between land/ocean and the atmosphere using large grid cells. You were correct recognize the likely existence of compensating errors in parameterization and pay little attention to the model’s ECS.

However, today’s models still have the same problems: The grid cells are still vastly too large to properly model heat fluxes at the boundary between surface and the atmosphere (as well as between clear and cloudy skies). The biggest problem occurs with the boundary layer, which IIRC is only two grid cells high, and topped by boundary layer clouds (the most cooling clouds on the planet). Yet, for some reason, you now choose to ignore compensating errors and believe model ECS.

However, the same Manabe provides evidence that today’s models are still filled with compensating errors. The linked paper shows the large observed (CERES) and modeled feedbacks that accompany seasonal warming. Models do a poor job of reproducing the observed changes in LWR from cloud skies (observed feedback is insignificantly negative rather than positive) and the changes in SWR (which aren’t linear with temperature and therefore aren’t “feedbacks”). Even worse, models are mutually inconsistent. They have somewhat the same ECS due to compensating errors. Nevertheless, you choose to believe their predictions about ECS.

The feedbacks observed in seasonal warming may not be the same as those in global warming. Seasonal change is the net result of warming in one hemisphere and cooling in the other. It overweights feedbacks outside the tropics where the temperature change is the largest. Nevertheless, useful models should be able to deal with this situation.

https://www.pnas.org/content/110/19/7568

“Here, we show that the gain factors obtained from satellite observations of cloud radiative forcing are effective for identifying systematic biases of the feedback processes that control the sensitivity of simulated climate, providing useful information for validating and improving a climate model.”

Yes Frank, the compensating errors point is obvious from first principles as well as experience with the models. It is amazing to me that anyone with any modeling experience would not credit this point.

I think elsewhere I pointed to the Zhou et al paper on tuning cloud microphysics which showed that ECS could be engineered over a wide range using credible values of the sub grid model parameters. That’s about as clear an evidence of the compensating errors idea as any I’ve seen.

SOD, Great post.

One could look to turbulence modeling as an example of how to improve sub grid models.

In the 1970’s practical turbulence models were algebraic (like the climate sub grid models you describe here). The local value of eddy viscosity was determined from the local properties of the flow field. Later as computers advanced one was able to use more sophisticated models which are partial differential equations that are global that are solved simultaneously with the Navier-Stokes equations. These extra PDE’s produce the eddy viscosity. This resulted in a dramatic improvement in the quality of the modeling.

Of course there are still limitations that are well known for more challenging flows. The problem is that the model parameters are determined based on simple 2D correlations based on 2D wind tunnel testing. Thus, complex 3D flows are not going to be right even though they are often not as bad as one might expect.

Another problem is that the PDE’s being solved are strongly nonlinear so the equations become hard to solve and there are bifurcations, multiple solutions, etc.

But overall, the modern models are a vast improvement.

SoD you say:

“Depending on the group I might hasten to add that “giant fudge factors” isn’t a nasty term, aimed at some nefarious activity of climate scientists. It’s just to paint a conceptual picture to people.”

I have an educational background in engineering science, environmental engineering, and meteorology and I’ve heard the term “fudge factors” many times over the years and I have even made simple spreadsheet air quality forecast models using “fudge factors” that work fairly well. My understanding is that weather models are full of “fudge factors” and they work reasonably well out to a few days.

So I agree that “fudge factors” are not necessarily a bad thing. However, my experience in using fudge factors is that they ideally need to be determined based on actual measurements and then later adjusted based on model performance over time for best results. No complex model will ever be perfect, but most forecast models can be improved over time. I think the current crop of climate models have a LOT of room for improvement.

Oz4 – that is the question I asked above: do weather modelers have to do the same steps: making fudge? Climate models are often described as simply being weather models. I was thinking climate modelers probably have to do many of the same/similar steps.

I have a nephew who is working on his PhD in Quantum Computing. Even if he and his fellow researchers are successful, I doubt a quantum computer could run a climate model in the manner people seem to be expecting.

One problem here JCH is that weather models can use vastly finer grids for their simulations. But weather models take advantage of the fact that Rossby waves are an easy problem over short time frames. I doubt weather forecasts are very good in the tropics where there are usually scattered showers. But in climate, its critical to model the tropics well.

JCH, I’ve used the output from weather models for forecasting weather and air quality since I was in college at the University of Texas at Austin in the early and mid 70’s and I have watched them evolve over the years. Even in retirement now I still look at a variety of weather model forecasts daily. However, I’m no expert on how they work. I do know that global weather models like GFS and ECMWF do not have the resolution necessary to resolve convective thunderstorm cells. The current GFS resolution is 1 degree of latitude by 1 degree of longitude. So I’m guessing that finer scale features, like cloud convection must be parameterized. The GFS performs fairly well for large scale weather features, but has little to no skill at predicting smaller features like squall lines and meso-cell thunderstorms. The newer high resolution regional models, like HRRR and NAM 3km do attempt to resolve convective clouds and perform better than the global models in this regard, but suffer from relatively sparse high resolution vertically resolved input compared to the grid spacing. The UM CCI Climate Reanalyzer has a nice display of the NAM3 here (be sure to check out the different parameters):

https://climatereanalyzer.org/wx/fcst/#nam.conus-lc.t2

Oops … I goofed on the GFS resolution. It’s now half a degree latitude/longitude resolution, which is still not small enough to resolve clouds and convection.

For some people interested in weather and climate models, I recommend Representing model uncertainty in weather and climate prediction,

TN Palmer et al (2005) – free paper.

There are a couple of useful ways that sub-grid parameterizations (fudge factors) are developed:

1. Process studies

2. Higher resolution models

1. Observe some specific climate processes via lots of instruments in one area – you might have some boats out on the ocean, planes collecting data at certain altitudes, satellite data. Out of the dataset comes a parameterization scheme.

2. I give an example of this – Siefert et al 2015 – at the end of Models, On – and Off – the Catwalk – Part Five – More on Tuning & the Magic Behind the Scenes.

Lots can be learnt from these high resolution models.

Just a note that higher resolution models still need “closure schemes” (fudge factors) unless they can resolve down to the mm scale. If they could resolve down to the mm scale there would still be lots of uncertainties (we find that dust particle size affects this process, what is the dust particle size in area x,y,z.. and so on). The Siefert 2015 example had a 25m x 25m grid and ran for a simulated 60hrs.

The other way that fudge factors are developed is finding the best value to ensure the modeled climate does what the real climate does, or did.

One thing that puzzles me about these high resolution models is how they can possibly handle free air turbulence. This turbulence is highly variable and must have a significant effect on the global energy transfers. Normally one would use an eddy viscosity model but these are expensive and work rather poorly for things like free shear layers (at the edges of convective cells for example).

[deleted by capricious moderator]

I just looked up the Siefert post and they avoid eddy viscosity models by using LES. That answers my question.

The fact remains, weather models often work very well. I follow the 10 day global forecasts by an online reanalysis site. While not perfect, they’re pretty good. As month unfolds, you have a pretty good idea what the BEST and GISS, etc. anomalies will be.

Here is a recent paper which describes the slow but steady progress in weather forecasting over the decades. Behind a paywall unfortunately, but the reference list includes many of the important “fudge factors” which are also used in climate models.

https://www.nature.com/articles/nature14956

SOD already explained that weather forecasting and climate modeling are fundamentally different in their purposes and the grid spacings to name just one difference. Weather is about short term prediction of Rossby waves, a relatively easy problem. Climate is about energy flows and long term dynamics of the system with the tropics being very important. Weather model skill does not imply climate model skill.

The big difference between weather forecasting and climate modeling is that climate models only have to be right on the long-term average; not for next Tuesday’s weather forecast, so climate modeling is much easier than weather forecasting.

Sub-grid processes are critical to the accuracy of weather forecasts so climate models get to piggy-back on the technology developed for weather forecasting. The same “fudge factors” used in climate models are being run operationally at great expense around the world.

Chubbs, As i’ve mentioned several times, your view on this is not correct. Overall “averages” will change when the detailed dynamics change. For example if convection becomes stronger that will create a change in the vertical temperature structure and humidity structure that will change the outgoing radiation balance.

Here’s another paper finding the importance of dynamics in climate.

https://ams.confex.com/ams/2019Annual/meetingapp.cgi/Paper/354779

Your reference indicates that using measured SST instead of model predicted SST improves the prediction of precipitation and vertical velocity. That seems very reasonable to me. Actual gradients are more intense than modeled. That also seems reasonable. Doesn’t say anything about ECS, though.

Here is a somewhat relevant example from weather forecasting. Weather models use ensemble forecasting methods where the initial conditions and/or the model physics of a best case operational model are perturbed to generate a range of possible outcomes. It is almost always the case that the average of the ensemble is a better forecast than the unperturbed operational model; even though, the operational model has better data and physics than any of the individual ensemble members.

[…] « Opinions and Perspectives – 6 – Climate Models, Consensus Myths and Fudge Factors […]

SoD,

“In the least technical terms I can think of.. imagine that we divided the world up into a 3d grid. Our climate model has one (and only one) value in each cell for east-west winds, north-south winds, up-down winds, high cloud fraction, low cloud fraction, water vapor concentration.. basically everything you might think is important for determining climate.”

You’re omitting something important here. GCM’s, as in CFD, are solving the Navier-Stokes equations. That is, the equations for momentum and mass, and also an accounting for energy. The essential point is that these are conserved quantities. That is, there is a meaningful mass and momentum for a cell. Just one number. And the N-S solution process balances that, basically by accounting at boundaries (boundary forces and fluxes).

That is the PDE solution process. The other things are subsidiary things that you can carry along. To take one interesting example that you mention – vertical velocity.

In fact, GCM’s radically simplify (for good reason) the vertical component of the momentum equation. They use a hydrostatic balance. This isn’t overwriting N-S with something new. It is just using the terms that remain when you say that the forces associated with vertical acceleration, and that component of viscous shear, are negligible. So they can be dropped from the force balance, leaving g and pressure gradient. That isn’t to say that vertical transport is impossible. We know it happens in storms, especially hurricanes etc. But we know too that these are infrequent. So they have an updraft model to account for that transport. That isn’t affecting the basic N-S solution process, which is the rapid time change process. It says that you can observe what happens, and add it in as a corrector.

I think Nick that the numbers for a given cell will be badly wrong if the cells are too big because of large numerical truncation errors.

Most CFD methods do discretely conserve mass, momentum, and energy. That’s not however always the case for climate models as i understand it. This conservation helps accuracy but is no guarantee of accuracy.

Generally, one needs sub grid models for scales not resolved by the grid. That’s where the fudge factors come in.

There is a strange side of models that Clive Best has put some light on, in his blogpost. “A comparison of CMIP5 Climate Models with HadCRUT4.6” Where he shows a comparison of the latest HadCRUT4.6 temperatures with CMIP5 models for Representative Concentration Pathways (RCPs). The temperature data lies significantly below all RCPs, which themselves only diverge after ~2025.

He has calculated Global Surface temperatures from 12 different CMIP5 models run with RCP8.5 from 1860 to 2100. These runs give a remarkabe result: There is a huge disagreement on the global average surface temperature even in 2018– a spread of 4C. The models now spread from a little over 12 deg C to a little over 16 deg C. This means a great cooling bias in most of the models. So, when the models get used, they must be adjusted to the real temperatures which are measured today? Some kind of fudge gymnastics.

NK: Basically, you are asking if models that get pre-industrial temperature wrong can predict the right amount of warming from 2XCO2?

For pre-industrial in a simple zero-dimensional model:

S(1-a) = eoTs^4 = 240 W/m2

If models get Ts wrong, then they must get the planet’s effective emissivity (e) or albedo (a) wrong T=288, e = 0.614 and Ts=289, e = 0.0607 both give 240 W/m2, so the “error” is actually very small. We have a pretty good idea of how much energy is being delivered by the sun (S) and it appears fairly constant, so let’s assume it isn’t one of the things that might be wrong.

RCP’s specify a given radiation forcing (say 8.5 W/m2), so there is no error in forcing. To calculate warming at steady steady, you need to know planet’s climate feedback parameter (dRi/dTs), the change in radiative imbalance (Ri) per degK of surface warming.

Ri = S(1-a) – eoTs^4

dRi/dT = -4eoT^3 – (oTs^4)*(de/dTs) – S*(da/dTs)

In other words, can models that get a or e very slightly wrong be trusted to get da/dTs and de/dTs right? As best I can tell, the former has no bearing on the latter.

Thank you for the clarification, Frank.

Could fudge factors be about neutralizing sysrematic errors in models?

According to Wild et al , 2014: “The magnitudes of TOA fluxes over land and oceans can now well be determined from the CERES satellite observations. The CMIP5 multimodel mean solar and thermal fluxes closely match these observations when averaged over land and oceans. More substantial biases remain in individual models. Compared to the TOA fluxes, the magnitudes of the surface fluxes are much less well established, as they are not directly measurable by satellites. Historically, this has lead to an unsatisfactory large spread in the surface flux estimates already on global or land/ocean averaged-scales, and has prevented the establishment of firm agreed-upon reference values for the magnitudes of the ocean and land surface energy balance components. This is still evident in the significant spread in the land and ocean surface budgets of the CMIP5 models. Compared to direct surface observations, the majority of these models tend to overestimate the downward solar radiation over land and oceans, and underestimate the downward thermal radiation particularly over land.”

The energy balance over land and oceans: an assessment based

on direct observations and CMIP5 climate models

Martin Wild · Doris Folini · Maria Z. Hakuba · Christoph Schär ·

Sonia I. Seneviratne · Seiji Kato · David Rutan · Christof Ammann ·

Eric F. Wood · Gert König‑Langlo

Click to access 10.1007%2Fs00382-014-2430-z.pdf

So ,even solar radiation at land and ocean surface is wrong in models, and show very different values across models. The same with downward thermal radiation. But why bother when they go in opposite direction. And in addition we can bring in some fudge factor to balance it even more. So, let us calculate the climate in year 2100 with neutralized systematic biases. And if Martin Wild protests, he can be neutralized at RealClimate.

Wild, PowerPoint Presentation

Click to access 13369-verification-radiation-large-scale-climate-models.pdf

I think this is overly negative about climate models. While there are tunable parameters in the models, that does not mean that you can get anything you want out of a model. One thing that has had a big influence on me is the research that sweeps parameter space of the models to determine the range of climate sensitivities that one can reasonably get from a model. They find that ECS > 2 in just about any realistic model (e.g., models must be stable).

Click to access nature_first_results.pdf

It’s also worth saying that people spend an incredible amount of time refining parameterizations. As a result, the models do an incredible job at many things that rely on these, like the water vapor feedback.

I think Andrew you may be suffering from selection bias. There are lots of things the models get wrong. Regional precipitation is one SOD showed here. TLT is another one. It seems that the vertical temperature profile in the tropics is also badly missed. Convection aggregation is shown by experiments to reduce ECS quite significantly and is mostly not accounted for. There was a paper looking at solar flux at the surface. It was off in models by an average of 7 W/m2. Cloud fraction as a function of latitude is all over the place. Gavin Schmidt just posted on the ocean heat uptake in the GISS model which appears to be significantly higher than the most recent data analyses show. These things lend credence to the fudge factor characterization.

I can relate this to the history of turbulence modeling where big steps forward have been taken. Most climate sub grid models are algebraic like practical turbulence models were 40 years ago. The results were generally poor. Modern models solve global PDE’s for the eddy viscosity and are a lot better. It is very unlikely that convection in one grid cell doesn’t impact neighboring grid cells.

First principles analysis shows that the numerical truncation errors in climate models are very large. Also sub grid models for convection are also almost certainly way off. The only way you can get skill on some measures in these circumstances is if what you are looking at is strongly related to quantities used in tuning. That tuning merely optimizes cancellation of errors.

If you read here in the comments and elsewhere you will find some of the references to negative results. One that shocked me was the extreme sensitivity of model results to the order in which sub grid models are applied. What that means is that these models are not independent of numerical details and so tuning will become wrong when those details change. If I had such a low standard for CFD modeling, I’d be fired.

https://agupubs.onlinelibrary.wiley.com/doi/full/10.1002/2017MS001067

Here’s another one.

https://journals.ametsoc.org/doi/10.1175/JCLI-D-15-0191.1

In figure 1 of your reference Andrew, there are some (only a few though) model runs for a doubling CO2 scenario that have decreasing temperatures.

It seems to me that regional and continental scale predictions are the real supposed value of climate models. Energy balance methods can also project average temperatures quite well. While it is true that effective forcings are needed, those are globally averaged forcings. As we get better data on ocean heat uptake, TOA radiation imbalance and forcings, those simple model predictions will get better.

In fact even on the measure of global average temperature anomaly the model performance is not great. Here are some plots by Clive Best.

Here’s one comparing models vs. reanalysis data from weather modeling.

This was in response to Zeke Hausfather tweeting a graphic showing almost perfect agreement of CMIP5 with data but using a baselining period of 1981-2010. Clive used 1961-1990 which is what AR5 used and what the Hadley center uses. This just shows that there is a lot of stuff that gets out there that doesn’t hold up under careful analysis.

So, the bottom line question is why all the continued huge investment in climate models when they add little to our ability to predict anything? Given the very coarse grids used and the algebraic sub grid models (which experience in turbulence modeling shows are unlikely to be very good), is there any prospect that they will become skillful anytime in the foreseeable future?

Seems to me that better data and better theory will give more information for the dollars invested. Aeronautical CFD is hard enough already. There is at least some prospect that continued investment will improve CFD skill because the models are already pretty good for many situations and the deficiencies better understood. This will give us a better handle on how to improve climate models.

“First principles analysis shows that the numerical truncation errors in climate models are very large. Also sub grid models for convection are also almost certainly way off. The only way you can get skill on some measures in these circumstances is if what you are looking at is strongly related to quantities used in tuning. That tuning merely optimizes cancellation of errors.”

Even if you use something like LES to simulate the large scales and make use of the fact that backscatter is very small and energy flows in the mean to the smallest resolved scales, you will have almost no chance in getting the subgrid information you would like to have with big grid cells.

People tried to use stochastic models to generate some structure mimicking some kind of isotropic turbulence. Nevertheless, trying to find accurate prediction on the subgrid scale is impossible, in my opinion, esp. for higher moments. I say so doing those simulations for years and developing models myself.

Most one- or two-equation RANS models are a lot worse, relying on crude eddy viscosity concepts with often only one external length scale. Those models, when applied to wings etc., are perfectly able to get integral quantities reliably (e.g. drag or lift, used by the big car and air-plane companies), but fail already in prediction detachment or reattachment, reliably. As you said, Reynolds stress models have become really good but due to the higher cost aren’t used in industry at all – I know of no German manufacturer having used any for production stuff. Nevertheless I consider those important, as they are able to deal with anisiotropy.

The problem is not, that those RANS models lack physics. It is that the physics involved is often crude or simplified, lacking important mechanisms and therefore not being able to predict strongly anisotropic turbulent flows requiring a multitude of length and time scales.

LES is a much better way to go and my experience is that you get excellent results, but only if you can afford the computational expense and resolution required to resolve the high shear/gradient regions and have a mesh of the order of the scales you want to make predictions about.

Yes Holger sounds like you have a lot of experience. However, there are problems with LES too. My sources tell me that there is no grid convergence and that there is sensitivity to initial conditions too. What I’ve seen so far is pretty sketchy and isn’t really very convincing.

Andrew,

Welcome.

What do you think about the paper by Golaz?

I know Chris and I trust his work. As the paper points out, there remain important problems in understanding the temperature evolution of the 20th century, and the magnitude of aerosol indirect forcing is one of the biggest. Nevertheless, that doesn’t really negate my point that no one has demonstrated that you can tune the parameters in a model to get a very low ECS (i.e., much less than 2 deg). It may be possible, of course, but it’s yet to be demonstrated. Combined with other results from measurements of feedbacks based on observations & simple theoretical arguments, the case for ECS < 2 deg is, in the opinion of most experts, weak.

Andrew,

I’m not making that point in this article. That is, I’m not claiming anything about the likely ECS range (and as best as I understand the work of climate science so far I believe you are correct).

This article, this series, is aimed at a less technical audience. A previous article commented on contrarian myths on climate models. The last article and this one comment on “consensus myths” (where “consensus” = popularized support for the climate science position).

The myths:

– there aren’t fudge factors in climate models

– reproducing past global temperature anomalies means that “we can trust climate models”

Regardless of how few people read this blog, I’m trying to explain important subtleties of climate science. This series is more a commentary.

—

But interesting questions follow in my next comment..

interesting questions..

– in reading a large number of papers on the ice ages I found that as model refinement improved, a proposition that seemed supported became unsupported, then supported again with yet more model improvement (onset of ice ages with year round snow cover at high latitude)

– likewise in reading papers on improved model resolution in the oceans (over a much smaller domain) I found that clarity appeared where in coarser models the simulations had more problems.

So, I wonder.

At the moment we can simulate century scale at 100km x 100km. When we can simulate the same time scale at 1km x 1km (if we ever can) how will this change projections of precipitation, regional temperature changes, and so on?

How can we have confidence in models that disagree on regional changes yet it appears regional changes matter for model predictions? (I can expand on this question, but will need to look up a bunch of papers, hopefully you see where I am aiming).

I think the answer is that I wouldn’t put a lot of faith in specific regional predictions of climate models for 2 reasons. First, it’s not clear that the models are capable of doing that, and second, internal variability can have huge impacts on regional climate (http://www.cgd.ucar.edu/staff/cdeser/docs/deser.communicating_navari.ncc12.pdf), so that even with a perfect model, to get internal variability right you also have to initialize the model perfectly, too.

Overall, I think people should be skeptical of models. We are not confident of our future predictions because we are confident in the models. Rather, the field relies on observations and simple physical arguments. Models are useful to verify those and to allow us to better understand phenomena. Water vapor feedback is a good example: our extremely high confidence is based on simple physical arguments (https://journals.ametsoc.org/doi/pdf/10.1175/JCLI-D-14-00255.1) and observations (https://drive.google.com/file/d/0ByXC85Z909PTNm42ZzE4Zk5SVzQ/view). The fact that models simulate it correctly adds to our confidence.

So what can we say about regional temperature changes? We know temperatures are going up everywhere — more on land than ocean and more in winter and at night than summer and the day. And more in the middle of continents than on the edge. Those conclusions arise from simple physics. We know that, as the Earth warms, the jet streams will move poleward as the Hadley cell expands, which is another simple physical argument. This will push precipitation poleward. You don’t need climate models to reach any of these conclusions.

If you want to know what’s going to happen to your hometown, I don’t think we’re in a position to say that yet.

How about continent?

For continents, I think the models are going to do a reasonable job. That said, internal variability is important for predictions at that scale, so you not only need a good model, you also have to initialize the model with an accurate state of the ocean. I suspect that the initialization is a much bigger roadblock to regional predictions than the parameterizations of the model.

I really recommend this paper: http://www.cgd.ucar.edu/staff/cdeser/docs/deser.communicating_navari.ncc12.pdf

It’s quite good and really shows how internal variability confounds regional predictions.

And yet for Australia the average rainfall over 25 years, as hindcast by the various modeling groups, shows a large range compared with reality. At least double through to less than 50% (see last article).

I haven’t dug into the projections but my understanding is that even for RCP8.5 (my name “an extreme emissions scenario”), where we would hope to separate the signal from the noise, the 2100 projections have some models with large increases in rainfall and some with large decreases in rainfall, and everything in between.

It doesn’t seem like a reasonable job for Australia. I know it’s a small place.

I haven’t looked into Australian rainfall so I don’t know if there are any rigorous results there. From a policy perspective, it may be that all you can say is that you need to be prepared for large, but unspecified changes in precip under high emissions scenarios. It’s worth noting that regional rainfall is hard, but that doesn’t mean that we know nothing about the future. If you want to know what you can “take to the bank,” you can bet on warmer temperatures in Australia, higher sea level, and acidified oceans.

Andrew said:

Thanks, it’s very interesting.

The paper you referenced Andrew is indeed very interesting and looks like a pretty strong negative result. Unless I’m misunderstanding, it looks to me as if models are all over the place even with regard to rates of temperature change over the next 40 years for the US, with some runs showing no change in the US and some a rapid rate of change. Summer seems a little bit more tightly clustered. Looks like Mazatlan has a much tighter range though with not much change being shown. This does not look like it can be blamed on internal variability to me since the trends look rather consistent over 40 years.

The global average temperature seems to be more tightly clustered. What that tells an experienced modeler is that the average is right perhaps because of conservation of energy (TOA radiation in is a tuned for metric and ocean heat uptake is in the ballpark) but the pattern is wrong in detail. That’s what is called in the trade cancellation of large errors. Experienced CFD modelers also know that in such a circumstance, output quantities will only be accurate either by chance or because they are related to quantities for which the model has been tuned.

With due respect, no one would use such poorly performing CFD models for decisions where human life or safety was involved. The FAA wouldn’t allow it and competant executives would never take such risks. Individual scientists would not allow it either or indeed present the results as if they were accurate or even meaningful.

Until recently, the GFDL models didn’t have the ability to produce and aerosol indirect effect. The Golaz paper explored parameterizations that introduce different amount of aerosol indirect effect into their models. Figure 2 shows that nothing else was improved or changed between three versions of CM3, but aerosol forcing changed: -1.0, -1.6 and -2.3 W/m2. (vs Total GHG forcing +2.6 W/m2.) Only about -0.5 W/m2 is reflection of SWR by aerosols. So now the CM3 model has parameters that can be tuned to hindcast whatever historic forcing one needs to make warming agree with observations without changing anything else about how the model functions. As SOD says, a giant fudge factor. (Since RCPs assume aerosol forcing is going to disappear, tuning the aerosol indirect effect has no future projections.)

Ironically, as Golaz (2013) was being published, the AR5 was casting extreme doubt about the ability of climate models to produce a realistic aerosol indirect effect.

7.4.3.1 The Physical Basis for Adjustments in Liquid Clouds (p609)

“The traditional view (Albrecht, 1989; Liou and Ou, 1989) has been that adjustment effects associated with aerosol–cloud–precipitation interactions will add to the initial albedo increase by increasing cloud amount. The chain of reasoning involves three steps: that droplet concentrations depend on the number of available CCN; that precipitation development is regulated by the droplet concentration; and that the development of precipitation reduces cloud amount (Stevens and Feingold, 2009). Of the three steps, the first has ample support in both observations and theory (Section 7.4.2.2). More problematic are the last two links in the chain of reasoning. Although increased droplet concentrations inhibit the initial development of precipitation (see Section 7.4.3.2.1), it is not clear that such an effect is sustained in an evolving cloud field. In the trade-cumulus regime, some modelling studies suggest the opposite, with increased aerosol concentrations actually promoting the development of deeper clouds and invigorating precipitation (Stevens and Seifert, 2008; see discussion of similar responses in deep convective clouds in Section 7.6.4). Others have shown alternating cycles of larger and smaller cloud water in both aerosol-perturbed stratocumulus (Sandu et al., 2008) and trade cumulus (Lee et al., 2012), pointing to the important role of environmental adjustment. There exists limited unambiguous observational evidence (exceptions to be given below) to support the original hypothesised cloud-amount effects, which are often assumed to hold universally and have dominated GCM parameterization of aerosol–cloud interactions. GCMs lack the more nuanced responses suggested by recent work, which influences their ERFaci estimates.

Figure 7.19 (p619) summarizes all of the available information about aerosol forcing and I will try to paste it below. If you believe models should reflect what we can measure from space, aerosol forcing should be from -0.45 to -0.95 W/m2 and only CM3w (-1.0 W/m2) is plausible. If you follow the IPCC’s expert judgement, CM3 (-1.6 W/m2) is on the edge of plausibility.

Figure 7.19 | (a) GCM studies and studies involving satellite estimates of RFari (red), ERFaci (green) and ERFari+aci (blue in grey-shaded box). Each symbol represents the best estimate per model and paper (see Table 7.3 for references). The values for RFari are obtained from the CMIP5 models. ERFaci and ERFari+aci studies from GCMs on liquid phase stratiform clouds are divided into those published prior to and included in AR4 (labelled AR4, triangles up), studies published after AR4 (labelled AR5, triangles down) and from the CMIP5/ACCMIP models (filled circles). GCM estimates that include adjustments beyond aerosol–cloud interactions in liquid phase stratiform clouds are divided into those including aerosol–cloud interactions in mixed-phase clouds (stars) and those including aerosol–cloud interactions in convective clouds (diamonds). Studies that take satellite data into account are labelled as ‘satellites’. Studies highlighted in black are considered for our expert judgement of ERFari+aci. (b) Whisker boxes from GCM studies and studies involving satellite data of RFari, ERFaci and ERFari+aci. They are grouped into RFari from CMIP5/ACCMIP GCMs (labelled CMIP5 in red), ERFaci from GCMs (labelled AR4, AR5 in green), all estimates of ERFari+aci shown in the upper panel (labelled ‘All’ in blue), ERFari+aci from GCMs highlighted in the upper panel (labelled ‘Highlighted GCMs’ in blue), ERFari+aci from satellites highlighted in the upper panel (labelled ‘Highlighted Satellites’ in blue), and our expert judgement based on estimates of ERFari+aci from these GCM and satellite studies (labelled ‘Expert Judgement’ in blue). Displayed are the averages (cross sign), median values (middle line), 17th and 83th percentiles (likely range shown as box boundaries) and 5th and 95th percentiles (whiskers). References for the individual estimates are provided in Table 7.3. Table 7.4 includes the values of the GCM and satellite studies considered for the expert judgement of ERFari+aci that are highlighted in black.

Andrew, on that subject:

It just occurred to me that if you ran the model shown in Golaz’ paper with the “realistic” value of the parameter (which shows no 20th century warming), maybe you would get no 21st century warming for RCP6?

I’m not sure what that tells anyone..

dpy6629,

I think you are misunderstanding it.

It’s one model with many runs, all starting from slightly different initial conditions.

The issue identified by the Golaz paper is with the aerosol indirect effect. Almost all of the forcing in the RCP’s is from greenhouse gases, so I’m confident that the warming over the 21st century would not be impacted much by the choice of how the indirect aerosol effect is parameterized.

Thanks SOD. That is surprising to me though. I would think that a 40 year consistent increasing trend of temperatures over the US of about 2C would be very rare. I do question whether real internal variability can be that large for example in the winter months. The historical US temps seem to follow pretty well the global values with admittedly more short term noise. But on 40 year periods, trends look pretty similar to me. Is an increase from 0 to about 4 in 40 years really possible when global temperatures only go about 1 degree?

From NASA Earth Observatory, 2004: “The scientists found that most climate models have been overestimating the amount of water vapor entering the atmosphere as the Earth’s surface warms.”

Another systematic bias.

Ha ha. You should look up who “The scientists” are and read the paper; that was not a very good description of the actual content of the paper.

Climate models are simulations. They are tuned to get global surface temperatures and TOA radiation right. I have never seen that they have a good grip on hydrological circle, as Hadey cell extension, upper tropospheric drying, precipitation and many other matters. These are the components of water vapor feedback.

And climate model runs are hypothesis, not evidence.

From a paper:Zachary Pirtle, Ryan Meyer, , and Andrew Hamilton, 2010: What does it mean when climate models agree? A case for assessing independence among general circulation models .2010.

“Climate modelers often use agreement among multiple general circulation models (GCMs) as a source of confidence in the accuracy of model projections. However, the significance of model agreement depends on how independent the models are from one another. The climate science literature does not address this. GCMs are independent of, and interdependent on one another, in different ways and degrees. Addressing the issue of model independence is crucial in explaining why agreement between models should boost confidence that their results have basis in reality.”

This is an excellent point. Most of the world’s climate really derive from less than half a dozen sources and the methods in the dynamical cores are pretty similar too. GFDL may be an exception in that they went to a Colella and Woodward discretization scheme instead of high order finite differences.

I just got a voicemail from someone (I won’t name them) thanking me for commenting on the blog. I’m glad that people appreciate my POV — sometimes it hard to know if anyone is listening. They didn’t leave any contact info, so I thought I’d say “thanks” here.

Hi Andrew, I appreciate your participation here as well. I haven’t met you before, but I have worked with your cohort John N-G in conjunction with several air quality studies in the past. Even though I would probably be best characterized as a climate “luke warmer”, I like to hear scientific arguments from all sides of the issues. No one person can know everything in this very complicated field.

I’ve been following weather models since I was studying meteorology in the now defunct UT Atmospheric Science Department with Professors Ken Jehn and Norm Wagner back in the early and mid 1970’s. The weather models have certainly come a long ways since back then. In retirement now, I’m trying to learn a bit more about weather and climate models, so your expertise is appreciated in that regard.

Delighted to see you back here, hope you stay engaged.

Well the paper on regional climate model ensemble predictions was interesting. Are there any comparisons with regional historical trends vs. historical model simulations for the region? There must be somewhere. I really question if on decadal time scales there is that much real internal variability. Particularly for winter in Seattle for example, it’s rare in my experience to have more than a few warm or cold ones in a decade. And decadal averages I am guessing would be pretty close to the underlying trend line. But maybe I’m wrong on that.

Likewise – good to see you back here and I hope you can hang around for a while. And thanks for the pointers to the paper above.. Interesting reading that!

I likewise appreciate your engagement. Given the natural tendency of humans to divide into tribes and be unable of assimilate evidence that contradicts deeply held beliefs, the only way even the most open-minded can really learn is by hearing the best arguments from all sides.

So far, I haven’t heard any compelling objections the thesis of this post:

“This is the reality in climate models. They do include giant fudge factors (sub-grid parameterizations). The “right value” is not clear. Often when some clarity appears and a better “right value” does appear, it throws off some other aspects of the climate model. And anyway, there may not be a “right value” at all.”

In the case of Golaz (2013), we see that hindcast warming can be dramatically changed by tuning the aerosol indirect effect, but mostly by creating an aerosol indirect effect which AR5 believes is too large and not supported by observations.

I agree with much of the Golaz paper: the aerosol indirect effect is one of the big uncertainties in climate science, and it’s one reason that we don’t really know what the historical radiative forcing is. That, in turn, is a primary reason the uncertainty in ECS estimated from the 20th century is so large.

However, we can also estimate ECS from periods where changes in forcing is not important (i.e., the last few decades), so we can end-run around this problem. https://drive.google.com/open?id=1OVUO_e6H5WMxhAYJhLiimY5kGYeL_Nyu

Second, aerosol indirect forcing is important to the 20th century forcing budget, but it is unimportant for the 21st century because it is completely dominated by CO2. So uncertainty in this will not cause much uncertainty in future warming.

At the risk of spoiling the good feelings here I should point out that this latest paper of Andy’s builds on an earlier paper with Mauritsen and Stevens (also from 2018) that Nic Lewis has critiqued in great detail. It appears to me that some of the criticisms are valid and may affect the later sections of the referenced paper too.

In Andy Dessler’s latest paper, Figure 1b shows a scatter plot and linear regression with Ts as the independent variability and R-F as the dependent variable, where F is radiative forcing and R is the imbalance at the TOA. (R = F + lambda*Ts, and the regression affords lambda, the climate feedback parameter and then ECS.) All variables are expressed in terms of monthly anomalies. R^2 shows that only 3% of the variance in R-F is explained by the change in Ts! As spoiled scientists who has had the satisfaction of performing well-controlled experiments in the laboratory, I personally find it absurd to assign any meaning to a regression based on an R^2 of 0.03 – despite the fact that similar plots have been published by many climate scientists of all persuasions.

If one looks at the TOA response to seasonal warming (Tsushima and Manabe (2013) PNAS), it is easier to understand why the regression in Figure 1 is likely meaningless. GMST (not the anomaly) seasonally rises 3.5 K every year due to the lower heat capacity of the NH and that produces unambiguous large changes in radiation at the TOA

The globally averaged, monthly mean TOA flux of outgoing longwave radiation (W/m2) over all sky (A) and clear sky (B) and the difference between them (i.e., longwave CRF) (C) are plotted against the global mean surface temperature (K) on the abscissa. The vertical and horizontal error bar on the plots indicates SD. The solid line through scatter plots is the regression line. The slope of dashed line [is 3.21 W/m2/K, Planck feedback with no other feedbacks]. https://www.pnas.org/content/pnas/110/19/7568.full.pdf

For LWR, we observe an unambiguous LINEAR signal showing that the planet as a whole emits about 2.2 W/m2/K more LWR from both clear and all skies (formally -2.2 W/m2/K) as it warms. In other words, WV+LR feedback is about +1 W/m2/K, and there is no positive cloud LWR feedback visible in this system (contrary to what AOGCMs predict). This is the kind of analysis anyone can trust.

Now let’s look at the SWR signal:

The globally averaged, monthly mean TOA flux (W/m2) of annually normalized, reflected solar radiation over all sky (A) and clear sky (B) and the difference between them (C) (i.e., solar CRF) are plotted against the global mean surface temperature (K) on the abscissa. The vertical and horizontal error bar on the plots indicates SD. The solid line through scatter plots is the regression line.

Now we clearly don’t have the same kind of SIMPLE linear relationship between reflected SWR (OSR) and Ts. Figure 2B shows the SWR response seen through clear skies, which is presumably the result of a combination of Rayleigh scattering (presumably independent of Ts) and surface albedo. Surface albedo change presumably reflects changes in seasonal snow cover sea ice. We EXPECT such responses to lag behind monthly Ts, explaining why we don’t see the same sort of unambiguously linear relationship we do for LWR. (The global sea ice anomaly shows two peaks per year during June and September and a single minimum in March, while snow cover is greatest during late winter in the NH.) Other factors may also be involved. It is clear that the response of cloudy skies at least partially lags behind monthly Ts, but the reason for this phenomena is less obvious. Lindzen and Choi and separately Spenser asserted that there is a slightly stronger correction between negative cloud feedback with a 3-month lag than the positive feedback observed with no lag. From my personal perspective, I think it is ludicrous to assert that any simple direct or lagged relationship describes the relationship between reflected SWR and Ts at ANY particular time. Emission of LWR is a function of Ts for obvious physical reasons, but there is no obvious reason to expect a simple physical relationship between reflection of SWR and Ts on a monthly time scale.

Dessler and Forster (2018) only analyze the sum of the LWR and SWR responses (R) to Ts and ignore the obvious fact that the SWR response can not be a simple function of the current monthly Ts anomaly. I don’t think this can tell us anything useful about the SWR feedbacks in response to global warming. The dynamic range of the temperature and radiation changes is much smaller and not reproduced every year.

Seasonal warming is the result of warming in the NH, cooling in the SH and relatively little change in the tropics, and therefore it is a lousy model for global warming. Nevertheless, it is obvious that AOGCMs are incapable of reproducing the large responses (except WV+LR through clear skies) to seasonal warming observed from space. Furthermore, those responses are mutually inconsistent between models.

Mauritsen and Stevens (2015) have recently confirmed the finds of Lindzen and Choi showing the that the LWR response to warming in the tropics shows POSITIVE LWR feedback (-4 W/m2/K). Our climate system is obviously much too complicated to be understood in terms of a single instantaneous (on a monthly time scale) global OLR+OSR response to changing Ts.

Click to access 553630ea0cf218056e92e697.pdf

Dessler and Forster do show a somewhat tighter correlation between the temperature at 500 hPa and R-F. Unfortunately, this is the temperature at 500 hPa from 30S-30N compared with global R-F. There is no physical rational for this pairing the data in this manner. When the authors eventually decide to compare tropics to tropics and non-tropics to non-tropics, separate the LWR and SWR channels and clear and cloudy skies, then it might be worth looking into the validity of their method for converting warming at 500 hPa into warming at the surface.

Suffice it to say I don’t agree with Nic Lewis. What we showed in Dessler, Mauritsen, and Stevens is that a model with an ECS of ~3°C could nevertheless look like an ECS of 2°C-4°C. Nothing he does in his analysis contradicts that.

He pushes forward a red-herring by focusing on changes in the uncertainty range (“this doesn’t change the uncertainty range”), and he’s right that if you add this in quadrature with other errors it has a small effect. But he doesn’t talk about how it effects the “most likely” value — it can move it by a significant amount.

When you add uncertainty to that issues with masking and blending of the observational surface temperature record (https://drive.google.com/file/d/1_C41ryDcWwq6bfNa22Lx8Z_b1MexROsY/view?usp=sharing), which also tend to low bias observational estimates by about 20%, the case that models are running hot begins to vaporize.

As far as the low R^2 criticism — yes, that’s a bummer. I wish we had more data so we could better isolate the signal. However, it is important to remember that low R^2 is not equal to high uncertainty … in fact they are unrelated. In the paper, we do a thorough uncertainty analysis and propagate the uncertainty in the relation through to our ECS range.

Every way of measuring ECS has some uncertainty. If you want to estimate a best estimate of ECS, you should look at all of them and use your expert judgment to come up with a range that best fits everything.

Well Andrew, Nic address some the issues you raise here in the post on LC 2018. They looked at using Cowtan and Way and found a marginal increase in ECS. I don’t think the SST blending issue is important if you are consistent since they look at changes in temperature and forcing. For the effect you found to be important you would have to be using short comparison periods and/or ignore things like matching AMO state. Further, the difference radiosondes show between tropospheric rates of warming at different altitudes is pretty small. We have no data for the troposphere at earlier periods of time.

I wouldn’t call his discussion of uncertainty a red herring. He simply showed that the internal variability in surface temperature implied by your analysis produced a narrower pdf that their allowance for that variability. That shows your paper does not affect the results of LC18 at all.

The problem here as I’ve documented above in detail with references is that GCM’s are simply not valid scientific evidence of the value of ECS. That leaves one with historical constrained estimates and paleoclimate estimates.

You could try to contact Nic directly or comment at Climate Etc. on those posts. Sniping on twitter doesn’t help advance science.

Thanks, everyone

Andy: The reason I focus on the OLR and OSR response to seasonal warming is that the changes are huge and have been observed yearly for many years. The is data tight. In my scientific experience, the goal has always been to devise improved, better-controlled experiments that allow one to more definitively understand a phenomena. Often that is accomplished by ISOLATING one aspect of a complex phenomena. With seasonal warming (3.5 K change in GMST), you have a phenomena that produces well-defined responses in OLR and OSR from clear and cloudy skies; responses that AOGCMs can’t reproduce – according to the linear analysis that T&M13 report. And the responses models do produce are mutually inconsistent. IMO, the R^2 of 3% in your Figure 1b tells those of us who have had the luxury of performing and sometimes dramatically improving laboratory experiments that your data set isn’t clean enough to illuminate what is really going on.

I’m aware that responses to seasonal warming are completely different from responses to global warming. (These “responses” shouldn’t be called “feedbacks” unless they are linear.) However, seasonal warming may be the only phenomena you can study with enough signal-to-noise to draw useful conclusions. When we understand and can reproduce the OLR and OSR responses to seasonal warming in much more detail, maybe we will know what to look for in global warming.

Professor Dessler wrote: “As far as the low R^2 criticism — yes, that’s a bummer. I wish we had more data so we could better isolate the signal. However, it is important to remember that low R^2 is not equal to high uncertainty … in fact they are unrelated. In the paper, we do a thorough uncertainty analysis and propagate the uncertainty in the relation through to our ECS range.”

What the low R^2 should be telling you is that the change in the monthly anomaly in R has little to do with the change in the monthly temperature anomaly. When you look at the larger changes associated with the seasons, the reason is obvious and unavoidable: LWR changes linearly within the same month as Ts, but SWR CHANGES ARE NOT. You can’t learn about the climate feedback parameter (the change in OLR+OSR with Ts) from monthly data when the OSR response takes more than one month to develop.