At least 99.9% of physicists believe in the theory of gravity and the heliocentric model of the solar system. The debate is over. There is no doubt that we can send a manned (and woman-ed) mission to Mars.

Some “skeptics” say it can’t be done. They are denying basic science! Gravity is plainly true. So is the heliocentric model. Everyone agrees. There is an overwhelming consensus. So the time for discussion is over. There is no doubt about the Mars mission.

I created this analogy (note 1) for people who don’t understand the relationship between five completely different ideas:

- the “greenhouse” effect

- burning fossil fuels adds CO2 to the atmosphere, increasing the “greenhouse” effect

- climate models

- crop models

- economic models

The first two items on the list are fundamental physics and chemistry. Proving them takes some advanced work (see The “Greenhouse” Effect Explained in Simple Terms for the first one, for people who want to work through a proof), but they are indisputable. Together they create the theory of AGW (anthropogenic global warming), which says that humans are contributing to global warming by burning fossil fuels.

99.9% of people who understand atmospheric physics believe this unassailable idea (note 2).

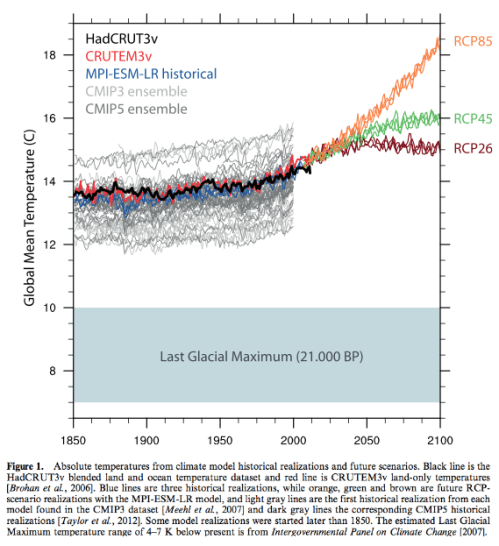

This means that if we continue with “business as usual” (note 3) and keep using fossil fuels to generate energy, then by 2100 the world will be warmer than today.

How much warmer?

For that we need climate models.

Climate Models

These are models which break the earth’s surface, ocean and atmosphere into a big grid so that we can use physics equations (momentum, heat transfer and others) to calculate future climate (the approach is similar in spirit to the finite element analysis used in engineering). These models include giant fudge factors that can’t be validated (by giant fudge factors I mean “sub-grid parameterizations” and unknown parameters, but I’m writing this article for a non-technical audience).

One way to validate models is to model the temperature over the last 100 years. Another way is to produce a current climatology that matches observations. Generally temperature is the parameter that gets the most attention (note 4).

Some climate models predict that if we double CO2 in the atmosphere (from pre-industrial levels) then surface temperature will be around 4.5°C warmer. Others predict it will be 1.5°C warmer. And everything in between.

Surely we can just look at which models reproduced the last 100 years’ temperature anomaly best and work with those?

If the model that predicts 1.5°C in 2100 is close to the past, while the one that predicts 4.5°C has a big overshoot, we will know that 1.5°C is a more likely future. Conversely, if the model that predicts 4.5°C in 2100 is close to the past but the 1.5°C model woefully under-predicts the last 100 years of warming then we can expect more like 4.5°C in 2100.

You would think so, but you would be wrong.

All the models get the last 100 years of temperature changes approximately correct. Jeffrey Kiehl published a paper in 2007 which analyzed the then-current class of models and gently pointed out the reason. Models with large future warming included a large negative (cooling) effect from aerosols over the last 100 years. Models with small future warming included a small negative effect from aerosols over the last 100 years. So both reproduced the past, but with completely different values of aerosol cooling. You might think we can just find out the actual cooling effect of aerosols around 1950 and then we will know which climate model to believe – but we can’t. We didn’t have satellites to measure the cooling effect of aerosols back then.
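Kiehl’s point can be seen in a toy calculation. This is a minimal sketch, not Kiehl’s method: a zero-dimensional model in which warming is simply sensitivity times forcing, divided by the forcing from doubled CO2 (the commonly quoted 3.7 W/m²). It ignores ocean heat uptake, and the past warming (0.8 K) and greenhouse forcing (2.5 W/m²) are made-up round numbers chosen only for illustration. Each “model” is tuned by picking the aerosol forcing that makes it match the past.

```python
F_2X = 3.7         # W/m^2 forcing from doubled CO2 (commonly quoted value)
OBSERVED = 0.8     # K, hypothetical past warming both models must reproduce
GHG_FORCING = 2.5  # W/m^2, hypothetical greenhouse-gas forcing over the period

def warming(sensitivity, forcing):
    """Equilibrium warming (K) for a forcing, given sensitivity in K per CO2 doubling."""
    return sensitivity * forcing / F_2X

def tuned_aerosol(sensitivity):
    """Aerosol forcing (W/m^2) that makes a model with this sensitivity match OBSERVED."""
    total_forcing_needed = OBSERVED * F_2X / sensitivity
    return total_forcing_needed - GHG_FORCING

# Two toy "models": low and high sensitivity, each tuned to the same past.
for s in (1.5, 4.5):
    aer = tuned_aerosol(s)
    past = warming(s, GHG_FORCING + aer)
    print(f"sensitivity {s} K: aerosol {aer:+.2f} W/m^2, "
          f"past {past:.2f} K, doubled CO2 {warming(s, F_2X):.1f} K")
```

Both tuned models reproduce the same past warming, yet one predicts three times as much warming for doubled CO2. Without an independent measurement of past aerosol forcing, the historical record alone can’t separate them.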

This is the challenge of models with many parameters that we don’t know. When a modeler is trying to reproduce the past, or the present, they pick the values of parameters which make the model match reality as best as they can. This is a necessary first step (note 5).

So how warm will it be in 2100 if we double CO2 in the atmosphere?

Somewhat warmer

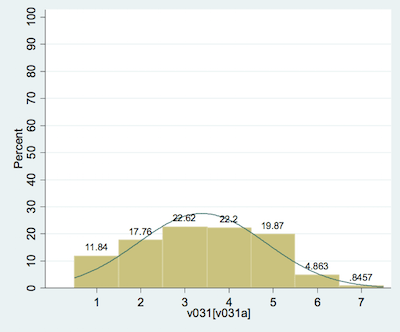

Models also predict rainfall, drought and storms. But they aren’t as good as they are at temperature. Bray and von Storch survey climate scientists periodically on a number of topics. Here is their response to:

How would you rate the ability of regional climate models to make 50 year projections of convective rain storms/thunder storms? (1 = very poor to 7 = very good)

Similar ratings are obtained for rainfall predictions. The last 50 years has seen no apparent global worsening of storms, droughts and floods, at least according to the IPCC consensus (see Impacts – V – Climate change is already causing worsening storms, floods and droughts).

Sea level is expected to rise by around 0.3 m to 0.6 m by 2100 (see Impacts – VI – Sea Level Rise 1 and IX – Sea Level 4 – Sinking Megacities) – this is from AR5 of the IPCC (under scenario RCP6). I mention this because the few people I’ve polled thought that sea level was expected to be 5-10 m higher in 2100.

Actual reports with uneventful projections don’t generate headlines.

Crop Models

Crop models build on climate models. Once we know rainfall, drought and temperature we can work out how this impacts crops.

Will we starve to death? Or will there be plentiful food?

Past predictions of disaster haven’t been very accurate, although they are wildly popular for generating media headlines and book sales, as Paul Ehrlich found to his benefit. But that doesn’t mean future predictions of disaster are necessarily wrong.

There are a number of problems with trying to answer the question.

Even if climate models could predict the global temperature, when it comes to a region the size of, say, northern California their accuracy is much lower. Likewise for rainfall. Models which produce similar global temperature changes often have completely different regional precipitation changes. For example, from the IPCC Special Report on Extremes (SREX), p. 154:

At regional scales, there is little consensus in GCM projections regarding the sign of future change in monsoon characteristics, such as circulation and rainfall. For instance, while some models project an intense drying of the Sahel under a global warming scenario, others project an intensification of the rains, and some project more frequent extreme events..

In a warmer world with more CO2 (which helps some plants) and maybe more rainfall, or maybe less, what can we expect from crop yields? It’s not clear. The IPCC AR5 wg II, ch 7, p 496:

For example, interactions among CO2 fertilization, temperature, soil nutrients, O3, pests, and weeds are not well understood (Soussana et al., 2010) and therefore most crop models do not include all of these effects.

Of course, as climate changes over the next 80 years, agricultural scientists will grow different crops and develop new ones. In 1900, almost half the US population worked in farming. Today the figure is 2-3%. Agriculture has changed unimaginably.

In the left half of this graph we can see global crop yield improvements over 50 years (the right side is projections to 2050):

Economic Models

What will the oil price be in 2020? Economic models give you the answer. Well, they give you an answer. And if you consult lots of models they give you lots of different answers. When the oil price changes a lot, which it does from time to time, all of the models turn out to be wrong. Predicting future prices of commodities is very hard, even when it is of paramount concern for major economies, and even when a company could make vast profits from accurate prediction.

AR5 of the IPCC report, wg 2, ch 7, p.512, had this to say about crop prices in 2050:

Changes in temperature and precipitation, without considering effects of CO2, will contribute to increased global food prices by 2050, with estimated increases ranging from 3 to 84% (medium confidence). Projections that include the effects of CO2 changes, but ignore O3 and pest and disease impacts, indicate that global price increases are about as likely as not, with a range of projected impacts from –30% to +45% by 2050..

..One lesson from recent model intercomparison experiments (Nelson et al., 2014) is that the choice of economic model matters at least as much as the climate or crop model for determining price response to climate change, indicating the critical role of economic uncertainties for projecting the magnitude of price impacts.

In 2001, the 3rd report (often called TAR) said, ch 5, p.238, perhaps a little more clearly:

..it should be noted however that hunger estimates are based on the assumptions that food prices will rise with climate change, which is highly uncertain

Economic models are not very good at predicting anything. As Herbert Stein said, summarizing a lifetime in economics:

- Economists do not know very much

- Other people, including the politicians who make economic policy, know even less about economics than economists do

Conclusion

Recently a group, Cook et al 2013, reviewed over 10,000 abstracts of climate papers and concluded that 97% of those expressing a position endorsed AGW – the proposition that humans are contributing to global warming by burning fossil fuels. I’m sure if the question were posed the right way directly to thousands of climate scientists, the number would be over 99%.

It’s not in dispute.

AGW is a necessary condition for Catastrophic Anthropogenic Global Warming (CAGW), but it is not sufficient by itself.

Likewise we know for sure that gravity is real and the planets orbit the sun. But it doesn’t follow that we can get humans safely to Mars and back. Maybe we can. Understanding gravity and the heliocentric theory is a necessary condition for the mission, but a lot more needs to be demonstrated.

The uncertainties in CAGW are huge.

Economic models that have no predictive skill are built on limited crop models which are built on climate models which have a wide range of possible global temperatures and no consensus on regional rainfall.

Human ingenuity somehow solved the problem of going from 2.5bn people in the middle of the 20th century to more than 7bn people today, and yet the proportion of the global population in abject poverty (note 6) has dropped from over 40% to maybe 15%. This was probably unimaginable 70 years ago.

Perhaps reasonable people can question if climate change is definitely the greatest threat facing humanity?

Perhaps questioning the predictive power of economic models is not denying science?

Perhaps it is ok to be unsure about the predictive power of climate models that contain sub-grid parameterizations (giant fudge factors) and that collectively provide a wide range of forecasts?

Perhaps people who question the predictions aren’t denying basic (or advanced) science, and haven’t lost their reason or their moral compass?

—-

[Note to commenters, added minutes after this post was written – this article is not intended to restart debate over the “greenhouse” effect, please post your comments in one of the 10s (100s?) of articles that have covered that subject, for example – The “Greenhouse” Effect Explained in Simple Terms – Comments on the reality of the “greenhouse” effect posted here will be deleted. Thanks for understanding.]

References

Twentieth century climate model response and climate sensitivity, Jeffrey Kiehl (2007)

Tuning the climate of a global model, Mauritsen et al (2012)

Yield Trends Are Insufficient to Double Global Crop Production by 2050, Deepak K. Ray et al (2013)

Quantifying the consensus on anthropogenic global warming in the scientific literature, Cook et al, Environmental Research Letters (2013)

The Great Escape, Angus Deaton, Princeton University Press (2013)

The various IPCC reports cited are all available at their website

Notes

1. An analogy doesn’t prove anything. It is for illumination.

2. How much we have contributed to the last century’s warming is not clear. The 5th IPCC report (AR5) said it was 95% certain that more than 50% of recent warming was caused by human activity. Well, another chapter in the same report suggested that this was a bogus statistic and I agree, but that doesn’t mean I think that the percentage of warming caused by human activity is lower than 50%. I have no idea. It is difficult to assess, likely impossible. See Natural Variability and Chaos – Three – Attribution & Fingerprints for more.

3. Reports on future climate often come with the statement “under a conservative business as usual scenario” but refer to a speculative and hard to believe scenario called RCP8.5 – see Impacts – II – GHG Emissions Projections: SRES and RCP. I think RCP 6 is much closer to the world of 2100 if we do little about carbon emissions and the world continues on the kind of development pathways that we have seen over the last 60 years. RCP8.5 was a scenario created to match a possible amount of CO2 in the atmosphere and how we might get there. Calling it “a conservative business as usual case” is a value-judgement with no evidence.

4. More specifically the change in temperature gets the most attention. This is called the “temperature anomaly”. Many models that do “well” on temperature anomaly actually do quite badly on the actual surface temperature. See Models, On – and Off – the Catwalk – Part Four – Tuning & the Magic Behind the Scenes – you can see that many “fit for purpose” models have current climate halfway to the last ice age even though they reproduce the last 100 years of temperature changes pretty well. That is, they model temperature changes quite well, but not temperature itself.

5. This is a reasonable approach used in modeling (not just climate modeling) – the necessary next step is to try to constrain the unknown parameters and giant fudge factors (sub-grid parameterizations). Climate scientists work very hard on this problem. Many confused people writing blogs think that climate modelers just pick the values they like, produce the model results and go have coffee. This is not the case, and can easily be seen by just reviewing lots of papers. The problem is well-understood among climate modelers. But the world is a massive place, detailed past measurements with sufficient accuracy are mostly lacking, and sub-grid parameterizations of non-linear processes are a very difficult challenge (this is one of the reasons why turbulent flow is a mostly unsolved problem).

6. This is a very imprecise term. I refer readers to the 2015 Nobel Prize winner Angus Deaton and his excellent book, The Great Escape (2013) for more.

Very well done.

One point you made that causes me angst: “The 5th IPCC report (AR5) said it was 95% certain that more than 50% of recent warming was caused by human activity”. Very few proponents for CO2 mitigation concede the point that even if we were sure that was the percentage caused by human activity we don’t know how much is due to GHG forcing, land use change, aerosols and other human activities affecting climate. Consequently how much confidence can we possibly have that CO2 mitigation is going to work as advertised?

Yes, gravity has very explicit equations, including that light is blueshifted (i.e. gets hotter) when descending into a gravitational well.

GHG has.. [moderator’s note – thanks Bob for reminding me to add the request that the debate about the greenhouse itself should be elsewhere. I have moved your comment to another article –

The “Greenhouse” Effect Explained in Simple Terms]

Ok .. [ moderator’s note – comment moved to relevant thread]

No evidence for the relationship between changes in atmospheric CO2 and emissions.

https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3000932

https://papers.ssrn.com/sol3/papers.cfm?abstract_id=2997420

I wonder how it is possible to hold on to climate models as predictive devices when there are so many systematic biases shared among a great majority of them. It looks like a consensus bias among those who build the models. It is now confirmed that models get the aerosol effect wrong, that some aerosols combined with soot can warm the atmosphere, that sulfur emissions have very little effect, and that vapor and dust from plants can make some difference (the CLOUD experiment). Then there are cloud biases, atmospheric ice biases, wind biases, rainfall biases and more. Shouldn’t a model get Antarctica right to have global relevance? So, if 20 parameters are systematically biased, how can scientists themselves believe in their model predictions?

NK,

Why not ask central bankers why they cling to models that are far worse than climate models. We know beyond a reasonable doubt that those models are worse than useless. Actions based on those models, like negative interest rates, are doing far more damage than climate models.

Everyone questions models, especially those who build and work with them on a daily basis.

The problem with the non-expert people questioning them is that they often use the uncertainty to forward their own narrative (i.e. climate sensitivity is low, more CO2 is good for plants or we’re doomed). The late Stephen Schneider had something to say about that: “The end of the world or it’s good for you are the lowest probability outcomes.”

Models are the one way to calculate the climate sensitivity. The time charts, however, are a joke. What matters is the concentration of CO2, which changes over the years. What should the abscissa be, if not the CO2 concentration?

In the correct representation (inter alia via the tropopause pressure) the trend can be extrapolated and, with the measured values from Hohenpeissen for example, gives a climate sensitivity of 3 K.

Perhaps denying science is not questioning the predictive power of economic models?

Perhaps people who are denying basic (or advanced) science should question the economic predictions rather than prove they have lost their reason or their moral compass?

The subject of Cook et al 2013 is precisely the consensus about the human contribution to climate change. It refutes the claim that this consensus misrepresents the literature, the accusations that scientists have more doubts than is claimed, and other “final nail in the coffin” statements suggesting that AGW theory is discredited. The paper does not claim that we should all go to Mars.

So, to sum up, this is a blog post so ridiculously partisan that it should be read backwards to make sense.

And what is CAGW, by the way – except a meme of the skeptical blogosphere? Who is the proponent of CAGW in the peer-reviewed literature ? What does it claim exactly ?

Ort,

I think you got confused by my complicated plot. I used an analogy. I can see why you got confused and I will think about how to rewrite the article.

Part One

————

– I agree with AGW. It is fundamental physics and chemistry.

– I agree that AGW is the overwhelming consensus of climate science.

Part Two

———–

– Neither the Cook et al 2013 paper, nor the consensus of climate science suggest we should send a manned mission to Mars.

– Missions to Mars are completely irrelevant to climate science and now I think about it, I can’t see why I brought it up. Apologies to my readers.

Part Three

————–

– I introduced the term CAGW because I have seen it used and because AGW simply tells us the world will be warmer if we keep burning fossil fuels.

– AGW doesn’t tell us how much warmer, or how dangerous that will be.

– I could have called “CAGW” instead “Theory B”. But, perhaps mistakenly, I thought using a new term might be more confusing than a term that many other people use.

– At the moment most non-technical people are confused. I suspect this is because fundamental physics and chemistry of the atmosphere lead to the theory called AGW. At the same time, the less certain theory of a coming catastrophe is also called AGW. This gives more opportunity for confusion.

Part Four

————-

– It seems that believing AGW is a necessary but not sufficient condition for believing that there will be catastrophic warming.

– Therefore (the confusing bit in my article), other things need to be demonstrated along with AGW to demonstrate a catastrophe.

– It seems that these are more speculative than the highly convincing theory called AGW.

The reason why CAGW is relevant is because Cook & Co never talk about anything but CAGW. If you question them on it by citing, say, the IPCC, they will say you are wrong because the IPCC has been superseded by the latest model projection of catastrophe. Believers generally don’t refute the media reporting catastrophe. It’s an old trick: let someone else tell lies for you.

You may want to revisit including the Cook paper as an example of anything other than motivated reasoning masquerading as “science”. http://www.joseduarte.com/blog/cooking-stove-use-housing-associations-white-males-and-the-97

Speed rating the abstracts of 10,000+ papers doesn’t seem like an awesome method, but at least it’s something. Maybe it motivates another group to attempt a better analysis.

I’ve read a lot of climate science papers myself. Maybe 1500. I haven’t done any kind of formal evaluation but if any of them questioned the existence of the “greenhouse” effect, or that burning fossil fuels added to the “greenhouse” effect I would have noticed.

Obviously, I’ve dissected a few of the “popular” papers that express contrary opinions in this blog and, of course, I have gone looking for all papers highlighted by people confused over AGW.

99%-100% seems like about the right range.

And, of course, Cook et al didn’t just look at abstracts, they asked the authors for a rating of their own work as well (the whole paper presumably, not just the abstract). Both results agree with each other, which strengthens the former method, something rarely mentioned in the discussion surrounding that paper.

https://skepticalscience.com/tcp.php?t=faq

Ontspan: From a scientific and policymaking point of view, Cook et al asked a totally meaningless question: Are humans causing (an unspecified amount of) warming? All the prominent skeptical scientists agree that humans are; there is no scientific controversy about it. This non-quantitative answer doesn’t provide policymakers any information useful for deciding whether to restrict GHG emissions.

The survey uncovered about 75 papers with abstracts discussing whether humans had caused at least 50% of recent warming, and only 65 agreed. Two (in)famous papers by Lindzen and Choi were not among the other 10. So there is no 97% consensus on this subject.

Even if 50% of warming were due to humans, what would that tell policymakers to do? Possibly nothing! Models assign at least 100% of recent warming to GHGs. If only half of that warming were due to man, then it would be sensible to assume that climate sensitivity of models is about 2X too big. In that case, projected warming of about 4 K would become 2 K. We might not need to do anything to stay under the 2 K target.
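The arithmetic in this comment is a simple linear rescaling. Here is a minimal sketch, assuming (as the commenter does) that projected warming scales in proportion to the share of past warming attributed to humans; this is a back-of-envelope simplification, not an established attribution method, and the function name is hypothetical.

```python
def rescaled_projection(projected_k, model_human_fraction, actual_human_fraction):
    """Linearly rescale a projected warming (K) by the ratio of attributed human fractions.

    Assumes warming scales in proportion to the share of past warming
    attributed to humans (a back-of-envelope simplification).
    """
    if model_human_fraction <= 0:
        raise ValueError("model_human_fraction must be positive")
    return projected_k * actual_human_fraction / model_human_fraction

# The comment's example: a model attributing 100% of recent warming to humans
# projects about 4 K; if only 50% is human-caused, the projection becomes about 2 K.
print(rescaled_projection(4.0, 1.0, 0.5))  # 2.0
```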

CO2 is not responsible.. [moderator’s note – I’ve moved this comment to The “Greenhouse” Effect Explained in Simple Terms for reasons explained in the article]

Doh. “land” –> should be “surface”

Few in the scientific community would talk about projections as ‘catastrophic’. The end of the world is just one extreme wing (low probability) on the probability curve of all outcomes. But there is plenty of chance for ‘dangerous’, which is a word used much more in the scientific community.

The large uncertainty in economic or biological models does not relieve any of that; on the contrary, I would say. Especially when we talk about ensuring life support on Earth, I would rather err on the side of too much preventive action.

Every time I look at economic results (i.e. stock results) I’m told that the past is no guide to the future, so I’m a bit puzzled by the suggestion in this article that we can solve any future challenges because we solved many challenges in the past. Not to mention that there are many different forms of ‘solve’, including many that will cost a lot of us dear.

SoD, you end your article with a lot of questions but I’m not sure if you ask them rhetorically or in earnest. Can you elaborate on this? How does uncertainty in models allow a form of single-sided skepticism that suggests that we’ll end in the ‘it won’t be bad’ part of the probability curve? Do you suggest we can safely reduce climate mitigation because economic models are rubbish?

ontspan,

I’m asking the questions in earnest.

I didn’t say that at all. Why read that into my question?

Perhaps because of the problem of “the debate”, which turns everything into two polarized camps.

Why did I write this article?

Most people don’t understand the difference between fundamental physics proving the “greenhouse” effect (AGW), and climate catastrophe.

I didn’t say they don’t understand the difference between fundamental physics and a nuanced view of possible danger from AGW if the warming is on the high side and other bad effects kick in. That’s because 99.99% of the population doesn’t read the nuanced view in climate papers.

So, I’m talking about the difference between fundamental physics and catastrophe.

That’s why I wrote this article.

I didn’t, in the article, suggest there was no danger. Instead I asked a few questions, including: “Perhaps reasonable people can question if climate change is definitely the greatest threat facing humanity?”

In the polarized world of today, asking this question is conflated with “denying basic science that 97% of climate scientists believe”.

I hope you understand what I am trying to explain. In the world of Manichean viewpoints it seems difficult to explain.

I suggest that the certainty of danger is low. There is danger. The certainty is low.

When serious people write about predictions of food prices in 2050 in a serious vein I can’t take it seriously. What 30 year predictions of commodity prices have come true? What proportion of 30 year predictions have come true? How can we assess the predictive power?

If the IPCC report summarized Food Security with “we can see potentially serious problems but have no way to quantify the likelihood” I could take their executive summary more seriously.

Let me be clear, I do not believe we can safely reduce climate mitigation because economic models are rubbish. On the other hand, reasonable people can differ about how seriously to take future safety because economic models are rubbish.

At the moment, in one large chunk of the media, people who differ about how seriously to take future safety – because economic models are rubbish – are called “science d—ers” [note, this blog’s moderation policies don’t allow certain words, as explained in The Etiquette].

Thanks for your clarification SoD.

In light of future population projections (which seem likely to me) and the increasing demand for high-intensity food (meat/dairy products), combined with possibly less predictable/stable weather patterns, I do wonder if past performance translates well into the future.

E.g. the crop yield graph suggests at least linear improvements into the coming decades, while I suspect that there are physical limits on the achievable crop improvements as well as climate constraints on increasing yields in the field. Can we really double real world crop production per hectare in ~40 years again? I wouldn’t assume that blindly.

Perhaps, regarding how seriously to take future safety in light of poor economic model performance, a lot comes down to which risk error model you prefer. See e.g. http://journals.ametsoc.org/doi/pdf/10.1175/BAMS-D-13-00115.1

While the results of those models may be questionable and rightfully attract skepticism, that fact may not have much influence on the seriousness of the threat of AGW on future safety as a Type 2 error is preferred over a Type 1 error when we’re talking about our life support system. At least to me (and judging by the Paris accord, to most people). So, in summary, I propose to take economic models, flawed and all, seriously in a discussion about future safety.

I’m sorry, I meant: avoiding a Type 2 error is preferred…

The problem here ontspan is that there are numerous threats to humanity. The real issue here is the risks are often quite uncertain as SOD points out. The real problem here in the debate is that partisans try to scare people with what amount to quite unlikely outcomes. It seems this happens with all issues nowadays and the media have descended into partisan territory not seen since the 19th Century.

I would just add a simple conclusion from SOD’s explication of GCMs’ predictions. It does appear that the latest research is yielding lower and lower estimates for aerosol forcing. This would tend to lend credence to those models with lower sensitivity. This is an area where some climate scientists.. [deleted – moderator’s note – please observe the blog Etiquette]. The very high uncertainty in GCMs has only recently been brought to the surface by actual modelers (as opposed to scientist activists like Hansen). And then there is convection and the tropics, where there is a rather bad mismatch between data and GCM predictions. That is important because it goes to the lapse rate theory, which is really a foundation of atmospheric sciences. Could it be that the lapse rate needs revision? Amongst all the nasty partisans in the debate, that important question has not been addressed by anyone. Perhaps SOD can weigh in.

In short, there are lots of holes even in fundamental atmospheric science to address if we are really serious about this. If we just want to do “communications” I would say go to work for Al Gore.

ontspan

I don’t assume it blindly. I don’t assume it at all. I have no idea.

Let’s consider crop production and the growing world population which is expected to be around 9bn by mid-century (I didn’t check the expected number). Let’s consider it without climate change – can we feed the world the same or better per capita intake as now?

Perhaps this is the greatest threat facing humanity? A population bomb Paul Ehrlich Redux?

We don’t know whether rains will increase or decrease in any given region so it’s hard to say what will happen due to global warming. Likewise we have no idea even if there was no global warming. The variation in each region decade to decade is higher than any estimated trends.

Here are some drought estimates around the world since 1950 according to Sheffield and Wood 2008:

Here are drought estimates of China over 500 years from Dai 2010:

Without any anthropogenic warming we have large decadal and centennial variability. With anthropogenic warming we have large decadal warming with different trends in different regions.

Note that Dai 2010 found the opposite global trend for the last 50 years. And regionally I find it hard to match up their two reanalyses. (Both of these two papers were cited by the IPCC report which is why I highlight them).

So our knowledge on droughts is not great. We see lots of variation. Experts disagree even on the past.

Who can predict future crop yields without global warming?

dpy

I think you have important points. “The real problem here in the debate is that partisans try to scare people with what amount to quite unlikely outcomes.” Yes, we see it now with the release of the latest paper by Hansen et al. We have seen it before with many scientific papers. And the same stories keep coming up about sinking cities, dying species and all that. It seems like many scientists believe in an Eemian Hell that will break loose (as Hansen so dramatically put it), and that it is their mission to save the planet for the next generations.

As for the models, I think too that history haunts us. Expectations were defined by the earliest models. Hansen’s GISS II model (used up to year 2000) had a sensitivity of 4.4°C after 140 years with doubling of CO2 (according to Clive Best). Then the whole drama soup could begin, with quite unrealistic volcano and aerosol effects. These effects could explain the not so dramatic observed temperatures. The anthropogenic destruction has only been offset. Lag in the “pipeline”.

As for the lapse rate “theory”: I don’t know if the theory is wrong, but I think that perhaps the lapse rate estimates are uncertain. How big will the lapse rate feedbacks be with atmospheric warming? Will these feedbacks be linear? I have not seen much discussion about this.

A little more on the climate sensitivities from GISS II, 1983:

“The benchmark for climate sensitivity comes from the Hansen et al 1988 paper on global warming. The climate sensitivity is quoted as 4.2°C (Hansen et al, 1988) for doubled CO2 for the GISS GCM Model II (Hansen et al, 1983); subsequent papers have been reluctant to push for a sensitivity greater than that. However it appears that the 4.2°C number may have been an artifact of limited computing resources and particular parameterizations. Recent experiments with the same model (Rind and Chandler, 2005) have gotten a sensitivity > 5°C, if the doubled CO2 simulations are run for 100 years instead of the 30 years done in 1983. Newer versions of the GISS GCM have lower sensitivities because of aerosols and extensive tuning, otherwise higher resolution versions Model II have a sensitivity of > 6°C.”

From University lectures 2005, programmer Michael Shopsin

And Michael Shopsin should have first hand knowledge: “I’m a programmer at Columbia University for GISS a climate institute located on 112th street.”

nobodysknowledge,

Thanks for the history of the GISS GCM. In the 1980’s the calculations would have been on incredibly coarse grids as you say. I think I remember seeing somewhere that the current GISS E version has an ECS of 2.3 or so. Hansen did a great disservice to climate science with his alarmism and activism. One can never be sure that his science isn’t being skewed toward alarmism.

Hansen’s reply about the high sensitivity of the 1988 model was “It does not matter much over 20 years.” Buried in the 1988 paper is this gem:

“Forecast temperature trends for time scales of a few decades or less are not very sensitive to the model’s equilibrium climate sensitivity (reference provided). Therefore climate sensitivity would have to be much smaller than 4.2 C, say 1.5 to 2 C, in order for us to modify our conclusions significantly.”

RTFR

Well yes Eli, Hansen did indeed get it wrong on decadal time scales, so his rationalization is not worth much. There is a big difference between an ECS of 4.7 and 2.3. It’s more than a factor of 2. Hansen just had it wrong according even to later GISS model revisions.

It continues to be the case that I have never seen a QUANTITATIVE, i.e., computable, explanation of Hansen’s claim that Venus’s extreme surface temperature, 2.25 times the gray body temperature in its orbit (energy density 25 times), is due to an optical effect.

Until I do, I will be more than a skeptic.
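The arithmetic in this comment (not the physical explanation) can be checked with the Stefan-Boltzmann law. A minimal Python sketch, assuming standard values that are not stated in the comment: a solar irradiance of 1361 W/m² at 1 AU, a Venus orbital distance of 0.723 AU and a mean Venus surface temperature of about 737 K. For a rapidly rotating sphere whose absorptivity equals its emissivity at all wavelengths, the gray-body temperature does not depend on the value of that absorptivity, and because emitted flux scales as T⁴, a temperature ratio of 2.25 corresponds to roughly 25 times the radiant energy.

```python
SIGMA = 5.670374419e-8     # Stefan-Boltzmann constant, W m^-2 K^-4
S_1AU = 1361.0             # assumed total solar irradiance at 1 AU, W m^-2
VENUS_AU = 0.723           # assumed mean Sun-Venus distance, AU
T_VENUS_SURFACE = 737.0    # assumed mean Venus surface temperature, K


def gray_body_temperature(distance_au, solar_constant=S_1AU):
    """Equilibrium temperature of a rapidly rotating gray sphere.

    Absorbed power S * pi * R^2 equals emitted power 4 * pi * R^2 * sigma * T^4;
    a constant absorptivity = emissivity cancels from both sides.
    """
    flux = solar_constant / distance_au**2  # inverse-square law
    return (flux / (4.0 * SIGMA)) ** 0.25


t_gray = gray_body_temperature(VENUS_AU)   # about 327 K
ratio = T_VENUS_SURFACE / t_gray           # about 2.25
energy_ratio = ratio**4                    # about 25.7, since flux scales as T^4

print(f"gray-body T at Venus: {t_gray:.1f} K")
print(f"surface / gray-body temperature: {ratio:.2f}")
print(f"corresponding energy ratio: {energy_ratio:.1f}")
```

This only confirms that the 2.25 and 25 figures are mutually consistent; it says nothing about what mechanism produces the difference.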

Bob Armstrong wrote: It continues to be the case that I have never seen a QUANTITATIVE, i.e., computable, explanation of Hansen’s claim that Venus’s extreme surface temperature, 2.25 times the gray body temperature in its orbit (energy density 25 times), is due to an optical effect. Until I do, I will be more than a skeptic.

Now you have seen such an explanation. There are far better reasons to be or not be a skeptic or an alarmist.

Sorry, I still don’t see any equation I can implement. I don’t even see any equation based on spectra rather than the crudest computation with a scalar absorptivity parameter. Can an equation be presented in terms of the Schwarzschild differential? That I can implement.
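For a single wavenumber in a non-scattering atmosphere, the Schwarzschild equation can be written dI/dτ = B(T) - I, where B is the Planck function and τ is optical depth along the path. Across an isothermal layer of optical depth Δτ it has the exact solution I_top = I_bottom·e^(-Δτ) + B(T)·(1 - e^(-Δτ)). A minimal Python sketch of marching that solution upward through layers follows. The layer temperatures and optical depths are hypothetical inputs; obtaining realistic Δτ values from spectroscopic line data (HITRAN/HITEMP) is the hard part discussed in the replies, and this sketch does not attempt it.

```python
import math

H = 6.62607015e-34   # Planck constant, J s
C = 2.99792458e8     # speed of light, m/s
KB = 1.380649e-23    # Boltzmann constant, J/K


def planck_wavenumber(nu_cm, temp):
    """Blackbody radiance per unit wavenumber, W m^-2 sr^-1 (cm^-1)^-1."""
    nu = nu_cm * 100.0  # cm^-1 -> m^-1
    per_inverse_metre = 2.0 * H * C**2 * nu**3 / math.expm1(H * C * nu / (KB * temp))
    return per_inverse_metre * 100.0  # radiance per m^-1 -> radiance per cm^-1


def upward_radiance(nu_cm, surface_temp, layer_temps, layer_taus):
    """Vertical upward radiance at one wavenumber leaving the top of a stack of
    isothermal, non-scattering layers listed bottom to top.

    Each step applies the exact solution of dI/dtau = B - I across one layer.
    """
    radiance = planck_wavenumber(nu_cm, surface_temp)  # surface assumed to be a blackbody
    for temp, dtau in zip(layer_temps, layer_taus):
        transmitted = math.exp(-dtau)
        radiance = radiance * transmitted + planck_wavenumber(nu_cm, temp) * (1.0 - transmitted)
    return radiance


# Hypothetical atmosphere: ten 1 km layers, 6.5 K/km lapse rate from a 288 K surface.
temps = [288.0 - 6.5 * (k + 0.5) for k in range(10)]
window = upward_radiance(667.0, 288.0, temps, [0.0] * 10)  # transparent: surface radiance
opaque = upward_radiance(667.0, 288.0, temps, [5.0] * 10)  # opaque: close to top-layer radiance

print(f"transparent: {window:.4f}  opaque: {opaque:.4f}  W m^-2 sr^-1 (cm^-1)^-1")
```

A real calculation repeats this at every spectral point and integrates over wavenumber and angle to get a flux; the per-layer update itself is the simple part.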

Bob Armstrong,

I don’t think anyone here is going to hold your hand and lead you through the steps necessary to write your own line-by-line atmospheric radiative transfer program. Spectracalc.com has an online program, but it’s subscription based for anything really useful. If you want code, LBLRTM ( http://rtweb.aer.com/lblrtm.html ) is available for free. For the Venusian atmosphere, you need the HITEMP spectral database: https://www.cfa.harvard.edu/hitran/HITEMP.html

If you’re interested in learning about atmospheric radiative transfer, I suggest you purchase Grant Petty, A First Course in Atmospheric Radiation. It’s available from the publisher for $36. http://www.sundogpublishing.com/shop/a-first-course-in-atmospheric-radiation-2nd-ed/

You can also read SoD’s articles on calculating radiative transfer starting here:

Can’t you simply point me to one? In an APL it could not possibly be more than a dozen lines.

I still see little evidence that the journeyman “climate scientist” even knows how to calculate the equilibrium temperature of a colored (i.e., arbitrary absorptivity = emissivity spectrum) sphere illuminated by a disk with an arbitrary power spectrum. That should be standard textbook stuff in any modern undergraduate heat transfer course. Without that you literally don’t know how to calculate the temperature of a billiard ball under a sun lamp, much less the mean temperature of a planet’s surface.
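One way to do the calculation described here is sketched below in Python (not the K expressions on CoSy). It assumes an isothermal sphere whose spectral absorptivity equals its spectral emissivity, a distant blackbody disk as the source, no conduction or atmosphere, and a log-spaced wavelength grid. The Sun's temperature, radius and distance stand in for the sun lamp so the gray case can be checked against the familiar value of about 278 K. The function names and the two absorptivity profiles are illustrative, not from any library.

```python
import numpy as np

H = 6.62607015e-34   # Planck constant, J s
C = 2.99792458e8     # speed of light, m/s
KB = 1.380649e-23    # Boltzmann constant, J/K


def planck_lambda(lam, temp):
    """Blackbody spectral radiance B_lambda(T), W m^-2 sr^-1 per metre of wavelength."""
    with np.errstate(over="ignore"):  # exp overflow at short wavelengths just gives B = 0
        return 2.0 * H * C**2 / lam**5 / np.expm1(H * C / (lam * KB * temp))


def trapezoid(y, x):
    """Trapezoidal rule, written out to avoid NumPy version differences."""
    return float(np.sum(0.5 * (y[1:] + y[:-1]) * np.diff(x)))


def sphere_temperature(absorptivity, lam, t_source, r_source, distance):
    """Equilibrium temperature of a sphere with absorptivity(lam) = emissivity(lam),
    lit by a distant blackbody disk. It absorbs over its cross-section pi*R^2 and
    emits over its full area 4*pi*R^2, so absorbed flux per unit area is divided by 4."""
    a = absorptivity(lam)
    irradiance = np.pi * planck_lambda(lam, t_source) * (r_source / distance) ** 2
    absorbed_per_area = trapezoid(a * irradiance, lam) / 4.0

    lo, hi = 1.0, t_source  # emitted power rises monotonically with T, so bisect
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        emitted_per_area = trapezoid(a * np.pi * planck_lambda(lam, mid), lam)
        if emitted_per_area > absorbed_per_area:
            hi = mid
        else:
            lo = mid
    return 0.5 * (lo + hi)


lam = np.logspace(np.log10(0.05e-6), np.log10(1e-3), 20000)  # 0.05 um to 1 mm
sun = dict(t_source=5772.0, r_source=6.957e8, distance=1.496e11)

t_gray = sphere_temperature(lambda x: np.full_like(x, 0.7), lam, **sun)
t_colored = sphere_temperature(lambda x: np.where(x < 4e-6, 0.1, 0.9), lam, **sun)
print(f"gray sphere: {t_gray:.1f} K, colored sphere: {t_colored:.1f} K")
```

The gray sphere comes out near 278 K whatever its absorptivity. The hypothetical "colored" sphere, which absorbs poorly below 4 µm but emits well in the thermal infrared, settles much colder, roughly 160 K.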

I was quite disappointed that Incropera et al., Heat Transfer, didn’t cover the coupling between arbitrary spectra. But I see there are now newer editions online than the paper edition I got. It certainly needs to be presented somewhere, and in more traditional notation than on CoSy.

I did point you to one, LBLRTM. The fact that you think you could code a reasonably accurate radiative transfer program in a dozen lines of assembly language demonstrates your complete lack of understanding of the complexity of the problem. That’s why I suggested you read Petty’s book.

There are on the order of one million different absorption lines for CO2 and water vapor in the spectral range of interest, ~2-2500 cm-1. You have to calculate the shape of each line based on the local temperature and pressure from coefficients in the HITRAN database and then select the lines that matter for the incremental resolution step, something on the order of 0.01 cm-1. You have to do this for at least ten layers of the atmosphere, preferably more.
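The scale of a brute-force version of this calculation can be made concrete with the round numbers in this comment. Production codes typically do not evaluate every line at every spectral point (they limit each line's contribution to a window around its centre and handle far wings separately), so the figures below are an upper bound. They illustrate that the final spectral integration may be a dot product, but building its inputs is where the work lies.

```python
# Brute-force size of a line-by-line calculation, using the round numbers quoted above.
N_LINES = 1_000_000            # order-of-magnitude count of CO2 + H2O lines in range
NU_MIN, NU_MAX = 2.0, 2500.0   # spectral range, cm^-1
STEP = 0.01                    # spectral resolution, cm^-1
N_LAYERS = 10                  # minimum number of atmospheric layers

n_points = round((NU_MAX - NU_MIN) / STEP) + 1               # spectral grid points
line_shape_evaluations = N_LINES * n_points * N_LAYERS       # every line at every point
dense_matrix_bytes = N_LINES * n_points * 8                  # one float64 line-shape matrix, one layer

print(f"{n_points:,} spectral points")
print(f"{line_shape_evaluations:.2e} line-shape evaluations")
print(f"{dense_matrix_bytes / 1e12:.1f} TB for one dense layer matrix")
```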

A paper summarizing LBLRTM is here:

Mlawer_etal_2001_AERsum.pdf

A paper describing the Linepak program used at spectralcalc.com is, unfortunately, behind a paywall:

http://www.sciencedirect.com/science/article/pii/0022407394900256

Incropera is an introductory textbook. Dealing with complex emission and absorption spectra is beyond its scope.

APLs don’t care if you have one “line” or a million; it’s still just a couple of dot products. In fact, notations like K, the “template” for CoSy, don’t care if you have a million dot products.

And that doesn’t include niceties like the water vapor continuum spectrum.

If you really believe that, then you should write your own program and sell it. But you need to do the homework yourself. As I said above, don’t expect anyone here to do the heavy lifting. And it is heavy.

Bye.

I’m interested in the language, and in working with others specifically interested in these issues, important as they are.

I still have not gotten anybody to either confirm or challenge the algorithms in the freely downloadable K for the “radiative balance” for arbitrary spectra presented at http://cosy.com/Science/warm.htm#EqTempEq. Translating those few expressions into traditional integrals is not that hard (but then they are not executable).

If you think there is commercial value in such , and there likely is , perhaps you or someone you know might be interested in working on it .

And the programming languages CoSy melds, APL and Forth, are not considered lightweight. See my intro playlist on CoSy’s YouTube channel: https://www.youtube.com/playlist?list=PLfIstXupgHXX_tqjdX02FLf8JgyK-brho.

Bob,

Having relocated your earlier questions to another post, I hadn’t realized that your incomprehension of radiative physics had arrived back here.

Please post your incomprehension back in that article, or an appropriate article, for example, the series Visualizing Atmospheric Radiation.

I’ll just delete further questions of yours on the fundamentals that appear here (I won’t bother to relocate them).

Thanks. I try not to engage people like BA, but I was bored and he gave the false impression that he was seeking information. His obvious failure to follow the leads I gave him made that crystal clear.

ontspan,

You wrote: “Few in the scientific community would talk about projections as ‘catastrophic’.”

There is really no difference between “catastrophic” and “potentially catastrophic”. Most (all?) preventative actions that people take are to avoid potential catastrophes.

You wrote: “Especially when we talk about ensuring life support on Earth I would rather err on the side of too much preventive action.”

In other words, you are worried about catastrophic anthropogenic global warming.

It is perfectly reasonable to look for ways to prevent possible catastrophes. But your concern is one-sided since it ignores natural climate change. A real climate catastrophe, far more severe than anything that might come from anthropogenic warming, would be a return to glacial conditions (an “ice age”). There are good reasons to believe that ought to be happening now. No one knows why it is not happening, but one theory is that it is due to all the extra CO2 that people have put into the atmosphere. So maybe the correct application of the precautionary principle is that we should make sure we continue to emit CO2. I am not saying that we should do that, I am only saying that we don’t actually know.

You later wrote: “a Type 2 error is preferred over a Type 1 error when we’re talking about our life support system.”

Your jargon is meaningless. All you have to do to change a Type 1 error into a Type 2 error is to change your null hypothesis. You seem to incorrectly think there is only one null hypothesis subject to debate.

Ontspan wrote: “a lot comes down to which risk error model you prefer.”

However, professors at elite academic institutions weren’t elected to decide which error model to use. Long-range planning for any government covers about a decade, occasionally two, not the half-century it will take for climate change to have a big impact. (AR5 projected only 0.3-0.7 K of warming over the next two decades.) The US government clearly understands the coming bankruptcy of Social Security in a little more than a decade, but is doing nothing to prevent it while the problem is most tractable. If they can’t deal with Social Security, who in their right mind expects them to treat climate change as anything but a political football?

Most importantly, the future of CO2 emissions is mostly under the control of developing and undeveloped countries. China now emits more per capita than the EU, and twice as much in total as the US. Before its emissions peak around 2030, China could be emitting 50% more per capita than the EU and triple the US total. And every developing country’s top priority is to follow in China’s economic footsteps.

When economists determine an optimal strategy for mitigation, they select a discount rate based on a mathematical theorem. One key input in this calculation is an estimate of the future rate of economic growth. If your great-grandchildren are expected to be dramatically richer than you are today, it doesn’t make sense to spend precious capital on emissions reductions today, when their far richer descendants will have plenty of money to adapt. The higher the economic growth rate, the higher the discount rate you should use in calculating the social cost of carbon (SCC). Our elite academics live in a world they fear their great-grandchildren might envy. They favor mitigation, because their descendants might not be able to adapt.
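The comment does not name the theorem; the standard result it most likely refers to is the Ramsey rule, r = ρ + η·g, where ρ is the pure rate of time preference, η the elasticity of marginal utility and g the growth rate of per-capita consumption. That identification is an assumption. A minimal Python sketch with hypothetical parameter values shows how faster expected growth raises the discount rate and shrinks the present value of a far-future cost.

```python
def ramsey_discount_rate(pure_time_preference, elasticity, growth):
    """Ramsey rule: r = rho + eta * g (all rates per year, as fractions)."""
    return pure_time_preference + elasticity * growth


def present_value(future_cost, years, rate):
    """Value today of a cost incurred `years` from now, discounted at `rate` per year."""
    return future_cost / (1.0 + rate) ** years


# Hypothetical parameters: rho = 1%/yr, eta = 1.5, two growth scenarios.
slow = ramsey_discount_rate(0.01, 1.5, 0.01)   # 2.5%/yr
fast = ramsey_discount_rate(0.01, 1.5, 0.03)   # 5.5%/yr

# Present value of a cost of 100 (any currency unit) incurred 80 years from now.
pv_slow = present_value(100.0, 80, slow)
pv_fast = present_value(100.0, 80, fast)
print(f"slow growth: r = {slow:.1%}, PV = {pv_slow:.2f}")
print(f"fast growth: r = {fast:.1%}, PV = {pv_fast:.2f}")
```

With these illustrative numbers the same future cost is worth about ten times less today under the faster-growth assumption, which is the sensitivity the comment is pointing at.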

Our elite academics should keep their personal perspectives on risk assessment out of their scientific assessment of climate change.

I understand well the arguments you are making and basically agree with you. AGW is good science but does not necessarily imply disaster (CAGW). Advocates for immediate drastic action fall back on the precautionary principle, justified by ‘worst case’ scenarios that they themselves helped develop.

RCP8.5 is just one example of this because its name is based on 8.5 W/m2 TOA forcing, rather than on hypothetical future CO2 emissions. The only way to reach such huge values is to assume that the carbon cycle will very soon saturate so that the airborne fraction of emissions rises from 45% to 100%. So far there is zero evidence that this is actually happening. Economic modelling is notoriously biased.

The problem with drastic action too early is that we will most likely waste our one chance to get free of fossil fuels by investing in inefficient technology, which in the long term will do more harm than good.

Clive Best wrote: “The problem with drastic action too early is that we will most likely waste our one chance to get free of fossil fuels by investing in inefficient technology, which in the long term will do more harm than good.”

Great point. We are pumping huge amounts of money into silicon solar cells, probably at the cost of developing high efficiency solar cells that might actually contribute to a revolution in electricity production.

Clive wrote: “The problem with drastic action too early is that we will most likely waste our one chance to get free of fossil fuels by investing in inefficient technology, which in the long term will do more harm than good.”

Will waiting actually deliver significantly improved technology? For single layer Si-PV, the theoretical efficiency limit is 30%. In 2015, Solar City claimed they would be selling 22% efficient panels for the roof top market. Multiple layers can help, but probably won’t be cost effective if they aren’t based on silicon. About half the price of roof top solar is now installation. Miami has mandated roof top solar on all new single family homes constructed. Would home buyers there be better off with today’s panels for the next 30 to 50 years (1%/yr efficiency loss) or retrofitting with the improved technology available a decade from now?
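Under the comment's own assumption of a 1%/yr efficiency loss (actual degradation rates vary by product), the compound loss for a 22% panel can be sketched in Python as follows.

```python
def remaining_efficiency(initial_efficiency, annual_loss, years):
    """Panel efficiency after `years` of compound degradation at `annual_loss` per year."""
    return initial_efficiency * (1.0 - annual_loss) ** years


for years in (10, 30, 50):
    eff = remaining_efficiency(0.22, 0.01, years)
    print(f"after {years} years: {eff:.1%} efficient")
```

On that assumption a 22% panel is down to about 16% after 30 years and about 13% after 50, which is the baseline a retrofit with future technology would be compared against.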

Wind is an even more mature technology. The cost of installation (per MW) hasn’t dropped in more than a decade (though prices were higher around 2010).

All kWh of electricity are not equally valuable, so the LCOE doesn’t tell us how much more we will really be paying for low-carbon electricity now or in the future. I’m saying the difference in cost realized by waiting is unlikely to be worth the cost of waiting. To put it differently, if the US or UK has a carbon budget of X to spend over the rest of the century, we would be better off starting reductions now. If ECS were 2 or less, we might benefit from waiting, because the needed reductions would be much less. The possibility of a peak in fossil fuel production and a large spike in price (due to inelastic demand) favors starting now.

Improvements in our ability to cheaply store energy from non-dispatchable renewable generators could make a huge difference as wind and solar gain market share. That would reduce the true cost of wind and solar (and of meeting peak demand).

Will improvements in nuclear technology help? TMI and Fukushima have demonstrated that a loss of coolant accident will result in water reacting with the zirconium cladding on fuel rods, release of hydrogen, and potentially explosions. With this technology providing only 10% of world demand and creating serious incidents every decade or two, we probably can’t rely on it to deliver 50% of demand to a nervous population. Even if a new design eliminated this problem, and if high-level waste could be incorporated into fuel rods and burned for power, a crash program would take 2-3 decades to build and operate a dozen new plants long enough to demonstrate their improved safety. So it could take until 2050 before the US could confidently start building the roughly 300? plants that would be needed to supply all base load power through carbon-free nuclear. The same may be true of smaller modular reactors. Right now, regulatory burdens make it financially uncompetitive to build any new design in many developed countries. Any transition to improved nuclear doesn’t appear to conflict with investing in wind or solar for the next decade or two unless the lifetime of turbines and panels improves.

dpy6629 asked, August 2, 2017 at 8:39 pm:

The question is addressed in 100s or 1000s of climate papers and of course in textbooks.

Just a side note that what passes for discussion in the media and most blogs doesn’t give a flavor of the actual discussion in climate science. There isn’t some monolithic block of opinion, there are a wide range of opinions and ideas.

This is completely contrary to what many people believe so I can only claim it, and from time to time demonstrate it by highlighting many different papers.

At the heart of the lapse rate question is the important observation that in the tropics where convection is strong the actual lapse rate is very close to the adiabatic lapse rate. This is easy to calculate from theory if you know the amount of humidity.
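The adiabatic lapse rates can be sketched in Python. The dry rate is g/c_p; the saturated (moist) rate uses the standard textbook expression for a saturated parcel, Γ = g(1 + L·r/(R_d·T)) / (c_p + L²·r·ε/(R_d·T²)), with the saturation vapor pressure from Bolton's (1980) approximation. Constants are rounded standard values, and refinements such as ice-phase processes and the heat capacity of condensed water are ignored, so treat the output as approximate.

```python
import math

G = 9.81          # gravitational acceleration, m s^-2
CP_D = 1004.0     # specific heat of dry air at constant pressure, J kg^-1 K^-1
R_D = 287.0       # gas constant for dry air, J kg^-1 K^-1
R_V = 461.5       # gas constant for water vapor, J kg^-1 K^-1
L_V = 2.501e6     # latent heat of vaporization, J kg^-1
EPS = R_D / R_V   # ratio of molar masses, about 0.622


def saturation_vapor_pressure_hpa(temp_k):
    """Bolton (1980) approximation over liquid water, in hPa."""
    t_c = temp_k - 273.15
    return 6.112 * math.exp(17.67 * t_c / (t_c + 243.5))


def dry_lapse_rate():
    """Dry adiabatic lapse rate g / c_p, in K per metre."""
    return G / CP_D


def moist_lapse_rate(temp_k, pressure_hpa):
    """Saturated adiabatic lapse rate in K per metre at the given temperature and pressure."""
    e_s = saturation_vapor_pressure_hpa(temp_k)
    r_s = EPS * e_s / (pressure_hpa - e_s)            # saturation mixing ratio, kg/kg
    numerator = 1.0 + L_V * r_s / (R_D * temp_k)
    denominator = CP_D + L_V**2 * r_s * EPS / (R_D * temp_k**2)
    return G * numerator / denominator


print(f"dry: {dry_lapse_rate() * 1000:.2f} K/km")
for t in (273.15, 300.0):
    print(f"saturated at {t} K, 1000 hPa: {moist_lapse_rate(t, 1000.0) * 1000:.2f} K/km")
```

This gives roughly 9.8 K/km for dry air and roughly 6.5 and 3.7 K/km for saturated air at 0 °C and 27 °C, which is why the humidity matters so much in the tropics.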

More about this at Temperature Profile in the Atmosphere – The Lapse Rate and Potential Temperature.

This topic is also covered in most atmospheric physics textbooks as a basic building block.

Of course, the question of the actual lapse rate under global warming is widely discussed and complicated by the lack of quality (and decadally stable) observations in the upper troposphere. I read a lot of papers on this a while back but there was too much statistics and probably a lot at stake for the many people writing so I wasn’t able to form a useful conclusion that I could write about.

The lapse rate outside the tropics is a different and very difficult question without that same constraint.

My summary from memory: what theory predicts for the tropical tropospheric lapse rate isn’t well matched by observation, but the observations have lots of biases and errors that are hard to correct for. The last 10-15 years with CERES and AIRS may give a dataset that can resolve these problems.

The great Isaac Held had a comment in one of his papers that I’m sure I quoted not so long ago, but can’t now locate. Something to the effect that “assuming the current question about lapse rate gets resolved in favor of the prevailing viewpoint.. otherwise we will have to rethink some important questions”. Sorry for the lack of citation.

I guess I should read your earlier post on this. It does seem to me though that this is one aspect of the “fundamentals” that is not settled.

It does seem to me that consensus studies in climate science are really largely meaningless. You can “manufacture” a consensus by choosing a vague enough statement to poll. And those who tout them or author them have never seemed to me to be first stringers.

dpy6629,

I’m sure you know this point, but a preamble for the benefit of non-technical readers..

In physics we often say “all other things remaining equal”. That is, let’s change this one thing, nothing else, and see what happens. It has been a very successful reductionist approach in science over the last few hundred years.

Back to the question about the lapse rate. Let’s take pre-industrial levels of CO2, 280ppm, with current climatology – geographical distribution of temperature around the globe, cloud cover distribution, temperature profile up through the atmosphere, current distribution of specific humidity, and so on.

Let’s now calculate the outgoing long wave radiation (OLR), which we can easily do because we have the spectroscopic values of CO2 (and other GHGs) – i.e., the absorption of radiation at different wavelengths.

Now, without changing anything else (“all other things remaining equal”), let’s double CO2 overnight to 560ppm.

Now we recalculate the OLR and of course it has dropped (without looking up the number, memory says a little less than 4W/m2).

Now we increase the surface temperature to find at what increased surface temperature we get back to our old value of OLR.

What do we find – the surface temperature has to increase something like 1.2°C to restore the old OLR value with the new value of CO2. So, “all other things remaining equal”, doubling CO2 will increase the surface temperature by 1.2°C.
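The "all other things remaining equal" numbers can be cross-checked without a radiative transfer code by swapping in two standard shortcuts: the simplified CO2 forcing fit ΔF = 5.35·ln(C/C0) W/m² from Myhre et al. (1998), and an assumed Planck response of about 3.2 W/m² of extra OLR per kelvin of uniform warming (a typical model-derived value, used here as an assumption). This is not the spectroscopic calculation described above, only a consistency check on its numbers.

```python
import math

PLANCK_RESPONSE = 3.2  # assumed W m^-2 of extra OLR per K of uniform warming


def co2_forcing(c_new_ppm, c_old_ppm):
    """Simplified CO2 radiative forcing fit, 5.35 * ln(C/C0), in W m^-2."""
    return 5.35 * math.log(c_new_ppm / c_old_ppm)


def no_feedback_warming(c_new_ppm, c_old_ppm, planck_response=PLANCK_RESPONSE):
    """Uniform surface-and-atmosphere warming (no feedbacks) needed to restore OLR, in K."""
    return co2_forcing(c_new_ppm, c_old_ppm) / planck_response


forcing = co2_forcing(560.0, 280.0)          # a little less than 4 W/m^2
delta_t = no_feedback_warming(560.0, 280.0)  # roughly 1.2 K
print(f"forcing for doubled CO2: {forcing:.2f} W/m^2")
print(f"no-feedback warming: {delta_t:.2f} K")
```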

Notice we didn’t address any change in lapse rate. This is a feedback. If the lapse rate doesn’t change then it is a negative feedback because higher temperatures emit more radiation. If the lapse rate decreases, so that temperatures higher up in the atmosphere increase more than at the surface, then it is a yet more negative feedback, because the upper atmosphere emits even more radiation. Likewise, we didn’t address any ice melting, which changes the earth’s albedo (positive feedback). Or any change in clouds (unknown). Or humidity (expected to be a positive feedback). Or anything.

So at the fundamental level, because the “greenhouse” effect is a certainty and can be calculated from concentrations of CO2, water vapor and other radiatively-active gases, we can be certain of AGW. Increasing CO2, all other things being equal, reduces OLR, so the earth warms.

Perhaps the feedbacks somehow all perfectly cancel this out? It’s a remote possibility. Some feedbacks will reduce the effect of more CO2, some will increase the effect of more CO2.

How much? For that we need models (GCMs).

Hopefully you can see where the lapse rate fits in the whole picture. Saying that this is one aspect of the “fundamentals” that is not settled, is like saying that clouds represent one aspect of the “fundamentals” that is not settled. AGW does not depend on all the feedbacks. They are not settled. AGW says more CO2, more warming. How much? Answering that is currently done by solving climate models.

The lapse rate doesn’t behave exactly as the models say it should. The tropopause layer is warming when it is expected to cool. Or perhaps it is more like a constant temperature. This should give more OLR than expected, so the lapse rate feedback should be more negative.

“While anthropogenic GHG emissions have kept rising during the recent decades, the tropical TPTs did not decrease as expected from the GHG increase. Instead, since about the turn of the century, the tropical tropopause has significantly warmed, according to the Global Positioning System Radio Occultation (GPS-RO) measurements. The GPS-RO measurements provide an unprecedented accurate, global, and weather-independent data set of tropopause temperature with high vertical resolution. In fact, the tropical TPTs exhibit strong decadal to multidecadal variability, which could be related to internal variability of the climate system. At the same time, remarkable decadal variability has also been seen in the lower stratospheric water vapour.” TPT is tropopause temperature. From: Decadal variability of tropical tropopause temperature and its relationship to the Pacific Decadal Oscillation.

Wuke Wang et al, 2016.

NK,

Yes, the models don’t get the details right. Some or all of them predict a double ITCZ and the tropical upper troposphere hot spot hasn’t appeared. This isn’t exactly news.

Questions

From the concentration of CO2 and the pressure of the atmosphere one can calculate the mass of CO2 in the atmosphere, and this mass has increased over time.

From the amount of fossil fuel burned you can calculate the amount of CO2 emitted into the atmosphere.

The CO2 concentration in the oceans is also increasing.

According to the skeptics, the extra CO2 in the atmosphere comes not from combustion but from outgassing by the oceans.

The key question: how do the skeptics explain the increase in CO2 concentration, and where does the CO2 from burning fossil fuels disappear to?
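The mass calculation in the first two paragraphs can be sketched in Python. The global mean surface pressure (985.5 hPa), Earth's surface area and the molar masses are standard values assumed here, and water vapor is ignored, so the result is approximate. `airborne_fraction` is an illustrative helper whose example inputs are hypothetical.

```python
MEAN_SURFACE_PRESSURE_PA = 98_550.0   # assumed global mean surface pressure, Pa
EARTH_SURFACE_AREA_M2 = 5.10e14       # m^2
G = 9.81                              # m s^-2
M_AIR = 0.02897                       # mean molar mass of dry air, kg/mol
M_CARBON = 0.012011                   # molar mass of carbon, kg/mol


def atmosphere_mass_kg():
    """Hydrostatic balance: mean surface pressure times area equals the atmosphere's weight."""
    return MEAN_SURFACE_PRESSURE_PA * EARTH_SURFACE_AREA_M2 / G


def gtc_per_ppm():
    """Mass of carbon (GtC) corresponding to 1 ppm of CO2 by mole fraction."""
    moles_of_air = atmosphere_mass_kg() / M_AIR
    return moles_of_air * 1e-6 * M_CARBON / 1e12  # 1 GtC = 1e12 kg of carbon


def airborne_fraction(increase_ppm, emitted_gtc):
    """Fraction of emitted carbon that remained in the atmosphere."""
    return increase_ppm * gtc_per_ppm() / emitted_gtc


per_ppm = gtc_per_ppm()                  # about 2.12 GtC per ppm
example = airborne_fraction(2.0, 10.0)   # hypothetical: +2 ppm in a year with 10 GtC emitted
print(f"{per_ppm:.2f} GtC per ppm, example airborne fraction {example:.2f}")
```

Comparing the measured rise in ppm against the emitted carbon in this way is what shows that only part of the emitted CO2 stays in the air.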

In the troposphere the temperature gradient is largely determined by convection. The temperature gradient in the troposphere hardly changes with more greenhouse gases, at most somewhat indirectly through more water vapor because of its condensation properties.

In the stratosphere there is largely radiative equilibrium. Thus, the temperature gradients in the stratosphere are largely determined by the concentration of the greenhouse gases.

The position of the tropopause is set where the temperature gradient of the stratosphere becomes so great that convection begins.

If all this is not the case, why did Ernest Gold (later President of the Royal Meteorological Society) already assume that the tropopause height increases with greater CO2 concentration?

What determines the surface temperature? The altitude and temperature of the tropopause are determined by the net energy flow through the stratosphere. More greenhouse gases in the stratosphere increase the tropopause height, and the surface temperature increases because the temperature gradient in the troposphere stays constant.
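Under this argument's assumptions, a fixed tropospheric lapse rate and a fixed tropopause temperature (both assumptions of the argument, not established results), the surface temperature follows from one line of arithmetic. A minimal Python sketch with hypothetical values: a 6.5 K/km lapse rate, a 200 K tropopause at 13 km, raised by 150 m.

```python
def surface_temperature(tropopause_temp_k, tropopause_height_km, lapse_rate_k_per_km=6.5):
    """Surface temperature implied by a fixed tropospheric lapse rate:
    T_surface = T_tropopause + lapse_rate * tropopause_height."""
    return tropopause_temp_k + lapse_rate_k_per_km * tropopause_height_km


# Hypothetical values: tropopause temperature held fixed while its height rises 150 m.
before = surface_temperature(200.0, 13.0)
after = surface_temperature(200.0, 13.15)
warming = after - before  # 6.5 K/km * 0.15 km
print(f"surface warming: {warming:.3f} K")
```

If the tropopause temperature itself changes, as the decadal variability noted earlier in the thread suggests it can, this simple relation no longer holds on its own.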

What else should be the effect of CO2?

David Crisp, who heads the OCO-2 mission, has the best slide I’ve seen on the amount of human emission versus the amount not reabsorbed, in particular by the greening of the planet it is causing: http://cosy.com/y17/CrispCO2absorption.jpg.

What natural processes absorb half the CO2 emitted?

Most of it is absorbed into the oceans. Some goes into increased biomass.

Why does the rate change from year to year?

Ocean surface temperature may have an effect on the rate of absorption. I would bet, however, that changes in the net rate of biomass accumulation are more likely. Seasonal variation of atmospheric CO2 is much higher at Barrow, AK than it is at Mauna Loa and there is almost no seasonal variation at the South Pole. Atmospheric CO2 went up more rapidly during the recent strong El Nino. Dead plants rot faster when it’s warmer.

Ebel: I believe that the warming in the stratosphere with altitude is caused by locally produced ozone, not well-mixed GHGs. Without ozone, there would be no tropopause.

At the very top of the atmosphere (the thermosphere), high energy particles from the sun produce local warming as UV does in the stratosphere.

In theory, the height of the tropopause has been raised both by GHG-mediated changes and by the destruction of ozone by CFCs. Santer claimed a 100-200 m (1-2%) rise from both effects, complicated by large changes produced by volcanic aerosols.

santertext.pdf

That the tropopause has something to do with the ozone layer is, unfortunately, a widespread error. The ozone layer does, of course, have some influence on the height of the tropopause, but very little, because of the low mass of air at that altitude (the density there is low).

One more addition, Santer et al. with figures: https://www.math.nyu.edu/~gerber/pages/documents/santer_etal-science-2003.pdf

In the troposphere, large radiative changes are compensated by minimal changes in convection. This is why the stratosphere is decisive, since there is hardly any convection.

The increase in the height of the tropopause can be considered in two cases: either the amount of CO2 in the stratosphere stays constant, or it increases in line with the concentration change. The measurements show a change of about 2/3 of the concentration change.

Ebel,

Without the ozone layer there would be no tropopause and temperature would decrease with altitude up to wherever there is absorption of sunlight. See https://scienceofdoom.com/2012/08/12/temperature-profile-in-the-atmosphere-the-lapse-rate/, in particular Figure 7. However, there would be an altitude above which the temperature gradient is too small to drive convection, creating a “stratosphere” that is very different from the one we have.

Heat flows from high temperature to low temperature. So for there to be a temperature maximum, as there is at the stratopause, there must be a source of energy. That is provided by the absorption of UV by O2 and O3 in the ozone layer.

Although the existence of a tropopause is down to ozone, its location depends on other things that influence where the tropospheric and stratospheric gradients meet. By warming the troposphere and cooling the stratosphere, greenhouse gases raise that altitude. Greenhouse gases cool the stratosphere by making it easier to radiate the energy absorbed by O2 and O3.

Why did Gold already suspect in 1908 that the tropopause would rise with more CO2, even though the ozone layer was only discovered in 1913? See also http://geosci.uchicago.edu/~rtp1/papers/PhysTodayRT2011.pdf

Santer et al. confuse cause and effect. The greater the net energy flow through the stratosphere and the greater the greenhouse gas concentration, the thinner the stratosphere. The troposphere becomes colder at the top and warmer at the bottom, because the temperature gradient is approximately constant and the thickness of the troposphere increases.

All of this also follows from the radiative transfer equation, which is decisive in the stratosphere; see Schwarzschild (1906).

Accordingly, the tropopause does not rise because the lower troposphere is warmer. It is the reverse: because the tropopause height increases, the lower troposphere becomes warmer.

I mention this because the few people I’ve polled thought that sea level was expected to be 5-10m higher in 2100.

Actual reports with uneventful projections don’t generate headlines.

I’m just a cowboy, so I’m just going to shoot from the hip. No googling.

An early IPCC report had a fairly high estimate, but far less than 1 meter. Later, around 2007, it was reduced to around 13 cm. There was a caveat that models did not contain a component for nonlinear changes in the ice sheets. James Hansen criticized the IPCC for misleading people on potential SLR. Based on paleo data, he theorized that a rise of around 5 meters could happen in the 21st century. My understanding of what he wrote is that this was not a prediction of 5 meters. Currently there are scientists reporting progress on modeling nonlinear changes in the ice sheets. This sounds very similar to work my uncle did during WW2 as a member of George Rankine Irwin’s fracture mechanics group.

So I don’t know who you asked, but they are not following the issue at a meaningful level, and I have pretty low regard for your comment.

JCH wrote, “So I don’t know who you asked, but they are not following the issue at a meaningful level … ”

That was the point.

No, because it’s virtually impossible for anybody who follows the issue to commonly read anything authoritative that indicates SLR is going to be 5 to 10 meters by 2100. Rahmstorf, who is commonly described as a screeching alarmist, does not say that. Jevrejeva does not say that. Mitrovica does not say that.

They may have read from an authoritative source that SLR will be 5 to 10 meters, or more, by ~2200 to 2400.

They may have read in right-wing sources that alarmist scientists screech that SLR will be 5 to 10 meters by 2100.

Richard Alley in his keynote at esrl.noaa.gov/gmd/annualconference/ compared the threat of sea level rise with Katrina.

Look out. It could happen any day now with little warning.

Thanks for making the case in point. Europe by 2100 could see 2.0 to 2.5 feet. That is a little less than 5 to 10 meters. During storms there could be severe flooding. Not controversial. If there were to be a large nonlinear collapse of an ice sheet, sea level would exceed current predictions. Not controversial.

In the world of regulatory implementation policy the headline is always we have to plan for the high values e.g. in NY City the headline was 2m of sea level rise. SoD is exactly right “actual reports with uneventful projections don’t generate headlines”. The point is that uneventful projections don’t drive current policy either.

I have been greatly impressed by this blog. You showed me something I never thought possible. That it was somehow possible for a person to be respected by both sides of the debate.

But this post is a bad one. Your skill is that you stick to the science and you avoid tipping your hand in any one direction. So no one is able to really disagree with you or think you are one of “them” and thereby just stop listening to you.

But lately you have begun strongly tipping your hand in the direction of the skeptics. I am a skeptic myself. But by doing so you compromise your authority and status. I think you would be better off if you stayed away from topics like this and just stuck purely to science. As I said, I was amazed when I encountered this blog. I don’t get why you would ruin it by writing articles like this where you basically indicate that skepticism is a reasonable position. It’s very obvious how this will be interpreted by anyone on the AGW side.

You have something really incredible and quite rare here. I don’t think you get how rare or how special.

I think SoD has the courage to not let others define him and what he is trying to accomplish. Of course you can make enemies just by being open-minded. Activists will say that by using the “snarl word” CAGW, he places himself in the wrong camp (http://rationalwiki.org/wiki/Global_warming). And at WUWT his blog is placed under the heading Pro AGW Views (together with some more dubious blogs) and not under Sceptical Views. This says more about their own black-or-white thinking. As he has said himself, SoD tries to show us true scepticism, and a true invitation to think freely about climate change.

So, assman, I think you are wrong when you say: “I don’t get why you would ruin it by writing articles like this where you basically indicate that skepticism is a reasonable position. It’s very obvious how this will be interpreted by anyone on the AGW side.” There are some reasonable people “on the AGW side” who are not misled by activism. At least I hope that many of them can think for themselves and not get stuck in an echo chamber.

assman,

Thanks for your kind comments, much appreciated. And nice moniker.

I’ve never tried to find some “median position” in the climate debate, or be popular. I don’t have any authority and status.

If people find this blog helpful in explaining climate that is wonderful. More importantly, I try to present ideas with evidence and arguments so they can be questioned and challenged.

Skepticism – in the classical sense – is always a reasonable position. Perhaps by “skepticism” you mean “choosing the side that doesn’t believe climate scientists”. Which seems to be often how it is used.

In a highly polarized world it’s hard to explain a viewpoint without being misunderstood.

In this article I’ve asked: isn’t it reasonable to question the certainty of future catastrophe, seeing as it is not based on the certainty of AGW (which is probably subscribed to by 99-100% of climate scientists)?

As I said at the start of this comment “I try to present ideas with evidence and arguments so they can be questioned and challenged.”

I hope that people will challenge this article with counter-arguments and evidence. That is, I look forward to people explaining that it is unreasonable to question whether climate change is the greatest threat to humanity.

“I’ve never tried to find some “median position” in the climate debate, or be popular. I don’t have any authority and status.”

I am not saying you did. But you did stick to numbers and facts and judgements that could be supported by evidence or numbers. You basically avoided “opinions” or rhetorical arguments. This post is essentially a rhetorical argument.

“Skepticism – in the classical sense – is always a reasonable position. Perhaps by “skepticism” you mean “choosing the side that doesn’t believe climate scientists”. Which seems to be often how it is used.”

Sure, I know that. As I said, I’m a skeptic. But there are tonnes of skeptic blogs. And to be honest, I have strongly questioned my approach to things based mostly on this blog. What I have begun to realize is that it’s pointless to engage in rhetorical discussions. It’s rare that anyone is persuaded. Facts and evidence, and what I would call “small” opinions (meaning opinions that don’t make very large or broad claims), are what work. “Large” opinions are dangerous.

“I hope that people will challenge this article with counter-arguments and evidence.”

That’s a great hope. But it won’t happen. What people will do is bucket you. You will enter the bucket of Skeptic for everyone in the AGW tribe. And once that happens they will dismiss you.

assman,

That is an assertion that you consistently fail to back up with evidence other than hand waving. Your post is the rhetorical argument.

“But lately you have begun strongly tipping your hand in the direction of the skeptics. “

You think? That’s been obvious for a long time. Plus his science is very poor and badly explained.

Thibeaux,

This blog is oriented towards backing up claims with evidence and arguments. Feel free to criticise, but with evidence. I look forward to your comments.

Your comment is extremely poor and not explained at all.

This comment illustrates beautifully what I am talking about. Your science is good and well explained, but Thibeaux’s tribal instincts have taken over and he has lost the ability to think clearly about this. I am not saying I am any different…we are both humans with programming designed to survive in tribes…not to reason thoughtfully and logically.

Assman: What makes a subject “scientific” and therefore allows the author to be respected by both sides for the quality of his evidence and the rationality of the discussion? What makes another subject non-scientific and subjects the author to charges of bias or politicization despite the same quality of evidence and rationality?

The problem is not that scholarship (which is what SOD practices) is invading the realm of politics and political advocacy. The problem is that politics and political advocacy have taken over the realm of scholarship and science, particularly at our universities. For those focused on climate science, this problem is best illustrated by a recent book by Alice Dreger, whose title is unacceptable to WordPress. Her field is the intersection of the history of science, medical ethics, and gender. Her story passionately illustrates the problems of politicization of scholarship. She notes that science and democracy grew up together in the Enlightenment – the idea that freedom of inquiry, ideas, and speech began with Galileo and gave birth to democracy. “It is no wonder that so many of America’s founders were science geeks”.

I am personally sick of being told that truth and justice depend solely on one’s position in society and that there are no facts that can guide us in today’s world of fake news. Politics is about delegitimizing your opponents, not addressing the merits of their arguments.

Abstract

Probabilistic sea-level projections have not yet integrated insights from physical ice-sheet models representing mechanisms, such as ice-shelf hydrofracturing and ice-cliff collapse, that can rapidly increase ice-sheet discharge. … Under high greenhouse gas emissions (Representative Concentration Pathway [RCP] 8.5), these physical processes increase median projected 21st century GMSL rise from ∼80 cm to ∼150 cm. Revised median RSL projections would, without protective measures, by 2100 submerge land currently home to > 79 million people, an increase of ∼25 million people. …

JCH: I glanced at your review article. Do any of these models hindcast changes that we should have observed in the last century or two? Or during the Holocene climate optimum, when the Arctic was warmer than today?

The scientific method involves testing hypotheses for how ice sheets should respond to warming – not merely projecting the consequences of hypotheses. Publicizing the projections of unvalidated hypotheses fits my definition of alarmism.

BS. An ice sheet is a structure. Structures are made of materials. Materials can suffer catastrophic failures. It would be abjectly irresponsible not to figure it out. On RC, a long time ago, I wondered why fracture mechanics was not being applied to the ice-sheet problem. It actually already had been. So this effort started a long time ago and now they’re starting to get results.

An ice sheet is a structure. Its CURRENT and PAST shapes are the result of mechanisms by which it “flows” towards the sea under the force of gravity. When we can explain at least some key aspects of what we see today and the survival of the GIS in the HCO, then we will have a validated theory.

For example, the GIS has a fairly pyramidal shape arising from gravitationally induced flow. In trivial engineering, a pile of sand has an angle of repose that depends on the properties of the sand. A slump test (the drop in height of a cone of wet concrete) is used to measure the properties of a batch of concrete. What angle of repose do these theories predict the GIS and WAIS should have today? Obviously they are predicting increasing warmth should produce a lower angle of repose in the coming century.

As a scientist, I’d like to know what observations are explained by these HYPOTHESES about the internal properties of ice sheets. What range of parameters is consistent with what we observe, and what constraints are placed by the HCO – two millennia that were warmer in summer than today?

JCH wrote: “On RC, a long time ago, I wondered why fracture mechanics was not being applied to the ice-sheet problem. It actually already had been. So this effort started a long time ago and now they’re starting to get results.”

In science, results are experimental tests of hypotheses that may or may not convert them into established theories. Extrapolation of untested hypotheses is prophecy.

JCH,

RCP 8.5 is wildly unrealistic. Basing late century ice shelf behavior on that scenario is science fiction, not science.

Thanks for this very good paper. I feel less alone….

A large part of the problem is depending on lumped global parameters to validate models. Patterns of change are much more interesting. GCMs used to be called general circulation models, and the fact that they get the circulation pretty well right is a strong hint that they are on the right path.

This comment might fit better in the previous article The Confirmation Bias – a Feature not a Bug, but I’ll put it here.

Many comments on this blog in various articles, and of course in other blogs, criticize James Hansen and paint him as someone who is an activist sounding the alarm and therefore his predictions – that are towards the dangerous end of the “distribution of possible outcomes” – are rendered worthless. Therefore, they can be dismissed.

Likewise I read comments, more on other blogs, that Nic Lewis believes climate sensitivity is low and clearly he is a motivated “skeptic” (see note), which is why he comes up with these low numbers. Therefore, they can be dismissed.

How about James Hansen is sounding the alarm and trying to get government action because of what he found (i.e., he sees that the danger is more real than others do).

How about Nic Lewis is presenting low climate sensitivity because that is what he found.

Assuming motives or blindness first is easily done. As explained in the linked earlier article, I don’t believe in the “rationalist delusion” but that doesn’t mean we can discard views of people based on their presumed motivations or apparently selective blindness.

In a complex field it’s possible that a range of hypotheses have sufficient support from the evidence.

It’s also possible in a complex field with lots at stake for humanity that some hypotheses with lots of adherents are completely unsupported by the evidence.

So as this blog started, that’s how it should continue – assumed motivations are irrelevant.

Instead, what is the evidence?

Note: using the moniker of “skeptic” as someone not accepting the climate consensus.

Here’s an example from The Economist on forecasting ability:

Of course, we have legislative changes here that impacted on the result.

When we consider food prices we will have legislative changes, plus crop yields, plus demand changes, plus supply changes from legislative changes, plus supply changes from changing climate, perceived future climate changes, perceived future demand..

I could go on.

DeWitt made an important point in an earlier comment considering catastrophic scenarios:

In an earlier article – Impacts – II – GHG Emissions Projections: SRES and RCP I went into some depth on the various scenarios. In a follow on article – Impacts – III – Population in 2100 I showed some data on population projections.

The world population 80 years from now is highly uncertain. Different experts provide different answers.

What is a little bizarre about the RCP8.5 scenario is that it combines enough economic growth to drive large industry in under-developed countries (mainly sub-Saharan Africa) with not enough economic growth to create the demographic transition or cleaner energy or better technology.

All kinds of future worlds are possibilities. But to use this scenario as the standard “conservative business as usual scenario” seems like a ..

Well, the correct phrase eludes me due to blog policy. Let’s say “strange”. Baffling, perhaps.

Another striking point when reviewing lots of papers is that it is common to find a comparison of only 3 scenarios:

– RCP2.6 – a massive decarbonization effort starting yesterday, completely unlikely, but obviously needed as a “look what happens if we do this” scenario

– RCP4.5 – a more possible future if most major emitters all decided to take strong action to decarbonize starting soon

– RCP8.5 – the world where everything combines in a perfect storm to create the highest concentration of CO2

Missing in action in many papers is RCP6. This is a world where CO2 about doubles (from 280ppm in pre-industrial times) to around 560ppm by 2100.

This RCP6 scenario should be described as:

and RCP8.5 should be described as:

And for that reason RCP6 should be the scenario to be compared with RCP4.5 and RCP2.6.