In Part Three we looked at attribution in the early work on this topic by Hegerl et al 1996. I started to write Part Four as the follow up on Attribution as explained in the 5th IPCC report (AR5), but got caught up in the many volumes of AR5.

And instead for this article I decided to focus on what might seem like an obscure point. I hope readers stay with me because it is important.

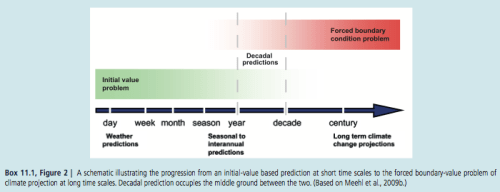

Here is a graphic from chapter 11 of IPCC AR5:

Figure 1

And in the introduction, chapter 1:

Climate in a narrow sense is usually defined as the average weather, or more rigorously, as the statistical description in terms of the mean and variability of relevant quantities over a period of time ranging from months to thousands or millions of years. The relevant quantities are most often surface variables such as temperature, precipitation and wind.

Classically the period for averaging these variables is 30 years, as defined by the World Meteorological Organization.

Climate in a wider sense also includes not just the mean conditions, but also the associated statistics (frequency, magnitude, persistence, trends, etc.), often combining parameters to describe phenomena such as droughts. Climate change refers to a change in the state of the climate that can be identified (e.g., by using statistical tests) by changes in the mean and/or the variability of its properties, and that persists for an extended period, typically decades or longer.

[Emphasis added].

Weather is an Initial Value Problem, Climate is a Boundary Value Problem

The idea is fundamental, the implementation is problematic.

As explained in Natural Variability and Chaos – Two – Lorenz 1963, there are two key points about a chaotic system:

- With even a minute uncertainty in the initial starting condition, the predictability of future states is very limited

- Over a long time period the statistics of the system are well-defined

(Being technical, the statistics are well-defined in a transitive system).

So in essence, we can’t predict the exact state of the future – from the current conditions – beyond a certain timescale which might be quite small. In fact, in current weather prediction this time period is about one week.

After a week we might as well say either “the weather on that day will be the same as now” or “the weather on that day will be the climatological average” – and either of these will be better than trying to predict the weather based on the initial state.

No one disagrees on this first point.

In current climate science and meteorology the term used is the skill of the forecast. Skill means, not how good is the forecast, but how much better is it than a naive approach like, “it’s July in New York City so the maximum air temperature today will be 28ºC”.
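To make the idea of skill concrete, here is a minimal sketch using one common definition, a mean-squared-error skill score measured against a naive reference forecast. The function names and the temperature values are invented for illustration and are not taken from any forecasting system.

```python
def mse(forecast, observed):
    """Mean squared error between a forecast and the observations."""
    return sum((f - o) ** 2 for f, o in zip(forecast, observed)) / len(observed)


def skill_score(forecast, reference, observed):
    """1 = perfect, 0 = no better than the reference, negative = worse."""
    return 1.0 - mse(forecast, observed) / mse(reference, observed)


# Hypothetical daily maximum temperatures (deg C) for five July days.
observed = [27.0, 30.5, 29.0, 26.5, 31.0]
climatology = [28.0] * 5                   # the naive "it's July" forecast
forecast = [27.5, 30.0, 28.5, 27.5, 30.0]  # a made-up model forecast

skill = skill_score(forecast, climatology, observed)
print(f"skill relative to climatology: {skill:.2f}")
```

With these invented numbers the score is about 0.86. A forecast that merely repeats climatology scores exactly zero, which is the sense in which skill measures improvement over the naive approach rather than accuracy in absolute terms.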

What happens in practice, as can be seen in the simple Lorenz system shown in Part Two, is a tiny uncertainty about the starting condition gets amplified. Two almost identical starting conditions will diverge rapidly – the “butterfly effect”. Eventually these two conditions are no more alike than one of the conditions and a time chosen at random from the future.

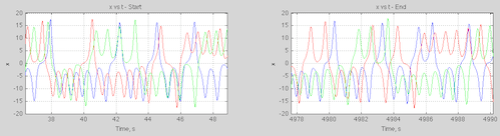

The wide divergence doesn’t mean that the future state can be anything. Here’s an example from the simple Lorenz system for three slightly different initial conditions:

Figure 2

We can see that the three conditions that looked identical for the first 20 seconds (see figure 2 in Part Two) have diverged. The values are bounded but at any given time we can’t predict what the value will be.
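The divergence and the boundedness can both be checked with a few lines of code. The sketch below assumes the standard Lorenz 1963 parameter values (sigma = 10, r = 28, b = 8/3), a hand-written fourth-order Runge-Kutta integrator, a time step of 0.01 and an initial difference of 1e-8 in x. These are my choices for illustration, not necessarily the settings behind the figure.

```python
def lorenz_rhs(state, sigma=10.0, r=28.0, b=8.0 / 3.0):
    """Right-hand side of the Lorenz 1963 equations."""
    x, y, z = state
    return (sigma * (y - x), x * (r - z) - y, x * y - b * z)


def rk4_step(state, dt):
    """Advance the state by one fourth-order Runge-Kutta step."""
    def nudge(s, k, h):
        return tuple(si + h * ki for si, ki in zip(s, k))

    k1 = lorenz_rhs(state)
    k2 = lorenz_rhs(nudge(state, k1, dt / 2))
    k3 = lorenz_rhs(nudge(state, k2, dt / 2))
    k4 = lorenz_rhs(nudge(state, k3, dt))
    return tuple(
        s + dt / 6 * (p + 2 * q + 2 * u + v)
        for s, p, q, u, v in zip(state, k1, k2, k3, k4)
    )


def x_series(initial, n_steps, dt):
    """Return x at every step, including the initial value."""
    state, xs = initial, [initial[0]]
    for _ in range(n_steps):
        state = rk4_step(state, dt)
        xs.append(state[0])
    return xs


STEPS_PER_UNIT = 100           # assumed resolution: dt = 0.01
DT = 1.0 / STEPS_PER_UNIT
N_STEPS = 40 * STEPS_PER_UNIT  # 40 time units

run_a = x_series((1.0, 1.0, 1.0), N_STEPS, DT)
run_b = x_series((1.0 + 1e-8, 1.0, 1.0), N_STEPS, DT)

gap = [abs(p - q) for p, q in zip(run_a, run_b)]
early_gap = max(gap[: 5 * STEPS_PER_UNIT])  # first 5 time units
late_gap = max(gap[30 * STEPS_PER_UNIT:])   # last 10 time units
largest_x = max(abs(p) for p in run_a)

print(f"largest gap in x, t < 5 : {early_gap:.2e}")
print(f"largest gap in x, t > 30: {late_gap:.2e}")
print(f"largest |x| in run A    : {largest_x:.1f}")
```

The early gap stays tiny, the late gap is comparable to the size of the attractor itself, and |x| never leaves a limited range.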

On the second point – the statistics of the system, there is a tiny hiccup.

But first let’s review what is agreed upon. Climate is the statistics of weather. Weather is unpredictable more than a week ahead. Climate, as the statistics of weather, might be predictable. That is, just because weather is unpredictable, it doesn’t mean (or prove) that climate is also unpredictable.

This is what we find with simple chaotic systems.

So in the endeavor of climate modeling the best we can hope for is a probabilistic forecast. We have to run “a lot” of simulations and review the statistics of the parameter we are trying to measure.

To give a concrete example, we might determine from model simulations that the sea surface temperature in the western Pacific (between a certain latitude and longitude) in July has a mean of 29ºC with a standard deviation of 0.5ºC, while for a certain part of the north Atlantic it is 6ºC with a standard deviation of 3ºC. In the first case the spread of results tells us – if we are confident in our predictions – that we know the western Pacific SST quite accurately, but the north Atlantic SST has a lot of uncertainty. We can’t do anything about the model spread. In the end, the statistics are knowable (in theory), but the actual value on a given day or month or year is not.

Now onto the hiccup.

With “simple” chaotic systems that we can perfectly model (note 1) we don’t know in advance the timescale of “predictable statistics”. We have to run lots of simulations over long time periods until the statistics converge on the same result. If we have parameter uncertainty (see Ensemble Forecasting) this means we also have to run simulations over the spread of parameters.
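As a rough illustration of what "until the statistics converge" means, the sketch below runs a small ensemble of the Lorenz 1963 system from nearly identical starting points and compares the mean of z over a short window with the mean over a long window. The ensemble size, the window lengths (2 and 200 time units), the spin-up and the integrator are all assumptions chosen for illustration. For the real climate the equivalent timescales are exactly what is unknown.

```python
def lorenz_step(state, dt, sigma=10.0, r=28.0, b=8.0 / 3.0):
    """Advance the Lorenz 1963 system by one fourth-order Runge-Kutta step."""
    def rhs(s):
        x, y, z = s
        return (sigma * (y - x), x * (r - z) - y, x * y - b * z)

    def nudge(s, k, h):
        return tuple(si + h * ki for si, ki in zip(s, k))

    k1 = rhs(state)
    k2 = rhs(nudge(state, k1, dt / 2))
    k3 = rhs(nudge(state, k2, dt / 2))
    k4 = rhs(nudge(state, k3, dt))
    return tuple(
        s + dt / 6 * (p + 2 * q + 2 * u + v)
        for s, p, q, u, v in zip(state, k1, k2, k3, k4)
    )


def z_series(initial, spinup_steps, n_steps, dt=0.01):
    """Discard a spin-up period, then record z at every step."""
    state = initial
    for _ in range(spinup_steps):
        state = lorenz_step(state, dt)
    zs = []
    for _ in range(n_steps):
        state = lorenz_step(state, dt)
        zs.append(state[2])
    return zs


def spread_of_means(series_list, n):
    """Range (max minus min) of the first-n-step means across the ensemble."""
    means = [sum(s[:n]) / n for s in series_list]
    return max(means) - min(means), means


# Six members whose initial x differs by one part in a million.
members = [
    z_series((1.0 + i * 1e-6, 1.0, 1.0), spinup_steps=4000, n_steps=20000)
    for i in range(6)
]

short_spread, short_means = spread_of_means(members, 200)   # 2 time units
long_spread, long_means = spread_of_means(members, 20000)   # 200 time units

print(f"spread of mean z over 2 time units  : {short_spread:.2f}")
print(f"spread of mean z over 200 time units: {long_spread:.2f}")
```

Over the short window each member gives a noticeably different "climate"; over the long window the members agree much more closely. The point is that the window length at which this agreement appears has to be found by experiment.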

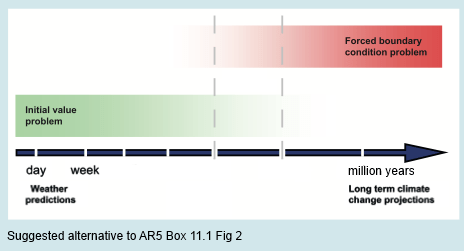

Here’s my suggested alternative of the initial value vs boundary value problem:

Figure 3

So one body made an ad hoc definition of climate as the 30-year average of weather.

If this definition is correct and accepted then “climate” is not a “boundary value problem” at all. Climate is an initial value problem and therefore a massive problem given our ability to forecast only one week ahead.

Suppose, equally reasonably, that the statistics of weather (=climate), given constant forcing (note 2), are predictable over a 10,000 year period.

In that case, with near perfect models, we can be confident about the averages, standard deviations, skews, etc of the temperature at various locations on the globe over a 10,000 year period.

Conclusion

The fact that chaotic systems exhibit certain behavior doesn’t mean that 30-year statistics of weather can be reliably predicted.

30-year statistics might be just as dependent on the initial state as the weather three weeks from today.

Articles in the Series

Natural Variability and Chaos – One – Introduction

Natural Variability and Chaos – Two – Lorenz 1963

Natural Variability and Chaos – Three – Attribution & Fingerprints

Natural Variability and Chaos – Four – The Thirty Year Myth

Natural Variability and Chaos – Five – Why Should Observations match Models?

Natural Variability and Chaos – Six – El Nino

Natural Variability and Chaos – Seven – Attribution & Fingerprints Or Shadows?

Natural Variability and Chaos – Eight – Abrupt Change

Notes

Note 1: The climate system is obviously imperfectly modeled by GCMs, and this will always be the case. The advantage of a simple model is we can state that the model is a perfect representation of the system – it is just a definition for convenience. It allows us to evaluate how slight changes in initial conditions or parameters affect our ability to predict the future.

The IPCC report also has continual reminders that the model is not reality, for example, chapter 11, p. 982:

For the remaining projections in this chapter the spread among the CMIP5 models is used as a simple, but crude, measure of uncertainty. The extent of agreement between the CMIP5 projections provides rough guidance about the likelihood of a particular outcome. But — as partly illustrated by the discussion above — it must be kept firmly in mind that the real world could fall outside of the range spanned by these particular models.

[Emphasis added].

Chapter 1, p.138:

Model spread is often used as a measure of climate response uncertainty, but such a measure is crude as it takes no account of factors such as model quality (Chapter 9) or model independence (e.g., Masson and Knutti, 2011; Pennell and Reichler, 2011), and not all variables of interest are adequately simulated by global climate models..

..Climate varies naturally on nearly all time and space scales, and quantifying precisely the nature of this variability is challenging, and is characterized by considerable uncertainty.

I haven’t yet been able to determine how these firmly noted and challenging uncertainties have been factored into the quantification of 95-100%, 99-100%, etc, in the various chapters of the IPCC report.

Note 2: There are some complications with defining exactly what system is under review. For example, do we take the current solar output, current obliquity, precession and eccentricity as fixed? If so, then any statistics will be calculated for a condition that will anyway be changing. Alternatively, we can take these values as changing inputs in so far as we know the changes – which is true for obliquity, precession and eccentricity but not for solar output.

The details don’t really alter the main point of this article.

On your suggested graph are some of the year marks missing as we aren’t sure what they are?

Ragnaar,

The marks in the original graphic relate to a specific question which is examined in Chapter 11 – about the possibility of decadal predictions and how initial values can assist this.

Once we consider the possibility that maybe “climate” – as long term statistics – might not be 10-100 years but instead 10,000 or 1,000,000 years averages, then this opportunity no longer exists.

I could have removed the marks, but I wasn’t so interested in the minutiae here.

I think there are many reasons why most of the GCMs may not faithfully predict details of climate over intervals less than 100 years, but I also believe I understand them well enough to say Lorenz style chaotic behavior isn’t one of them. They have imperfections because the need for computational speed means some computations are in fact elaborate interpolations on tables of empirical values.

These models do not exhibit chaotic behavior when tested, rightly or wrongly.

I also think, saying with respect, that the figure of merit here may be a bit off. The key physical attributes they need to capture are GLOBAL and AVERAGE distributions of energy among subsystems, not, for an extreme instance, the number and size of cyclones that there’ll be in any particular year in, say, the north Atlantic. This is like the difference between considering a strictly Newtonian view of a mechanical system and contrasting it with a Hamiltonian description, in fact, *the* Hamiltonian.

There are plenty of simpler models which are physically based and have predictive skill at their level of resolution which don’t rely upon dynamical evolution of states. I refer the readership to those in Ray Pierrehumbert’s POPC for examples, as well as his computer codes. These are far more useful than Lorenz philosophy.

Your suggested graph contains fewer year marks. Would you say the IPCC’s graph overstated what is known about when the initial value/boundary value transition occurs?

‘The global coupled atmosphere–ocean–land–cryosphere system exhibits a wide range of physical and dynamical phenomena with associated physical, biological, and chemical feedbacks that collectively result in a continuum of temporal and spatial variability. The traditional boundaries between weather and climate are, therefore, somewhat artificial. The large-scale climate, for instance, determines the environment for microscale (1 km or less) and mesoscale (from several kilometers to several hundred kilometers) processes that govern weather and local climate, and these small-scale processes likely have significant impacts on the evolution of the large-scale circulation.’

James Hurrell, Gerald A. Meehl, David Bader, Thomas L. Delworth, Ben Kirtman, and Bruce Wielicki: A UNIFIED MODELING APPROACH TO CLIMATE SYSTEM PREDICTION, BAMS December 2009 | 1819: DOI: 10.1175/2009BAMS2752.1

At the scale of days weather is most obviously chaotic. At the scale of months to years we have this interim state that has been described as macro-weather. Beyond that are multi-decadal regimes with breakpoints identified at around 1912, the mid 1940’s, 1976/1977 and 1998/2002. These are synchronised changes in the Earth system seen in ocean and atmosphere indices and in the trajectory of surface temperatures.

Statistically a non-stationary system with changes in climate means and variance with a multi-decadal beat.

In the words of Michael Ghil (2013) the ‘global climate system is composed of a number of subsystems – atmosphere, biosphere, cryosphere, hydrosphere and lithosphere – each of which has distinct characteristic times, from days and weeks to centuries and millennia. Each subsystem, moreover, has its own internal variability, all other things being constant, over a fairly broad range of time scales. These ranges overlap between one subsystem and another. The interactions between the subsystems thus give rise to climate variability on all time scales.’

The theory suggests that the system is pushed by greenhouse gas changes and warming – as well as solar intensity and Earth orbital eccentricities – past a threshold at which stage the components start to interact chaotically in multiple and changing negative and positive feedbacks – as tremendous energies cascade through powerful subsystems. Some of these changes have a regularity within broad limits and the planet responds with a broad regularity in changes of ice, cloud, Atlantic thermohaline circulation and ocean and atmospheric circulation.

Dynamic climate sensitivity implies the potential for a small push to initiate a large shift. Climate in this theory of abrupt change is an emergent property of the shift in global energies as the system settles down into a new climate state. The traditional definition of climate sensitivity as a temperature response to changes in CO2 makes sense only in periods between climate shifts – as climate changes at shifts are internally generated. Climate evolution is discontinuous at the scale of decades and longer.

In the way of true science – it suggests at least decadal predictability. The current cool Pacific Ocean state seems more likely than not to persist for 20 to 40 years from 2002. The flip side is that – beyond the next few decades – the evolution of the global mean surface temperature may hold surprises on both the warm and cold ends of the spectrum (Swanson and Tsonis, 2009).

http://watertechbyrie.com/2014/06/23/the-unstable-math-of-michael-ghils-climate-sensitivity/

Models don’t exhibit chaotic behaviour?

Click to access 4751.full.pdf

http://www.pnas.org/content/104/21/8709.full

Can’t access the Royal Soc article, but where in PNAS do they talk about chaotic behavior? I also don’t like the open ended and somewhat sloppy term “chaos”. I expect the deviation to be described in terms of some Lyapunov-like bound. That is, the norm of the M in

|y(t+d)-y(t)| = exp[M |x(t+d)-x(t)|]

You are obviously on your phone. Here’s the Royal Society article again – http://rsta.royalsocietypublishing.org/content/roypta/369/1956/4751.full

‘Lorenz was able to show that even for a simple set of nonlinear equations (1.1), the evolution of the solution could be changed by minute perturbations to the initial conditions, in other words, beyond a certain forecast lead time, there is no longer a single, deterministic solution and hence all forecasts must be treated as probabilistic. The fractionally dimensioned space occupied by the trajectories of the solutions of these nonlinear equations became known as the Lorenz attractor (figure 1), which suggests that nonlinear systems, such as the atmosphere, may exhibit regime-like structures that are, although fully deterministic, subject to abrupt and seemingly random change.’

What you expect and what you get may be two entirely different things. Are you really insisting that climate models are not chaotic?

(Apologies. Just catching up with what turned out to be a popular thread. And, yes, I was writing from my tablet, but unlike other Web sites, for some reason SoD kept wanting to return to the top of the Web page so I was typing blind.)

This response pertains to the comment “What you expect and what you get may be two entirely different things. Are you really insisting that climate models are not chaotic?”

There are three things.

First of all, as I believe I mentioned in another post last night, I believe, in dynamics, “chaos” is reserved for those situations where there is NO predictability at all. To use the Lyapunov setup I mentioned, actually, chaos would correspond to a situation where

|y(t+d) – y(t)| = exp[exp[exp[ … exp[|M(x(t+d) – x(t))|] …]]]

meaning that the change in output is arbitrarily sensitive to changes in input for a system y(t) = F(x(t)), and assuming non-trivial M. If there’s a different terminology for “chaos” used here, my pardons. If so, I’ll assume that “chaos” here is the lower order version I cited, that

|y(t+d) – y(t)| = exp[|M(x(t+d) – x(t))|]

meaning that the difference in output at different times is very sensitive to changes in difference of states, but not explosively so. In other words, predictability persists for a time, and then decays.

Second, there’s a distinction between the climate system in Nature, and climate models, which are descriptions of the former. One or both can exhibit “chaotic properties”. Whether Nature does or not is open to question here. As indicated, there are various coupled systems, each operating on different time scales, and even if it’s posited that one or more of these is chaotic, their different time scales and coupling suggest the behavior could be integrated away. Whether climate models do or not is a different question. Seen as a piece of numerical software, there are senses in which that software might exhibit “chaotic behavior”. But, then again, it may not. It depends upon the software.

Third, whether or not “climate models” or even “climate” are chaotic depends upon what’s precisely meant. A “climate observable” to me means a physical measurable or inferred parameter which is obtained by integrating over (averaging over, if you will) all “reasonable” initial states of the climate system for a period of evolution (or, if you will, of interest). If climate models are uncertain, it’s also sensible to talk about averaging over an indexed set of climate models to obtain an estimate of the measurable or parameter, although with uncertainty … In my preferred terms a posterior marginal density for the parameter.

Models have at their core a set of non-linear equations. Much as Lorenz’s convection model did. From there it is a modest step to the idea of sensitive dependence – perfectly deterministic but seemingly random in the words of Julia Slingo and Tim Palmer.

Or indeed James McWilliams.

‘Sensitive dependence and structural instability are humbling twin properties for chaotic dynamical systems, indicating limits about which kinds of questions are theoretically answerable. They echo other famous limitations on scientist’s expectations, namely the undecidability of some propositions within axiomatic mathematical systems (Gödel’s theorem) and the uncomputability of some algorithms due to excessive size of the calculation.’

http://www.pnas.org/content/104/21/8709.full

So these models are chaotic in the sense of complexity theory – and unless we get beyond mere definitional issues to the widespread understanding that it is so – then there is nothing left to say.

Climate is chaotic – because it shifts abruptly. ‘What defines a climate change as abrupt? Technically, an abrupt climate change occurs when the climate system is forced to cross some threshold, triggering a transition to a new state at a rate determined by the climate system itself and faster than the cause. Chaotic processes in the climate system may allow the cause of such an abrupt climate change to be undetectably small.’ http://www.nap.edu/openbook.php?record_id=10136&page=14

Getting to an idea of what that means for the real system – is the problem.

‘Prediction of weather and climate are necessarily uncertain: our observations of weather and climate are uncertain, the models into which we assimilate this data and predict the future are uncertain, and external effects such as volcanoes and anthropogenic greenhouse emissions are also uncertain. Fundamentally, therefore, we should think of weather and climate predictions in terms of equations whose basic prognostic variables are probability densities ρ(X,t) where X denotes some climatic variable and t denotes time. In this way, ρ(X,t)dV represents the probability that, at time t, the true value of X lies in some small volume dV of state space.’ (Predicting Weather and Climate – Palmer and Hagedorn eds – 2006)

Fundamentally – a probability density function of a family of solutions of a systematically perturbed model – rather than an ensemble of opportunity.

‘In each of these model–ensemble comparison studies, there are important but difficult questions: How well selected are the models for their plausibility? How much of the ensemble spread is reducible by further model improvements? How well can the spread can be explained by analysis of model differences? How much is irreducible imprecision in an AOS?

Simplistically, despite the opportunistic assemblage of the various AOS model ensembles, we can view the spreads in their results as upper bounds on their irreducible imprecision. Optimistically, we might think this upper bound is a substantial overestimate because AOS models are evolving and improving. Pessimistically, we can worry that the ensembles contain insufficient samples of possible plausible models, so the spreads may underestimate the true level of irreducible imprecision (cf., ref. 23). Realistically, we do not yet know how to make this assessment with confidence.’ http://www.pnas.org/content/104/21/8709.full

Whoa. That a model’s state at any particular time step is sensitively dependent upon initial conditions does not imply that a large-scale average of many many time steps’ worth of state (e.g., rolling 5-year GAT) is also sensitively dependent. That has yet to be shown.

I very much agree with you. I was just trotting out standard stuff about dynamical systems and chaos, not saying they applied to climate. I gave my doubts they do.

> So one body made an ad hoc definition of climate as the 30-year average of weather.

I don’t agree that it’s one body or particularly ad hoc, but I don’t think that’s your point, which appears to be:

> If this definition is correct and accepted then “climate” is not a “boundary value problem” at all. Climate is an initial value problem

I’m missing your leap of logic there. I could see that it is *possible* that in 30 year averages you could still be in the “weather” regime, and I’m sure you could construct systems in which that was true. But I don’t see where or how you’ve demonstrated the logical necessity of “climate is an IVP” following from “climate is 30y avg of weather”.

‘Technically, an abrupt climate change occurs when the climate system is forced to cross some threshold, triggering a transition to a new state at a rate determined by the climate system itself and faster than the cause. Chaotic processes in the climate system may allow the cause of such an abrupt climate change to be undetectably small…

Modern climate records include abrupt changes that are smaller and briefer than in paleoclimate records but show that abrupt climate change is not restricted to the distant past.’ (NAS, 2002)

You have to understand what is meant by an initial value problem in climate. A control variable pushes the system past a threshold at which stage internal processes interact to produce a different – emergent – climate state.

The resultant climate shift can be negative or positive – small or extreme. They happen every few decades – 20 to 40 years in the long proxy records.

I didn’t think there was ironclad evidence for big bifurcations in climate. In fact, I thought that, as of 2009 anyway, it was a serious computational challenge. See Simonnet, Dijkstra, Ghil, http://dspace.library.uu.nl/handle/1874/43777. Also thought that the mere existence of multiple equilibria in climate had not been established except for idealized versions, like aquaplanet (“Climate Determinism Revisited: Multiple Equilibria in a Complex Climate Model”), Ferreira, Marshall, Rose, http://journals.ametsoc.org/doi/abs/10.1175/2010JCLI3580.1. Equilibria need to exist to have bifurcations, even if they are unstable. If by “bifurcations” is meant transitions between states which are not significantly separated, then I wonder if they deserve the term “bifurcation”.

BTW, on the matter of climate bifurcations, I put to Professor Marshall something I read here, probably in a discussion of bifurcations in a comment thread pertaining to initiation of ice ages, that evidence was state transitions happened slowly if there were multiple equilibria. I am, of course, quoting him and could have gotten something wrong, but my understanding of his response was that if multiple equilibria existed, there was no particular physical reason to believe that transitions between them would necessarily take a long time.

‘Technically, an abrupt climate change occurs when the climate system is forced to cross some threshold, triggering a transition to a new state at a rate determined by the climate system itself and faster than the cause. Chaotic processes in the climate system may allow the cause of such an abrupt climate change to be undetectably small…

Modern climate records include abrupt changes that are smaller and briefer than in paleoclimate records but show that abrupt climate change is not restricted to the distant past.’ (NAS, 2002)

I quote this again. Abrupt change in the climate system – evident everywhere and at all scales – is what is explained by complexity theory. Really it is just the result of the interactions of system components.

The theory suggests that the system is pushed by greenhouse gas changes and warming – as well as solar intensity and Earth orbital eccentricities – past a threshold at which stage the components start to interact chaotically in multiple and changing negative and positive feedbacks – as tremendous energies cascade through powerful subsystems. Some of these changes have a regularity within broad limits and the planet responds with a broad regularity in changes of ice, cloud, Atlantic thermohaline circulation and ocean and atmospheric circulation.

“The winds change the ocean currents which in turn affect the climate. In our study, we were able to identify and realistically reproduce the key processes for the two abrupt climate shifts,” says Prof. Latif. “We have taken a major step forward in terms of short-term climate forecasting, especially with regard to the development of global warming. However, we are still miles away from any reliable answers to the question whether the coming winter in Germany will be rather warm or cold.” Prof. Latif cautions against too much optimism regarding short-term regional climate predictions: “Since the reliability of those predictions is still at about 50%, you might as well flip a coin.” http://www.sciencedaily.com/releases/2013/08/130822105042.htm

Realistically – you may as well flip a coin. But it is conceptually a better description of reality.

William,

It is my point.

A “typical simple” chaotic system has a timescale over which the statistics are repeatable.

I’m making the reasonable claim that for our climate this timescale is not 30 years. If someone wants to demonstrate that it is, I look forward to the evidence.

The “classical case” that climate is the 30 year statistics of weather was not concluded on the basis of some discovery on the nature of climate as a chaotic system.

Until you reach the timescale for repeatable statistics you are in a zone where small differences in initial values result in divergences that are as significant as the differences between two randomly selected states.

That is, unpredictable.

Let me be clear that I can’t prove that the repeatable statistics of weather are reached over 10,000 year periods either. It might be 300,000 years or 10,000,000 years.

Grant you, and as I suggested elsewhere, “disaster in Canberra” might be unpredictable, but, as I also suggested elsewhere, the World Line of Earth is only one of many possible statistical realizations from Now. And I think the domain and claim of models is that while it may be very difficult/impossible to pick out which World Line will be followed, the properties of the global state at some future time are far more stable, and decent confidence bounds can be given. It’s not like there’s a possibility of Runaway Greenhouse or Snowball Earth in the range of likelihoods, which seems to be the implication of this argument.

Sorry, I don’t buy categorical “unpredictability”. I buy a hierarchy of probable outcomes.

Another possibility is that the “attractor” for weather statistics is, from our point of view, quite limited in range. If that is the case, then even though we don’t know the result on a given day/month/year/decade – we would know that the results were confined to a small set of values.

Again, that idea would need to be demonstrated.

The point of this article is to show the divide between an arbitrary set of statistics and the long term statistics of a chaotic system.

It’s not science to conflate the two without evidence.

If we argue that the climate system is chaotic in some sense(s), we must then describe the region(s) over which chaos significantly affects the climate’s state. Where are the boundaries? Clearly there are some established by basic thermodynamics: e.g., GAT will not reach 400K under current forcings. The clear responses to diurnal and annual forcings further shrink the possible chaotic region(s), as do (to a lesser extent) analyses of the response to volcanic forcing (e.g., Pinatubo’s ~2.5W/m^2 SW forcing over ~2 y causing a ~0.25C drop in GAT over that period).

So where are the boundaries? What is the hypothesis of chaotic climate, and how can we test its predictions?

Meow,

It’s perhaps a little different from the way you are looking at the problem.

Let’s take a typical (dissipative) chaotic system, like the well-known Lorenz 1963 system, as an example.

There are three variables: x = intensity of convection, y = temperature difference between ascending and descending currents, z = deviation of temperature from a linear profile.

Here is how x varies at certain periods, the colors relate to a few very slightly different initial conditions (this figure was shown in the article):

The key points are that:

1. there are boundaries – we can see that x is constrained to certain values regardless of where we are in the timeline

2. these boundaries of x are defined by the equations of the system – and, therefore, the parameters in the equations

3. the long term statistics (of which the “boundaries” are just one statistic) are reliable

4. predicting the actual value of x at any given time is impossible

It’s not a case of which bit is due to the “forcing” and which bit is due to the “chaos”.

The statistics of x,y,z will be moved around by changing the parameters (or the form of the equation). The values of x,y,z at a specific time will be unknown. The statistics of x,y,z will be known.

So for example (given a model which perfectly reproduces the physics), given the parameters of the equation we can state at any given time the probability of x being in any given range.

If the “forcing” changes, this will change the statistics. The future value at a specific time will still be unknowable. The future statistics will be knowable.

In the case of the Lorenz system we can change the forcing by changing the parameter r. The statistics change.

Of course, in this simple system reducing r to a certain value takes us out of the chaotic region.

Increasing r to a certain value takes us out of the chaotic region.
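As a rough illustration of how r moves the statistics, here is a sketch that runs the same system at three values of r. The values 10, 28 and 35 are my own picks for illustration (with sigma = 10 and b = 8/3 assumed), and the sketch only covers the low-r exit from the chaotic region, not the high-r one.

```python
import math
import statistics

def rhs(x, y, z, r, sigma=10.0, b=8.0 / 3.0):
    """Lorenz 1963 equations for a given r."""
    return sigma * (y - x), x * (r - z) - y, x * y - b * z

def rk4_step(x, y, z, dt, r):
    """One classical fourth-order Runge-Kutta step."""
    a1, b1, c1 = rhs(x, y, z, r)
    a2, b2, c2 = rhs(x + 0.5 * dt * a1, y + 0.5 * dt * b1, z + 0.5 * dt * c1, r)
    a3, b3, c3 = rhs(x + 0.5 * dt * a2, y + 0.5 * dt * b2, z + 0.5 * dt * c2, r)
    a4, b4, c4 = rhs(x + dt * a3, y + dt * b3, z + dt * c3, r)
    return (x + dt / 6.0 * (a1 + 2 * a2 + 2 * a3 + a4),
            y + dt / 6.0 * (b1 + 2 * b2 + 2 * b3 + b4),
            z + dt / 6.0 * (c1 + 2 * c2 + 2 * c3 + c4))

def long_run_stats(r, n_steps=10000, spinup=5000, dt=0.01):
    """Return (mean x, std x, mean z) over the second half of a run."""
    state, xs, zs = (1.0, 1.0, 1.0), [], []
    for step in range(n_steps):
        state = rk4_step(*state, dt, r)
        if step >= spinup:
            xs.append(state[0])
            zs.append(state[2])
    return statistics.fmean(xs), statistics.pstdev(xs), statistics.fmean(zs)

stats = {r: long_run_stats(float(r)) for r in (10, 28, 35)}
for r, (mean_x, std_x, mean_z) in stats.items():
    print(f"r={r}: mean x={mean_x:.3f}  std x={std_x:.3f}  mean z={mean_z:.3f}")

# For 1 < r < about 24.7 (with these sigma and b) the run settles on a fixed
# point with |x| = sqrt(b * (r - 1)), so x has no spread left at r = 10.
print("fixed-point |x| at r=10:", math.sqrt(8.0 / 3.0 * 9.0))
```

At r = 10 the spread in x should collapse to essentially zero, while moving from r = 28 to r = 35 keeps the system chaotic but shifts the long-run mean of z.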

It isn’t about saying which bit is due to chaos. Chaos never means unbounded possibility.

Chaos means that a given value can be anywhere on the “attractor”, but nowhere else. (More precisely, it can start somewhere else but will always end up on the attractor).

The attractor is just a name for the “set of possible values for the parameter”.

Change the “forcing” – or a parameter – and you change the attractor.

Thank you for the explanation. Perhaps my question should be restated as: how does the hypothesis of chaotic climate account for the effects of known forcings (e.g., annual)? Or: how large must a forcing be for us to enter the realm where we reasonably can predict the resulting change in GAT or ocean heat content? (I don’t, of course, mean by this “predict an exact value on a given day”, but rather something like “predict a yearly or multi-yearly average with reasonable confidence”).

Meow,

I’m not sure I understand the question.

I don’t know.

Simple chaotic systems have boundaries so you know that certain values will be within certain limits. Under one time period you have no ability to predict the statistics, only that the value will be inside this range. Over a longer time period you have ability to predict the statistics – mean, standard deviation, etc.
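To make the "one time period" versus "longer time period" distinction concrete, here is a sketch that chops one long Lorenz run into short and long windows and compares how much the window averages of x move around. The window lengths (1 and 100 model time units) and the parameter values are assumptions chosen for illustration; nothing here says what the equivalent lengths would be for the climate.

```python
import statistics

def rhs(x, y, z, r=28.0, sigma=10.0, b=8.0 / 3.0):
    """Lorenz 1963 equations: returns (dx/dt, dy/dt, dz/dt)."""
    return sigma * (y - x), x * (r - z) - y, x * y - b * z

def rk4_step(x, y, z, dt):
    """One classical fourth-order Runge-Kutta step."""
    a1, b1, c1 = rhs(x, y, z)
    a2, b2, c2 = rhs(x + 0.5 * dt * a1, y + 0.5 * dt * b1, z + 0.5 * dt * c1)
    a3, b3, c3 = rhs(x + 0.5 * dt * a2, y + 0.5 * dt * b2, z + 0.5 * dt * c2)
    a4, b4, c4 = rhs(x + dt * a3, y + dt * b3, z + dt * c3)
    return (x + dt / 6.0 * (a1 + 2 * a2 + 2 * a3 + a4),
            y + dt / 6.0 * (b1 + 2 * b2 + 2 * b3 + b4),
            z + dt / 6.0 * (c1 + 2 * c2 + 2 * c3 + c4))

def window_means(values, width):
    """Mean of each consecutive, non-overlapping window of `width` samples."""
    return [statistics.fmean(values[i:i + width])
            for i in range(0, len(values) - width + 1, width)]

dt, spinup, n_keep = 0.01, 1000, 100000
state, xs = (1.0, 1.0, 1.0), []
for step in range(spinup + n_keep):
    state = rk4_step(*state, dt)
    if step >= spinup:
        xs.append(state[0])

short_means = window_means(xs, 100)     # windows of 1 time unit
long_means = window_means(xs, 10000)    # windows of 100 time units
spread_short = statistics.pstdev(short_means)
spread_long = statistics.pstdev(long_means)
largest_x = max(abs(v) for v in xs)

print("largest |x| seen:            ", largest_x)
print("spread of short-window means:", spread_short)
print("spread of long-window means: ", spread_long)
```

The short-window means wander over a good part of the allowed range, so all you can say about them is that they stay inside the bounds. The long-window means cluster much more tightly.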

There is no a priori reason to expect that this “longer time period” is “multi-year” (where I assume from the way you write “multi-year” you mean like 10 years or something, not 100,000 years).

There’s a further complication that I will spend more time on in a subsequent article – in brief, because of the complexity of the climate system the boundary of entire climate states is probably exceptionally large, but in particular periods the boundary of entire climate states will be a lot smaller.

But clearly the length of the time period over which some climate statistics become reasonably predictable is inversely correlated to the integrated magnitude of a postulated forcing. If TSI were to fall by 50% and stay there, all of us would predict a sudden, large drop in GAT.

What I’m trying to do is to understand the shape of the forcing magnitude/predictability horizon curve, and, more broadly, the testable predictions of the hypothesis of chaotic climate. I must admit to being skeptical that the characteristics of the Lorenz model have much to do with large-scale climate statistics like GAT or OHC.

There has been much interesting research on climate predictability, which I hope you’ll get into. A few papers that stand out are Shukla 1998, “Predictability in the Midst of Chaos: A Scientific Basis for Climate Forecasting”, http://w.monsoondata.org/people/Shukla%27s%20Articles/1998/Predictability.pdf , and Goddard et al 2001, “Current Approaches to Seasonal-to-Interannual Climate Predictions”, http://onlinelibrary.wiley.com/doi/10.1002/joc.636/abstract .

BTW, thank you for publishing this site. It is an excellent resource.

If “the repeatable statistics of weather are reached” over a longer-than-150-year period, there is no practical application for climate models as yet.

‘We construct a network of observed climate indices in the period 1900–2000 and investigate their collective behavior. The results indicate that this network synchronized several times in this period. We find that in those cases where the synchronous state was followed by a steady increase in the coupling strength between the indices, the synchronous state was destroyed, after which a new climate state emerged. These shifts are associated with significant changes in global temperature trend and in ENSO variability. The latest such event is known as the great climate shift of the 1970s. We also find the evidence for such type of behavior in two climate simulations using a state-of-the-art model. This is the first time that this mechanism, which appears consistent with the theory of synchronized chaos, is discovered in a physical system of the size and complexity of the climate system.’ http://onlinelibrary.wiley.com/doi/10.1029/2007GL030288/full

Climate chaos is ergodic over long periods – perhaps. What matters more is the statistically non-stationary abrupt shifts in climate states at decadal to millennial scales of variability. It is a new way of thinking about climate as a system rather than as disparate parts. As Marcia Wyatt said – climate ‘is ultimately complex. Complexity begs for reductionism. With reductionism, a puzzle is studied by way of its pieces. While this approach illuminates the climate system’s components, climate’s full picture remains elusive. Understanding the pieces does not ensure understanding the collection of pieces.’ Understanding climate begins by viewing it through the lens of complexity theory.

The US National Academy of Sciences (NAS) defined abrupt climate change as a new climate paradigm as long ago as 2002. A paradigm in the scientific sense is a theory that explains observations. A new science paradigm is one that better explains data – in this case climate data – than the old theory. The new theory says that climate change occurs as discrete jumps in the system. Climate is more like a kaleidoscope – shake it up and a new pattern emerges – than a control knob with a linear gain.

The theory of abrupt climate change is the most modern – and powerful – in climate science and has profound implications for the evolution of climate this century and beyond. A mechanical analogy might set the scene. The finger pushing the balance below can be likened to changes in greenhouse gases, solar intensity or orbital eccentricity. The climate response is internally generated – with changes in cloud, ice, dust and biology – and proceeds at a pace determined by the system itself. Thus the balance below is pushed past a point at which stage a new equilibrium spontaneously emerges. Unlike the simple system below – climate has many equilibria. The old theory of climate suggests that warming is inevitable. The new theory suggests that global warming is not guaranteed and that climate surprises are inevitable.

Many simple systems exhibit abrupt change. The balance above consists of a curved track on a fulcrum. The arms are curved so that there are two stable states where a ball may rest. ‘A ball is placed on the track and is free to roll until it reaches its point of rest. This system has three equilibria denoted (a), (b) and (c) in the top row of the figure. The middle equilibrium (b) is unstable: if the ball is displaced ever so slightly to one side or another, the displacement will accelerate until the system is in a state far from its original position. In contrast, if the ball in state (a) or (c) is displaced, the balance will merely rock a bit back and forth, and the ball will roll slightly within its cup until friction restores it to its original equilibrium.’(NAS, 2002)

In (a1) the arms are displaced but not sufficiently to cause the ball to cross the balance to the other side. In (a2) the balance is displaced with sufficient force to cause the ball to move to a new equilibrium state on the other arm. There is a third possibility in that the balance is hit with enough force to cause the ball to leave the track, roll off the table and under the sofa.
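The behaviour of the ball can be mimicked with a toy model. This is not the NAS balance itself, just a sketch assuming an overdamped ball in the double-well potential V(x) = x^4/4 - x^2/2, which has stable rest points at x = -1 and x = +1 (standing in for (a) and (c)) and an unstable one at x = 0 (standing in for (b)). The third possibility, the ball leaving the track altogether, is not represented.

```python
def settle(x0, dt=0.01, n_steps=3000):
    """Follow dx/dt = -dV/dx = x - x**3 from x0 and return the resting position."""
    x = x0
    for _ in range(n_steps):
        x += dt * (x - x ** 3)   # simple forward Euler step
    return x

start = -1.0                      # ball resting in the left-hand cup
small_push = settle(start + 0.4)  # displaced, but still left of the middle
big_push = settle(start + 1.1)    # pushed just past the unstable middle point
balanced = settle(0.0)            # placed exactly on the unstable equilibrium

print("small push ends at:", small_push)
print("big push ends at:  ", big_push)
print("balanced stays at: ", balanced)
```

The size of the final change bears no proportion to the size of the push: anything short of the threshold returns to the old state, and anything past it ends in the other state.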

“Climate chaos is ergodic over long periods”. What the heck does *that* mean?

First, I continue to ask for a mathematical definition of “climate chaos”. As far as I know, none has been provided.

Second, generally speaking, “ergodic” means, among other things, that every state in the system under consideration will in time be visited. No one has demonstrated anything of the kind for the Earth’s climate, Mars’ climate, Venus’ climate, climate models, or anything else. Why should it be plausible for this magical “climate chaos”?

The full sentence was – climate chaos is ergodic over long periods – perhaps. It was an ironic restatement of the premise of the post – and getting all uppity about it is quite tedious. Not quoting the entire sentence smacks of bad faith.

Ergodic in this sense is as you say – and there is no ergodic theory of spatio-temporal chaotic systems. Hence the qualifier – perhaps.

You may ‘continually ask’ – but what you ask for is not necessarily what you will get – as I said. As there are no concise governing equations for the climate system – a mathematical treatment of the evolution of those equations – a la Poincare’s three body problem or Lorenz’s convection model – may be a trifle unrealistic.

Have I quoted Julia Slingo and Tim Palmer yet?

‘Lorenz was able to show that even for a simple set of nonlinear equations (1.1), the evolution of the solution could be changed by minute perturbations to the initial conditions, in other words, beyond a certain forecast lead time, there is no longer a single, deterministic solution and hence all forecasts must be treated as probabilistic. The fractionally dimensioned space occupied by the trajectories of the solutions of these nonlinear equations became known as the Lorenz attractor (figure 1), which suggests that nonlinear systems, such as the atmosphere, may exhibit regime-like structures that are, although fully deterministic, subject to abrupt and seemingly random change.’

http://rsta.royalsocietypublishing.org/content/roypta/369/1956/4751.full

Abrupt and seemingly random seem to be the crux of the magic.

It may be the “crux of the magic”, but, as I’ve contended, it’s horrible science, unfalsifiable. Chaos theory applied to climate is like string theory applied to the universe.

Sure it’s falsifiable. Produce a numerical weather model that will forecast the local weather with good skill two months in advance. Then I’ll believe that weather isn’t chaotic. Chaos theory tells you what questions it’s pointless to ask.

That’s not a falsification of the Chaos hypothesis, because the Chaos hypothesis is not making any predictions. To the degree it doesn’t, it can’t be used as a guide for doing science.

But, to your point, would it also be falsified if sea level in 400 years were at least 65 feet above present day?

It’s obviously the same prediction you get from ‘cycles’ – but the mechanism is dynamical complexity.

But the comment about skill put me in mind of another footnote from James McWilliams.

‘Sensitive dependence and structural instability are humbling twin properties for chaotic dynamical systems, indicating limits about which kinds of questions are theoretically answerable. They echo other famous limitations on scientist’s expectations, namely the undecidability of some propositions within axiomatic mathematical systems (Gödel’s theorem) and the uncomputability of some algorithms due to excessive size of the calculation.’

Let me expand a little – and then I think I will give it a rest. I have quoted Michael Ghil – but will repeat for convenience. The ‘global climate system is composed of a number of subsystems – atmosphere, biosphere, cryosphere, hydrosphere and lithosphere – each of which has distinct characteristic times, from days and weeks to centuries and millennia. Each subsystem, moreover, has its own internal variability, all other things being constant, over a fairly broad range of time scales. These ranges overlap between one subsystem and another. The interactions between the subsystems thus give rise to climate variability on all time scales.’

I have also shown a graph below from Kyle Swanson at realclimate showing warming resuming around 2020. The regimes are more like 20 to 40 years in the long proxy records – and the paper says it is an indeterminate period.

So the suggestion is that the current lack of surface warming – or even cooling – will persist for 20 to 40 years from 2002. The system will then abruptly shift to a new state involving a new trend in surface temperature and a change in the frequency and intensity of ENSO events in particular. These are abrupt changes from interactions of components – and not slow changes due to the evolution of forcing.

The specific decadal changes in ENSO can be eyeballed. Blue dominant to 1976 – red to 1998 and blue again since.

Something to think about is the millennial high point in El Nino frequency in the 20th century. More salt in the Law Dome ice core is La Nina.

Imprecise predictions seem a better bet than precise predictions that are utterly wrong.

Insufficient information.

I mean, such a prediction regarding SLR can be made simply by looking at the historical record, without appeal to “climate models”. In this case, the last time atmospheric CO2 was 400 ppm SLR was +65 feet more than now, so, one would estimate, after equilibration, that’s where they’ll end up now. If manifestations in climate are “chaotic”, SLR might be 65 feet or it might not be. There is no historical or archaeological record suggesting seas have been 65 feet higher, at least since the Iron Age, so a prediction of SLR +65 feet is truly extraordinary. So, I ask again, what does this “emerging” science of climate chaos say about this? In contrast, such a prediction *is* available from at least *some* climate models being maligned here.

“So one body made an ad hoc definition of climate as the 30-year average of weather.”

I can’t see the point you are making – who defined “climate” as a 30-year average of weather?

My understanding is the various meteorological bodies use 30 years as a convention for getting meaningful trends out of noisy data, i.e. bringing the signal-to-noise ratio up to acceptable levels.

No one is saying there is anything special about 30 years.

The WMO.

[emphasis added]

Phil Jones on the origins of the ’30 years is climate’ —

From: Phil Jones

To: “Parker, David (Met Office)” , Neil Plummer

Subject: RE: Fwd: Monthly CLIMATbulletins

Date: Thu Jan 6 08:54:58 2005

Cc: “Thomas C Peterson”

Neil,

Just to reiterate David’s points, I’m hoping that IPCC will stick with 1961-90. The issue of confusing users/media with new anomalies from a different base period is the key one in my mind. Arguments about the 1990s being better observed than the 1960s don’t hold too much water with me.

There is some discussion of going to 1981-2000 to help the modelling chapters. If we do this it will be a bit of a bodge as it will be hard to do things properly for the surface temp and precip as we’d lose loads of stations with long records that would then have incomplete normals. If we do we will likely achieve it by rezeroing series and maps in an ad hoc way.

There won’t be any move by IPCC to go for 1971-2000, as it won’t help with satellite series or the models. 1981-2000 helps with MSU series and the much better Reanalyses and also globally-complete SST.

20 years (1981-2000) isn’t 30 years, but the rationale for 30 years isn’t that compelling. The original argument was for 35 years around 1900 because Bruckner found 35 cycles in some west Russian lakes (hence periods like 1881-1915). This went to 30 as it easier to compute.

Personally I don’t want to change the base period till after I retire !

Cheers

Phil

Robert,

This is more about baselines for describing anomalies. You could set it to an arbitrary value, the value for one year, the average over 20 years, 50 years. Changing the baselines can create a lot of work. Different groups using different baselines creates lots of work in comparisons.

The “definition” of climate as the statistics of weather over 30 years is much older than this email.

“No one disagrees on this first point.”

I do, follow the links to a forecast for late 2015 to early 2017:

The article was talking about the prediction of weather using models. Not about predicting the weather using astrology.

The dynamics of the Earth system includes mechanisms that operate over a large range of time scales.

Purely atmospheric phenomena operate on the shortest time scales, up to weeks. Beyond that, variations in the state of the ocean start to dominate the expectation, and we do not really know the maximum period over which the oceans have memory that leads to significant unforced variability. Perhaps the multidecadal oscillations with a quasiperiod of around 60 years are significant, while nothing beyond that is important, but that’s only one possibility. Some think that unforced variability is weak even on this time scale, while others may propose that similar phenomena occur also over longer periods, asking whether the MWP and LIA were as strong as the higher estimates suggest and mostly unforced.

Looking at much longer periods we have the question of the nature of the glacial cycles of around 100,000 years. Is that mainly controlled by internal dynamics or external (Milankovitch) forcing? And what about the whole range of time scales between 100 y and 100,000 y?

The internal variability has both causal dependence on initial values and effectively chaotic components on all time scales. Something is predictable, in principle, on every time scale, while something else is not. As one example we have on the time scale of a few years some initial value dependence in ENSO, and through that in weather, while we cannot make forecasts that specify further the weather of a given day in 2015.

Stating values like 30 years for what’s climate makes sense only as a way of assuring that short term variability is averaged away. If we could observe an ensemble of parallel universes that have the same forcings, we could define climate even for a single day, but lacking that possibility our possibilities of observing climate are limited to longer periods. No single length of the period has any unique status. 30 years is just one possible choice with some advantages and some disadvantages.
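The parallel-universes idea can be tried on a toy system, where running many copies is cheap. The sketch below assumes the Lorenz 1963 model with its standard parameters as a stand-in (it says nothing about the real climate), starts 30 members from very slightly different states, and compares the spread of x across the ensemble at one single instant with the spread of x along one member over a long period. Member count, run length, and perturbation size are arbitrary illustrative choices.

```python
import statistics

def rhs(x, y, z, r=28.0, sigma=10.0, b=8.0 / 3.0):
    """Lorenz 1963 equations: returns (dx/dt, dy/dt, dz/dt)."""
    return sigma * (y - x), x * (r - z) - y, x * y - b * z

def rk4_step(x, y, z, dt):
    """One classical fourth-order Runge-Kutta step."""
    a1, b1, c1 = rhs(x, y, z)
    a2, b2, c2 = rhs(x + 0.5 * dt * a1, y + 0.5 * dt * b1, z + 0.5 * dt * c1)
    a3, b3, c3 = rhs(x + 0.5 * dt * a2, y + 0.5 * dt * b2, z + 0.5 * dt * c2)
    a4, b4, c4 = rhs(x + dt * a3, y + dt * b3, z + dt * c3)
    return (x + dt / 6.0 * (a1 + 2 * a2 + 2 * a3 + a4),
            y + dt / 6.0 * (b1 + 2 * b2 + 2 * b3 + b4),
            z + dt / 6.0 * (c1 + 2 * c2 + 2 * c3 + c4))

members, n_steps, spinup, dt = 30, 4000, 1000, 0.01
finals, one_run = [], []
for k in range(members):
    state = (1.0 + 1e-3 * k, 1.0, 1.0)   # same "forcing", tiny initial differences
    for step in range(n_steps):
        state = rk4_step(*state, dt)
        if k == 0 and step >= spinup:
            one_run.append(state[0])      # time series of a single member
    finals.append(state[0])               # every member at the same final instant

ensemble_std = statistics.pstdev(finals)
time_std = statistics.pstdev(one_run)
print("spread across the ensemble at one instant:", ensemble_std)
print("spread along one member over time:        ", time_std)
```

With only 30 members the ensemble figure is noisy, but it should land in the same neighbourhood as the time-based one, which is what makes the time average a usable substitute when only one realisation can be observed.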

‘”The ocean plays a crucial role in our climate system, especially when it comes to fluctuations over several years or decades,” explains Prof. Mojib Latif, co-author of the study. “The chances of correctly predicting such variations are much better than the weather for the next few weeks, because the climate is far less chaotic than the rapidly changing weather conditions,” said Latif. This is due to the slow changes in ocean currents which affect climate parameters such as air temperature and precipitation. “The fluctuations of the currents bring order to the weather chaos”. http://www.geomar.de/en/news/article/klimavorhersagen-ueber-mehrere-jahre-moeglich/

Latif is perhaps a little imprecise in his language. The abrupt ocean and atmospheric changes in 1976/77 and 1998/2002 that he is discussing are chaotic – merely on a longer scale.

Anastasios Tsonis, of the Atmospheric Sciences Group at University of Wisconsin, Milwaukee, and colleagues used a mathematical network approach to analyse abrupt climate change on decadal timescales. Ocean and atmospheric indices – in this case the El Niño Southern Oscillation, the Pacific Decadal Oscillation, the North Atlantic Oscillation and the North Pacific Oscillation – can be thought of as chaotic oscillators that capture the major modes of climate variability. Tsonis and colleagues calculated the ‘distance’ between the indices. It was found that they would synchronise at certain times and then shift into a new state.

It is no coincidence that shifts in ocean and atmospheric indices occur at the same time as changes in the trajectory of global surface temperature. Our ‘interest is to understand – first the natural variability of climate – and then take it from there. So we were very excited when we realized a lot of changes in the past century from warmer to cooler and then back to warmer were all natural,’ Tsonis said.

Four multi-decadal climate shifts were identified in the last century coinciding with changes in the surface temperature trajectory. Warming from 1909 to the mid 1940’s, cooling to the late 1970’s, warming to 1998 and – at the least – little warming since. There are practical implications for disentangling natural from anthropogenic change in recent climate: using 1944 to 1998 – for instance – to average climate over a full cool and warm regime, instead of an arbitrary starting point of 1950.

Sorry for you that the signals in Hays’ and Imbrie’s sediment cores, with periods of ~23, ~42 and ~100 k years matching precession, obliquity and eccentricity, do not support your theories on ‘climate surprises’. In contrast to SoD, you should not put the 1976 paper of Hays et al. on top of your theoretical papers. You should read it first.

Seeing as we are delving into scientific pre-history – perhaps you should try – http://web.vims.edu/sms/Courses/ms501_2000/Broecker1995.pdf

Milankovitch cycles set the conditions for persistence of ice and snow feedbacks – due to low NH summer insolation – that are initiated by changes in thermohaline circulation. The physical principles are feedbacks in a chaotic system.

In the words of Michael Ghil (2013) the ‘global climate system is composed of a number of subsystems – atmosphere, biosphere, cryosphere, hydrosphere and lithosphere – each of which has distinct characteristic times, from days and weeks to centuries and millennia. Each subsystem, moreover, has its own internal variability, all other things being constant, over a fairly broad range of time scales. These ranges overlap between one subsystem and another. The interactions between the subsystems thus give rise to climate variability on all time scales.’

The theory suggests that the system is pushed by greenhouse gas changes and warming – as well as solar intensity and Earth orbital eccentricities – past a threshold at which stage the components start to interact chaotically in multiple and changing negative and positive feedbacks – as tremendous energies cascade through powerful subsystems. Some of these changes have a regularity within broad limits and the planet responds with a broad regularity in changes of ice, cloud, Atlantic thermohaline circulation and ocean and atmospheric circulation.

The orbital eccentricities are not forcing as such but control variables in a chaotic system.

The paradigm emerges from observation of abrupt changes in the Earth system – such as is not explained by (relatively) smoothly evolving orbits.

‘Recent scientific evidence shows that major and widespread climate changes have occurred with startling speed. For example, roughly half the north Atlantic warming since the last ice age was achieved in only a decade, and it was accompanied by significant climatic changes across most of the globe. Similar events, including local warmings as large as 16°C, occurred repeatedly during the slide into and climb out of the last ice age. Human civilizations arose after those extreme, global ice-age climate jumps. Severe droughts and other regional climate events during the current warm period have shown similar tendencies of abrupt onset and great persistence, often with adverse effects on societies.’ Abrupt climate change: inevitable surprises – NAS 2002

As a reference to cite as proof, Tsonis and colleague Swanson are tricky, since they tend to redefine terms like “random variable” from that taught in basic probability courses to, I would argue, less useful definitions in terms of computer programs. See http://onlinelibrary.wiley.com/doi/10.1029/2004EO380002/pdf.

Also, I would argue, a purely classical approach to climate series — or any series, for that matter — devoid of underlying physics, is limited by the accuracies of the represented series. In particular, I note, in little of the work reported, are uncertainties of individual by-year points included to weight the results. Surely, these *are* available.

Don’t forget Sergey Kravtsov. The great breakthrough of Tsonis and colleagues was the use of real-world data in a network model to demonstrate synchronous chaos in the Earth system. These indices were considered as chaotic oscillating nodes on a network and the resultant timing of synchronisation identified. That it is also the inflection points of global surface temperature – and of global hydrology – says that real connections were identified.

It is very much a systems approach that stimulated the development of the stadium wave idea of Marcia Wyatt and – latterly – Judith Curry. It is very much about the evolution of the Earth system as a whole.


I remembered this one – talking about the underlying physics Tsonis is aiming at.

(Link: PhysicaD.pdf)

In “Emergence of synchronization in complex networks of interacting dynamical systems”, theory is fine, and networks of coupled oscillators are interesting and fine, but apart from fitting these to a few series, what do they have to do with climate science? Moreover, since the approach is frequentist, where in this work are the corrections for overfitting?

Anastasios Tsonis, of the Atmospheric Sciences Group at University of Wisconsin, Milwaukee, and colleagues used a mathematical network approach to analyse abrupt climate change on decadal timescales. Ocean and atmospheric indices – in this case the El Niño Southern Oscillation, the Pacific Decadal Oscillation, the North Atlantic Oscillation and the North Pacific Oscillation – can be thought of as chaotic oscillators that capture the major modes of NH climate variability. Tsonis and colleagues calculated the ‘distance’ between the indices. It was found that they would synchronise at certain times and then shift into a new state.

‘The distance can be thought as the average correlation between all possible pairs of nodes and is interpreted as a measure of the synchronization of the network’s components. Synchronization between nonlinear (chaotic) oscillators occurs when their corresponding signals converge to a common, albeit irregular, signal. In this case, the signals are identical and their cross-correlation is maximized. Thus, a distance of zero corresponds to a complete synchronization and a distance of the square root of 2 signifies a set of uncorrelated nodes.’ http://onlinelibrary.wiley.com/doi/10.1029/2007GL030288/full
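For anyone who wants to see what such a distance looks like in practice, here is a minimal sketch. It assumes the distance for each pair of indices is sqrt(2(1 - |rho|)), with rho the Pearson correlation, averaged over all pairs. That form is my assumption, chosen because it reproduces the two endpoints in the quote (zero for complete synchronization, the square root of 2 for uncorrelated nodes); the paper should be consulted for the exact definition and for the sliding windows over which the correlations are computed. The series below are made-up numbers, not climate indices.

```python
import math
from itertools import combinations

def pearson(u, v):
    """Pearson correlation coefficient of two equal-length series."""
    n = len(u)
    mean_u, mean_v = sum(u) / n, sum(v) / n
    cov = sum((a - mean_u) * (b - mean_v) for a, b in zip(u, v))
    var_u = sum((a - mean_u) ** 2 for a in u)
    var_v = sum((b - mean_v) ** 2 for b in v)
    return cov / math.sqrt(var_u * var_v)

def network_distance(series):
    """Mean over all pairs of sqrt(2 * (1 - |rho|)) (assumed form, see above)."""
    pairs = list(combinations(series, 2))
    total = 0.0
    for u, v in pairs:
        # max() guards against tiny negative values caused by rounding
        total += math.sqrt(max(0.0, 2.0 * (1.0 - abs(pearson(u, v)))))
    return total / len(pairs)

# Three identical "indices": fully synchronized network.
synced = network_distance([[1.0, 3.0, 2.0, 5.0]] * 3)

# Three mutually uncorrelated "indices".
unrelated = network_distance([[1.0, -1.0, 1.0, -1.0],
                              [1.0, 1.0, -1.0, -1.0],
                              [1.0, -1.0, -1.0, 1.0]])

print("synchronized network distance:", synced)
print("uncorrelated network distance:", unrelated, "vs sqrt(2) =", math.sqrt(2))
```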

These indices are physical realities with major effects on global hydrology. – e.g.

That behave very much like chaotic oscillators at various scales. The method attempts to see how these are linked in a global networked system. A whole new approach – a new way of looking at these things – hence the title of the paper.

https://hypergeometric.wordpress.com/2014/12/01/tsonis-swanson-chaos-and-s__t-happens/

‘First, there is no instance where their series based explanation makes a prediction that is falsifiable. I challenge them to make one.’

‘Second, while they offer a statistical explanation of series, they have not advanced a physical mechanism for its realization, something essential for both taking it seriously as a hypothesis and for supporting additional scientific work based upon it. They can’t say what additional measurements are to be taken or where. Indeed, from their perspective, doing additional measurements is somewhat pointless. due to the “chaotic” nature of outcomes.’

Three great physical theories emerged in the 20th century – relativity, quantum mechanics and chaos. The latter is the theory of complex and dynamic systems. The expected behaviour of these systems includes an increase in autocorrelation (e.g. http://www.pnas.org/content/105/38/14308.full) and noisy bifurcation (e.g. http://arxiv.org/abs/0907.4290). It is all completely deterministic – but ultimately too complex to be determined completely as yet.

‘Third, they embrace the popular understanding of “chaos” from the Lorenz setting rather than a technical one, so the scientific reader really doesn’t know from paragraph to paragraph what exactly they are talking about.’

I certainly don’t agree. Perhaps some related reading first (on non-linearities in climate) might help.

e.g. http://www.fraw.org.uk/files/climate/rial_2004.pdf

There is first of all the fact of abrupt change – at decadal scales even – in the climate system. Then there is a paradigm that explains the observations. The US National Academy of Sciences (NAS) defined abrupt climate change as a new climate paradigm as long ago as 2002. A paradigm in the scientific sense is a theory that explains observations. A new science paradigm is one that better explains data – in this case climate data – than the old theory. The new theory says that climate change occurs as discrete jumps in the system. Climate is more like a kaleidoscope – shake it up and a new pattern emerges – than a control knob with a linear gain.

Regarding definitions of “chaos”, I was simply going back to dynamical systems work which originated the concept. It doesn’t matter whether or not NAS used the term, or some peer-reviewed publication used the term, any more than it matters that poor uses of t-tests appear in the climate, meteorological, or physical literature. Poor use of t-tests is done in medical literature all the time. There is no definition of “chaos”. There are only grades. The chaotic pendulum, where the predicted response is the number of times the pendulum rotates about the mount before settling back in the well, has a spectrum of behaviors, depending upon the impulse used to launch it. Where *exactly* is the chaotic boundary? There isn’t one! “Chaos” refers to the phenomenon that in some region of impulse, predictions of the number of rotations before settling back into the energy well are less and less certain. But they aren’t *inherently* uncertain! Start modeling the frictive forces, per tribology, and predictions become sharper.

Regarding ” A new science paradigm is one that better explains data – in this case climate data – than the old theory”, I disagree. “Better explains” is more than being logically consistent. “Better explains” means *predicting* with smaller error bars. In this case, a “better hypothesis” would need to hindcast and forecast with smaller error bars. The “chaotic theory” gives up on being able to do that. That’s one reason why, in another place, I say it’s “not even wrong”.

‘AOS models are members of the broader class of deterministic chaotic dynamical systems, which provides several expectations about their properties (Fig. 1). In the context of weather prediction, the generic property of sensitive dependence is well understood (4, 5). For a particular model, small differences in initial state (indistinguishable within the sampling uncertainty for atmospheric measurements) amplify with time at an exponential rate until saturating at a magnitude comparable to the range of intrinsic variability. Model differences are another source of sensitive dependence. Thus, a deterministic weather forecast cannot be accurate after a period of a few weeks, and the time interval for skillful modern forecasts is only somewhat shorter than the estimate for this theoretical limit. In the context of equilibrium climate dynamics, there is another generic property that is also relevant for AOS, namely structural instability (6). Small changes in model formulation, either its equation set or parameter values, induce significant differences in the long-time distribution functions for the dependent variables (i.e., the phase-space attractor). The character of the changes can be either metrical (e.g., different means or variances) or topological (different attractor shapes). Structural instability is the norm for broad classes of chaotic dynamical systems that can be so assessed (e.g., see ref. 7).’ http://www.pnas.org/content/104/21/8709.full
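The "amplify with time at an exponential rate" part of this quote can be put into numbers for a toy system. The sketch below estimates the largest Lyapunov exponent of the Lorenz 1963 model by following two nearby trajectories and repeatedly rescaling their separation (the two-trajectory renormalisation approach often attributed to Benettin and co-workers). Parameter values, step size, and run length are assumptions for illustration, and the sketch covers only sensitive dependence, not the structural instability the quote goes on to describe.

```python
import math

def rhs(x, y, z, r=28.0, sigma=10.0, b=8.0 / 3.0):
    """Lorenz 1963 equations: returns (dx/dt, dy/dt, dz/dt)."""
    return sigma * (y - x), x * (r - z) - y, x * y - b * z

def rk4_step(x, y, z, dt):
    """One classical fourth-order Runge-Kutta step."""
    a1, b1, c1 = rhs(x, y, z)
    a2, b2, c2 = rhs(x + 0.5 * dt * a1, y + 0.5 * dt * b1, z + 0.5 * dt * c1)
    a3, b3, c3 = rhs(x + 0.5 * dt * a2, y + 0.5 * dt * b2, z + 0.5 * dt * c2)
    a4, b4, c4 = rhs(x + dt * a3, y + dt * b3, z + dt * c3)
    return (x + dt / 6.0 * (a1 + 2 * a2 + 2 * a3 + a4),
            y + dt / 6.0 * (b1 + 2 * b2 + 2 * b3 + b4),
            z + dt / 6.0 * (c1 + 2 * c2 + 2 * c3 + c4))

dt, d0 = 0.01, 1e-8
base = (1.0, 1.0, 1.0)
for _ in range(1000):                 # let the reference run reach the attractor
    base = rk4_step(*base, dt)
pert = (base[0] + d0, base[1], base[2])

n_renorm, steps_between = 5000, 10
log_growth = 0.0
for _ in range(n_renorm):
    for _ in range(steps_between):
        base = rk4_step(*base, dt)
        pert = rk4_step(*pert, dt)
    diff = [p - q for p, q in zip(pert, base)]
    dist = math.sqrt(sum(c * c for c in diff))
    log_growth += math.log(dist / d0)
    # pull the perturbed run back to distance d0 along the same direction
    pert = tuple(q + c * d0 / dist for q, c in zip(base, diff))

lyapunov = log_growth / (n_renorm * steps_between * dt)
print("estimated largest Lyapunov exponent:", lyapunov)
print("implied error-doubling time:        ", math.log(2) / lyapunov)
```

Published estimates for these parameter values are around 0.9 per model time unit; a finite run like this one should land near that but not exactly on it.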

The original dynamic analysis was Poincaré’s 3 body problem.

http://www.upscale.utoronto.ca/GeneralInterest/Harrison/Flash/Chaos/ThreeBody/ThreeBody.html

Chaos is in the sudden shifts in trajectory between the attractor basins that comprise the solution space for the equations.

The idea lay dormant for 60 years until it was rediscovered in Lorenz’s set of non-linear equations. It has since been extended to a ‘broader class’ of dynamical systems in applications ranging from ecology to economics. As James McWilliams says in the quote – there are expectations about their behaviour.

SoD,

whenever I have to think about chaos theory, the first thing I always do is to pick up a copy of the old paper from Hays, Imbrie and Shackleton (1976) on obliquity and precession and to put it on top of the stack of papers on chaos theory. It’s the perfect antidote against losing ground amongst strange attractors (which, I fear, is happening to you right now). Forced, predictable responses are not something you get only in the output of climate models. They are real. They are imprinted in almost any climate archive. Don’t forget them.

The orbital parameters set conditions for ice and snow feedbacks. Think abrupt change rather than chaos.

verbascose,

I suggest you read the series here Ghosts of Climates Past (part 1 here). I don’t think you will look at Hays, Imbrie and Shackleton (1976) (see part 3) quite the same afterwards.

Nope, doesn’t change anything. My point here is solely empirical: there are these frequencies in the sediment cores (and in many other climate archives as well). If chaotic processes dominated and were able to override forced responses with ease, one would not find regular patterns in cores.

verbascose,

If the chaotic behavior of the climate system were not able to override forced responses, the period of glacial/interglacial transitions would still be ~40ky. The signal from eccentricity at 100ky is simply not strong enough to explain this change in period. Not to mention that the most recent transitions do not, in fact, correlate with eccentricity. Then there is also the circularity of the dating of ocean cores, and hence glacial/interglacial transitions, by using Milankovitch cycles. In logic, that’s called begging the question.

As SoD points out, you need more than a hypothetical mechanism. You have to show that the mechanism works. That hasn’t been accomplished so far.

verbascose,

I’m not claiming that they “override forced responses with ease”, or even that they “override forced responses”.

What is the time period between the last 4 ice age terminations? It’s not 100kyrs.

I did a calculation based on one set of dates:

I-II = 124 kyrs

II-III = 111

III-IV = 86

IV-V = 79

V-VI = 102

Peter Huybers & Carl Wunsch came up with probably one of the best theories, which is that ice age terminations are paced by obliquity, i.e. they occur in multiples of 40kyrs. That’s how strong the 100kyr theory is!
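As a quick check on the numbers above, here is a minimal Python sketch that summarizes the five intervals from the list. The 40 kyr figure is the obliquity period as quoted in this comment, the function and variable names are purely illustrative, and the dates are only the one set used above.

```python
from statistics import mean, stdev

# Intervals between successive terminations (kyr), copied from the list above.
intervals_kyr = {"I-II": 124, "II-III": 111, "III-IV": 86, "IV-V": 79, "V-VI": 102}

# Obliquity period as quoted in the comment (an approximation, not a fitted value).
OBLIQUITY_KYR = 40


def summarize(intervals):
    """Return basic statistics for a mapping of interval name -> length in kyr."""
    values = list(intervals.values())
    return {
        "mean": mean(values),
        "stdev": stdev(values),  # sample standard deviation
        "min": min(values),
        "max": max(values),
    }


summary = summarize(intervals_kyr)
print(summary)

# Express each interval as a number of obliquity periods.
for name, length in intervals_kyr.items():
    print(f"{name}: {length} kyr = {length / OBLIQUITY_KYR:.2f} obliquity periods")
```

The mean of these five values is 100.4 kyr, but the individual intervals range from 79 to 124 kyr.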

Obliquity pacing of the late Pleistocene glacial terminations, Huybers & Wunsch, Nature (2005):

Of course, there are other models. A recent one, also published in Nature, by Abe-Ouchi uses an ice sheet model, with the hysteresis of the isostatic rebound being a big part of the terminations.

Insolation-driven 100,000-year glacial cycles and hysteresis of ice-sheet volume, Ayako Abe-Ouchi et al, Nature (2013)

Verbascose: Are you an emerging prebiotic?

http://pubchem.ncbi.nlm.nih.gov/compound/Verbascose#section=Top

Click to access Casey-Johnson-Lentils-A-Prebiotic-Rich-Whole-Food-for-Reducing-Obesity-and-NCDs.pdf

If so, cool. Thanks for kicking up dust and creating interesting reading.

WRT the main topic:

Looking at the 5.5MY temperature record

http://en.wikipedia.org/wiki/Geologic_temperature_record

It appears that since the Pliocene closure of Panama (and the Atlantic becoming a type of Chua’s circuit) the ~100K cycles represent the onset of dynamic equilibrium. Therefore, an equatorial oceanic block is a *forcing* that shifts the climate to a new plateau in ~2MY. The current tectonic climate configuration appears to be ~100K +/- 33%. The 40K cycle is damped by the land ice-sea ice-ocean system.

Blame the Great Lakes

As the temperature continued to drop from 3 to 1-MY, the scoured out Ice Sheet along the Great Lakes basin made roots that allowed for greater ice thickness, massive buildup and reduced linear flow to calving.

A Land-Ice Bubble.

The Ice Sheets became *Too Big To Fail* surviving multiple DO events pushing past the previous 41K limits, then crashed from their immense mass coated with teratons of dust from an increasingly dry and exposed crust.

Is that Chaos?

verbascose,

I reviewed the opinions of climate scientists on ice age terminations in Ghosts of Climates Past – Eighteen – “Probably Nonlinearity” of Unknown Origin.

Many climate scientists believe that ice age terminations are related in some way to solar insolation changes via either eccentricity or precession or obliquity but none of them can come up with a theory. Or at least just about all of the ones I reviewed did come up with a theory but not a theory repeated by anyone else.

And other climate scientists don’t agree with this or just state “it is widely believed”.

I’m not much for myths. If no one can agree on a mechanism then it’s not a theory that relates to “forcing” or even “physics”.

On the other hand the waxing and waning of the ice sheets over 20 kyrs and 40 kyrs is clearly explained by solar insolation changes and just about everyone agrees on that.

This article is aimed at just one single myth.

If climate science didn’t believe that climate was chaotic this article would be different. But most appear to believe it and many papers that the IPCC referenced for chapter 11 have chaotic climate as their working assumption.

That said, there certainly is a consensus belief (in the papers I reviewed) that the forcing of GHGs will move (and has already moved) the climate into a region that is different from its recent state.

I’m not arguing against the idea that changes in the distribution of solar radiation can affect climate, or that changes in GHGs can affect climate.

I’m just pointing out the obvious impact of chaos theory on climate that I can’t find discussed anywhere in the various chapters of AR5.

SoD,

keep things simple. I am not talking about opinions on the mechanism of ice age terminations (they vary). I am only pointing to the empirical facts: that there are regular patterns in the ice and sediment cores. Chaotic processes do not generate regular patterns.

There is also a very simple explanation for the 30 years of the WMO: just plot the standard deviation of the first (or last) n years of some temperature data set against n. You will notice that the standard deviation is very large at small n, but settles at a more or less stable value when you reach a certain n. That’s all.
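The procedure described here can be sketched in a few lines of Python. This is only an illustration: the function name `std_of_first_n` is made up, and the series is synthetic uncorrelated noise with no trend (an assumption), standing in for whatever temperature data set you would actually use.

```python
import numpy as np


def std_of_first_n(series, min_n=2):
    """Sample standard deviation of the first n values, for n = min_n..len(series)."""
    series = np.asarray(series, dtype=float)
    ns = np.arange(min_n, len(series) + 1)
    stds = np.array([np.std(series[:n], ddof=1) for n in ns])
    return ns, stds


# Synthetic stand-in for an annual temperature anomaly series.
# Assumption: uncorrelated noise, no trend. Replace with a real data set.
rng = np.random.default_rng(0)
anomalies = rng.normal(loc=0.0, scale=0.2, size=150)

ns, stds = std_of_first_n(anomalies)

# Print the running standard deviation at a few record lengths.
for n in (5, 10, 30, 60, 150):
    print(n, round(float(stds[n - 2]), 3))
```

With uncorrelated noise the curve flattens out by construction. Whether it does so for a real record, which may contain a trend or long-lived variability, is the question being argued in this thread, so the synthetic result should not be read as evidence either way.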

verbascose,

Sure they do. They’re called quasi-periodic oscillations, see, for example, ENSO. And they can be quite regular for an unpredictable length of time. The fact that you can find evidence of Milankovitch cycles in, for example, ocean bed cores is not proof that the climate system isn’t chaotic.

verbascose,

Also, there are many strange events in the proxy record that we have. Of course, we lack the detailed data that we have on today’s climate but many events are “unexplained”.

You don’t need an external forcing to create significant change in a complex non-linear system. The different components interacting will do this by themselves. Each, in the end, has a physical basis, but climate shifts without “external” influences appear to be the norm.

I expect we will get lots of opportunity to discuss this topic in this series.

‘What defines a climate change as abrupt? Technically, an abrupt climate change occurs when the climate system is forced to cross some threshold, triggering a transition to a new state at a rate determined by the climate system itself and faster than the cause. Chaotic processes in the climate system may allow the cause of such an abrupt climate change to be undetectably small.’ http://www.nap.edu/openbook.php?record_id=10136&page=14

It is perhaps not strictly true that emergent climate states occur without external influence. It may be better to consider these as control variables than forcing in the usual sense.

There is a gradation between the climate variability we can attribute to chaotic (internal) behavior and that we can attribute to external forcings. None of us would seriously argue that we cannot closely predict the effect of cutting insolation by 50%. I also doubt that many here would deny that the ~0.25C drop in GAT in 1992-93 was due largely to the mid-1991 Pinatubo eruption, which probably cut insolation by ~2.5 W/m^2 (~0.7%) for ~2 y.

The issue, then, is characterizing the region where there are both plausible internal causes and plausible forced causes of some observed behavior and, if possible, attributing the appropriate share to each.

‘It is hypothesized that persistent and consistent trends among several climate modes act to ‘kick’ the climate state, altering the pattern and magnitude of air-sea interaction between the atmosphere and the underlying ocean. Figure 1 (middle) shows that these climate mode trend phases indeed behaved anomalously three times during the 20th century, immediately following the synchronization events of the 1910s, 1940s, and 1970s. This combination of the synchronization of these dynamical modes in the climate, followed immediately afterward by significant increase in the fraction of strong trends (coupling) without exception marked shifts in the 20th century climate state. These shifts were accompanied by breaks in the global mean temperature trend with respect to time, presumably associated with either discontinuities in the global radiative budget due to the global reorganization of clouds and water vapor or dramatic changes in the uptake of heat by the deep ocean.’ http://onlinelibrary.wiley.com/doi/10.1029/2008GL037022/full

The unpredictability of these shifts, both in size and sign, presents insurmountable difficulties for attribution and prediction at present. But it does have implications for the rate of ‘greenhouse gas warming’ during the 20th century – and for whether the rate is likely to continue into the 21st century.

It suggests as well that we should be looking at cloud cover changes associated with ocean and atmospheric circulation changes.

SOD: I’m not sure I understand the practical implications of your statement that “Climate is a Boundary Value Problem”. Is the following interpretation correct?

Climate is sometimes defined as the average and standard deviation of at least 30 years of weather (after accounting for seasonal change). If the proverbial butterfly of chaos had flapped its wings differently, our climate could have been “significantly” different. In other words, a butterfly could cause “climate change”! Defining climate as a centennial or millennial average won’t necessarily fix the problem.

(I don’t know how to define “significantly different” climate. Normally I’d ask if the 95% CI for the difference in the means of an observable in two possible climates includes zero. Given enough observables, however, such differences can be found by chance.)

I think your interpretation is correct.

If climate is defined as the 30 year statistics of weather then climate will depend on the current state.

If climate is defined as the “long term statistics” of weather then climate will not depend on the existing state.

That is, we can’t go applying what we know about the predictability of simple chaotic systems (predictability of their long term statistics) by writing an arbitrary definition of “long term”.
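To make the distinction concrete, here is a rough Python sketch using the Lorenz 1963 system as a toy. It runs two trajectories whose starting points differ by 1e-8 and compares averages over a short window with averages over the whole run. The standard parameter values (sigma = 10, rho = 28, beta = 8/3) are the usual ones for this system; the RK4 integrator, step size, run length and window choices are my own assumptions, and nothing here says how long "long enough" is for the real climate.

```python
import numpy as np


def lorenz_rhs(state, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Right-hand side of the Lorenz 1963 equations."""
    x, y, z = state
    return np.array([sigma * (y - x), x * (rho - z) - y, x * y - beta * z])


def integrate(state0, n_steps, dt=0.01):
    """Fixed-step 4th-order Runge-Kutta integration; returns an (n_steps+1, 3) array."""
    traj = np.empty((n_steps + 1, 3))
    state = np.array(state0, dtype=float)
    traj[0] = state
    for i in range(n_steps):
        k1 = lorenz_rhs(state)
        k2 = lorenz_rhs(state + 0.5 * dt * k1)
        k3 = lorenz_rhs(state + 0.5 * dt * k2)
        k4 = lorenz_rhs(state + dt * k3)
        state = state + (dt / 6.0) * (k1 + 2 * k2 + 2 * k3 + k4)
        traj[i + 1] = state
    return traj


N_STEPS = 10_000  # 100 time units at dt = 0.01 (illustrative choice)
run_a = integrate([1.0, 1.0, 1.0], N_STEPS)
run_b = integrate([1.0 + 1e-8, 1.0, 1.0], N_STEPS)  # tiny change in initial state

short = slice(N_STEPS - 500, N_STEPS)  # last 5 time units
long_ = slice(1_000, N_STEPS)          # whole run after discarding spin-up

for label, window in (("short window", short), ("long window", long_)):
    mean_a = run_a[window].mean(axis=0)
    mean_b = run_b[window].mean(axis=0)
    print(label, "mean (x, y, z) run A:", np.round(mean_a, 2))
    print(label, "mean (x, y, z) run B:", np.round(mean_b, 2))

print("largest separation between runs:", np.abs(run_a - run_b).max())
```

Changing `N_STEPS` and the window lengths shows how the agreement between the two runs depends on the averaging period chosen, which is the point about an arbitrary definition of "long term".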

Reiterating stuff that is well known as if it were new (not you, but the entire shtick): http://www.realclimate.org/index.php/archives/2005/11/chaos-and-climate/

What makes it difficult to draw strong conclusions on the significance of chaos for climate is that the Earth system is extremely complex. It’s not controlled by a few exact equations like the Lorenz model. It’s not a closed system that’s controlled by even a large set of exact equations like typical GCMs. On the scale of details it’s actually stochastic, i.e., it’s disturbed all the time by random external events like variations in solar radiation and wind, cosmic rays, meteorites, etc. Even the internal mechanisms have a huge number of details that are effectively random, not derivable from exact equations.

My own thinking is that the stochastic input to the Earth system dynamics changes many arguments that are used in describing chaos. The butterfly flapping its wings is one of the innumerable stochastic inputs. The totality of small stochastic inputs also leads to dissipation. The other butterflies and other stochastic inputs remove the influence of the single event. The effect does not grow according to the exact equations in the spirit of chaos, but the equations of stochastic dissipation are likely to show that the effect dies out.

In this spirit, what we see in weather is amplified stochastic behavior rather than deterministic chaos.

Moving to climate, the relevant questions concern the nature of persistent modes.

– Does the Earth system have modes that have little dissipation and persist therefore for long (decades, perhaps centuries)?

– If there are such modes, is their nature more like quasiregular oscillations, or “chaotic” phase shifts from one attractor to another?

More similar questions could be posed, but I stop here.

Thank you for citing that Realclimate article. In a comment by R. Pierrehumbert (well worth reading), editor Stefan said:

SoD, what do you think of this point?

Response?

Meow,

It’s an interesting article with interesting comments. I think I read it quite a while ago and to some extent it confused me about how climate science generally thinks about the subject of chaos.

I’d like to come back to those questions in subsequent articles.

This article was around one specific point, that I hope is clear. Chaos theory of simple systems says that the statistics of a chaotic system can be reliably known, over a long enough time period.